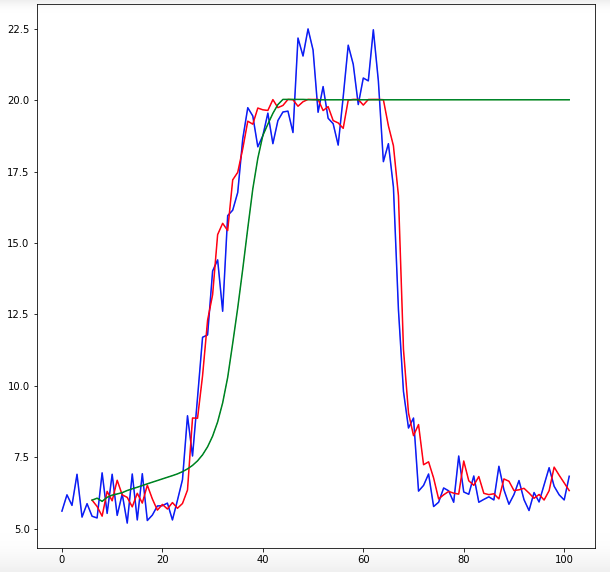

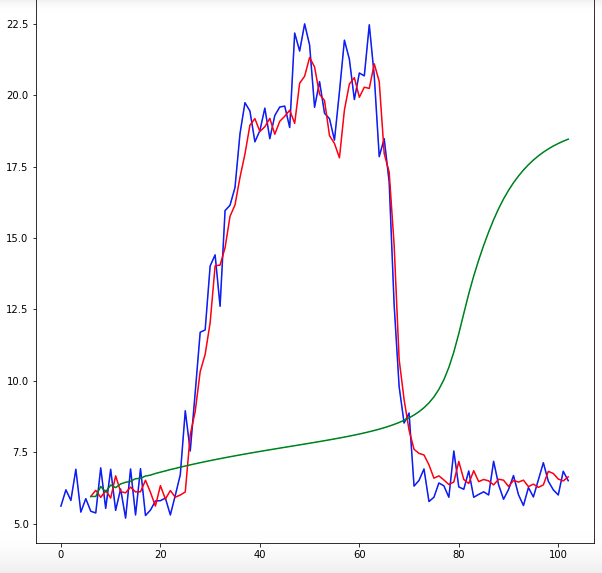

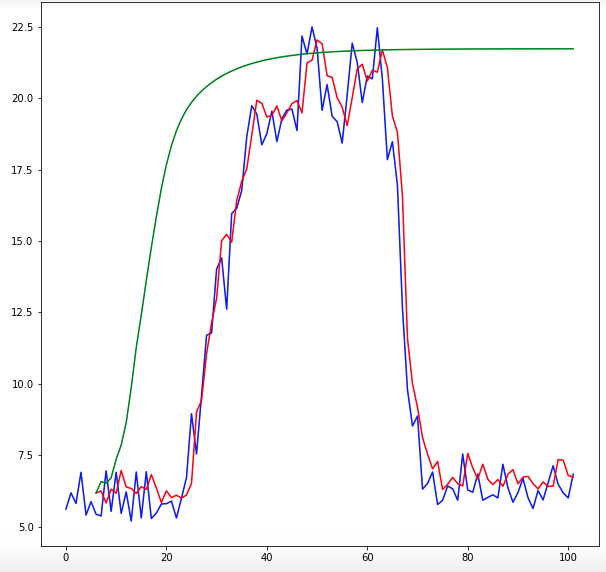

I am using the Sequential model from Keras with Dense layers. I wrote a function that calculates predictions recursively (each prediction is fed back in as an input for the next one), but the predictions are way off. What is the best activation function to use for my data? Currently I am using the hard_sigmoid function. The output values range from 5 to 25. Each input sample consists of 6 values (shape (6, 1)), and the output is a single value. When I plot the predictions, they never decrease. Thank you for the help!
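For reference, here is a minimal NumPy sketch of hard_sigmoid. It assumes the classic Keras 2.x definition `clip(0.2 * x + 0.5, 0, 1)`; other Keras versions may use a slightly different slope. The function is flat outside [-2.5, 2.5], so a unit whose weighted input lands there outputs exactly 0 or 1 and passes no gradient back. A weighted sum of inputs on the 5 to 25 scale of this data can easily fall outside that interval.

```python
import numpy as np

def hard_sigmoid(x):
    # Classic Keras 2.x hard_sigmoid (assumed definition):
    # 0 for x <= -2.5, 1 for x >= 2.5, linear 0.2 * x + 0.5 in between
    return np.clip(0.2 * np.asarray(x, dtype=float) + 0.5, 0.0, 1.0)

# Inputs spanning the linear region and the flat (saturated) regions
print(hard_sigmoid([-5.0, -2.5, 0.0, 1.0, 2.5, 5.0]))

# Values on the scale of the post's data all saturate at 1.0
print(hard_sigmoid(np.array([5.0, 10.0, 25.0])))
```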

```python
from keras.models import Sequential
from keras.layers import Dense

# create and fit Multilayer Perceptron model
# (look_back, trainX and trainY are defined earlier; look_back = 6 here)
model = Sequential()
model.add(Dense(20, input_dim=look_back, activation='hard_sigmoid'))
model.add(Dense(16, activation='hard_sigmoid'))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
model.fit(trainX, trainY, epochs=200, batch_size=2, verbose=0)
```
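To see why bounded hidden activations matter, here is a NumPy sketch of the forward pass the three Dense layers compute for one input window. The random weights and the input window are made up (stand-ins for the trained model and real data), and hard_sigmoid again assumes the classic Keras 2.x definition. Every value in the last hidden layer lies in [0, 1], so the linear output layer can only reach values within a range set by its own weights, and saturated units make different inputs produce the same output.

```python
import numpy as np

def hard_sigmoid(x):
    # Classic Keras 2.x hard_sigmoid (assumed): clip(0.2 * x + 0.5, 0, 1)
    return np.clip(0.2 * x + 0.5, 0.0, 1.0)

rng = np.random.default_rng(0)
look_back = 6  # window length: 6 inputs -> 1 output

# Hypothetical random weights standing in for the trained ones.
# A Dense layer computes activation(x @ W + b).
W1, b1 = rng.normal(size=(look_back, 20)), np.zeros(20)
W2, b2 = rng.normal(size=(20, 16)), np.zeros(16)
W3, b3 = rng.normal(size=(16, 1)), np.zeros(1)

x = np.array([[5.0, 8.0, 12.0, 15.0, 20.0, 25.0]])  # one made-up input window
h1 = hard_sigmoid(x @ W1 + b1)   # shape (1, 20), values in [0, 1]
h2 = hard_sigmoid(h1 @ W2 + b2)  # shape (1, 16), values in [0, 1]
y = h2 @ W3 + b3                 # linear output layer, shape (1, 1)

# Because h2 is confined to [0, 1], |y| can never exceed this bound
bound = np.abs(W3).sum() + np.abs(b3).sum()
print(y, bound)
```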

```python
import numpy

# function to predict using predicted values
# origAndPredictions has 6 columns; each row is one input window.
# Row i is the window built at step i - 1 only if origAndPredictions
# starts with a single row (the last known window).
numOfPredictions = 96
predictions = numpy.empty((0, 1))  # assumed to start empty; one row per step

for i in range(numOfPredictions):
    # input window: row i as a (1, 6) array
    temp = origAndPredictions[i:i + 1, 0:6]
    temp1 = model.predict(temp)  # shape (1, 1)
    predictions = numpy.append(predictions, temp1, axis=0)
    # next window: drop the oldest value and append the new prediction
    temp2 = numpy.append(origAndPredictions[i, 1:6], temp1[0, 0]).reshape(1, 6)
    origAndPredictions = numpy.vstack((origAndPredictions, temp2))
```
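The loop above is iterated one-step (recursive) forecasting. Here is a stripped-down sketch of the same idea that does not need Keras. `recursive_forecast` and `toy_predict` are hypothetical names, and `predict_fn` stands in for `model.predict`: it takes a (1, look_back) array and returns a (1, 1) array.

```python
import numpy as np

def recursive_forecast(last_window, predict_fn, steps):
    """Iterated one-step forecasting: each prediction is fed back as an input.

    last_window: the most recent look_back observed values.
    predict_fn:  stand-in for model.predict, (1, look_back) -> (1, 1).
    """
    window = np.asarray(last_window, dtype=float).copy()
    predictions = np.empty(steps)
    for step in range(steps):
        y = predict_fn(window.reshape(1, -1))[0, 0]
        predictions[step] = y
        # Slide the window: drop the oldest value, append the new prediction
        window = np.append(window[1:], y)
    return predictions

def toy_predict(x):
    # Hypothetical toy model: next value = last value + 1
    return np.array([[x[0, -1] + 1.0]])

print(recursive_forecast([1, 2, 3, 4, 5, 6], toy_predict, 3))  # [7. 8. 9.]
```

Because each output becomes the newest input, any systematic bias in the model (such as the toy model always predicting a bit higher than the last value) compounds over the 96 steps. That is one way a recursive forecast can drift in a single direction.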

Update: I used this code to implement the swish activation:

```python
from keras.backend import sigmoid
from keras.layers import Activation
from keras.utils.generic_utils import get_custom_objects

def swish(x, beta=1):
    # Swish activation: x * sigmoid(beta * x)
    return x * sigmoid(beta * x)

# Register the function under the name 'swish' so it can be referenced
# by name, e.g. Dense(20, activation='swish')
get_custom_objects().update({'swish': Activation(swish)})

model.add(Activation(swish, name='swish1'))
```
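Swish is x · sigmoid(βx). Unlike hard_sigmoid it is not bounded above, so a hidden unit's output can keep growing with its input instead of being capped at 1. A small NumPy sketch (no Keras needed) for inspecting its values, using the same formula and default `beta` as the Keras-backend version above:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def swish(x, beta=1.0):
    # Swish: x * sigmoid(beta * x)
    x = np.asarray(x, dtype=float)
    return x * sigmoid(beta * x)

# Negative inputs give a small negative dip; large positive inputs pass
# through almost unchanged (no saturation at 1 as with hard_sigmoid)
xs = np.array([-5.0, -1.0, 0.0, 1.0, 5.0, 25.0])
print(swish(xs))
```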

Update: I am now using this code:

```python
from keras import backend as K

def swish1(x):
    # Swish with beta = 1: x * sigmoid(x)
    return K.sigmoid(x) * x

def swish2(x):
    # same as swish1
    return K.sigmoid(x) * x
```

Thanks for all the help!!