When I try to work through the OpenGL wiki and the tutorials on www.learnopengl.com, I never end up with an intuitive understanding of how the whole concept works. Can someone explain in a more abstract way how it works? What are the vertex shader and the fragment shader, and what do we use them for?

The OpenGL wiki gives a good definition:

A Shader is a user-defined program designed to run on some stage of a graphics processor.

History lesson

In the past, graphics cards were non-programmable pieces of silicon which performed a set of fixed algorithms:

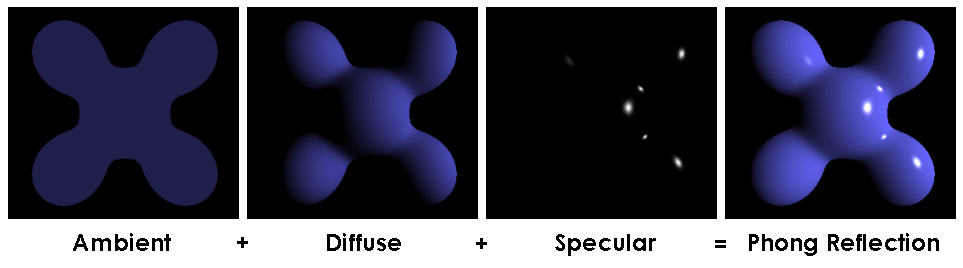

- inputs: 3D coordinates of triangles, their colors, light sources

- output: a 2D image

all using a single fixed parameterized algorithm, typically similar to the Phong reflection model. Image from Wiki:

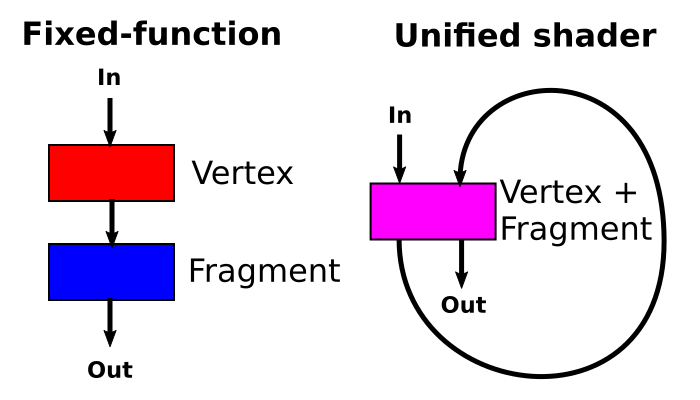

Such architectures were known as "fixed function pipeline", as they could only implement a single algorithm.

But that was too restrictive for programmers who wanted to create many different complex visual effects.

So as semiconductor manufacturing technology advanced, and GPU designers were able to cram more transistors per square millimeter, vendors started allowing some parts of the rendering pipeline to be programmed in programming languages like the C-like GLSL.

Those languages are then compiled to semi-undocumented instruction sets that run on small "CPUs" built into those newer GPUs.

In the beginning, those shader languages were not even Turing complete!

The term General Purpose GPU (GPGPU) refers to this increased programmability of modern GPUs, and new languages were created to be more adapted to it than OpenGL, notably OpenCL and CUDA. See this answer for a brief discussion of which kind of algorithm lends itself better to GPU rather than CPU computing: What do the terms "CPU bound" and "I/O bound" mean?

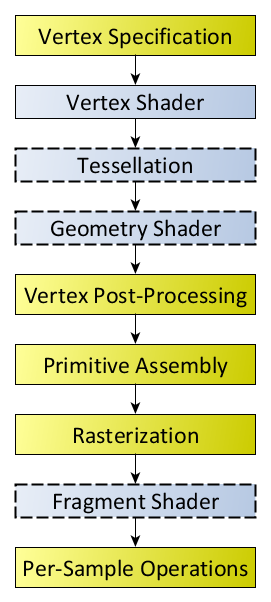

Overview of the modern shader pipeline

In the OpenGL 4 model, only the blue stages of the following diagram are programmable:

Shaders take the input from the previous pipeline stage (e.g. vertex positions, colors, and rasterized pixels) and customize the output to the next stage.

The two most important ones are:

vertex shader:

- input: position of points in 3D space

- output: 2D projection of the points (using 4D matrix multiplication)

This related example shows more clearly what a projection is: How to use glOrtho() in OpenGL?
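As a rough CPU-side illustration of what "2D projection using 4D matrix multiplication" means, the sketch below multiplies a point by a 4x4 matrix and then divides by w, which is the step the GPU performs after the vertex shader has run. The names `Vec4`, `Mat4`, `mat4_mul_vec4`, `perspective_divide` and `toy_perspective` are made up for this example and are not OpenGL APIs. The matrix is stored row-major here, and the toy matrix assumes a camera looking down the negative z axis.

```c
/* Hypothetical helper types for this sketch; not part of OpenGL. */
typedef struct { float x, y, z, w; } Vec4;
typedef struct { float m[4][4]; } Mat4; /* row-major: m[row][col] */

/* What a vertex shader typically computes: gl_Position = matrix * position. */
static Vec4 mat4_mul_vec4(const Mat4 *a, Vec4 v) {
    Vec4 r;
    r.x = a->m[0][0] * v.x + a->m[0][1] * v.y + a->m[0][2] * v.z + a->m[0][3] * v.w;
    r.y = a->m[1][0] * v.x + a->m[1][1] * v.y + a->m[1][2] * v.z + a->m[1][3] * v.w;
    r.z = a->m[2][0] * v.x + a->m[2][1] * v.y + a->m[2][2] * v.z + a->m[2][3] * v.w;
    r.w = a->m[3][0] * v.x + a->m[3][1] * v.y + a->m[3][2] * v.z + a->m[3][3] * v.w;
    return r;
}

/* Done by the GPU after the vertex shader: dividing by w gives
 * normalized device coordinates, whose x and y are the 2D position. */
static Vec4 perspective_divide(Vec4 clip) {
    Vec4 ndc = { clip.x / clip.w, clip.y / clip.w, clip.z / clip.w, 1.0f };
    return ndc;
}

/* Toy perspective matrix (an assumption of this sketch): it copies -z
 * into w, so points farther from the camera shrink toward the center. */
static Mat4 toy_perspective(void) {
    Mat4 p = {{
        { 1.0f, 0.0f,  0.0f, 0.0f },
        { 0.0f, 1.0f,  0.0f, 0.0f },
        { 0.0f, 0.0f,  1.0f, 0.0f },
        { 0.0f, 0.0f, -1.0f, 0.0f }
    }};
    return p;
}
```

With this toy matrix, the point (1, 1, -2) ends up at (0.5, 0.5) on screen, while the more distant point (1, 1, -4) ends up at (0.25, 0.25). That shrinking with distance is the perspective effect.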

fragment shader:

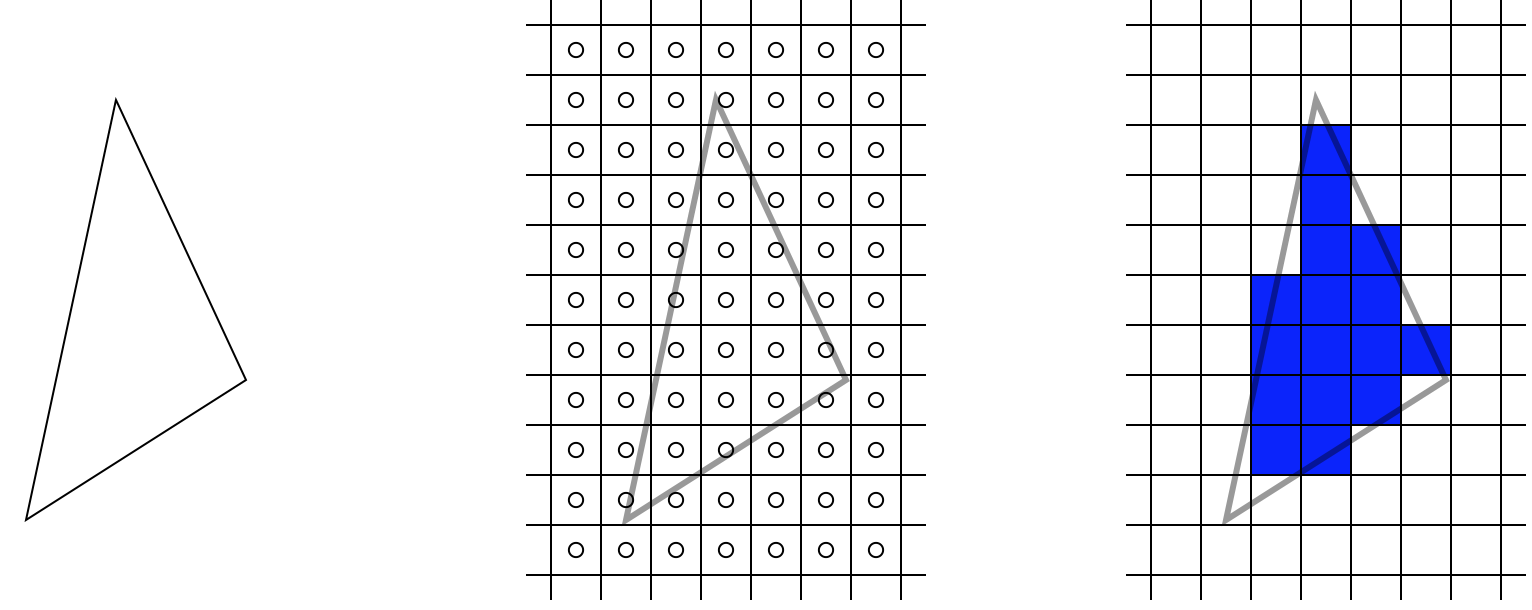

- input: 2D position of all pixels of a triangle + (color of edges or a texture image) + lighting parameters

- output: the color of every pixel of the triangle (if it is not occluded by another closer triangle), usually interpolated between vertices
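The "interpolated between vertices" part can be pictured with barycentric weights. The following is only a CPU-side sketch of the idea, using made-up names (`Vec2`, `Color`, `edge`, `interpolate_color`). A real GPU does this in hardware before the fragment shader sees the values, and also applies perspective correction, which is ignored here.

```c
/* Hypothetical helper types for this sketch; not part of OpenGL. */
typedef struct { float x, y; } Vec2;
typedef struct { float r, g, b; } Color;

/* Twice the signed area of the triangle (a, b, c). */
static float edge(Vec2 a, Vec2 b, Vec2 c) {
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

/* If pixel p lies inside the triangle v[0..2], write the color blended
 * from the three vertex colors into *out and return 1. Otherwise return 0. */
static int interpolate_color(Vec2 p, const Vec2 v[3], const Color c[3], Color *out) {
    float area = edge(v[0], v[1], v[2]);
    if (area == 0.0f) return 0; /* degenerate triangle */

    /* Barycentric weights: each one is 1 at its own vertex and 0 on the opposite edge. */
    float w0 = edge(v[1], v[2], p) / area;
    float w1 = edge(v[2], v[0], p) / area;
    float w2 = edge(v[0], v[1], p) / area;
    if (w0 < 0.0f || w1 < 0.0f || w2 < 0.0f) return 0; /* pixel is outside */

    out->r = w0 * c[0].r + w1 * c[1].r + w2 * c[2].r;
    out->g = w0 * c[0].g + w1 * c[1].g + w2 * c[2].g;
    out->b = w0 * c[0].b + w1 * c[1].b + w2 * c[2].b;
    return 1;
}
```

For a triangle with one red, one green and one blue vertex, a pixel exactly at the red vertex comes out pure red, and a pixel at the centroid comes out as an equal mix of the three.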

The fragments are discretized from the previously calculated triangle projections, see:

Related question: What are Vertex and Pixel shaders?

From this we see that the name "shader" is not very descriptive for current architectures. The name originates of course from "shading", i.e. computing light and shadow on a surface, which is handled by what we now call the "fragment shader". But "shaders" in GLSL now also manage vertex positions, as is the case for the vertex shader, not to mention the OpenGL 4.3 GL_COMPUTE_SHADER, which allows for arbitrary calculations completely unrelated to rendering, much like OpenCL.

TODO could OpenGL be efficiently implemented with OpenCL alone, i.e., making all stages programmable? Of course, there must be a performance / flexibility trade-off.

The first GPUs with shaders even used different specialized hardware for vertex and fragment shading, since those have quite different workloads. Current architectures, however, use multiple passes of a single type of hardware (basically small CPUs) for all shader types, which saves some hardware duplication. This design is known as a Unified Shader Model:

Adapted from this image, SVG source.

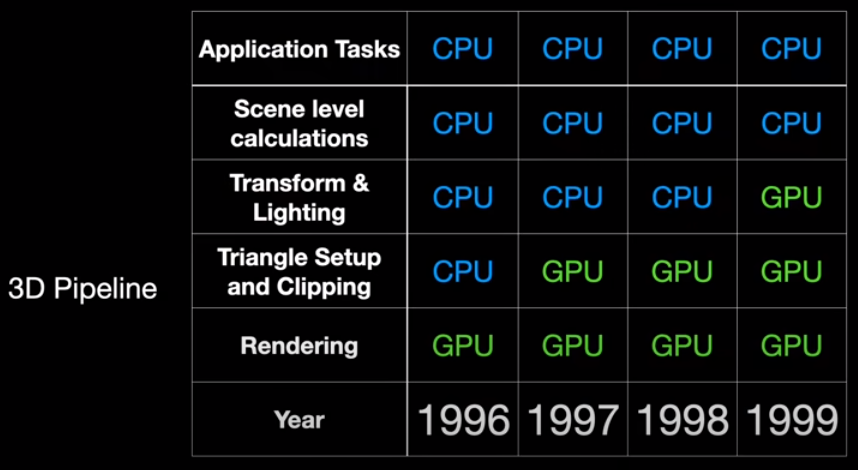

The following amazing summary from the great channel Asianometry https://youtu.be/GuV-HyslPxk?t=350 also clarifies that some of the pipeline was actually handled by the CPU itself rather than GPU in earlier technology, largely led by NVIDIA:

The same video then also goes on to mention how their GeForce 3 series from 2001 was the first product to introduce some level of shader programmability.

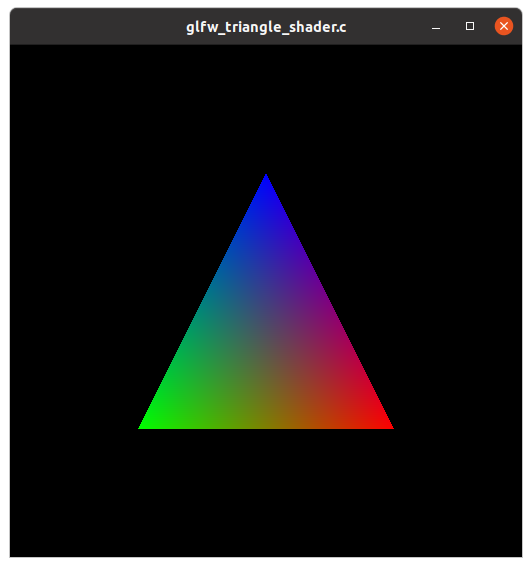

Source code example

To truly understand shaders and all they can do, you have to look at many examples and learn the APIs. https://github.com/JoeyDeVries/LearnOpenGL for example is a good source.

In modern OpenGL 4, even hello world triangle programs use super simple shaders, instead of older deprecated immediate APIs like glBegin and glColor.

Consider this triangle hello world example that has both the shader and immediate versions in a single program: https://mcmap.net/q/17103/-what-does-quot-immediate-mode-quot-mean-in-opengl

main.c

#include <stdio.h>

#include <stdlib.h>

#define GLEW_STATIC

#include <GL/glew.h>

#include <GLFW/glfw3.h>

#define INFOLOG_LEN 512

static const GLuint WIDTH = 512, HEIGHT = 512;

/* Vertex data is passed as input to this shader.

* ourColor is passed as input to the fragment shader. */

static const GLchar* vertexShaderSource =

"#version 330 core\n"

"layout (location = 0) in vec3 position;\n"

"layout (location = 1) in vec3 color;\n"

"out vec3 ourColor;\n"

"void main() {\n"

" gl_Position = vec4(position, 1.0f);\n"

" ourColor = color;\n"

"}\n";

static const GLchar* fragmentShaderSource =

"#version 330 core\n"

"in vec3 ourColor;\n"

"out vec4 color;\n"

"void main() {\n"

" color = vec4(ourColor, 1.0f);\n"

"}\n";

GLfloat vertices[] = {

/* Positions Colors */

0.5f, -0.5f, 0.0f, 1.0f, 0.0f, 0.0f,

-0.5f, -0.5f, 0.0f, 0.0f, 1.0f, 0.0f,

0.0f, 0.5f, 0.0f, 0.0f, 0.0f, 1.0f

};

int main(int argc, char **argv) {

int immediate = (argc > 1) && argv[1][0] == '1';

/* Used in !immediate only. */

GLuint vao, vbo;

GLuint shaderProgram;

glfwInit();

GLFWwindow* window = glfwCreateWindow(WIDTH, HEIGHT, __FILE__, NULL, NULL);

glfwMakeContextCurrent(window);

glewExperimental = GL_TRUE;

glewInit();

glClearColor(0.0f, 0.0f, 0.0f, 1.0f);

glViewport(0, 0, WIDTH, HEIGHT);

if (immediate) {

float ratio;

int width, height;

glfwGetFramebufferSize(window, &width, &height);

ratio = width / (float) height;

glClear(GL_COLOR_BUFFER_BIT);

glMatrixMode(GL_PROJECTION);

glLoadIdentity();

glOrtho(-ratio, ratio, -1.f, 1.f, 1.f, -1.f);

glMatrixMode(GL_MODELVIEW);

glLoadIdentity();

glBegin(GL_TRIANGLES);

glColor3f( 1.0f, 0.0f, 0.0f);

glVertex3f(-0.5f, -0.5f, 0.0f);

glColor3f( 0.0f, 1.0f, 0.0f);

glVertex3f( 0.5f, -0.5f, 0.0f);

glColor3f( 0.0f, 0.0f, 1.0f);

glVertex3f( 0.0f, 0.5f, 0.0f);

glEnd();

} else {

/* Build and compile shader program. */

/* Vertex shader */

GLuint vertexShader = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vertexShader, 1, &vertexShaderSource, NULL);

glCompileShader(vertexShader);

GLint success;

GLchar infoLog[INFOLOG_LEN];

glGetShaderiv(vertexShader, GL_COMPILE_STATUS, &success);

if (!success) {

glGetShaderInfoLog(vertexShader, INFOLOG_LEN, NULL, infoLog);

printf("ERROR::SHADER::VERTEX::COMPILATION_FAILED\n%s\n", infoLog);

}

/* Fragment shader */

GLuint fragmentShader = glCreateShader(GL_FRAGMENT_SHADER);

glShaderSource(fragmentShader, 1, &fragmentShaderSource, NULL);

glCompileShader(fragmentShader);

glGetShaderiv(fragmentShader, GL_COMPILE_STATUS, &success);

if (!success) {

glGetShaderInfoLog(fragmentShader, INFOLOG_LEN, NULL, infoLog);

printf("ERROR::SHADER::FRAGMENT::COMPILATION_FAILED\n%s\n", infoLog);

}

/* Link shaders */

shaderProgram = glCreateProgram();

glAttachShader(shaderProgram, vertexShader);

glAttachShader(shaderProgram, fragmentShader);

glLinkProgram(shaderProgram);

glGetProgramiv(shaderProgram, GL_LINK_STATUS, &success);

if (!success) {

glGetProgramInfoLog(shaderProgram, INFOLOG_LEN, NULL, infoLog);

printf("ERROR::SHADER::PROGRAM::LINKING_FAILED\n%s\n", infoLog);

}

glDeleteShader(vertexShader);

glDeleteShader(fragmentShader);

glGenVertexArrays(1, &vao);

glGenBuffers(1, &vbo);

glBindVertexArray(vao);

glBindBuffer(GL_ARRAY_BUFFER, vbo);

glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), vertices, GL_STATIC_DRAW);

/* Position attribute */

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(GLfloat), (GLvoid*)0);

glEnableVertexAttribArray(0);

/* Color attribute */

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(GLfloat), (GLvoid*)(3 * sizeof(GLfloat)));

glEnableVertexAttribArray(1);

glBindVertexArray(0);

glUseProgram(shaderProgram);

glBindVertexArray(vao);

glDrawArrays(GL_TRIANGLES, 0, 3);

glBindVertexArray(0);

}

glfwSwapBuffers(window);

/* Main loop. */

while (!glfwWindowShouldClose(window)) {

glfwPollEvents();

}

if (!immediate) {

glDeleteVertexArrays(1, &vao);

glDeleteBuffers(1, &vbo);

glDeleteProgram(shaderProgram);

}

glfwTerminate();

return EXIT_SUCCESS;

}

Adapted from Learn OpenGL, my GitHub upstream.

Compile and run on Ubuntu 20.04:

sudo apt install libglew-dev libglfw3-dev

gcc -ggdb3 -O0 -std=c99 -Wall -Wextra -pedantic -o main.out main.c -lGL -lGLEW -lglfw

# Shader

./main.out

# Immediate

./main.out 1

Identical outcome of both:

From that we see how:

- the vertex and fragment shader programs are represented as C-style strings containing GLSL code (vertexShaderSource and fragmentShaderSource) inside a regular C program that runs on the CPU

- this C program makes OpenGL calls which compile those strings into GPU code, e.g.:

glShaderSource(fragmentShader, 1, &fragmentShaderSource, NULL);

glCompileShader(fragmentShader);

- the shaders define their expected inputs, and the C program provides them to the GPU code through a pointer to memory. For example, the vertex shader defines its expected inputs as an array of vertex positions and colors:

"layout (location = 0) in vec3 position;\n"

"layout (location = 1) in vec3 color;\n"

"out vec3 ourColor;\n"

and also defines one of its outputs, ourColor, as an array of colors, which then becomes an input to the fragment shader:

static const GLchar* fragmentShaderSource =

"#version 330 core\n"

"in vec3 ourColor;\n"

The C program then provides the array containing the vertex positions and colors from the CPU to the GPU:

glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), vertices, GL_STATIC_DRAW);
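To make the stride and offset arguments of the two glVertexAttribPointer calls in main.c less magic, here is a CPU-only sketch of how one attribute of one vertex can be located inside the interleaved array. `fetch_attribute` is a hypothetical stand-in written for this explanation, not an OpenGL function, and the real fetch happens inside the GPU.

```c
#include <stddef.h>
#include <string.h>

/* Same interleaved layout as `vertices` in main.c:
 * 3 position floats followed by 3 color floats per vertex. */
static const float vertex_data[] = {
     0.5f, -0.5f, 0.0f,   1.0f, 0.0f, 0.0f,
    -0.5f, -0.5f, 0.0f,   0.0f, 1.0f, 0.0f,
     0.0f,  0.5f, 0.0f,   0.0f, 0.0f, 1.0f
};

/* Hypothetical CPU stand-in for the GPU's attribute fetch.
 * stride: bytes from the start of one vertex to the start of the next.
 * offset: bytes from the start of a vertex to this attribute.
 * size:   number of floats in the attribute (3 for a vec3).
 * index:  which vertex to read. */
static void fetch_attribute(const void *buffer, size_t stride, size_t offset,
                            int size, size_t index, float *out) {
    const unsigned char *base = (const unsigned char *)buffer;
    memcpy(out, base + index * stride + offset, (size_t)size * sizeof(float));
}
```

Calling `fetch_attribute(vertex_data, 6 * sizeof(float), 0, 3, 1, pos)` reads the position of the second vertex, and using an offset of `3 * sizeof(float)` instead reads its color. These are the same stride and offset values that main.c passes for attribute locations 0 and 1.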

In the immediate, non-shader example, however, we see magic API calls that explicitly give positions and colors:

glColor3f( 1.0f, 0.0f, 0.0f);

glVertex3f(-0.5f, -0.5f, 0.0f);

We understand therefore that this represents a much more restricted model, since the positions and colors are not arbitrary user-defined arrays in memory that then get processed by an arbitrary user provided program anymore, but rather just inputs to a Phong-like model.

In both cases, the rendered output normally goes straight to the video output, without passing back through the CPU, although it is possible to read it back to the CPU, e.g. if you want to save it to a file: How to use GLUT/OpenGL to render to a file?

Cool non-trivial shader applications to 3D graphics

One classic cool application of a non-trivial shader is dynamic shadows, i.e. shadows cast by one object on another, as opposed to shadows that only depend on the angle between the normal of a triangle and the light source, which was already covered in the Phong model:

Cool non-3D fragment shader applications

https://www.shadertoy.com/ is a "Twitter for fragment shaders". It contains a huge selection of visually impressive shaders, and can serve as a "zero setup" way to play with fragment shaders. Shadertoy runs on WebGL, an OpenGL interface for the browser, so when you click on a shadertoy, it renders the shader code in your browser. Like most "fragment shader graphing applications", it just has a fixed simple vertex shader that draws two triangles on the screen right in front of the camera (see: WebGL/GLSL - How does a ShaderToy work?), so the users only code the fragment shader.
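Conceptually, such a fragment-shader-only setup behaves like the loop below: some fixed code visits every pixel of the screen and calls a user-written function to get its color. This is only a CPU sketch with invented names (`Pixel`, `shade`, `render`). On a GPU the per-pixel calls run in parallel rather than one after another.

```c
#include <stddef.h>

typedef struct { unsigned char r, g, b; } Pixel;

/* Plays the role of the fragment shader: returns a color for the
 * normalized pixel coordinates u and v, both in [0, 1).
 * Here: a simple gradient, red growing with u and green with v. */
static Pixel shade(float u, float v) {
    Pixel p;
    p.r = (unsigned char)(u * 255.0f);
    p.g = (unsigned char)(v * 255.0f);
    p.b = 128;
    return p;
}

/* Plays the role of the fixed part of the pipeline: calls shade()
 * once for every pixel of a width x height image. */
static void render(Pixel *image, int width, int height) {
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            image[(size_t)y * (size_t)width + (size_t)x] =
                shade(x / (float)width, y / (float)height);
        }
    }
}
```

Changing only the body of `shade` changes the whole picture, which is the same division of labor that Shadertoy offers its users.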

Here are some more scientific oriented examples hand picked by me:

image processing can be done faster than on CPU for certain algorithms: Is it possible to build a heatmap from point data at 60 times per second?

plotting can be done faster than on CPU for certain functions: Is it possible to build a heatmap from point data at 60 times per second?

Shaders basically give you the correct coloring of the object that you want to render, based on several light equations. So if you have a sphere, a light, and a camera, then the camera should see some shadows, some shiny parts, etc, even if the sphere has only one color. Shaders perform the light equation computations to give you these effects.

The vertex shader transforms each vertex's 3D position in virtual space (your 3d model) to the 2D coordinate at which it appears on the screen.

The fragment shader basically gives you the coloring of each pixel by doing light computations.
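One common form of such a light equation is the Phong-style sum of an ambient, a diffuse and a specular term. The sketch below is an assumption-laden illustration rather than what any particular GPU or shader does: all direction vectors are assumed to already have unit length, the shininess exponent is an integer, and the names `Vec3`, `dot3` and `phong_intensity` are made up.

```c
typedef struct { float x, y, z; } Vec3;

static float dot3(Vec3 a, Vec3 b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

/* Phong-style brightness of one surface point.
 * n: surface normal, l: direction toward the light,
 * v: direction toward the camera (all assumed unit length).
 * ambient, diffuse, specular: material weights of the three terms. */
static float phong_intensity(Vec3 n, Vec3 l, Vec3 v,
                             float ambient, float diffuse, float specular,
                             int shininess) {
    float n_dot_l = dot3(n, l);
    if (n_dot_l <= 0.0f) {
        return ambient; /* surface faces away from the light: shaded side */
    }

    /* Mirror reflection of l about n: r = 2 (n . l) n - l */
    Vec3 r = { 2.0f * n_dot_l * n.x - l.x,
               2.0f * n_dot_l * n.y - l.y,
               2.0f * n_dot_l * n.z - l.z };

    /* Shiny highlight: (r . v) raised to the shininess power. */
    float r_dot_v = dot3(r, v);
    float highlight = 0.0f;
    if (r_dot_v > 0.0f) {
        highlight = 1.0f;
        for (int i = 0; i < shininess; i++) {
            highlight *= r_dot_v;
        }
    }
    return ambient + diffuse * n_dot_l + specular * highlight;
}
```

Even for a sphere of a single color, the value changes from point to point because the normal `n` changes, which is what produces the darker and shinier regions described above.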

Put briefly, the GPU's rendering routines provide hook/callback functions that let you paint the textures of the faces. These hooks are the shaders.
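The hook/callback analogy can be written down in plain C with function pointers. This is a loose sketch, not how a driver is implemented: the names `Point3`, `Rgb`, `Pipeline`, `run_pipeline`, `scale_half` and `height_to_red` are all invented, and the rasterization that a real GPU performs between the two hooks is skipped, so each input point is treated as a single fragment.

```c
typedef struct { float x, y, z; } Point3;
typedef struct { float r, g, b; } Rgb;

/* The two hooks the user can plug in. */
typedef Point3 (*VertexHook)(Point3 position);
typedef Rgb (*FragmentHook)(Point3 position);

typedef struct {
    VertexHook vertex;     /* plays the role of the vertex shader */
    FragmentHook fragment; /* plays the role of the fragment shader */
} Pipeline;

/* The fixed part: for every input point, call the vertex hook, then
 * call the fragment hook on the transformed position. */
static void run_pipeline(const Pipeline *p, const Point3 *in, int count,
                         Point3 *out_pos, Rgb *out_color) {
    for (int i = 0; i < count; i++) {
        out_pos[i] = p->vertex(in[i]);
        out_color[i] = p->fragment(out_pos[i]);
    }
}

/* Example user hooks. */
static Point3 scale_half(Point3 v) {
    Point3 r = { v.x * 0.5f, v.y * 0.5f, v.z * 0.5f };
    return r;
}

static Rgb height_to_red(Point3 v) {
    Rgb c = { v.y, 0.0f, 0.0f }; /* higher points are redder */
    return c;
}
```

A caller would fill in `Pipeline p = { scale_half, height_to_red };` and pass it to `run_pipeline`. Swapping either function pointer changes the result without touching the fixed loop.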
