Yes, you can use App Engine to communicate with Google Cloud Machine Learning (referred to as CloudML from here on).

To communicate with CloudML from Python, you can use the Google API client library, which works with any Google service. This client library can also be used on App Engine; it even has specific documentation for this here.

I would recommend first experimenting with the API client locally before testing it on App Engine. For the rest of this answer, I make no distinction between using this client library locally and on App Engine.

You mentioned two different kinds of operations you want to do with CloudML:

- Update a model with new data

- Get predictions from a trained/deployed model

1. Update a model with new data

Updating a model with new data actually consists of two steps: first, training the model on the new data (with or without CloudML), and then deploying the newly trained model on CloudML.
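Because the second step only makes sense once the first has finished, any automation has to wait for the training job to complete before deploying. Below is a minimal polling sketch. It assumes the job was submitted through the API client library and that `get_job` is a callable you supply that returns the job resource as a dict. The function name, its parameters and the timeout are hypothetical. The state names are those of the CloudML job resource.

```python
import time

# Terminal states of a CloudML job resource. Other states (QUEUED,
# PREPARING, RUNNING, CANCELLING) mean the job is still in progress.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "CANCELLED"}


def wait_for_job(get_job, poll_seconds=30, timeout_seconds=3600, sleep=time.sleep):
    """Poll a training job until it reaches a terminal state (hypothetical helper).

    get_job: zero-argument callable that returns the job resource as a dict,
    e.g. a wrapper around ml.projects().jobs().get(name=...).execute().
    Returns the final job dict if it SUCCEEDED, otherwise raises RuntimeError.
    """
    waited = 0
    while True:
        job = get_job()
        state = job.get("state")
        if state in TERMINAL_STATES:
            if state != "SUCCEEDED":
                raise RuntimeError("Job ended in state %s: %s"
                                   % (state, job.get("errorMessage", "")))
            return job  # training is done, so deploying is safe now
        if waited >= timeout_seconds:
            raise RuntimeError("Job still %s after %d seconds" % (state, waited))
        sleep(poll_seconds)  # injectable so the loop can be tested without waiting
        waited += poll_seconds
```

In this sketch, `ml` is the service object returned by `discovery.build('ml', ...)`, and you would call something like `wait_for_job(lambda: ml.projects().jobs().get(name='projects/<your_project_id>/jobs/<your_job_id>').execute())`. Training usually takes far longer than an App Engine request is allowed to run. For that reason, you would probably not block a user-facing request on this loop. Running it from a task queue or cron job, or checking the state once per invocation, is likely a better fit.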

You can do both steps with the API client library from App Engine, but to reduce complexity, I suggest you start by following the prediction quickstart. That will leave you with a trained and deployed model and give you an understanding of the steps involved.

Once you are familiar with the concepts and steps involved, you will see that you can store your new data on Google Cloud Storage (GCS) and replace each gcloud command in the quickstart with the equivalent API call made through the API client library (documentation).
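To illustrate that mapping, here is a sketch of the API calls behind training and deployment: `projects.jobs.create`, `projects.models.create`, `projects.models.versions.create` and `projects.models.versions.setDefault`. The field names come from the v1 REST reference. The code in this answer uses `v1beta1`, where some fields may differ, so check the reference for the version you build. All helper names, and any bucket paths you pass in, are hypothetical. `ml` is the service object returned by `discovery.build`.

```python
def training_job_body(job_id, package_uri, python_module, region, args=None):
    """Request body for projects.jobs.create (training job)."""
    return {
        "jobId": job_id,
        "trainingInput": {
            "packageUris": [package_uri],   # gs:// path of your packaged trainer
            "pythonModule": python_module,  # e.g. "trainer.task" (hypothetical)
            "region": region,
            "args": list(args or []),       # forwarded to your trainer, e.g. new-data paths
        },
    }


def version_body(version_name, deployment_uri):
    """Request body for projects.models.versions.create."""
    return {"name": version_name, "deploymentUri": deployment_uri}


def submit_training(ml, project_id, body):
    # Replaces the quickstart's "submit training job" gcloud command.
    return ml.projects().jobs().create(
        parent="projects/" + project_id, body=body).execute()


def create_model(ml, project_id, model_name, region):
    # Only needed once; later retrainings just add new versions.
    return ml.projects().models().create(
        parent="projects/" + project_id,
        body={"name": model_name, "regions": [region]}).execute()


def create_version(ml, project_id, model_name, body):
    # Returns a long-running operation; the version is usable once it completes.
    return ml.projects().models().versions().create(
        parent="projects/%s/models/%s" % (project_id, model_name),
        body=body).execute()


def make_default(ml, project_id, model_name, version_name):
    # Predictions sent to the model name are served by its default version.
    return ml.projects().models().versions().setDefault(
        name="projects/%s/models/%s/versions/%s" % (project_id, model_name, version_name),
        body={}).execute()
```

The body builders are kept separate from the calls so they can be checked without network access. After deploying a retrained version, you will likely need `make_default`, because predictions addressed to the model name keep going to the previous default version otherwise.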

2. Get predictions from a deployed model

If you have a deployed model (if not, follow the link in the previous step), you can easily communicate with CloudML to get either 1) batch predictions or 2) online predictions (the latter is in alpha).
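For the batch option, a prediction job is submitted through the same `projects.jobs.create` method as training, but with a `predictionInput` instead of a `trainingInput`. The results are written to `outputPath` on GCS rather than returned in the response. The sketch below shows only the request body. Its field names are taken from the v1 REST reference and are an assumption for `v1beta1`, and the accepted `dataFormat` values depend on the API version. The helper name and any paths are hypothetical.

```python
def batch_prediction_body(job_id, project_id, model_name,
                          input_paths, output_path, region, data_format="TEXT"):
    """Request body for a batch prediction job (projects.jobs.create)."""
    return {
        "jobId": job_id,
        "predictionInput": {
            # Input files on GCS, e.g. one JSON instance per line for "TEXT".
            "dataFormat": data_format,
            "inputPaths": list(input_paths),
            "outputPath": output_path,  # GCS prefix where results are written
            "region": region,
            # Uses the model's default version.
            "modelName": "projects/%s/models/%s" % (project_id, model_name),
        },
    }
```

You would submit it with `ml.projects().jobs().create(parent='projects/<your_project_id>', body=...).execute()`. Then you poll the job like a training job and read the output files once it has succeeded.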

Since you are using App Engine, I assume you are interested in using the online predictions (getting immediate results).

The minimal code required to do this:

```python
from oauth2client.client import GoogleCredentials
from googleapiclient import discovery

projectID = 'projects/<your_project_id>'
modelName = projectID + '/models/<your_model_name>'

# Uses Application Default Credentials (the App Engine service account
# when deployed, your gcloud credentials when run locally).
credentials = GoogleCredentials.get_application_default()
ml = discovery.build('ml', 'v1beta1', credentials=credentials)

# Create a dictionary with the fields from the request body.
# '...' stands for the rest of your image data.
requestDict = {"instances": [
    {"image": [0.0, ..., 0.0, 0.0], "key": 0}
]}

# Create a request to call projects.predict.
request = ml.projects().predict(
    name=modelName,
    body=requestDict)

response = request.execute()
```

Here, {"image": <image_array>, "key": <key_id>} is the input format you defined for the deployed model when following the link in the previous step. The returned `response` will contain the model's output.
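When you send several instances at once, the `key` field lets you match each output to its input. Below is a small sketch for building `requestDict` from a list of images and reading `response` back. It assumes that the deployed model echoes the `key` field in every prediction, as models built on that input format usually do, but check your model's outputs. It also assumes the service reports a failure through an `error` field instead of `predictions`. The helper names are hypothetical.

```python
def build_request(images):
    """Wrap raw image arrays in the {"image": ..., "key": ...} input format."""
    return {"instances": [{"image": image, "key": i} for i, image in enumerate(images)]}


def extract_predictions(response):
    """Return the list of predictions, or raise if the service reported an error."""
    if "error" in response:
        raise RuntimeError("Prediction failed: %s" % response["error"])
    return response["predictions"]


def predictions_by_key(response, key_field="key"):
    """Map each prediction back to its input (assumes the model echoes the key)."""
    return dict((p[key_field], p) for p in extract_predictions(response))
```

With the code above, you would pass `body=build_request(my_images)` to `ml.projects().predict(...)` and then look up `predictions_by_key(response)[0]` for the first image, where `my_images` is your own list of arrays.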

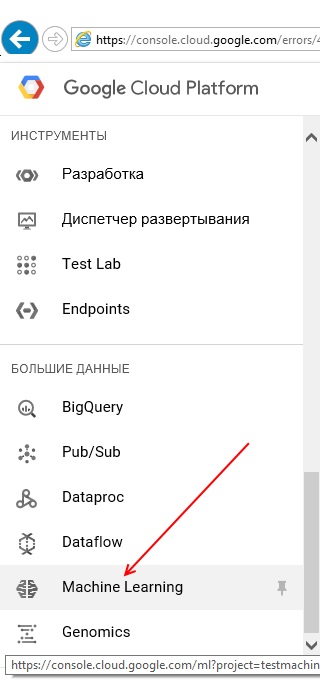

Machine Learning. There I can create some models and tasks. As I understand it, models are code based on TensorFlow, while tasks are tasks to train or predict. So I am asking: is there any way an App Engine application in Python can control that Machine Learning? For example, I will put some model and whatever else there, but how do I then use it? – Schizothymia