I'm trying to reload the Keras Tuner trials after the tuner's search has completed so that I can inspect the results. I haven't been able to find any documentation or answers that cover this.

For example, I set up BayesianOptimization to search for the best hyper-parameters as follows:

    ## Build Hyper Parameter Search
    import keras_tuner as kt  # assumed import; older releases use `import kerastuner as kt`
    from tensorflow.keras import callbacks  # assumed source of `callbacks`

    tuner = kt.BayesianOptimization(build_model,
                                    objective='val_categorical_accuracy',
                                    max_trials=10,
                                    directory='kt_dir',
                                    project_name='lstm_dense_bo')

    tuner.search((X_train_seq, X_train_num), y_train_cat,
                 epochs=30,
                 batch_size=64,
                 validation_data=((X_val_seq, X_val_num), y_val_cat),
                 callbacks=[callbacks.EarlyStopping(monitor='val_loss', patience=3,
                                                    restore_best_weights=True)])

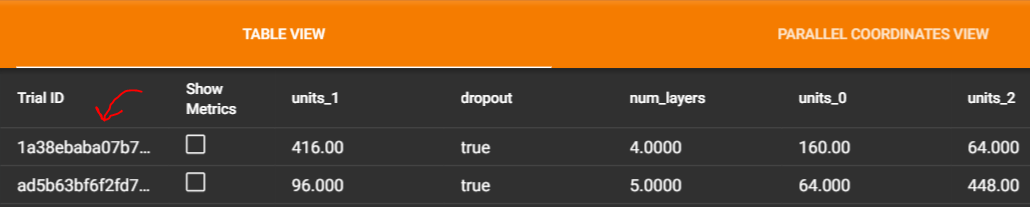

I see this creates trial files in the directory kt_dir with project name lstm_dense_bo such as below:
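As a stopgap, the per-trial files can be read without any Tuner object. The sketch below assumes each trial folder holds a `trial.json` with `trial_id`, `score`, and `hyperparameters` keys, which is what I see in my files but may differ between Keras Tuner versions. The helper names `load_trials` and `best_trial` are my own, and "best" is taken to mean the highest score, which fits an accuracy objective.

```python
import json
from pathlib import Path


def load_trials(project_dir):
    """Read every trial.json found under project_dir into a list of dicts."""
    trials = []
    # Assumed layout: <project_dir>/trial_<id>/trial.json
    for path in sorted(Path(project_dir).glob("trial_*/trial.json")):
        with path.open() as f:
            trials.append(json.load(f))
    return trials


def best_trial(trials):
    """Return the trial with the highest score, or None if none has a score.

    Assumes the objective is maximized (e.g. an accuracy metric).
    """
    # Trials that failed or never finished may have no score recorded.
    scored = [t for t in trials if t.get("score") is not None]
    if not scored:
        return None
    return max(scored, key=lambda t: t["score"])
```

With my layout this would be called as `best_trial(load_trials("kt_dir/lstm_dense_bo"))`, and the chosen values should then sit under `["hyperparameters"]["values"]` of the returned dict. This only recovers the recorded numbers, not a usable model.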

Now, if I restart my Jupyter kernel, how can I reload these trials into a Tuner object and subsequently inspect the best model or the best hyperparameters or the best trial?
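To make the question concrete, this is roughly what I have in mind. It is only a sketch: it assumes that constructing the tuner again with the same `directory` and `project_name` and with `overwrite=False` picks up the trials already on disk instead of starting over, and that `build_model` is the same function used for the original search. The helpers `reload_tuner` and `inspect_best` are hypothetical names of mine, not part of Keras Tuner.

```python
def reload_tuner(build_model, directory="kt_dir", project_name="lstm_dense_bo"):
    """Re-create the tuner over an existing project directory without searching."""
    # Imported inside the helper so that defining it does not load TensorFlow.
    import keras_tuner as kt  # older releases use: import kerastuner as kt

    return kt.BayesianOptimization(
        build_model,
        objective="val_categorical_accuracy",
        max_trials=10,
        directory=directory,
        project_name=project_name,
        overwrite=False,  # assumption: keeps and reloads the saved trials
    )


def inspect_best(tuner):
    """Return the best trial, its hyperparameters, and the rebuilt best model."""
    best_trial = tuner.oracle.get_best_trials(num_trials=1)[0]
    best_hps = tuner.get_best_hyperparameters(num_trials=1)[0]
    best_model = tuner.get_best_models(num_models=1)[0]
    return best_trial, best_hps, best_model
```

After a kernel restart I would then call `tuner = reload_tuner(build_model)` followed by `trial, hps, model = inspect_best(tuner)`, and look at `hps.values` and `trial.score`, or print everything with `tuner.results_summary()`. Is this the intended way to do it?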

I'd very much appreciate your help. Thank you.