`scipy.optimize.minimize` using the default method is returning the initial value as the result, without any error or warning messages. While using the Nelder-Mead method as suggested by this answer solves the problem, I would like to understand:

Why does the default method return the starting point as the answer without any warning, and is there a way I can protect against this "wrong answer without warning" behavior in this case?

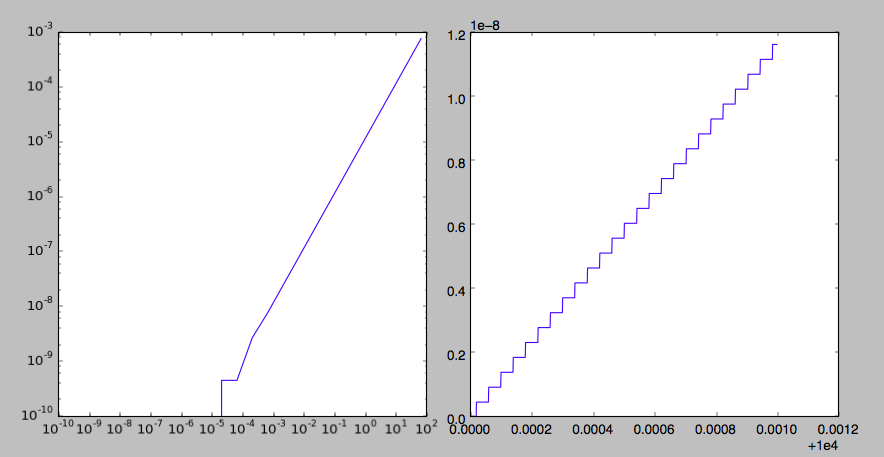

Note that the function `separation` uses the Python package Skyfield to generate the values to be minimized. These values are not guaranteed to be smooth, which may be why the simplex method (Nelder-Mead) works better here.
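To check whether limited input resolution alone can produce this symptom, here is a minimal sketch that reproduces it without Skyfield. It assumes the underlying issue is that the objective cannot resolve very small changes in its input. `quantized_bowl` is a hypothetical stand-in for `separation` (a parabola with its minimum at 13054 whose input is rounded to 1e-4), not the question's function.

```python
import numpy as np
from scipy.optimize import minimize


def quantized_bowl(x, resolution=1e-4):
    """Hypothetical stand-in for `separation`: a parabola with its minimum at
    13054 whose input is rounded to `resolution` before evaluation, mimicking
    a time value that cannot resolve very small steps."""
    xq = np.round(float(np.asarray(x).ravel()[0]) / resolution) * resolution
    return (xq - 13054.0) ** 2


x0 = 10000.0
# No method given: SciPy picks BFGS because there are no bounds or constraints.
res_default = minimize(quantized_bowl, x0)
# Derivative-free simplex search, as in the question.
res_nm = minimize(quantized_bowl, x0, method="Nelder-Mead")

print("default:    ", res_default.x, "nit =", res_default.nit, "success =", res_default.success)
print("Nelder-Mead:", res_nm.x, "nit =", res_nm.nit, "success =", res_nm.success)
```

If the assumption holds, the default run stops at 10000 with `nit` 0 and `success` True, mirroring the question's output, while Nelder-Mead, which never estimates a gradient, ends close to 13054.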

RESULTS:

```
test result: [ 2.14159739] 'correct': 2.14159265359 initial: 0.0
default result: [ 10000.] 'correct': 13054 initial: 10000
Nelder-Mead result: [ 13053.81011963] 'correct': 13054 initial: 10000
```

FULL OUTPUT using DEFAULT METHOD:

```
status: 0
success: True
njev: 1
nfev: 3
hess_inv: array([[1]])
fun: 1694.98753895812
x: array([ 10000.])
message: 'Optimization terminated successfully.'
jac: array([ 0.])
nit: 0
```
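The `jac: array([ 0.])` and `nit: 0` lines indicate that BFGS estimated a gradient of exactly zero at the starting point and stopped before its first iteration. One plausible cause, assuming this Skyfield version stores the time internally as a single float64 Julian date in days (an assumption, not checked against Skyfield's source), is that the default finite-difference step of about 1.49e-8 s is far below the smallest time difference such a Julian date can represent. The arithmetic:

```python
import numpy as np

# BFGS's default absolute finite-difference step: sqrt(machine epsilon).
default_eps = np.sqrt(np.finfo(float).eps)  # about 1.49e-8

# 2016-03-09 00:00 UTC is Julian date 2457456.5; add the 10000 s start point.
# Assumption: Skyfield keeps this as one float64 value, in days.
jd = 2457456.5 + 10000.0 / 86400.0

# Smallest representable change in that Julian date, converted to seconds.
resolution_s = np.spacing(jd) * 86400.0  # about 4.0e-5 s

print("default step:    %.3e s" % default_eps)
print("time resolution: %.3e s" % resolution_s)

# The default step, expressed in days, is lost entirely when added to jd:
print(jd + default_eps / 86400.0 == jd)  # True
```

If that assumption holds, `separation(x0 + eps)` returns exactly the same value as `separation(x0)`, so the forward difference is 0, which matches the reported `jac`.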

FULL OUTPUT using Nelder-Mead METHOD:

```
status: 0
nfev: 63
success: True
fun: 3.2179306044608054
x: array([ 13053.81011963])
message: 'Optimization terminated successfully.'
nit: 28
```
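Comparing the two outputs, the suspicious signs in the default run are `nit: 0` together with an all-zero `jac`. A minimal sketch of a guard for that case follows; `minimize_checked` and `quantized_bowl` are hypothetical helpers, not part of SciPy, and the stand-in function only imitates the symptom (a parabola with its minimum at 13054 whose input is rounded to 1e-4).

```python
import warnings

import numpy as np
from scipy.optimize import minimize


def minimize_checked(fun, x0, **kwargs):
    """Hypothetical wrapper: run scipy.optimize.minimize, then warn if a
    'successful' result did no iterations or has an all-zero gradient estimate."""
    res = minimize(fun, x0, **kwargs)
    nit = getattr(res, "nit", None)
    jac = getattr(res, "jac", None)  # Nelder-Mead results carry no jac
    zero_grad = jac is not None and np.all(np.asarray(jac) == 0)
    if res.success and (nit == 0 or zero_grad):
        warnings.warn(
            "minimize reported success with nit=%r and jac=%r; the "
            "finite-difference step may be below the function's resolution"
            % (nit, jac),
            RuntimeWarning,
            stacklevel=2,
        )
    return res


def quantized_bowl(x, resolution=1e-4):
    # Hypothetical stand-in for `separation`: a parabola with its minimum at
    # 13054 whose input is rounded to `resolution` before evaluation.
    xq = np.round(float(np.asarray(x).ravel()[0]) / resolution) * resolution
    return (xq - 13054.0) ** 2


with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    res = minimize_checked(quantized_bowl, 10000.0)

for w in caught:
    print(w.category.__name__, w.message)
```

A zero-iteration result is not always wrong (the starting point might already be a minimum), which is why this sketch only warns instead of raising; cross-checking with `method="Nelder-Mead"` is another option.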

Here is the full script:

```python
from __future__ import print_function  # lets the print() calls run on Python 2 and 3

import numpy as np
from scipy.optimize import minimize
from skyfield.api import load, JulianDate  # older Skyfield API, as used in the question

data = load('de421.bsp')
sun = data['sun']
earth = data['earth']
moon = data['moon']


def g(x, a, b):
    """Smooth test function; one minimum is at a*x + b = pi."""
    return np.cos(a*x + b)


def separation(seconds, lat, lon):
    """Moon-Sun angular separation in arcseconds, seen from (lat, lon),
    at 2016-03-09 00:00:00 UTC plus `seconds`."""
    # minimize() passes `seconds` as a 1-element ndarray, hence the float() calls
    lat, lon, seconds = float(lat), float(lon), float(seconds)
    place = earth.topos(lat, lon)
    jd = JulianDate(utc=(2016, 3, 9, 0, 0, seconds))
    mpos = place.at(jd).observe(moon).apparent().position.km
    spos = place.at(jd).observe(sun).apparent().position.km
    mlen = np.sqrt((mpos**2).sum())
    slen = np.sqrt((spos**2).sum())
    # angle between the two position vectors, radians -> arcseconds
    sepa = ((3600.*180./np.pi) *
            np.arccos(np.dot(mpos, spos)/(mlen*slen)))
    return sepa


x_init = 0.0
out_g = minimize(g, x_init, args=(1, 1))
print("test result: ", out_g.x, "'correct': ", np.pi-1, "initial: ", x_init)  # gives right answer

sec_init = 10000
out_s_def = minimize(separation, sec_init, args=(32.5, 215.1))
print("default result: ", out_s_def.x, "'correct': ", 13054, "initial: ", sec_init)

out_s_NM = minimize(separation, sec_init, args=(32.5, 215.1),
                    method="Nelder-Mead")
print("Nelder-Mead result: ", out_s_NM.x, "'correct': ", 13054, "initial: ", sec_init)

print()
print("FULL OUTPUT using DEFAULT METHOD:")
print(out_s_def)
print()
print("FULL OUTPUT using Nelder-Mead METHOD:")
print(out_s_NM)
```
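A quick way to test the "step too small" hypothesis on any objective is to compute forward differences at several step sizes and see where the slope stops being exactly zero. A minimal sketch follows; `forward_differences` and `quantized_bowl` are hypothetical helpers (the latter a parabola with its minimum at 13054 whose input is rounded to 1e-4). For the script above the call would presumably be `forward_differences(lambda s: separation(s, 32.5, 215.1), 10000.0, steps)`, which needs Skyfield and `de421.bsp`, so the sketch uses the stand-in instead.

```python
import numpy as np


def forward_differences(fun, x0, steps):
    """Forward-difference slopes (fun(x0 + h) - fun(x0)) / h for each step h."""
    f0 = fun(x0)
    return [(fun(x0 + h) - f0) / h for h in steps]


def quantized_bowl(x, resolution=1e-4):
    # Hypothetical stand-in for `separation`: a parabola with its minimum at
    # 13054 whose input is rounded to `resolution` before evaluation.
    xq = np.round(float(np.asarray(x).ravel()[0]) / resolution) * resolution
    return (xq - 13054.0) ** 2


# The first entry equals BFGS's default absolute step, sqrt(machine epsilon).
steps = [np.sqrt(np.finfo(float).eps), 1e-6, 1e-4, 1e-2, 1.0]
slopes = forward_differences(quantized_bowl, 10000.0, steps)
for h, s in zip(steps, slopes):
    print("h = %-10.3g slope = %g" % (h, s))
```

For this stand-in the two smallest steps give a slope of exactly zero, and the larger ones give roughly the true slope of about -6108. A step in the range where the slope becomes stable is a reasonable candidate for a finite-difference step.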

`minimize` by default uses an algorithm that requires your function to be smooth. If your function is not smooth, you end up in a garbage-in, garbage-out situation. – Corbitt
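For the default gradient-based method, a practical consequence of this smoothness requirement is that the finite-difference step must be large enough for the function to change at all. A minimal sketch, assuming SciPy's BFGS `eps` option (the absolute forward-difference step used when no `jac` is given, default about 1.49e-8) and a hypothetical stand-in `quantized_bowl` (a parabola with its minimum at 13054 whose input is rounded to 1e-4); the value 1.0 is an assumed choice, not a recommendation for the Skyfield problem.

```python
import numpy as np
from scipy.optimize import minimize


def quantized_bowl(x, resolution=1e-4):
    # Hypothetical stand-in for `separation`: a parabola with its minimum at
    # 13054 whose input is rounded to `resolution` before evaluation.
    xq = np.round(float(np.asarray(x).ravel()[0]) / resolution) * resolution
    return (xq - 13054.0) ** 2


x0 = 10000.0

# Default step (about 1.49e-8) is below the 1e-4 resolution:
# the gradient estimate is 0 and BFGS stops at x0.
res_small = minimize(quantized_bowl, x0, method="BFGS")

# Assumed step of 1.0, well above the resolution, gives a usable gradient.
res_large = minimize(quantized_bowl, x0, method="BFGS", options={"eps": 1.0})

print("default eps:", res_small.x, "nit =", res_small.nit)
print("eps = 1.0:  ", res_large.x, "nit =", res_large.nit)
```

The trade-off is truncation error: with a forward step of 1, the estimated slope of this parabola is 2(x - 13054) + 1, so BFGS settles near 13053.5 rather than 13054. A derivative-free method such as Nelder-Mead avoids choosing a step at all.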