I have a very simple program that maps a dummy red texture to a quad.

Here is the texture definition in C++:

    struct DummyRGB8Texture2d
    {
        uint8_t data[3*4];
        int width;
        int height;
    };

    DummyRGB8Texture2d myTexture
    {
        {
            255,0,0,
            255,0,0,
            255,0,0,
            255,0,0
        },
        2u,
        2u
    };

This is how I set up the texture:

    void SetupTexture()
    {
        // allocate a texture on the default texture unit (GL_TEXTURE0):
        GL_CHECK(glCreateTextures(GL_TEXTURE_2D, 1, &m_texture));

        // allocate texture:
        GL_CHECK(glTextureStorage2D(m_texture, 1, GL_RGB8, myTexture.width, myTexture.height));
        GL_CHECK(glTextureParameteri(m_texture, GL_TEXTURE_WRAP_S, GL_REPEAT));
        GL_CHECK(glTextureParameteri(m_texture, GL_TEXTURE_WRAP_T, GL_REPEAT));
        GL_CHECK(glTextureParameteri(m_texture, GL_TEXTURE_MAG_FILTER, GL_NEAREST));
        GL_CHECK(glTextureParameteri(m_texture, GL_TEXTURE_MIN_FILTER, GL_NEAREST));

        // tell the shader that the sampler2d uniform uses the default texture unit (GL_TEXTURE0)
        GL_CHECK(glProgramUniform1i(m_program->Id(), /* location in shader */ 3, /* texture unit index */ 0));

        // bind the created texture to the specified target. this is necessary even in dsa
        GL_CHECK(glBindTexture(GL_TEXTURE_2D, m_texture));
        GL_CHECK(glGenerateMipmap(GL_TEXTURE_2D));
    }

This is how I draw the texture to the quad:

    void Draw()
    {
        m_target->ClearTargetBuffers();
        m_program->MakeCurrent();

        // load the texture to the GPU:
        GL_CHECK(glTextureSubImage2D(m_texture, 0, 0, 0, myTexture.width, myTexture.height,
                                     GL_RGB, GL_UNSIGNED_BYTE, myTexture.data));

        GL_CHECK(glBindVertexArray(m_vao));
        GL_CHECK(glDrawElements(GL_TRIANGLES, static_cast<GLsizei>(VideoQuadElementArray.size()), GL_UNSIGNED_INT, 0));

        m_target->SwapTargetBuffers();
    }

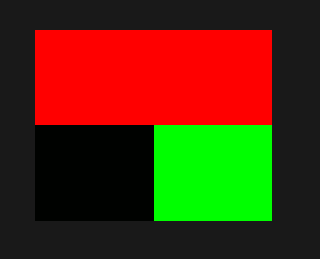

The Result:

I can't figure out why this texture won't appear red. However, if I change the texture's internal format to RGBA / RGBA8 and extend the texture data array with another element in each row, I get a nice red texture.

In case it's relevant, here are my vertex attributes and my (very simple) shaders:

    struct VideoQuadVertex
    {
        glm::vec3 vertex;
        glm::vec2 uv;
    };

    std::array<VideoQuadVertex, 4> VideoQuadInterleavedArray
    {
        /* vec3 */ VideoQuadVertex{ glm::vec3{ -0.25f, -0.25f, 0.5f }, /* vec2 */ glm::vec2{ 0.0f, 0.0f } },
        /* vec3 */ VideoQuadVertex{ glm::vec3{ 0.25f, -0.25f, 0.5f }, /* vec2 */ glm::vec2{ 1.0f, 0.0f } },
        /* vec3 */ VideoQuadVertex{ glm::vec3{ 0.25f, 0.25f, 0.5f }, /* vec2 */ glm::vec2{ 1.0f, 1.0f } },
        /* vec3 */ VideoQuadVertex{ glm::vec3{ -0.25f, 0.25f, 0.5f }, /* vec2 */ glm::vec2{ 0.0f, 1.0f } }
    };

Vertex setup:

    void SetupVertexData()
    {
        // create a VAO to hold all node rendering states, no need for binding:
        GL_CHECK(glCreateVertexArrays(1, &m_vao));

        // create vertex buffer objects for data and indices and initialize them:
        GL_CHECK(glCreateBuffers(static_cast<GLsizei>(m_vbo.size()), m_vbo.data()));

        // allocate memory for interleaved vertex attributes and transfer them to the GPU:
        GL_CHECK(glNamedBufferData(m_vbo[EVbo::Data], VideoQuadInterleavedArray.size() * sizeof(VideoQuadVertex), VideoQuadInterle
        GL_CHECK(glVertexArrayAttribBinding(m_vao, 0, 0));
        GL_CHECK(glVertexArrayVertexBuffer(m_vao, 0, m_vbo[EVbo::Data], 0, sizeof(VideoQuadVertex)));

        // setup the indices array:
        GL_CHECK(glNamedBufferData(m_vbo[EVbo::Element], VideoQuadElementArray.size() * sizeof(GLuint), VideoQuadElementArray.data
        GL_CHECK(glVertexArrayElementBuffer(m_vao, m_vbo[EVbo::Element]));

        // enable the relevant attributes for this VAO and
        // specify their format and binding point:

        // vertices:
        GL_CHECK(glEnableVertexArrayAttrib(m_vao, 0 /* location in shader*/));
        GL_CHECK(glVertexArrayAttribFormat(
            m_vao,
            0, // attribute location
            3, // number of components in each data member
            GL_FLOAT, // type of each component
            GL_FALSE, // should normalize
            offsetof(VideoQuadVertex, vertex) // offset from the beginning of the buffer
        ));

        // uvs:
        GL_CHECK(glEnableVertexArrayAttrib(m_vao, 1 /* location in shader*/));
        GL_CHECK(glVertexAttribFormat(
            1, // attribute location
            2, // number of components in each data member
            GL_FLOAT, // type of each component
            GL_FALSE, // should normalize
            offsetof(VideoQuadVertex, uv) // offset from the beginning of the buffer
        ));
        GL_CHECK(glVertexArrayAttribBinding(m_vao, 1, 0));
    }

Vertex shader:

    layout(location = 0) in vec3 position;
    layout(location = 1) in vec2 texture_coordinate;

    out FragmentData
    {
        vec2 uv;
    } toFragment;

    void main(void)
    {
        toFragment.uv = texture_coordinate;
        gl_Position = vec4 (position, 1.0f);
    }

Fragment shader:

    in FragmentData
    {
        vec2 uv;
    } data;

    out vec4 color;

    layout (location = 3) uniform sampler2D tex_object;

    void main()
    {
        color = texture(tex_object, data.uv);
    }

GL_UNPACK_ALIGNMENT is probably still at its default value of 4, which makes the GL expect some padding bytes at the end of each row of your client-side array. See "Common Mistakes: Texture Upload and Pixel Reads" in the OpenGL wiki for details. – Leix

Call glPixelStorei(GL_UNPACK_ALIGNMENT, 1); before glTextureSubImage2D. – Mazel
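To make the alignment point from the comments concrete, here is a minimal sketch of the row-stride arithmetic. It assumes GL_UNSIGNED_BYTE components and GL_UNPACK_ROW_LENGTH left at 0. AlignedRowStride and PadRows are hypothetical helper names introduced for illustration; they are not OpenGL functions, and the sketch makes no GL calls.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: number of bytes the GL advances between consecutive
// rows of client memory when reading byte-sized components, i.e. the tight
// row size rounded up to a multiple of GL_UNPACK_ALIGNMENT.
constexpr std::size_t AlignedRowStride(std::size_t width,
                                       std::size_t bytesPerPixel,
                                       std::size_t alignment)
{
    return (width * bytesPerPixel + alignment - 1) / alignment * alignment;
}

// 2 RGB pixels = 6 bytes per row, but with alignment 4 the GL steps 8 bytes.
static_assert(AlignedRowStride(2, 3, 4) == 8, "RGB rows get 2 padding bytes");
// With alignment 1 the stride equals the tightly packed row size.
static_assert(AlignedRowStride(2, 3, 1) == 6, "no padding with alignment 1");
// 2 RGBA pixels = 8 bytes per row, already a multiple of 4.
static_assert(AlignedRowStride(2, 4, 4) == 8, "RGBA rows need no padding");

// Hypothetical helper: copy tightly packed rows into a buffer whose rows are
// padded to the given alignment (an alternative to changing the alignment).
std::vector<std::uint8_t> PadRows(const std::vector<std::uint8_t>& tight,
                                  std::size_t width, std::size_t height,
                                  std::size_t bytesPerPixel,
                                  std::size_t alignment)
{
    const std::size_t tightStride = width * bytesPerPixel;
    const std::size_t paddedStride = AlignedRowStride(width, bytesPerPixel, alignment);
    std::vector<std::uint8_t> padded(paddedStride * height, 0);
    for (std::size_t row = 0; row < height; ++row)
    {
        std::copy_n(tight.begin() + row * tightStride, tightStride,
                    padded.begin() + row * paddedStride);
    }
    return padded;
}
```

For the 2x2 RGB texture in the question, the array holds 12 tightly packed bytes, but with the default alignment the GL starts the second row at byte 8. It therefore reads 0,255,0 for the first pixel of that row and then runs past the end of the array. RGBA rows are 8 bytes wide, so they already satisfy the alignment, which is consistent with the RGBA variant rendering correctly. Either set GL_UNPACK_ALIGNMENT to 1 before the upload, as suggested above, or upload data whose rows are padded to 4 bytes.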