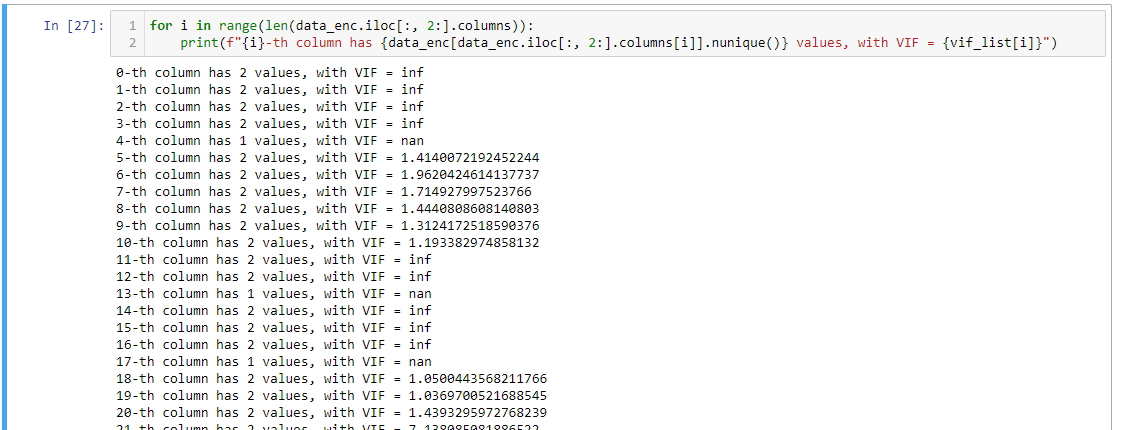

I am comparatively new to Python, statistics, and data science libraries. My requirement is to run a multicollinearity test on a dataset with n columns and make sure that every column/variable with VIF > 5 is dropped.

I found the following code:

    from statsmodels.stats.outliers_influence import variance_inflation_factor

    def calculate_vif_(X, thresh=5.0):
        variables = range(X.shape[1])
        tmp = range(X[variables].shape[1])
        print(tmp)
        dropped=True
        while dropped:
            dropped=False
            vif = [variance_inflation_factor(X[variables].values, ix) for ix in range(X[variables].shape[1])]

            maxloc = vif.index(max(vif))
            if max(vif) > thresh:
                print('dropping \'' + X[variables].columns[maxloc] + '\' at index: ' + str(maxloc))
                del variables[maxloc]
                dropped=True

        print('Remaining variables:')
        print(X.columns[variables])
        return X[variables]

However, I do not clearly understand how to call it: should I pass the entire dataset as the `X` argument? If so, it is not working.

Please help!
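
For reference, here is a minimal sketch of what I am trying to achieve. It assumes `X` is a pandas DataFrame containing only numeric predictor columns (no target column, no missing values). The function name `drop_high_vif` and the sample data are made up for illustration. It tracks column names in a plain list, because in Python 3 a `range` object does not support `del`. As far as I can tell, `variance_inflation_factor` does not add an intercept on its own, and this sketch does not add one either.

```python
import numpy as np
import pandas as pd
from statsmodels.stats.outliers_influence import variance_inflation_factor


def drop_high_vif(X, thresh=5.0):
    """Repeatedly drop the column with the largest VIF until all are <= thresh."""
    cols = list(X.columns)  # a real list, so entries can be deleted
    while len(cols) > 1:
        values = X[cols].values
        vif = [variance_inflation_factor(values, i) for i in range(len(cols))]
        worst = int(np.argmax(vif))
        if vif[worst] <= thresh:
            break  # nothing left above the threshold
        print(f"dropping '{cols[worst]}' (VIF = {vif[worst]:.1f})")
        del cols[worst]
    return X[cols]


# Hypothetical data: column "c" is almost exactly a + b, so it is collinear.
rng = np.random.default_rng(0)
a = rng.normal(size=200)
b = rng.normal(size=200)
df = pd.DataFrame({"a": a, "b": b, "c": a + b + 0.01 * rng.normal(size=200)})

reduced = drop_high_vif(df)
print("Remaining variables:", list(reduced.columns))
```

In this sketch the whole DataFrame of predictors is passed as `X`, and the return value is a DataFrame with the high-VIF columns removed.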