Low latency is certainly possible with ASP.NET Core / Kestrel.

Here is a tiny web app to demonstrate this...

    using System.Net;
    using Microsoft.AspNetCore;
    using Microsoft.AspNetCore.Hosting;
    using Microsoft.AspNetCore.Builder;
    using Microsoft.AspNetCore.Http;

    public class Program
    {
        public static void Main(string[] args)
        {
            IWebHost host = new WebHostBuilder()
                .UseKestrel()
                .Configure(app =>
                {
                    // notice how we don't have app.UseMvc()?
                    app.Map("/hello", SayHello); // <-- ex: "http://localhost:5000/hello"
                })
                .Build();

            host.Run();
        }

        private static void SayHello(IApplicationBuilder app)
        {
            app.Run(async context =>
            {
                // implement your own response
                await context.Response.WriteAsync("Hello World!");
            });
        }
    }
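
To check the latency you actually get from an endpoint like this, a small client along the following lines can help. This is only a rough sketch, not a proper benchmark: the `LatencyProbe` class and its method names are made up for illustration, the URL assumes Kestrel's default `http://localhost:5000` address, and `async Main` assumes C# 7.1 or later.

```csharp
using System;
using System.Diagnostics;
using System.Net.Http;
using System.Threading.Tasks;

public static class LatencyProbe
{
    // Nearest-rank percentile over an ascending-sorted array of samples.
    public static double Percentile(double[] sorted, double p)
    {
        if (sorted.Length == 0) throw new ArgumentException("no samples");
        int rank = (int)Math.Ceiling(p / 100.0 * sorted.Length);
        return sorted[Math.Max(rank, 1) - 1];
    }

    // Sends `count` sequential GET requests and returns each latency in milliseconds.
    public static async Task<double[]> MeasureAsync(string url, int count)
    {
        using (var client = new HttpClient())
        {
            // Warm-up request so JIT and connection setup do not skew the samples.
            await client.GetStringAsync(url);

            var samples = new double[count];
            for (int i = 0; i < count; i++)
            {
                var sw = Stopwatch.StartNew();
                await client.GetStringAsync(url);
                sw.Stop();
                samples[i] = sw.Elapsed.TotalMilliseconds;
            }
            return samples;
        }
    }

    public static async Task Main(string[] args)
    {
        // Assumption: default Kestrel address; pass another URL as the first argument.
        string url = args.Length > 0 ? args[0] : "http://localhost:5000/hello";
        double[] samples = await MeasureAsync(url, 1000);
        Array.Sort(samples);
        Console.WriteLine(
            $"p50={Percentile(samples, 50):F3} ms  p99={Percentile(samples, 99):F3} ms");
    }
}
```

Looking at the median and the 99th percentile rather than the average makes occasional slow requests (GC pauses, for example) visible. For serious comparisons a dedicated load-testing tool is a better choice than a sequential loop.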

I have answered similar questions several times before, here and here.

If you wish to compare the ASP.NET Core framework against others, this is a great visual: https://www.techempower.com/benchmarks/#section=data-r16&hw=ph&test=plaintext. As you can see, ASP.NET Core has exceptional results and is the leading framework for C#.

In my code block above I noted the lack of app.UseMvc(). If you do need it, I have written a very detailed answer about getting better latency: What is the difference between AddMvc() and AddMvcCore()?
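
As a rough idea of what the leaner setup can look like, here is a minimal sketch that assumes ASP.NET Core 2.x (where `AddJsonFormatters()` is available on the MVC core builder). The `HelloController` is a hypothetical controller added only for illustration.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        // AddMvcCore registers only the core MVC services;
        // extra features are opted into one by one.
        services.AddMvcCore()
                .AddJsonFormatters(); // JSON input/output only (ASP.NET Core 2.x API)
    }

    public void Configure(IApplicationBuilder app)
    {
        // No conventional routes are defined, so only attribute routing applies.
        app.UseMvc();
    }
}

// Hypothetical controller; deriving from ControllerBase avoids pulling in view support.
[Route("hello")]
public class HelloController : ControllerBase
{
    [HttpGet]
    public IActionResult Get() => Ok(new { message = "Hello World!" });
}
```

The idea is that every MVC feature you do not register is work the pipeline does not have to do per request. Which extras you need (authorization, data annotations, CORS and so on) depends on your app.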

.NET Core Runtime (CoreRT)

If you still need more performance, I would encourage you to look at .NET Core Runtime (CoreRT).

Note that, at the time of writing, CoreRT should be reviewed in more detail before you adopt it for a production system.

"CoreRT brings much of the performance and all of the deployment benefits of native compilation, while retaining your ability to write in your favorite .NET programming language."

CoreRT offers great benefits that are critical for many apps.

- The native compiler generates a SINGLE FILE, including the app, managed dependencies and CoreRT.

- Native compiled apps start up faster since they execute already compiled code. They don't need to generate machine code at runtime nor load a JIT compiler.

- Native compiled apps can use an optimizing compiler, resulting in faster throughput from higher quality code (C++ compiler optimizations). Both the LLILC and IL to CPP compilers rely on optimizing compilers.

These benefits open up some new scenarios for .NET developers:

- Copy a single file executable from one machine and run on another (of the same kind) without installing a .NET runtime.

- Create and run a docker image that contains a single file executable (e.g. one file in addition to Ubuntu 14.04).

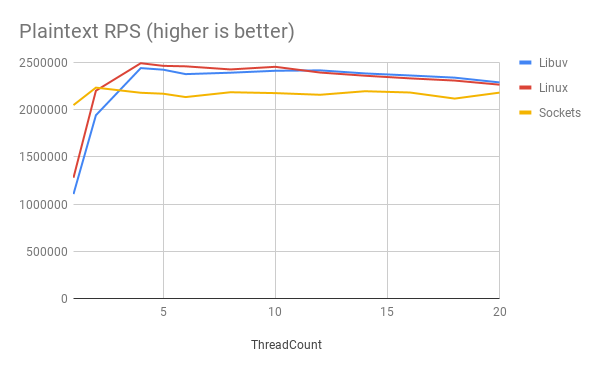

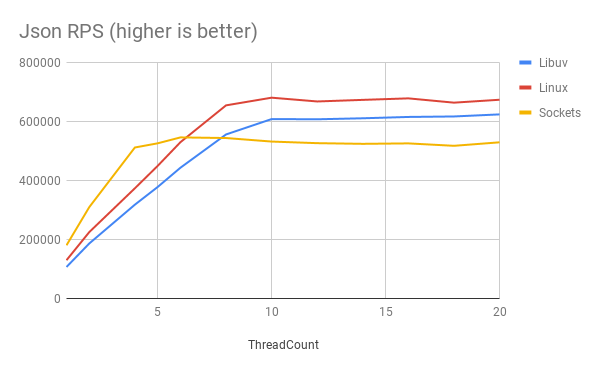

Linux-specific optimizations

There is a nice library that targets a very specialized case: Kestrel on Linux (although the code is safe to use on other operating systems). The principle behind this optimization is to replace the libuv transport library (which ASP.NET Core uses) with a Linux-specific transport.

It uses the kernel primitives directly to implement the Transport API. This reduces the number of heap allocated objects (e.g. uv_buf_t, SocketAsyncEventArgs), which means there is less GC pressure. Implementations building on top of an xplat API will pool objects to achieve this.

    using Microsoft.AspNetCore;
    using Microsoft.AspNetCore.Hosting;
    using RedHat.AspNetCore.Server.Kestrel.Transport.Linux; // <--- note this !

    public static IWebHost BuildWebHost(string[] args) =>
        WebHost.CreateDefaultBuilder(args)
            .UseLinuxTransport() // <--- and note this !!!
            .UseStartup<Startup>()
            .Build();

    // note: It's safe to call UseLinuxTransport on non-Linux platforms, it will no-op

You can take a look at the repository for that transport on GitHub here: https://github.com/redhat-developer/kestrel-linux-transport

Source: https://developers.redhat.com/blog/2018/07/24/improv-net-core-kestrel-performance-linux/