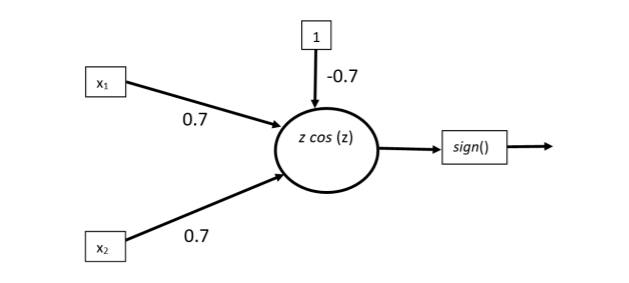

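Yes, a single-layer neural network (even a single neuron) can solve the XOR problem if its activation function is non-monotonic. With a monotonic activation, thresholding the output is equivalent to thresholding the weighted sum, so the decision boundary is a single straight line, and no single line separates XOR. A periodic activation, by contrast, cuts the XY plane more than once, and even an abs or Gaussian activation cuts it twice.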
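To make "cuts the plane more than once" concrete, write the pre-activation as $z = w_1 x_1 + w_2 x_2 + b$ and assume the output is turned into a class by thresholding at $\tfrac12$ (the threshold is my assumption; other values strictly inside each activation's range give the same picture). Each activation then accepts a union of intervals of $z$:

$$
\begin{aligned}
e^{-z^2} > \tfrac12 &\iff -\sqrt{\ln 2} < z < \sqrt{\ln 2} \\
|z| > \tfrac12 &\iff z < -\tfrac12 \ \text{or}\ z > \tfrac12 \\
\sin z > \tfrac12 &\iff z \in \left(\tfrac{\pi}{6} + 2k\pi,\ \tfrac{5\pi}{6} + 2k\pi\right),\quad k \in \mathbb{Z}
\end{aligned}
$$

Because $z$ is linear in $(x_1, x_2)$, each interval endpoint is a line $w_1 x_1 + w_2 x_2 + b = c$, all parallel to each other: two lines for the Gaussian and abs activations, infinitely many for sine.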

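Try it yourself with a Gaussian activation exp(-z^2), where z = W1 * x1 + W2 * x2 + Wb * 1 is the weighted sum (the bias input is always 1), using W1 = W2 = 100 and Wb = -100: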

- exp(-(100 * 0 + 100 * 0 - 100 * 1)^2) = ~0

- exp(-(100 * 0 + 100 * 1 - 100 * 1)^2) = 1

- exp(-(100 * 1 + 100 * 0 - 100 * 1)^2) = 1

- exp(-(100 * 1 + 100 * 1 - 100 * 1)^2) = ~0
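If you want to check the Gaussian case in code, here is a minimal Python sketch. The function name `gaussian_neuron` and the 0.5 threshold used to turn the output into a 0/1 prediction are my own illustrative choices.

```python
import math

# Weights from the example above; the bias input is fixed at 1
W1, W2, WB = 100.0, 100.0, -100.0

def gaussian_neuron(x1, x2):
    """Single neuron with Gaussian activation exp(-z^2)."""
    z = W1 * x1 + W2 * x2 + WB * 1
    return math.exp(-z ** 2)  # exp(-10000) quietly underflows to 0.0

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    out = gaussian_neuron(x1, x2)
    # Assumed threshold of 0.5 turns the output into a binary prediction
    prediction = int(out > 0.5)
    print(f"x=({x1}, {x2})  output={out}  prediction={prediction}  xor={x1 ^ x2}")
```

The large weights (100) only make the Gaussian bump narrow enough that the two "off" corners land far out in its tails; smaller weights also work as long as the thresholded outputs still match XOR.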

Or with the abs activation: W1 = -1, W2 = 1, Wb = 0 (yes, you can solve it even without a bias)

- abs(-1 * 0 + 1 * 0) = 0

- abs(-1 * 0 + 1 * 1) = 1

- abs(-1 * 1 + 1 * 0) = 1

- abs(-1 * 1 + 1 * 1) = 0
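The abs version needs no threshold at all, since with integer inputs the output is exactly the XOR value. A minimal Python sketch (the function name `abs_neuron` is just for illustration):

```python
# Weights from the example above; no bias term
W1, W2 = -1, 1

def abs_neuron(x1, x2):
    """Single neuron with abs activation and no bias."""
    return abs(W1 * x1 + W2 * x2)

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    # The raw output already equals x1 XOR x2
    print(f"x=({x1}, {x2})  output={abs_neuron(x1, x2)}  xor={x1 ^ x2}")
```

This works because |x2 - x1| is 0 when the inputs agree and 1 when they differ, which is exactly the definition of XOR on {0, 1}.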

Or with sine: W1 = W2 = -PI/2, Wb = -PI

- sin(-PI/2 * 0 - PI/2 * 0 - PI * 1) = 0

- sin(-PI/2 * 0 - PI/2 * 1 - PI * 1) = 1

- sin(-PI/2 * 1 - PI/2 * 0 - PI * 1) = 1

- sin(-PI/2 * 1 - PI/2 * 1 - PI * 1) = 0
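The sine case can be checked the same way. One practical wrinkle: `math.pi` is only a floating-point approximation of π, so sin(-PI) and sin(-2PI) come out as tiny values around 1e-16 instead of exactly 0. The sketch below therefore compares against XOR with a tolerance and also shows a thresholded prediction (the name `sine_neuron` and the 0.5 threshold are my own assumptions).

```python
import math

# Weights from the example above; the bias input is fixed at 1
W1 = W2 = -math.pi / 2
WB = -math.pi

def sine_neuron(x1, x2):
    """Single neuron with sine activation."""
    z = W1 * x1 + W2 * x2 + WB * 1
    return math.sin(z)

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    out = sine_neuron(x1, x2)
    # "Zero" outputs are ~1e-16 due to rounding, so compare with a tolerance
    matches = math.isclose(out, x1 ^ x2, abs_tol=1e-9)
    # Assumed threshold of 0.5 turns the output into a binary prediction
    prediction = int(out > 0.5)
    print(f"x=({x1}, {x2})  output={out:.3g}  prediction={prediction}  matches_xor={matches}")
```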