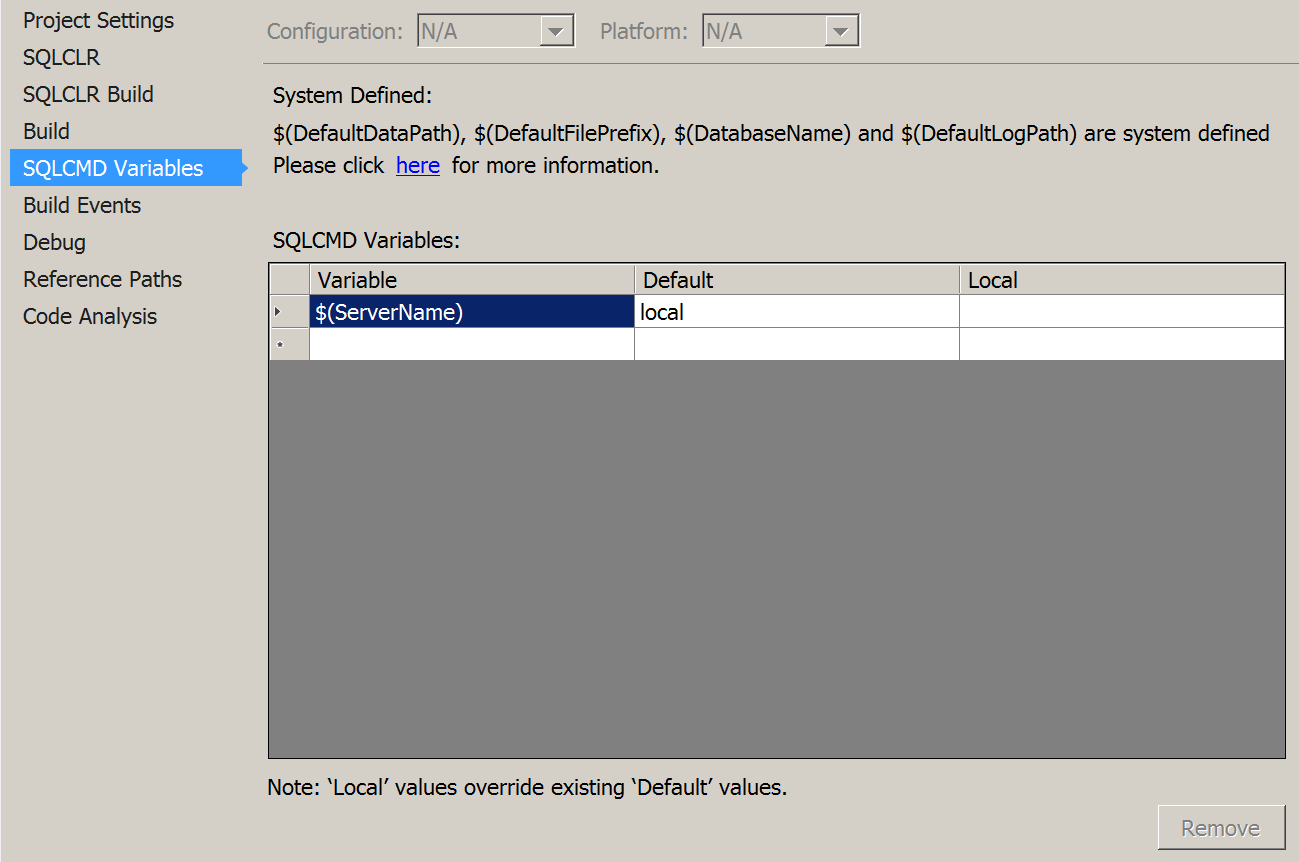

First set up SQLCMD Variables by right clicking on the project and going to properties and the SQLCMD Variables tab:

![Project Properties]()

Then set up a folder structure for you to organize scripts that you want to run for a specific server or any other thing you want to switch off of, like customer. Each server gets a folder. I like to barrel all the scripts I want to run for that folder into an index file in that folder. The index lists out a :r command followed by each scrip in the folder that should run, organized by filename with a numerical prefix so the order can be controlled.

![Folder Structure]()

In the index file in the folder that groups all the server folders will do something different than listing out a call to each server's index file, instead it switches which index file to run based on the SQLCMD variable passed in based on the publish profile. It does so with the following simple code:

:r .\$(Customer)\Index.sql

The reason you want to do it like this by setting up folders and index files is that not only does it keep things organized it also allows you to use Go statements in all of your files. You can then use one script with :r statements for all other scripts you want to run, nesting to your heart's content.

You could set up your sqlcmd file to do it the following way which doesn't require you to set up folders or specific names for files, but it requires you to remove all GO statements. By doing it the above way you don't have to remove any go statements.

if @@servername = '$(ServerName)'

begin

... code for specific server

end

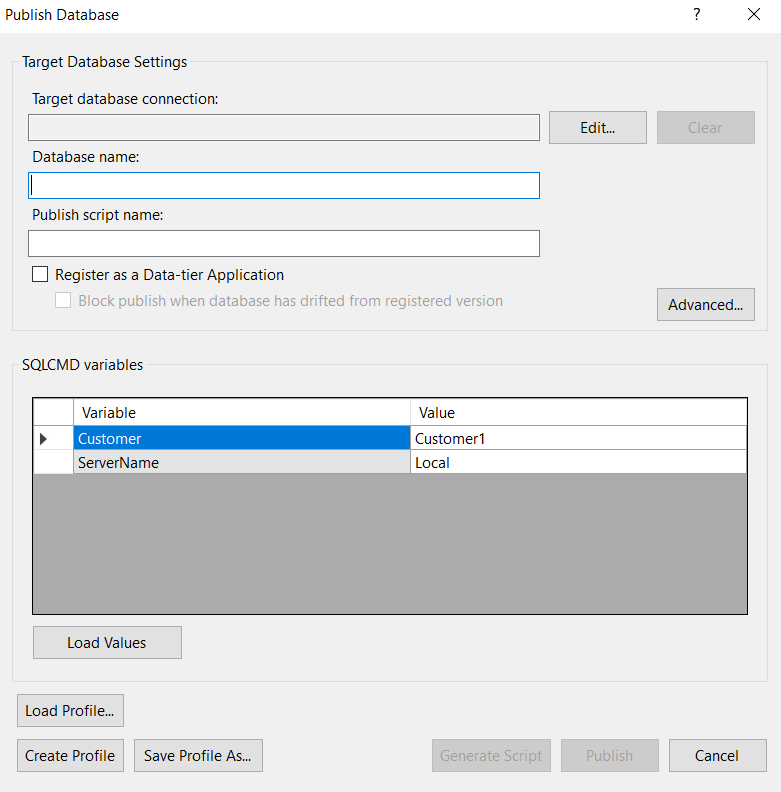

Then when I right click the project and hit publish it builds the project and pops up with the following dialog. I can change which scripts get run by changing the SQLCMD variable.

![Publish Database Dialog]()

I like to save off the most commonly used settings as a separate publish profile and then I can get back to it by just clicking on it's .xml file in the solution explorer. This process makes everything so easy and there is no need to modify the xml by hand, just click save profile as, Load Profile, or Create Profile using the publish database dialog shown above.

Also, you can generate your index files with the following powershell script:

foreach($directory in (Get-ChildItem -Directory -Recurse) | Get-Item)

{

#skip writing to any index file with ---Ignore in it.

if(-Not ((Test-Path $directory\Index.sql) -and (Get-Content $directory\Index.sql | %{$match = $false}{ $match = $match -or $_ -match "---Ignore" }{$match})))

{

$output = "";

foreach($childitem in (Get-ChildItem $directory -Exclude Index.sql, *.ps1 | Sort-Object | Get-Item))

{

$output= $output + ":r .\" + $childitem.name

if($childitem -is [system.io.directoryinfo])

{

$output = $output + "\Index.sql";

}

$output = $output + "`r`n"

}

Out-File $directory\Index.sql -Encoding utf8 -InputObject $output

}

}