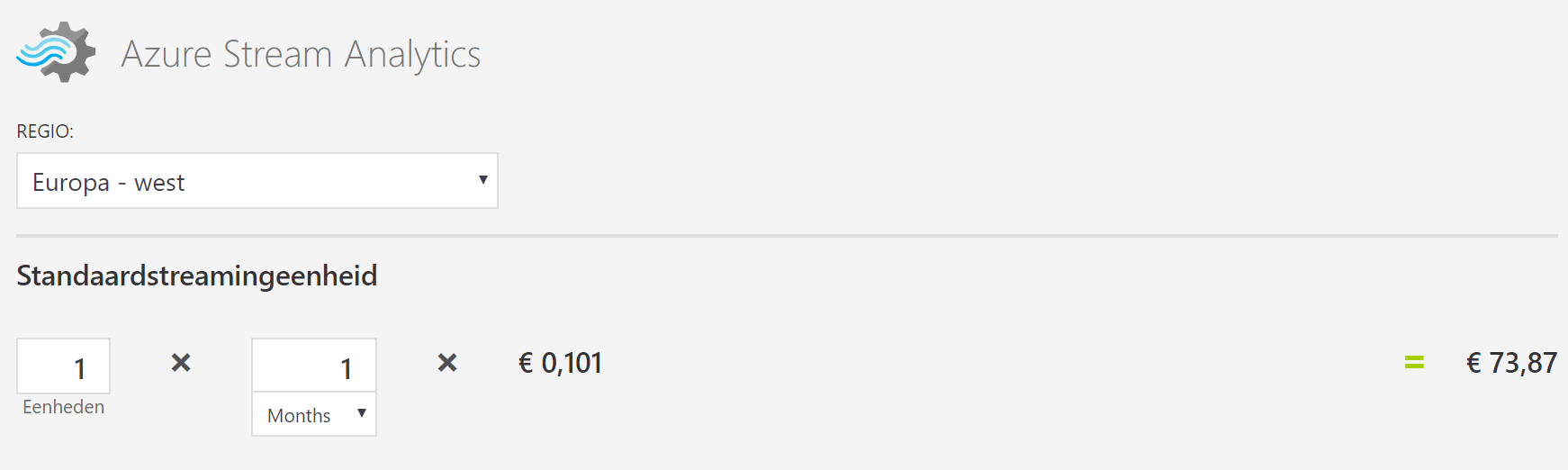

To write sensor data from an IoT device to a SQL database in the cloud, I use an Azure Stream Analytics job. The SA job has an IoT Hub input and a SQL database output. The query is trivial: it just passes all data through. According to the Microsoft pricing calculator, the cheapest way of accomplishing this (in western Europe) is around 75 euros per month (see screenshot).

In practice, only 1 message per minute is sent through the hub, and the price is fixed per month (regardless of the number of messages). I am surprised by the price for such a trivial task on so little data. Is there a cheaper alternative for such low capacity needs? Perhaps an Azure Function?
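
To put the volume in perspective, here is a rough back-of-the-envelope sketch in Python. The 30-day month and the function names are my own assumptions for illustration; the 75 euro figure is the calculator estimate quoted above.

```python
# Rough estimate of monthly message volume and the effective cost per message.
# Assumptions (for illustration only): a 30-day month and a flat monthly price.

MINUTES_PER_DAY = 60 * 24


def messages_per_month(per_minute: float = 1.0, days: int = 30) -> int:
    """Number of messages sent in a month at a constant per-minute rate."""
    return round(per_minute * MINUTES_PER_DAY * days)


def cost_per_message(monthly_cost_eur: float, messages: int) -> float:
    """Effective price of a single message under a flat monthly fee."""
    return monthly_cost_eur / messages


if __name__ == "__main__":
    n = messages_per_month()
    print(f"{n} messages per month")
    print(f"{cost_per_message(75.0, n):.4f} EUR per message")
```

At one message per minute that is 43,200 messages in a 30-day month, which is why the flat fee feels out of proportion to the workload.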