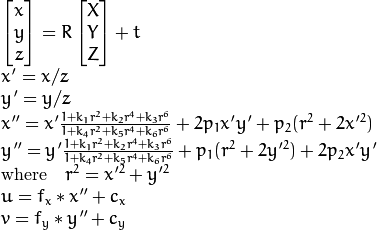

The equations that project a 3D point [X; Y; Z] to a 2D image point [u; v] are given on the OpenCV documentation page on camera calibration:

![opencv projection equations]()

(source: opencv.org)
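For reference, the model can be written out as follows. This is a transcription of the pinhole projection with the rational radial terms (k1 to k6) and the tangential terms (p1, p2). It assumes zero skew and leaves out the optional higher-order terms (thin-prism, tilt) that OpenCV also supports.

$$
\begin{aligned}
x' &= X / Z, \qquad y' = Y / Z, \qquad r^2 = x'^2 + y'^2 \\
x'' &= x' \, \frac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + 2 p_1 x' y' + p_2 \left(r^2 + 2 x'^2\right) \\
y'' &= y' \, \frac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + p_1 \left(r^2 + 2 y'^2\right) + 2 p_2 x' y' \\
u &= f_x \, x'' + c_x, \qquad v = f_y \, y'' + c_y
\end{aligned}
$$

Here (x', y') are the undistorted normalized coordinates, (x'', y'') the distorted ones, and f_x, f_y, c_x, c_y the entries of the calibration matrix K.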

In the case of lens distortion, the equations are non-linear and depend on up to 8 parameters in the model above (k1 to k6, p1 and p2). Hence, inverting such a model to estimate the undistorted coordinates from the distorted ones normally requires an iterative non-linear solver (e.g. Newton's method, the Levenberg-Marquardt algorithm, etc.). This is what the function undistortPoints does behind the scenes, with parameters tuned to make the optimization fast at the cost of a little accuracy.
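To make the cost of inversion concrete, here is a minimal sketch of one simple iterative scheme (fixed-point iteration) for a reduced model with only k1, k2, p1 and p2. The function names `distort` and `undistort_iterative` are made up for this illustration, and the scheme is not claimed to match what undistortPoints does internally.

```python
import numpy as np

def distort(xy, k1, k2, p1, p2):
    """Apply a radial (k1, k2) + tangential (p1, p2) model to normalized points."""
    xy = np.asarray(xy, dtype=float)
    x, y = xy[..., 0], xy[..., 1]
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return np.stack([xd, yd], axis=-1)

def undistort_iterative(xy_d, k1, k2, p1, p2, n_iter=5):
    """Invert the model by fixed-point iteration (hypothetical helper).

    Starts from the distorted point and repeatedly solves
    x = (x_d - tangential(x)) / radial(x) using the previous estimate of x.
    """
    xy_d = np.asarray(xy_d, dtype=float)
    xy = xy_d.copy()  # initial guess: no distortion
    for _ in range(n_iter):
        x, y = xy[..., 0], xy[..., 1]
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 * r2
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        xy = np.stack([(xy_d[..., 0] - dx) / radial,
                       (xy_d[..., 1] - dy) / radial], axis=-1)
    return xy

# Round trip: distort a known point, then try to recover it.
coeffs = dict(k1=-0.2, k2=0.05, p1=0.001, p2=-0.0005)
true_xy = np.array([[0.3, -0.2]])
distorted = distort(true_xy, **coeffs)
err_5 = np.abs(undistort_iterative(distorted, n_iter=5, **coeffs) - true_xy).max()
err_50 = np.abs(undistort_iterative(distorted, n_iter=50, **coeffs) - true_xy).max()
```

With few iterations the result is only approximate, which is the speed/accuracy trade-off mentioned above: every point costs several evaluations of the model.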

However, in the particular case of image lens correction (as opposed to point correction), there is a much more efficient approach based on a well-known image re-sampling trick. To obtain a valid intensity for every pixel of your destination image, you transform coordinates in the destination image into coordinates in the source image, and not the opposite as one would intuitively expect. In the case of lens distortion correction, this means that you do not have to invert the non-linear model at all: you just apply it.
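The re-sampling trick itself has nothing to do with lenses. As a small sketch, here it is on a plain 2x upscaling with nearest-neighbor sampling. `warp_backward` and its `dst_to_src` callback are names invented for this example.

```python
import numpy as np

def warp_backward(src, dst_shape, dst_to_src):
    """Fill every destination pixel by looking up where it comes from in `src`."""
    h_dst, w_dst = dst_shape
    v_dst, u_dst = np.mgrid[0:h_dst, 0:w_dst].astype(float)
    # Map destination coordinates to source coordinates (the "backward" direction).
    u_src, v_src = dst_to_src(u_dst, v_dst)
    u_i = np.rint(u_src).astype(int)
    v_i = np.rint(v_src).astype(int)
    # Destination pixels whose source location falls outside the image stay at 0.
    inside = (u_i >= 0) & (u_i < src.shape[1]) & (v_i >= 0) & (v_i < src.shape[0])
    dst = np.zeros(dst_shape, dtype=src.dtype)
    dst[inside] = src[v_i[inside], u_i[inside]]
    return dst

src = np.arange(12, dtype=np.float32).reshape(3, 4)
# Upscale by 2 with a pixel-center convention: destination (u, v) comes from
# source ((u + 0.5) / 2 - 0.5, (v + 0.5) / 2 - 0.5).
dst = warp_backward(src, (6, 8),
                    lambda u, v: ((u + 0.5) / 2.0 - 0.5, (v + 0.5) / 2.0 - 0.5))
```

Every one of the 48 destination pixels receives a value. Going the intuitive way instead (pushing each of the 12 source pixels forward into the destination) could fill at most 12 of them and would leave holes.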

Basically, the algorithm behind function undistort is the following. For each pixel of the destination lens-corrected image do:

- Convert the pixel coordinates (u_dst, v_dst) to normalized coordinates (x', y') using the inverse of the calibration matrix K,
- Apply the lens-distortion model, as displayed above, to obtain the distorted normalized coordinates (x'', y''),
- Convert (x'', y'') to distorted pixel coordinates (u_src, v_src) using the calibration matrix K,
- Use the interpolation method of your choice to find the intensity/depth associated with the pixel coordinates (u_src, v_src) in the source image, and assign this intensity/depth to the current destination pixel.
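The four steps above can be sketched in NumPy as follows. This is an illustration under stated assumptions, not OpenCV's actual implementation: `undistort_image` is a made-up name, the image is single-channel, K has zero skew, and only k1, k2, p1, p2 are used.

```python
import numpy as np

def undistort_image(src, K, dist, nearest=False):
    """Lens-correct a single-channel image by backward mapping (sketch).

    dist = (k1, k2, p1, p2): an assumed reduced distortion model.
    """
    k1, k2, p1, p2 = dist
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    h, w = src.shape[:2]
    v_dst, u_dst = np.mgrid[0:h, 0:w].astype(float)

    # Step 1: destination pixels -> normalized coordinates (inverse of K).
    x = (u_dst - cx) / fx
    y = (v_dst - cy) / fy

    # Step 2: apply (not invert) the distortion model.
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y

    # Step 3: distorted normalized coordinates -> source pixel coordinates (K).
    u_src = fx * xd + cx
    v_src = fy * yd + cy

    # Step 4: sample the source image; pixels mapping outside it are set to 0.
    eps = 1e-6  # tolerance for floating-point round-off at the image border
    inside = ((u_src >= -eps) & (u_src <= w - 1 + eps) &
              (v_src >= -eps) & (v_src <= h - 1 + eps))
    u_src = np.clip(u_src, 0, w - 1)
    v_src = np.clip(v_src, 0, h - 1)
    if nearest:
        out = src[np.rint(v_src).astype(int), np.rint(u_src).astype(int)]
    else:
        # Bilinear interpolation between the four surrounding source pixels.
        u0 = np.floor(u_src).astype(int)
        v0 = np.floor(v_src).astype(int)
        u1 = np.minimum(u0 + 1, w - 1)
        v1 = np.minimum(v0 + 1, h - 1)
        a = u_src - u0
        b = v_src - v0
        out = ((1 - a) * (1 - b) * src[v0, u0] + a * (1 - b) * src[v0, u1] +
               (1 - a) * b * src[v1, u0] + a * b * src[v1, u1])
    return np.where(inside, out, 0).astype(src.dtype)

# Tiny usage example with made-up calibration values.
src = np.arange(25, dtype=float).reshape(5, 5)
K = np.array([[8.0, 0.0, 2.0],
              [0.0, 8.0, 2.0],
              [0.0, 0.0, 1.0]])
corrected = undistort_image(src, K, (-0.3, 0.1, 0.001, 0.002))
```

Note that the distortion model is evaluated exactly once per destination pixel and no solver is involved. Since the mapping depends only on K and the distortion coefficients, it can also be computed once and reused for every frame from the same camera.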

Note that if you are interested in undistorting a depth map, you should use nearest-neighbor interpolation. Otherwise you will almost certainly interpolate between foreground and background depth values at object boundaries, producing depths that belong to neither surface and show up as unwanted artifacts.
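A tiny numeric illustration of the problem, using a made-up row of depth values:

```python
import numpy as np

# One row of a depth map (in metres): a near object at 1.0 next to a background at 5.0.
depth_row = np.array([1.0, 1.0, 5.0, 5.0])
u_src = 1.5  # a source coordinate that falls right on the object boundary

# Linear interpolation blends the two surfaces.
u0 = int(np.floor(u_src))
a = u_src - u0
linear = (1 - a) * depth_row[u0] + a * depth_row[u0 + 1]

# Nearest-neighbor picks one of the two measured values instead.
nearest = depth_row[int(np.rint(u_src))]
```

Here `linear` lands halfway between the object and the background, at a depth where nothing exists in the scene, while `nearest` is always a value that was actually measured.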