They're two phrases that describe the same thing from (very slightly) different viewpoints. Parallel programming is describing the situation from the viewpoint of the hardware -- there are at least two processors (possibly within a single physical package) working on a problem in parallel. Concurrent programming is describing things more from the viewpoint of the software -- two or more actions may happen at exactly the same time (concurrently).

The problem here is that people are trying to use the two phrases to draw a clear distinction when none really exists. The reality is that the dividing line they're trying to draw has been fuzzy and indistinct for decades, and has grown ever more indistinct over time.

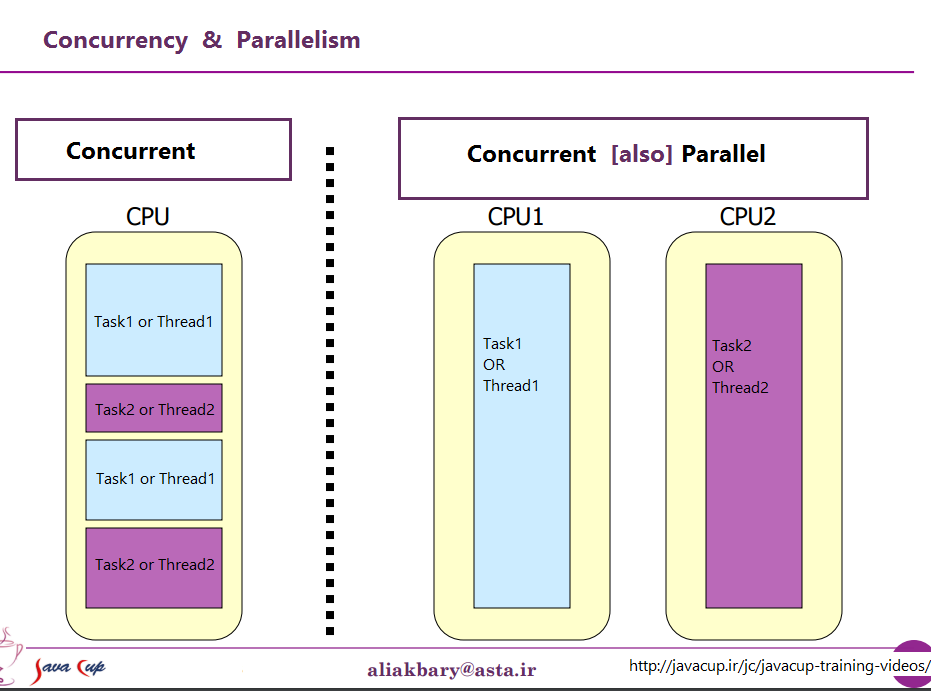

What they're trying to discuss is the fact that once upon a time, most computers had only a single CPU. When you executed multiple processes (or threads) on that single CPU, the CPU was only really executing one instruction from one of those threads at a time. The appearance of concurrency was an illusion--the CPU switching between executing instructions from different threads quickly enough that to human perception (to which anything less than 100 ms or so looks instantaneous) it looked like it was doing many things at once.
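To make the single-CPU picture concrete, here is a minimal sketch of time-slicing. It assumes a toy model in which each "thread" is a Python generator and the "CPU" is a loop that runs one step of one task at a time; the names `task` and `round_robin` are made up for illustration and don't correspond to any real scheduler.

```python
from collections import deque

def task(name, steps):
    """A hypothetical 'thread': yields after each simulated instruction."""
    for i in range(steps):
        yield f"{name}:{i}"

def round_robin(tasks):
    """Run one step of each task in turn, the way a single CPU would."""
    ready = deque(tasks)
    trace = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # execute exactly one step
            ready.append(current)        # not finished: back of the queue
        except StopIteration:
            pass                         # finished: drop it
    return trace

trace = round_robin([task("A", 3), task("B", 2)])
print(trace)  # ['A:0', 'B:0', 'A:1', 'B:1', 'A:2']
```

Only one step ever runs at a time, yet both tasks make progress. Switch fast enough and it looks simultaneous.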

The obvious contrast to this is a computer with multiple CPUs, or a CPU with multiple cores, so the machine is executing instructions from multiple threads and/or processes at exactly the same time; code executing in one can't/doesn't have any effect on code executing in the other.

Now the problem: such a clean distinction has almost never existed. Computer designers are actually fairly intelligent, so they noticed a long time ago that (for example) when you needed to read some data from an I/O device such as a disk, it took a long time (in terms of CPU cycles) to finish. Instead of leaving the CPU idle while that happened, they figured out various ways of letting one process/thread make an I/O request, and let code from some other process/thread execute on the CPU while the I/O request completed.

So, long before multi-core CPUs became the norm, we had operations from multiple threads happening in parallel.
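A rough sketch of that overlap, assuming `time.sleep` as a stand-in for a slow disk read (the `fake_io` helper is hypothetical, not a real I/O API). The main thread keeps computing while the "I/O request" is outstanding, so the elapsed time should land near the I/O delay rather than the sum of the two, though exact timings depend on the machine.

```python
import threading
import time

def fake_io(delay, results, key):
    """Stand-in for a disk read: the thread just waits, using no CPU."""
    time.sleep(delay)
    results[key] = "data"

results = {}
start = time.perf_counter()

# Issue the simulated I/O request, then keep the CPU busy with other work.
io_thread = threading.Thread(target=fake_io, args=(0.2, results, "disk"))
io_thread.start()
total = sum(i * i for i in range(100_000))  # unrelated computation
io_thread.join()                            # wait for the "read" to finish

elapsed = time.perf_counter() - start
print(f"elapsed: {elapsed:.2f}s, I/O result ready: {'disk' in results}")
```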

That's only the tip of the iceberg though. Decades ago, computers started providing another level of parallelism as well. Again, being fairly intelligent people, computer designers noticed that in a lot of cases, they had instructions that didn't affect each other, so it was possible to execute more than one instruction from the same stream at the same time. One early example that became pretty well known was the Control Data 6600. This was (by a fairly wide margin) the fastest computer on earth when it was introduced in 1964--and much of the same basic architecture remains in use today. It tracked the resources used by each instruction, and had a set of execution units that executed instructions as soon as the resources on which they depended became available, very similar to the design of most recent Intel/AMD processors.
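The idea of starting instructions as soon as their inputs are available can be sketched in a few lines. This is a toy model under loud assumptions, not the CDC 6600's actual scoreboard: it pretends there are unlimited execution units and that every register is written only once, so the only thing that delays an instruction is waiting for a value it reads. The `schedule` function and the instruction format are invented for the example.

```python
def schedule(instrs):
    """Return the cycle in which each instruction can start.

    instrs: list of (dest, sources, latency) in program order.
    Toy model: unlimited execution units, each register written once,
    so only true (read-after-write) dependencies matter.
    """
    ready_at = {}  # register -> cycle at which its value becomes available
    starts = []
    for dest, sources, latency in instrs:
        # Inputs never written by the program are ready at cycle 0.
        begin = max((ready_at.get(s, 0) for s in sources), default=0)
        starts.append(begin)
        ready_at[dest] = begin + latency
    return starts

program = [
    ("r1", ["a", "b"], 3),    # r1 = a * b
    ("r2", ["c", "d"], 3),    # r2 = c * d   (independent of r1)
    ("r3", ["r1", "r2"], 1),  # r3 = r1 + r2 (needs both results)
    ("r4", ["e", "f"], 1),    # r4 = e + f   (independent of everything)
]
starts = schedule(program)
print(starts)  # [0, 0, 3, 0]
```

Three of the four instructions start in cycle 0 even though they come from a single instruction stream; only the one that really depends on earlier results has to wait.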

But (as the commercials used to say) wait--that's not all. There's yet another design element to add still further confusion. It's been given a couple of different names (e.g., "Hyper-Threading", "SMT"), but they refer to the same basic idea: a CPU that can execute multiple threads simultaneously, using a combination of some resources that are independent for each thread, and some resources that are shared between the threads. In a typical case this is combined with the instruction-level parallelism outlined above. To do that, we have two (or more) sets of architectural registers. Then we have a set of execution units that can execute instructions as soon as the necessary resources become available. These often combine well because the instructions from the separate streams virtually never depend on the same resources.

Then, of course, we get to modern systems with multiple cores. Here things are obvious, right? We have N (somewhere between 2 and 256 or so, at the moment) separate cores that can all execute instructions at the same time, so we have a clear-cut case of real parallelism--executing instructions in one process/thread doesn't affect executing instructions in another.

Well, sort of. Even here we have some independent resources (registers, execution units, at least one level of cache) and some shared resources (typically at least the last level of cache, the one farthest from the cores, and definitely the memory controllers and bandwidth to memory).

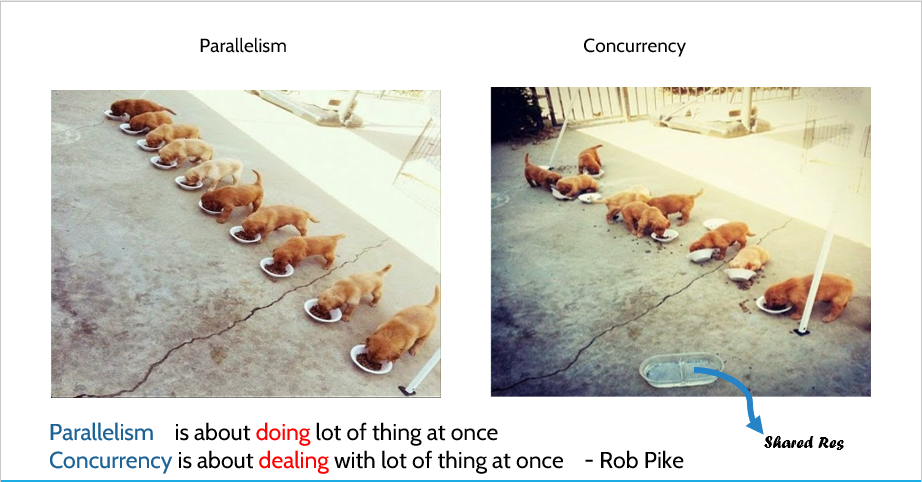

To summarize: the simple scenarios people like to contrast between shared resources and independent resources virtually never happen in real life. With all resources shared, we end up with something like MS-DOS, where we can only run one program at a time, and we have to stop running one before we can run the other at all. With completely independent resources, we have N computers running MS-DOS (without even a network to connect them) with no ability to share anything between them at all (because if we can even share a file, well, that's a shared resource, a violation of the basic premise of nothing being shared).

Every interesting case involves some combination of independent resources and shared resources. Every reasonably modern computer (and a lot that aren't at all modern) has at least some ability to carry out at least a few independent operations simultaneously, and just about anything more sophisticated than MS-DOS has taken advantage of that to at least some degree.

The nice, clean division between "concurrent" and "parallel" that people like to draw just doesn't exist, and almost never has. What people like to classify as "concurrent" usually still involves at least one and often more different types of parallel execution. What they like to classify as "parallel" often involves sharing resources and (for example) one process blocking another's execution while using a resource that's shared between the two.
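Here is a small sketch of that last point, one thread holding up another over a shared resource. It assumes a `threading.Lock` as a stand-in for whatever is actually shared (a file, a cache line, a memory bus); the `holder` function and the event names are made up for the example.

```python
import threading

shared_resource = threading.Lock()  # stands in for any shared resource
holding = threading.Event()
release = threading.Event()

def holder():
    with shared_resource:
        holding.set()   # tell the main thread we now own the resource
        release.wait()  # keep it until told to let go

t = threading.Thread(target=holder)
t.start()
holding.wait()

# While the other thread owns the resource, this attempt fails immediately.
blocked = not shared_resource.acquire(blocking=False)

release.set()
t.join()

# Once it has been released, the same attempt succeeds.
acquired_later = shared_resource.acquire(blocking=False)
shared_resource.release()

print(blocked, acquired_later)  # True True
```

It doesn't matter how many cores are available: as long as both threads need the same resource, one of them waits.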

People trying to draw a clean distinction between "parallel" and "concurrent" are living in a fantasy of computers that never actually existed.
