I'm trying to run a very simple task with MapReduce.

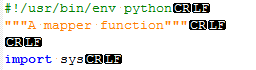

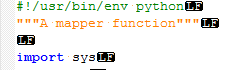

mapper.py:

    #!/usr/bin/env python

    import sys

    for line in sys.stdin:
        print line

My txt file:

    qwerty
    asdfgh
    zxc

Command line to run the job:

    hadoop jar /usr/lib/hadoop-0.20-mapreduce/contrib/streaming/hadoop-streaming-2.6.0-mr1-cdh5.8.0.jar \
        -input /user/cloudera/In/test.txt \
        -output /user/cloudera/test \
        -mapper /home/cloudera/Documents/map.py \
        -file /home/cloudera/Documents/map.py

Error:

    INFO mapreduce.Job: Task Id : attempt_1490617885665_0008_m_000001_0, Status : FAILED
    Error: java.lang.RuntimeException: PipeMapRed.waitOutputThreads(): subprocess failed with code 127
        at org.apache.hadoop.streaming.PipeMapRed.waitOutputThreads(PipeMapRed.java:325)
        at org.apache.hadoop.streaming.PipeMapRed.mapRedFinished(PipeMapRed.java:538)
        at org.apache.hadoop.streaming.PipeMapper.close(PipeMapper.java:130)
        at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:61)
        at org.apache.hadoop.streaming.PipeMapRunner.run(PipeMapRunner.java:34)
        at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)
        at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)
        at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693)
        at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

How can I fix this and run the code?

Running the script locally, outside Hadoop, works fine:

    cat /home/cloudera/Documents/test.txt | python /home/cloudera/Documents/map.py

UPDATE

Something is wrong with my *.py file. I copied a file from the GitHub repository for Tom White's Hadoop book, and with that file everything works fine.

But I can't understand what the reason is. As far as I can tell, it is neither the permissions nor the charset. What else could it be?
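One thing that may be worth ruling out (an assumption, not a confirmed cause): exit code 127 conventionally means "command not found". If the script was saved with Windows line endings, the shebang line ends in a carriage return, so env looks for a program literally named "python\r" and fails, even though calling the interpreter explicitly with python map.py still works. A byte-order mark in front of #! is a related problem that can stop the shebang from being recognized. Below is a minimal sketch of such a check; `diagnose_script` is a hypothetical helper name, not part of Hadoop or any library.

```python
def diagnose_script(raw):
    """Return a list of likely shebang problems found in a script's raw bytes.

    Hypothetical helper for illustration: it only inspects the first line.
    """
    problems = []

    # A UTF-8 byte-order mark before "#!" hides the shebang from the kernel.
    if raw.startswith(b"\xef\xbb\xbf"):
        problems.append("UTF-8 BOM before the shebang")
        raw = raw[3:]

    first_line = raw.split(b"\n", 1)[0]

    # Without a shebang the file cannot be executed directly as a command.
    if not first_line.startswith(b"#!"):
        problems.append("no shebang on the first line")

    # A trailing carriage return becomes part of the interpreter name.
    if first_line.endswith(b"\r"):
        problems.append("CRLF line ending on the shebang line")

    return problems
```

To try it on the failing file, read the file in binary mode, for example `diagnose_script(open("/home/cloudera/Documents/map.py", "rb").read())`. An empty list means none of these three issues is present, and the cause has to be looked for elsewhere.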