The reason is that the C++ translation model copes easily with multiple declarations, even conflicting ones. It only requires the compiler part of the toolset to detect errors like this one in the source code:

    int X();

    void X(); // error: differs from the declaration above only in return type

A compiler can easily do that.

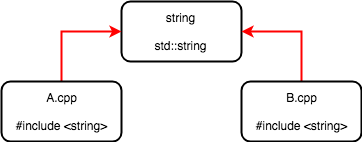

And when no translation unit contains such an error, there's no problem: every X() call in every translation unit is identical, and all that remains is for the linker to connect every call to the one correct destination. The declarations have done their job and no longer play a role.
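As a minimal sketch of that point, the snippet below repeats a consistent declaration several times and supplies a single definition. The helper `call_x` and the return value 42 are made up for illustration only; the conflicting declaration is left commented out so the snippet still compiles.

```cpp
// Repeated, consistent declarations are harmless: the compiler checks
// each one against the others it has already seen.
int X();      // declaration
int X();      // redeclaration with the same type: fine
// void X();  // would be rejected: differs only in return type

// The single definition every call must end up at.
int X() { return 42; }

// Hypothetical caller, standing in for "every X() call".
int call_x() { return X(); }
```

Once this compiles, the declarations are no longer needed; connecting `call_x` to `X` is purely the linker's business.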

Now with multiple definitions, it's not that easy. A definition concerns multiple translation units at once, so checking it goes beyond the scope of the compilation phase, which only ever sees one translation unit at a time.

We've already seen that in the example above. The X() calls are in place, but now we need the guarantee that they all end up at the same destination, the same definition of X().

That there can be only one such definition should be clear, but how do you enforce it? Put in simple terms, by the time the object code is linked together, the source code has already been dealt with, so the linker has no source left to compare.

The answer is that C++ basically chooses to put the burden on the programmer. Forcing compiler and linker implementors to compare all multiple definitions and detect differences would be beyond the capabilities of C++ toolsets in most real-life situations, or would completely break the way those tools work. So the pragmatic solution is twofold: where multiple definitions make no sense (ordinary functions and variables), they are simply forbidden; where they are needed (inline functions, classes, templates), the programmer must make sure they are all identical, or else get undefined behaviour.
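A rough sketch of the second case follows. The names `scale`, `use_scale`, `util.h`, `a.cpp` and `b.cpp` are hypothetical and chosen only for illustration. The compilable part shows an inline function as it would sit in a shared header; the comment shows the kind of mismatch a toolset is not required to diagnose.

```cpp
// util.h (hypothetical header, included by several .cpp files)
// 'inline' permits one definition per translation unit, provided all
// of those definitions are identical.
inline int scale(int v) { return v * 2; }

// What the programmer has to avoid, because nobody else must catch it:
//   a.cpp:  inline int scale(int v) { return v * 2; }
//   b.cpp:  inline int scale(int v) { return v * 3; }
// Each file compiles fine on its own. The link typically succeeds too,
// and the resulting program has undefined behaviour.

// Hypothetical caller in one of the translation units.
int use_scale() { return scale(21); }
```

Keeping such definitions in one header that every translation unit includes is the usual way to guarantee they stay identical.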