There are multiple problems (with your question):

ps (and top etc) show multiple memory readings. The only one of interest is typically called RES or RSS. You don't tell which one it was.

Basically, looking at the reading typically named VIRT is not interesting.

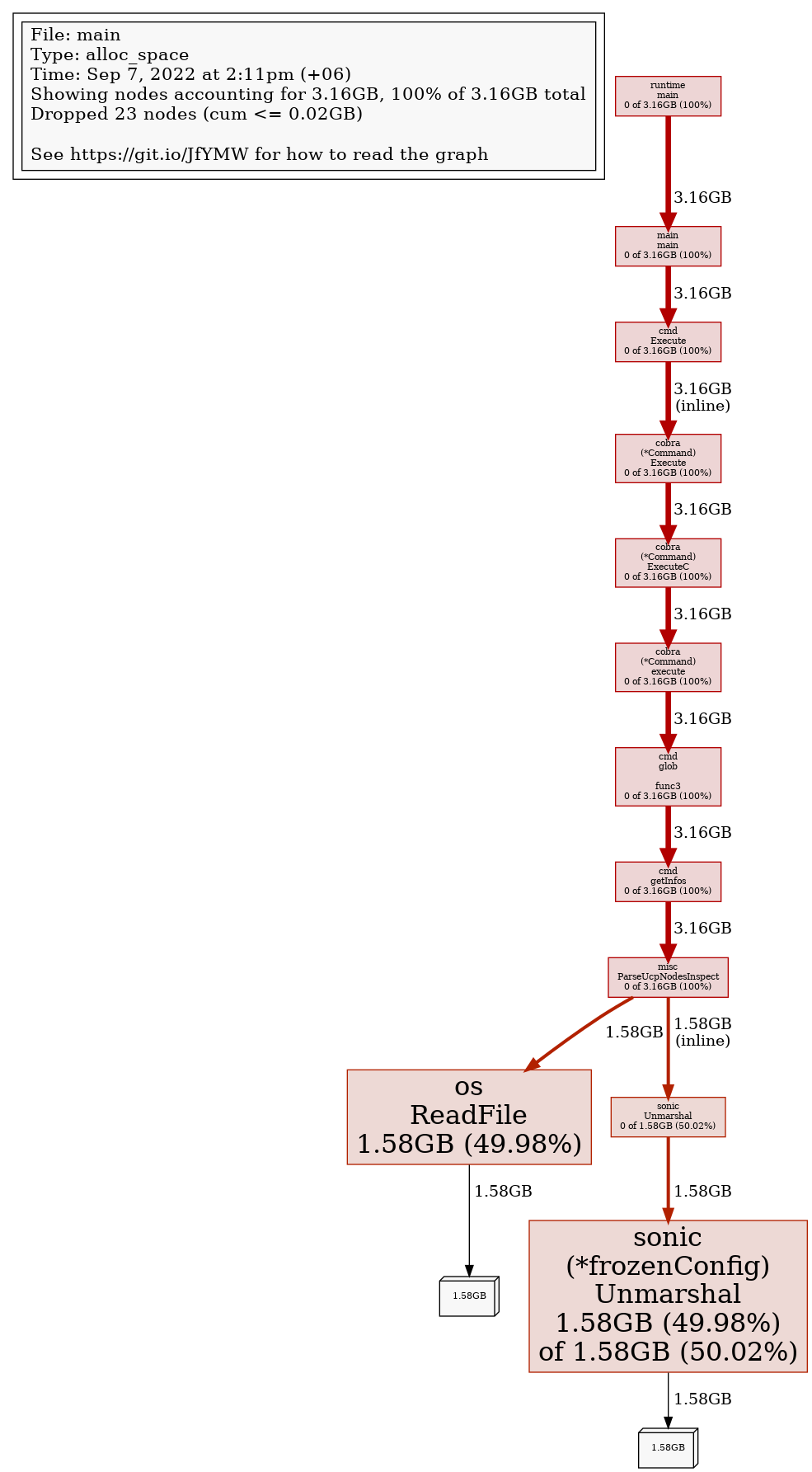

As Volker said, pprof does not measure memory consumption, it measures (in the mode you have run it) memory allocation rate—in the sense of "how much", not "how frequently".

To understand what it means, consider how pprof works.

During profiling, a timer ticks, and on each tick, the profiler sort of snaphots your running program, scans the stacks of all live goroutines and attributes live objects on the heap to the variables contained in the stack frames of those stacks, and each stack frame belongs to an active function.

This means that, if your process will call, say, os.ReadFile—which, by its contract, allocates a slice of bytes long enough to contain the whole contents of the file to be read,—100 times to read 1 GiB file each time, and the profiler's timer will manage to pinpoint each of these 100 calls (it can miss some of the calls as it's sampling), os.ReadFile will be attributed to having had allocated 100 GiB.

But if your program is not written in such a way that it holds each of the slices returned by these calls, but rather does something with those slices and throws them away after processing, the slices from the past calls will likely be already collected by the GC by the time the newer ones are allocated.

While not required by the spec, the two "standard" contemporary implementations of Go—the one originally dubbed "gc", which most people think is the implementation, and the GCC frontend—feature garbage collector which runs in parallel with the flow of your own process; the moments it actually collects the garbage produced by your process are governed by a set of complicated heuristics (start here if interested) which try to balance between spending CPU time for GC and spending RAM for not doing it ;-) , and it means for short-lived processes, the GC might not kick in even a single time, meaning your process will end with all the generated garbage still floating, and all that memory will be reclaimed by the OS in the usual way when the process ends.

When the GC collects garbage, the freed memory is not returned to the OS immediately. Instead, two-staged process is involved:

First, the freed regions are returned to the memory manager which is a part of the Go rutime powering your running program.

This is a sensible thing because in a typical program memory churn is usually high enough and freed memory will likely be quickly allocated back again.

Second, memory pages staying free long enough are marked to let the OS know it can use it for its own needs.

Basically it means that even after the GC frees some memory, you won't see this outside the running Go process as this memory is first retuned to the process' own pool.

Different versions of Go (again, I mean the "gc" implementation) implemented different policies about returning the freed pages to the OS: first they were marked by madvise(2) as MADV_FREE, then as MADV_DONTNEED and then again as MADV_FREE.

If you happen to use a version of Go whose runtime marks freed memory as MADV_DONTNEED, the readings of RSS will be even less sensible because the memory marked that way still counts against the process' RSS even though the OS was hinted it can reclaim that memory when needed.

To recap.

This topic is complex enough and you appear to be drawing certain conclusions too fast ;-)

An update.

I've decided to expand on memory management a bit because I feel like certain bits and pieces may be missing from the big picture of this stuff in your head, and because of this you might find the comments to your question to be moot and dismissive.

The reasoning for the advice to not measure memory consumption of programs written in Go using ps, top and friends is rooted in the fact the memory management implemented in the runtime environments powering programs written in contemporary high-level programming languages is quite far removed from the down-to-the-metal memory management implemented in the OS kernels and the hardware they run on.

Let's consider Linux to have concrete tangible examples.

You certainly can ask the kernel directly to allocate a memory for you: mmap(2) is a syscall which does that.

If you call it with MAP_PRIVATE (and usually also with MAP_ANONYMOUS), the kernel will make sure the page table of your process has one or more new entries for as many pages of memory to contain the contiguous region of as many bytes as you have requested, and return the address of the first page in the sequence.

At this time you might think that the RSS of your process had grown by that number of bytes, but it had not: the memory was "reserved" but not actually allocated; for a memory page to really get allocated, the process has to "touch" any byte within the page—by reading it or writing it: this will generate the so-called "page fault" on the CPU, and the in-kernel handler will ask the hardware to actually allocate a real "hardware" memory page. Only after that the page will actually count against the process' RSS.

OK, that's fun, but you probably can see a problem: it's not too convenient to operate with complete pages (wich can be of different size on different systems; typically it's 4 KiB on systems of the x86 lineage): when you program in a high-level language, you don't think on such a low level about the memory; instead, you expect the running program to somehow materialize "objects" (I do not mean OOP here; just pieces of memory containing values of some language- or user-defined types) as you need them.

These objects may be of any size, most of the time way smaller than a single memory page, and—what is more important,—most of the time you do not even think about how much space these objects are consuming when allocated.

Even when programming in a language like C, which these days is considered to be quite low-level, you're usually accustomed to using memory management functions in the malloc(3) family provided by the standard C library, which allow you to allocate regions of memory of arbitrary size.

A way to solve this sort of problem is to have a higher-level memory manager on top on what the kernel can do for your program, and the fact is, every single general-purpose program written in a high-level language (even C and C++!) is using one: for interpreted languages (such as Perl, Tcl, Python, POSIX shell etc) it is provided by the interpreter; for byte-compiled languages such as Java, it is provided by the process which executes that code (such as JRE for Java); for languages which compile down to machine (CPU) code—such as the "stock" implementation of Go—it is provided by the "runtime" code included into the resulting executable image file or linked into the program dynamically when it's being loaded into the memory for execution.

Such memory managers are usually quite complicated as they have to deal with many complex problems such as memory fragmentation, and they usually have to avoid talking to the kernel as much as possible because syscalls are slow.

The latter requirement naturally means process-level memory managers try to cache the memory they have once taken from the kernel, and are reluctant to release it back.

All this means that, say, in a typical active Go program you might have crazy memory churn — hordes of small objects being allocated and deallocated all the time which has next to no effect on the values of RSS monitored "from the outside" of the process: all this churn is handled by the in-process memory manager and—as in the case of the stock Go implementation—the GC which is naturally tightly integrated with the MM.

Because of that, to have useful actionable idea about what is happening in a long-running production-grade Go program, such program usually provides a set of continuously updated metrics (delivering, collecting and monitoring them is called telemetry). For Go programs, a part of the program tasked with producing these metrics can either make periodic calls to runtime.ReadMemStats and runtime/debug.ReadGCStats or directly use what the runtime/metrics has to offer. Looking at such metrics in a monitoring system such as Zabbix, Graphana etc is quite instructive: you can literally see how the amount of free memory available to the in-process MM increases after each GC cycle while the RSS stays roughly the same.

Also note that you might consider running your Go program with various GC-related debugging settings in a special environment variable GODEBUG described here: basically, you make the Go runtime powering your running program emit detailed information on how the GC is working (also see this).

Hope this will make your curious to make further exploration of these matters ;-)

You might find this to be a good introduction on memory management implemented by the Go runtime—in connection with the kernel and the hardware; recommended read.