The main problem is that graphql-java-tools can have trouble doing the field mapping for resolvers that contain fields whose types are not basic ones such as List, String, Integer, or Boolean.

We solved this by creating our own custom scalar that is basically like ApolloScalars.Upload. Instead of returning an object of type Part, it returns our own type FileUpload, which holds the contentType as a String and the file content as a byte[]. With that, the field mapping works and we can read the byte[] within the resolver.

First, set up the new type to be used in the resolver:

    public class FileUpload {

        private String contentType;
        private byte[] content;

        public FileUpload(String contentType, byte[] content) {
            this.contentType = contentType;
            this.content = content;
        }

        public String getContentType() {
            return contentType;
        }

        public byte[] getContent() {
            return content;
        }
    }

Then we make a custom scalar that looks pretty much like ApolloScalars.Upload, but returns our own resolver type FileUpload:

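    import graphql.schema.Coercing;
    import graphql.schema.CoercingParseLiteralException;
    import graphql.schema.CoercingParseValueException;
    import graphql.schema.CoercingSerializeException;
    import graphql.schema.GraphQLScalarType;

    import java.io.IOException;
    import java.io.InputStream;
    import javax.servlet.http.Part;

    public class MyScalars {

        public static final GraphQLScalarType FileUpload = new GraphQLScalarType(
            "FileUpload",
            "A file part in a multipart request",
            new Coercing<FileUpload, Void>() {

                @Override
                public Void serialize(Object dataFetcherResult) {
                    throw new CoercingSerializeException("FileUpload is an input-only type");
                }

                @Override
                public FileUpload parseValue(Object input) {
                    if (input instanceof Part) {
                        Part part = (Part) input;
                        try {
                            String contentType = part.getContentType();
                            byte[] content;
                            // Actually read the bytes: sizing an array with available()
                            // alone leaves it empty. readAllBytes() needs Java 9+.
                            try (InputStream in = part.getInputStream()) {
                                content = in.readAllBytes();
                            }
                            // Remove the container's temporary copy once it is in memory.
                            part.delete();
                            return new FileUpload(contentType, content);
                        } catch (IOException e) {
                            throw new CoercingParseValueException("Couldn't read content of the uploaded file");
                        }
                    } else if (null == input) {
                        return null;
                    } else {
                        throw new CoercingParseValueException(
                            "Expected type " + Part.class.getName() + " but was " + input.getClass().getName());
                    }
                }

                @Override
                public FileUpload parseLiteral(Object input) {
                    // Uploads cannot be written inline in the query text.
                    throw new CoercingParseLiteralException(
                        "Must use variables to specify FileUpload values");
                }
            });
    }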
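
One thing to watch when filling the byte[] in parseValue: the bytes have to be copied out of the Part's input stream. `InputStream.available()` is only an estimate of what can be read without blocking, and allocating an array of that size does not copy any data. `InputStream.readAllBytes()` (Java 9+) does the copy in one call; for older runtimes, here is a minimal sketch of a helper that does the same thing. The class and method names (`StreamBytes`, `readFully`) are made up for illustration and are not part of graphql-java or the Servlet API.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;

// Hypothetical helper, not part of graphql-java or the Servlet API.
final class StreamBytes {

    private StreamBytes() {
    }

    // Copies everything from the stream into a byte[] using a fixed-size buffer.
    static byte[] readFully(InputStream in) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buffer = new byte[8192];
        try {
            int read;
            // read() returns -1 at end of stream.
            while ((read = in.read(buffer)) != -1) {
                out.write(buffer, 0, read);
            }
        } catch (IOException e) {
            throw new UncheckedIOException("Could not read stream", e);
        }
        return out.toByteArray();
    }
}
```

Inside parseValue this would be used as `byte[] content = StreamBytes.readFully(part.getInputStream());`, ideally with the stream closed afterwards.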

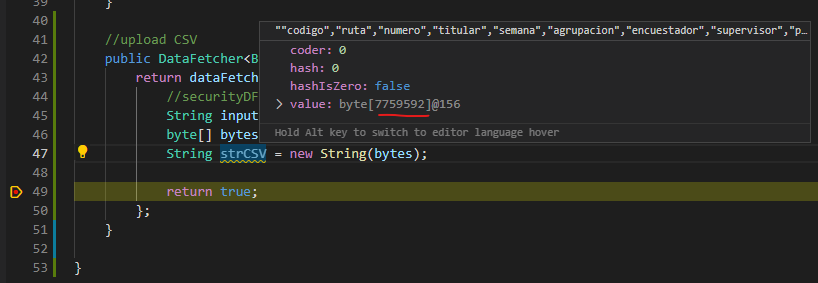

In the resolver, you can now get the file from the resolver arguments:

    public class FileUploadResolver implements GraphQLMutationResolver {

        public Boolean uploadFile(FileUpload fileUpload) {
            String fileContentType = fileUpload.getContentType();
            byte[] fileContent = fileUpload.getContent();

            // Do something in order to persist the file :)

            return true;
        }
    }
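
As for the "persist the file" step, that depends entirely on your application. Just to illustrate, here is a rough sketch that writes the bytes to a temporary file. The `UploadStore` class, its method name, and the choice of a temp file and suffix are all assumptions for the example, not something graphql-java-tools provides.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical storage helper; the name and the temp-file strategy are assumptions.
final class UploadStore {

    private UploadStore() {
    }

    // Writes the uploaded bytes to a new temp file and returns its path.
    static Path saveToTempFile(String contentType, byte[] content) {
        // Pick a suffix from the content type; anything unknown is stored as .bin.
        String suffix = "application/pdf".equals(contentType) ? ".pdf" : ".bin";
        try {
            Path target = Files.createTempFile("upload-", suffix);
            return Files.write(target, content);
        } catch (IOException e) {
            throw new UncheckedIOException("Could not store uploaded file", e);
        }
    }
}
```

In the resolver you would then call something like `UploadStore.saveToTempFile(fileUpload.getContentType(), fileUpload.getContent())` in place of the comment.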

In the schema, you declare it like this:

    scalar FileUpload

    type Mutation {
        uploadFile(fileUpload: FileUpload): Boolean
    }

Let me know if it doesn't work for you :)