(Note: I have also asked this question here)

Problem

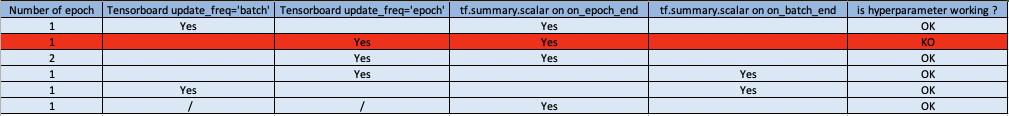

I have been trying to get Google Cloud's AI Platform to display the accuracy of a Keras model trained on AI Platform. I configured hyperparameter tuning with `hptuning_config.yaml`, and it works. However, I can't get AI Platform to pick up `tf.summary.scalar` calls made during training.

Documentation

I have been following these documentation pages:

1. Overview of hyperparameter tuning

2. Using hyperparameter tuning

According to [1]:

> How AI Platform Training gets your metric
>
> You may notice that there are no instructions in this documentation for passing your hyperparameter metric to the AI Platform Training training service. That's because the service monitors TensorFlow summary events generated by your training application and retrieves the metric.

According to [2], one way to generate such a TensorFlow summary event is to create a callback class like this:

```python
class MyMetricCallback(tf.keras.callbacks.Callback):

    def on_epoch_end(self, epoch, logs=None):
        tf.summary.scalar('metric1', logs['RootMeanSquaredError'], epoch)
```

My code

So in my code I included:

```yaml
# hptuning_config.yaml
trainingInput:
  hyperparameters:
    goal: MAXIMIZE
    maxTrials: 4
    maxParallelTrials: 2
    hyperparameterMetricTag: val_accuracy
    params:
    - parameterName: learning_rate
      type: DOUBLE
      minValue: 0.001
      maxValue: 0.01
      scaleType: UNIT_LOG_SCALE
```
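For the `learning_rate` parameter above to reach the model, the trainer has to read it: AI Platform passes each trial's value as a command-line argument named after `parameterName`. A minimal sketch of that side, assuming the trainer parses its flags with `argparse` (`parse_args` is a hypothetical helper name, and the 0.001 default is an arbitrary choice):

```python
import argparse


def parse_args(argv=None):
    """Hypothetical helper: read the tuned hyperparameter from the command line."""
    parser = argparse.ArgumentParser()
    # Each trial's value arrives as --learning_rate=<value>,
    # matching parameterName in hptuning_config.yaml.
    parser.add_argument("--learning_rate", type=float, default=0.001)
    # parse_known_args ignores flags this sketch doesn't declare (e.g. --job-dir).
    args, _unknown = parser.parse_known_args(argv)
    return args
```

Using `parse_known_args` rather than `parse_args` keeps the trainer from exiting on flags the service adds that the script doesn't declare.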

```python
# model.py
class MetricCallback(tf.keras.callbacks.Callback):

    def on_epoch_end(self, epoch, logs):
        tf.summary.scalar('val_accuracy', logs['val_accuracy'], epoch)
```

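I even tried:

```python
# model.py
class MetricCallback(tf.keras.callbacks.Callback):

    def __init__(self, logdir):
        super().__init__()
        # Create a summary file writer for the given log directory
        self.writer = tf.summary.create_file_writer(logdir)

    def on_epoch_end(self, epoch, logs=None):
        # Write the metric through this callback's own writer
        with self.writer.as_default():
            tf.summary.scalar('val_accuracy', logs['val_accuracy'], step=epoch)
```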

This successfully saved the `val_accuracy` metric to Google Cloud Storage (I can also see it in TensorBoard). But AI Platform does not pick it up, despite the claim made in [1].

Partial solution:

Using the `cloudml-hypertune` package, I created the following class:

```python
# model.py
import hypertune
import tensorflow as tf


class MetricCallback(tf.keras.callbacks.Callback):

    def __init__(self):
        super().__init__()
        self.hpt = hypertune.HyperTune()

    def on_epoch_end(self, epoch, logs=None):
        # Report the metric under the tag set in hptuning_config.yaml
        self.hpt.report_hyperparameter_tuning_metric(
            hyperparameter_metric_tag='val_accuracy',
            metric_value=logs['val_accuracy'],
            global_step=epoch,
        )
```

which works! But I don't see how, since all it seems to do is write a file to `/tmp/hypertune/*` on the AI Platform worker. Nothing in the Google Cloud documentation explains how AI Platform picks this up.
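The writing side of that behavior can be sketched with the standard library alone. This is a hypothetical stand-in (`SketchHyperTune`), not the `cloudml-hypertune` source: the default path `/tmp/hypertune/output.metrics` and the record fields (`timestamp`, the metric tag, `global_step`) are assumptions for illustration, so check the package itself before relying on them.

```python
import json
import os
import time


class SketchHyperTune:
    """Hypothetical stand-in for hypertune.HyperTune, for illustration only."""

    def __init__(self, metric_path="/tmp/hypertune/output.metrics"):
        # Assumed default location; the real library's path may differ.
        self.metric_path = metric_path
        os.makedirs(os.path.dirname(self.metric_path), exist_ok=True)
        self.records = []

    def report_hyperparameter_tuning_metric(
        self, hyperparameter_metric_tag, metric_value, global_step=None
    ):
        # One record per call, keyed by the tag from hptuning_config.yaml.
        self.records.append({
            "timestamp": time.time(),
            hyperparameter_metric_tag: str(float(metric_value)),
            "global_step": str(int(global_step) if global_step else 0),
        })
        # Rewrite the file with every record so far, one JSON object per line.
        with open(self.metric_path, "w") as f:
            for record in self.records:
                f.write(json.dumps(record, sort_keys=True) + "\n")
```

Rewriting the whole file on each call keeps it a complete record of the trial's metrics at any moment, so whatever reads it on the worker never sees a half-written history.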

Am I missing something needed to get `tf.summary.scalar` events displayed?

… `cloudml-hypertune` case, the file is read by the service to report hyperparameter tuning metrics for your job. This is the recommended way to report hyperparameter tuning metrics if the summary events aren't getting picked up. For the `tf.summary.scalar` case, which runtime version are you using? This call is only monitored for runtime version 2.1 or above. – Perrotta

… `cloudml-hypertune` case, do you mean that AI Platform is pre-configured to read from the `/tmp/hypertune` folder in the replicas? – Exeunt
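The runtime-version condition in the first comment can be checked with a small helper. This is a sketch that assumes runtime versions are plain `major.minor` strings such as `1.15` or `2.1`; `summary_metrics_monitored` is a hypothetical name.

```python
def summary_metrics_monitored(runtime_version: str) -> bool:
    """Hypothetical helper: True if tf.summary.scalar metrics are monitored.

    Per the comment above, this requires runtime version 2.1 or above.
    """
    # Compare integer tuples, not strings, so multi-digit parts order correctly.
    major, minor = (int(part) for part in runtime_version.split(".")[:2])
    return (major, minor) >= (2, 1)
```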