The user uploads a huge file consisting of 1 million words. I parse the file and put each line of the file into a `LinkedHashMap<Integer, String>`.

I need O(1) access and removal by key. Also, I need to preserve the access order, iterate from any position and sort.
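For reference, this is a minimal sketch of how `LinkedHashMap` covers the first two requirements. The three-argument constructor with `accessOrder = true` keeps entries ordered from least to most recently accessed, and `get`/`remove` by key are O(1) on average. The standard API has no way to start iteration at an arbitrary key or to sort the map in place, so those two needs would require walking the iterator or copying the entries out. `AccessOrderDemo` and `demo()` are illustrative names only.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class AccessOrderDemo {
    // Builds a small access-ordered map and returns its keys in iteration order.
    static String demo() {
        // accessOrder = true: iteration runs from least- to most-recently accessed
        Map<Integer, String> lines = new LinkedHashMap<>(16, 0.75f, true);
        lines.put(1, "first line");
        lines.put(2, "second line");
        lines.put(3, "third line");

        lines.get(1);    // O(1) lookup; also moves key 1 to the end of the order
        lines.remove(2); // O(1) removal by key
        return lines.keySet().toString();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [3, 1]
    }
}
```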

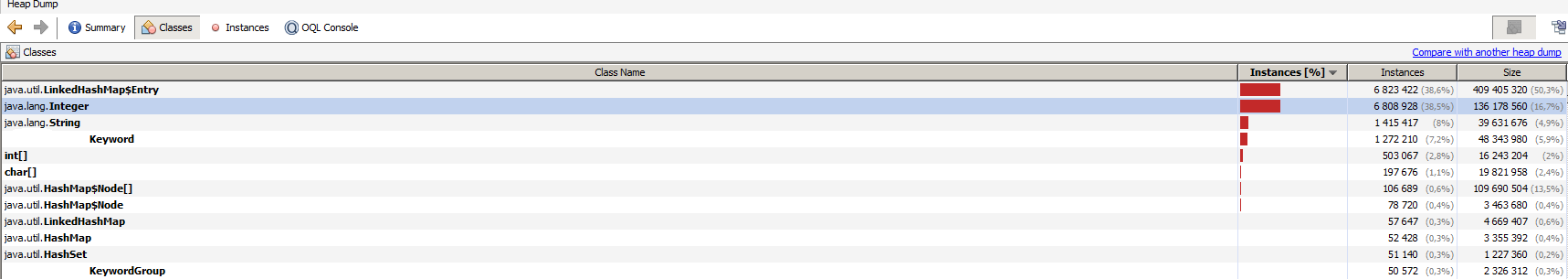

The memory consumption is huge. I enabled the String deduplication feature introduced in Java 8, but it turns out that the `LinkedHashMap` consumes most of the memory.

I found that `LinkedHashMap.Entry` consumes 40 bytes, but it has only 2 pointers: one for the next entry and one for the previous entry. I thought a pointer should be 64 or 32 bits (8 or 4 bytes). But if I divide 409,405,320 bytes by 6,823,422 entries, I get 60 bytes per entry.
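A rough sketch of where 40 bytes can come from, assuming a 64-bit HotSpot JVM with compressed oops (12-byte object header, 4-byte references, 8-byte alignment). These layout numbers are JVM implementation details, not something the language guarantees. In Java 8, `LinkedHashMap.Entry` extends `HashMap.Node`, so besides its own `before` and `after` links each entry also carries the inherited `hash`, `key`, `value` and `next` fields. `EntryLayoutEstimate` is a hypothetical helper that only does the arithmetic; it does not measure a live heap.

```java
public class EntryLayoutEstimate {
    // Rounds a raw size up to the assumed object alignment.
    static long align(long size, int alignment) {
        return (size + alignment - 1) / alignment * alignment;
    }

    // Estimated shallow size of LinkedHashMap.Entry:
    // HashMap.Node fields (int hash, key, value, next) + LinkedHashMap.Entry fields (before, after).
    static long entrySize(int headerBytes, int refBytes) {
        long raw = headerBytes
                + 4              // int hash
                + 3L * refBytes  // key, value, next (inherited from HashMap.Node)
                + 2L * refBytes; // before, after (added by LinkedHashMap.Entry)
        return align(raw, 8);    // assumption: 8-byte object alignment
    }

    // Estimated shallow size of a java.lang.Integer: header + one int field.
    static long integerSize(int headerBytes) {
        return align(headerBytes + 4, 8);
    }

    public static void main(String[] args) {
        // Assumption: compressed oops (12-byte header, 4-byte references)
        System.out.println("Entry (compressed oops):    " + entrySize(12, 4)); // 40
        System.out.println("Integer (compressed oops):  " + integerSize(12));  // 16
        // Assumption: no compressed oops (16-byte header, 8-byte references)
        System.out.println("Entry (no compressed oops): " + entrySize(16, 8)); // 64
    }
}
```

Under these assumptions the entry object alone is 40 bytes, and each boxed `Integer` key adds another 16 (values from -128 to 127 are shared through `Integer`'s cache). The map's bucket array adds one reference per slot on top of that.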

I think I don't need the previous pointer; the next pointer should be enough to keep the order. Why does `LinkedHashMap` consume so much memory? How can I reduce its memory consumption?

`Integer` wrapper is using that much extra memory? Maybe an `int`-based implementation could help. – Effulgence

`Integer`s are taking up 16% of the heap. `LinkedHashMap.Entry`s are taking up more than 3x more. I believe the OP wants to know why this should be the case. – Zip

`HashMap.Node` which has 4 more fields, and there are additional object headers whose size is just implementation detail. – Fagen

`java.util.LinkedHashMap$Entry 240,034,920 (32.3%) 6,000,873 (24.5%)`. Maybe you have some memory leaks: https://hoangx281283.wordpress.com/2012/11/18/wrong-use-of-linkedhashmap-causes-memory-leak/ – Kingmaker
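Following the `int`-based suggestion, here is a minimal sketch that assumes the keys are dense line numbers `0..n-1` and that `n` is known once the file is parsed. `LineStore` and its methods are hypothetical names, not a library API. It replaces the per-line `Entry` and `Integer` objects with three parallel arrays, which on a 64-bit JVM with compressed oops is roughly 12 bytes of bookkeeping per line (one reference and two `int`s), not counting the `String` itself. It keeps a doubly linked order on purpose: the `prev` link is what makes O(1) removal possible, because unlinking an entry needs its predecessor, and with only a `next` link you would have to scan the list to find it. Access-order moves and sorting are not covered; sorting would mean rebuilding the links from a sorted array of keys.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: an order-preserving store keyed by line number 0..capacity-1,
// backed by primitive arrays instead of one Entry + one Integer object per line.
public class LineStore {
    private static final int NONE = -1;
    private final String[] values; // values[i] == null means "no line i"
    private final int[] prev;      // previous key in order, or NONE
    private final int[] next;      // next key in order, or NONE
    private int head = NONE, tail = NONE;

    public LineStore(int capacity) {
        values = new String[capacity];
        prev = new int[capacity];
        next = new int[capacity];
    }

    // Appends line `key` at the end of the order (key must not already be present).
    public void add(int key, String line) {
        values[key] = line;
        prev[key] = tail;
        next[key] = NONE;
        if (tail != NONE) next[tail] = key; else head = key;
        tail = key;
    }

    // O(1) lookup by key; returns null if the line is absent.
    public String get(int key) {
        return values[key];
    }

    // O(1) removal: the prev link lets us unlink without scanning the list.
    public void remove(int key) {
        if (values[key] == null) return;
        if (prev[key] != NONE) next[prev[key]] = next[key]; else head = next[key];
        if (next[key] != NONE) prev[next[key]] = prev[key]; else tail = prev[key];
        values[key] = null;
    }

    // First key in order, or -1 if the store is empty.
    public int firstKey() {
        return head;
    }

    // Collects lines in order starting from `key` (which must be present).
    public List<String> from(int key) {
        List<String> out = new ArrayList<>();
        for (int k = key; k != NONE; k = next[k]) out.add(values[k]);
        return out;
    }
}
```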