I am a PhD student who is trying to use the NEAT algorithm as a controller for a robot and I am having some accuracy issues with it. I am working with Python 2.7 and for it and am using two NEAT python implementations:

- The

NEATwhich is in this GitHub repository: https://github.com/CodeReclaimers/neat-python Searching in Google, it looks like it has been used in some projects with succed. - The

multiNEATlibrary developed by Peter Chervenski and Shane Ryan: http://www.multineat.com/index.html. Which appears in the "official" software web page ofNEATsoftware catalog.

While testing the first one, I've found that my program converges quickly to a solution, but this solution is not precise enough. As lack of precision I want to say a deviation of a minimum of 3-5% in the median and average related to the "perfect" solution at the end of the evolution (Depending on the complexity of the problem, an error around 10% is normal for my solutions. Furthermore, I could said that I've "never" seen an error value under the 1% between the solution given by the NEAT and the solution that it is the correct one). I must said that I've tried a lot of different parameter combinations and configurations (this is an old problem for me).

Due to that, I tested the second library. The MultiNEAT library converges quickly and easier that the previous one. (I assume that is due to the C++ implementation instead the pure Python) I get similar results, but I still have the same problem; lack of accuracy. This second library has different configuration parameters too, and I haven't found a proper combination of them to improve the performance of the problem.

My question is:

Is it normal to have this lack of accuracy in the NEAT results? It achieves good solutions, but not good enough for controlling a robot arm, which is what I want to use it for.

I'll write what I am doing in case someone sees some conceptual or technical mistake in the way I set out my problem:

To simplify the problem, I'll show a very simple example: I have a very simple problem to solve, I want a NN that may calculate the following function: y = x^2 (similar results are found with y=x^3 or y = x^2 + x^3 or similar functions)

The steps that I follow to develop the program are:

- "Y" are the inputs to the network and "X" the outputs. The activation functions of the neural net are sigmoid functions.

- I create a data set of "n" samples given values to "X" between the

xmin = 0.0and thexmax = 10.0 As I am using sigmoid functions, I make a normalization of the "Y" and "X" values:

- "Y" is normalized linearly between (Ymin, Ymax) and (-2.0, 2.0) (input range of sigmoid).

- "X" is normalized linearly between (Xmin, Xmax) and (0.0, 1.0) (the output range of sigmoid).

After creating the data set, I subdivide in in a train sample (70% percent of the total amount), a validation sample and a test sample (15% each one).

At this point, I create a population of individuals for doing evolution. Each individual of the population is evaluated in all the train samples. Each position is evaluated as:

eval_pos = xmax - abs(xtarget - xobtained)

And the fitness of the individual is the average value of all the train positions (I've selected the minimum too but it gives me worse performance).

After the whole evaluation, I test the best obtained individual against the test sample. And here is where I obtained those "un-precise values". Moreover, during the evaluation process, the maximum value where "abs(xtarget - xobtained) = 0" is never obtained.

Furthermore, I assume that how I manipulate the data is right because, I use the same data set for training a neural network in Keras and I get much better results than with NEAT (an error less than a 1% is achievable after 1000 epochs in a layer with 5 neurons).

At this point, I would like to know if what is happened is normal because I shouldn't use a data set of data for developing the controller, it must be learned "online" and NEAT looks like a suitable solution for my problem.

Thanks in advance.

EDITED POST:

Firstly, Thanks for comment nick. I'll answer your questions below::

I am using the

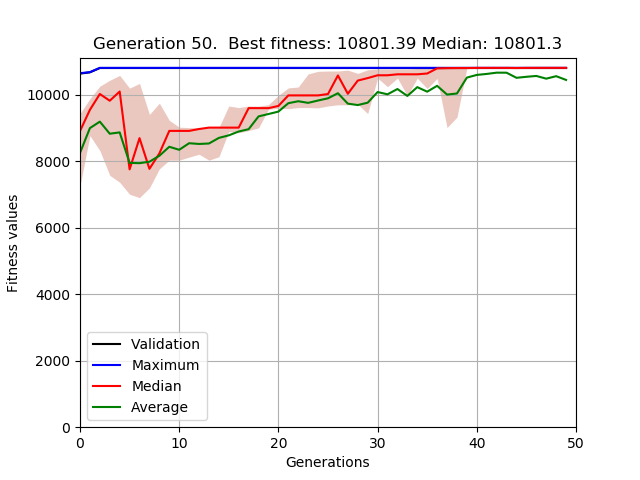

NEATalgorithm.Yes, I've carried out experiments increasing the number of individuals in the population and the generations number. A typical graph that I get is like this:

Although the population size in this example is not such big, I've obtained similar results in experiments incrementing the number of individuals or the number of generations. Populations of 500 in individuals and 500 generations, for example. In this experiments, the he algorithm converge fast to a solution, but once there, the best solution is stucked and it does not improve any more.

As I mentioned in my previous post, I've tried several experiments with many different parameters configurations... and the graphics are more or less similar to the previous showed.

Furthermore, other two experiments that I've tried were: once the evolution reach the point where the maximum value and the median converge, I generate other population based on that genome with new configuration parameters where:

The mutation parameters change with a high probability of mutation (weight and neuron probability) in order to find new solutions with the aim to "jumping" from the current genome to other better.

- The neuron mutation is reduced to 0, while the weight "

mutation probability" increase for "mutate weight" in a lower range in order to get slightly modifications with the aim to get a better adjustment of the weights. (trying to get a "similar" functionality as backprop. making slighty changes in the weights)

- The neuron mutation is reduced to 0, while the weight "

This two experiments didn't work as I expected and the best genome of the population was also the same of the previous population.

- I am sorry, but I do not understand very well what do you want to say with "applying your own weighted penalties and rewards in your fitness function". What do you mean with including weight penalities in the fitness function?

Regards!