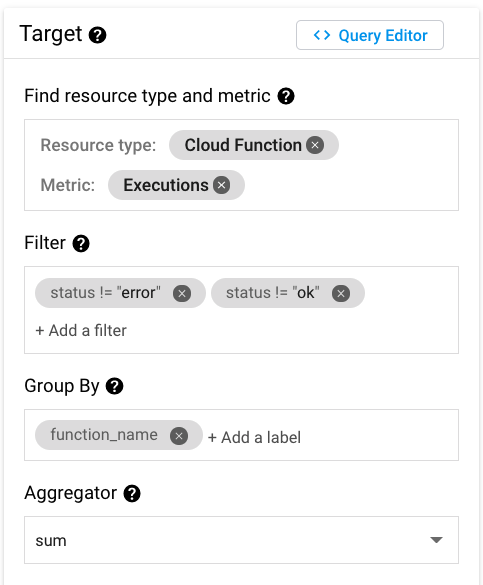

I have an alert configured to send an email when the sum of Cloud Functions executions that finished with a status other than 'error' or 'ok' is above 0 (grouped by function name).

The way I defined the alert is:

And the secondary aggregator is delta.

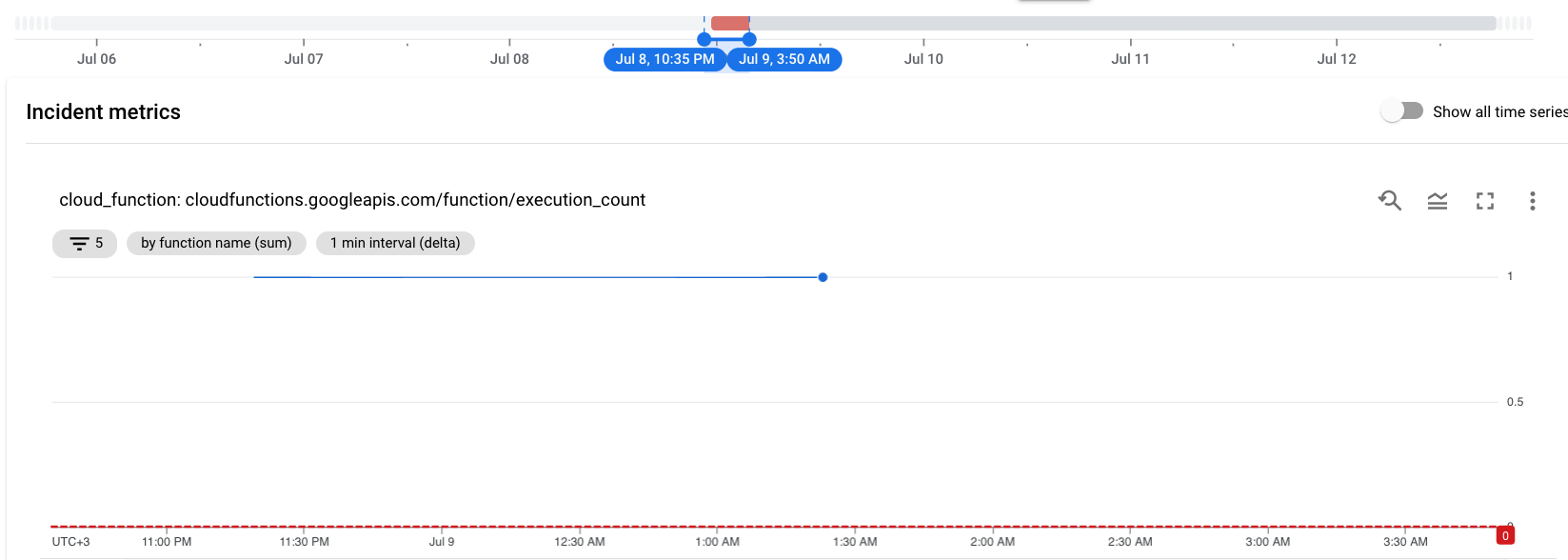
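For readers who prefer text to screenshots, here is a rough sketch of how I believe this condition would look as an alert policy condition in the Cloud Monitoring API. The field names follow the `AlertPolicy` REST resource, but the mapping from the UI settings is my assumption: in particular I am guessing that the "delta" setting corresponds to `ALIGN_DELTA`, and the alignment period, duration, and display name are placeholders rather than my real values.

```python
import json

# Built-in Cloud Functions execution counter; it carries a "status" metric label.
METRIC_TYPE = "cloudfunctions.googleapis.com/function/execution_count"


def build_filter(excluded_statuses):
    """Build a Monitoring filter that drops the given execution statuses."""
    parts = [
        f'metric.type="{METRIC_TYPE}"',
        'resource.type="cloud_function"',
    ]
    parts += [f'metric.labels.status!="{s}"' for s in excluded_statuses]
    return " AND ".join(parts)


def build_condition():
    """Return a dict shaped like an AlertPolicy condition (illustrative only)."""
    return {
        "displayName": "Abnormal function executions",  # placeholder name
        "conditionThreshold": {
            "filter": build_filter(["ok", "error"]),
            "aggregations": [
                {
                    "alignmentPeriod": "60s",           # assumed value
                    "perSeriesAligner": "ALIGN_DELTA",  # assumed mapping of "delta"
                    "crossSeriesReducer": "REDUCE_SUM",
                    "groupByFields": ["resource.labels.function_name"],
                }
            ],
            "comparison": "COMPARISON_GT",
            "thresholdValue": 0,
            "duration": "0s",                           # assumed value
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_condition(), indent=2))
```

The point of writing it out is only to show that the status filter is part of the condition itself, so I would expect it to apply both when the incident opens and while it stays open.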

The problem is that once the alert is open, the filters no longer seem to matter: the alert stays open as long as the cloud function keeps being triggered, whatever status it finishes with (even executions with 'ok' status keep it open, as long as it's triggered often enough).

At the moment, the only solution I can think of is to define a log-based metric that does the counting itself, and then base the alert on that custom metric instead of on the built-in one.
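To make the intent concrete, this is a minimal sketch of the count I would expect either the built-in metric with its filter or a custom metric to produce for one evaluation window. It is plain Python with made-up sample data, not a Cloud Monitoring API call; the function names `count_abnormal` and `functions_to_alert` are hypothetical.

```python
from collections import Counter

# Statuses that should NOT count towards the alert.
IGNORED_STATUSES = {"ok", "error"}


def count_abnormal(executions):
    """Count executions per function whose status is neither 'ok' nor 'error'.

    `executions` is an iterable of (function_name, status) pairs.
    """
    counts = Counter()
    for function_name, status in executions:
        if status not in IGNORED_STATUSES:
            counts[function_name] += 1
    return dict(counts)


def functions_to_alert(executions, threshold=0):
    """Return the set of function names whose abnormal count exceeds the threshold."""
    return {
        name
        for name, count in count_abnormal(executions).items()
        if count > threshold
    }


if __name__ == "__main__":
    # Made-up window: one timeout for "resize", everything else ok or error.
    window = [
        ("resize", "ok"),
        ("resize", "timeout"),
        ("notify", "ok"),
        ("notify", "error"),
        ("resize", "ok"),
    ]
    print(count_abnormal(window))      # {'resize': 1}
    print(functions_to_alert(window))  # {'resize'}
```

With a later window that contains only 'ok' executions, both functions return empty results, which is the point at which I would expect the incident to close.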

Is there something that I'm missing?

Edit:

Adding another image to show what I think might be the problem:

From the image above we can see that the graph won't go down to 0 but stays at 1, which is not how other, normal incidents behave.