I am testing printed digits (0-9) on a Convolutional Neural Network. It is giving 99+ % accuracy on the MNIST Dataset, but when I tried it using fonts installed on computer (Ariel, Calibri, Cambria, Cambria math, Times New Roman) and trained the images generated by fonts (104 images per font(Total 25 fonts - 4 images per font(little difference)) the training error rate does not go below 80%, i.e. 20% accuracy. Why?

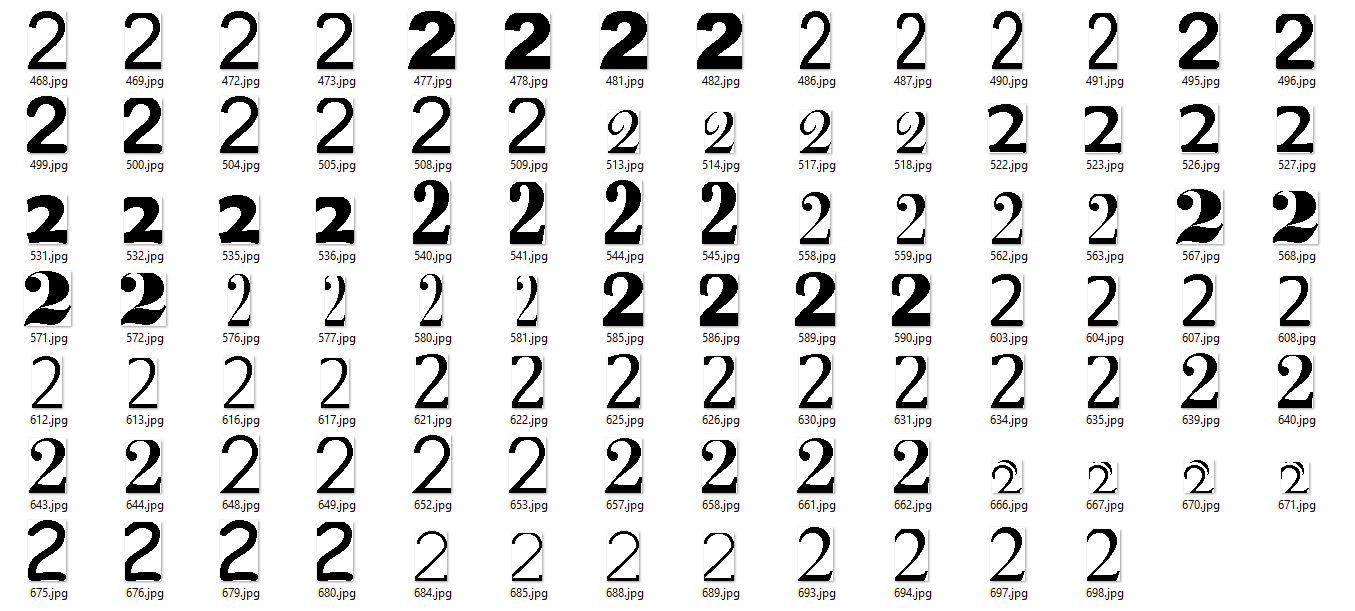

Here is "2" number Images sample -

I resized every image 28 x 28.

Here is more detail :-

Training data size = 28 x 28 images. Network parameters - As LeNet5 Architecture of Network -

Input Layer -28x28

| Convolutional Layer - (Relu Activation);

| Pooling Layer - (Tanh Activation)

| Convolutional Layer - (Relu Activation)

| Local Layer(120 neurons) - (Relu)

| Fully Connected (Softmax Activation, 10 outputs)

This works, giving 99+% accuracy on MNIST. Why is so bad with computer-generated fonts? A CNN can handle lot of variance in data.