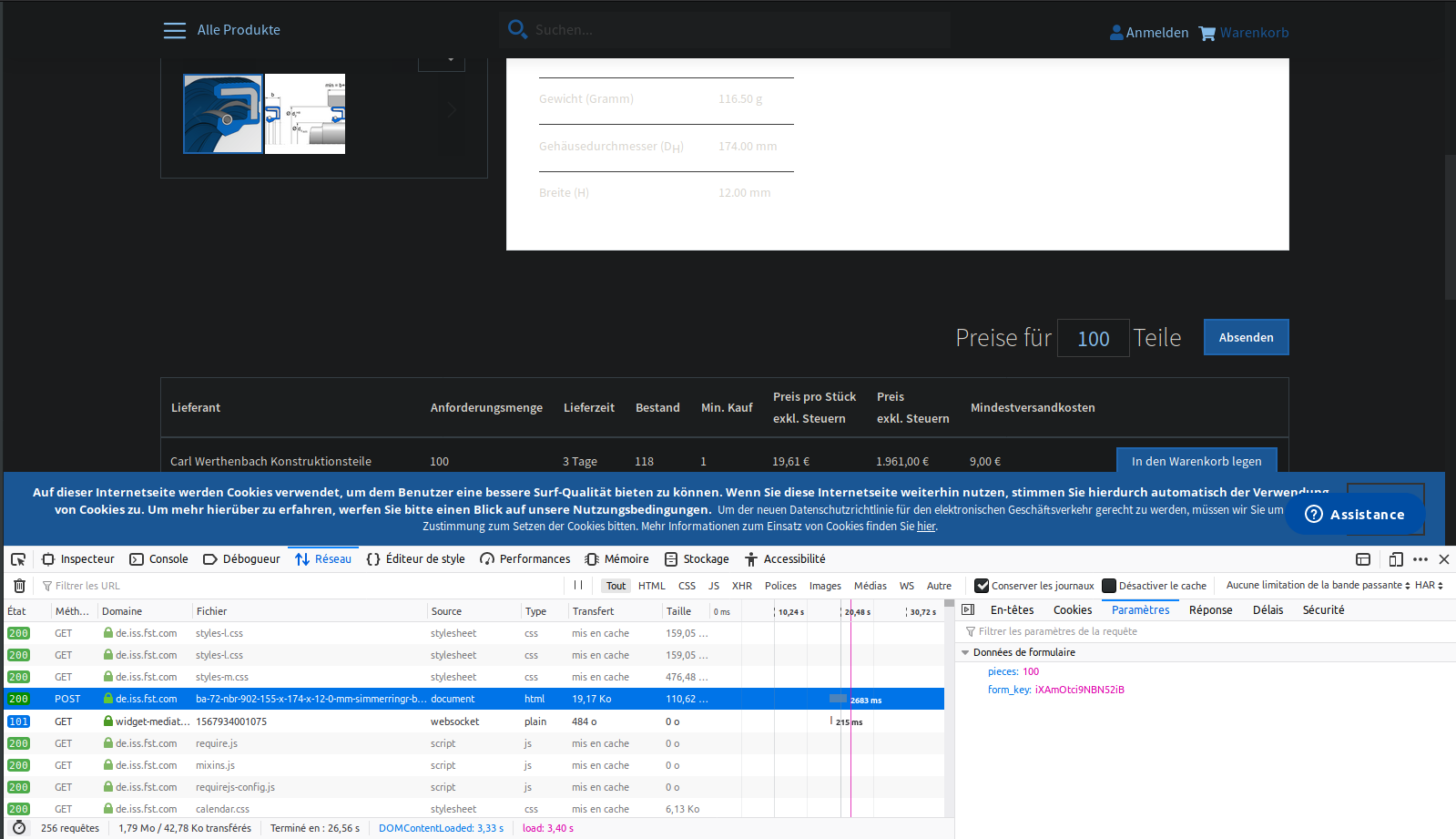

I want to enter a value into a text input field, submit the form, and then scrape the new data that appears on the page after the submit. How is this possible?

This is the HTML form on the page. I want to change the input value from 10 to 100 and submit the form:

    <form action="https://de.iss.fst.com/ba-u6-72-nbr-902-112-x-140-x-13-12-mm-simmerringr-ba-a-mit-feder-fst-40411416#product-offers-anchor" method="post" _lpchecked="1">
        <div class="fieldset">
            <div class="field qty">
                <div class="control">
                    <label class="label" for="qty-2">
                        <span>Preise für</span>
                    </label>
                    <input type="text" name="pieces" class="validate-length maximum-length-10 qty" maxlength="12" id="qty-2" value="10">
                    <label class="label" for="qty-2">
                        <span>Teile</span>
                    </label>
                    <span class="actions">
                        <button type="submit" title="Absenden" class="action">
                            <span>Absenden</span>
                        </button>
                    </span>
                </div>
            </div>
        </div>
    </form>
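For orientation, submitting this form amounts to a POST request to the form's `action` URL with `pieces` set to the new value. The sketch below uses only the Python standard library to show that idea: it reads the form's defaults, overrides `pieces`, and builds (but does not send) the request. The trimmed `FORM_HTML`, the `example.com` URL, and the names `FormCollector` and `build_form_request` are hypothetical and only for illustration. It is not how Scrapy is implemented, just a rough picture of what a form-submitting request has to contain.

```python
from html.parser import HTMLParser
from urllib.parse import urlencode
from urllib.request import Request

# Trimmed copy of the form; the action URL is a hypothetical placeholder.
FORM_HTML = """
<form action="https://example.com/product#product-offers-anchor" method="post">
  <input type="text" name="pieces" id="qty-2" value="10">
  <button type="submit" title="Absenden" class="action"><span>Absenden</span></button>
</form>
"""


class FormCollector(HTMLParser):
    """Collects action, method and named <input> defaults of the first form."""

    def __init__(self):
        super().__init__()
        self.action = None
        self.method = "GET"
        self.fields = {}
        self._in_form = False
        self._done = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "form" and not self._done:
            self._in_form = True
            self.action = attrs.get("action")
            self.method = (attrs.get("method") or "get").upper()
        elif tag == "input" and self._in_form and attrs.get("name"):
            # Remember the default value that the page ships with.
            self.fields[attrs["name"]] = attrs.get("value") or ""

    def handle_endtag(self, tag):
        if tag == "form" and self._in_form:
            self._in_form = False
            self._done = True


def build_form_request(html, overrides):
    """Merge the form defaults with overrides and build an unsent request."""
    collector = FormCollector()
    collector.feed(html)
    data = {**collector.fields, **overrides}
    body = urlencode(data).encode("utf-8")
    return Request(collector.action, data=body, method=collector.method)


# Change the quantity from the default 10 to 100.
request = build_form_request(FORM_HTML, {"pieces": "100"})
print(request.get_method(), request.full_url, request.data)
```

In a Scrapy spider the same merge of page defaults and overrides is what the `formdata` argument of `FormRequest.from_response` is for, so the manual parsing here is only meant to make the mechanics visible.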

Update: here is the new, working code.

    import scrapy
    import pymongo
    from scrapy_splash import SplashRequest, SplashFormRequest

    from issfst.items import IssfstItem


    class IssSpider(scrapy.Spider):
        name = "issfst_spider"
        start_urls = ["https://de.iss.fst.com/dichtungen/radialwellendichtringe/rwdr-mit-geschlossenem-kafig/ba"]

        custom_settings = {
            # specifies exported fields and order
            'FEED_EXPORT_FIELDS': ["imgurl",
                                   "Produktdatenblatt",
                                   "Materialdatenblatt"],
        }

        def parse(self, response):
            self.log("I just visited: " + response.url)
            urls = response.css('.details-button > a::attr(href)').extract()
            for url in urls:
                # submit the quantity form against each product detail URL
                formdata = {'pieces': '200'}
                yield SplashFormRequest.from_response(
                    response,
                    url=url,
                    formdata=formdata,
                    callback=self.parse_details,
                    args={'wait': 3}
                )

            # follow pagination link
            next_page_url = response.css('li.item > a.next::attr(href)').extract_first()
            if next_page_url:
                next_page_url = response.urljoin(next_page_url)
                yield scrapy.Request(url=next_page_url, callback=self.parse)

        def parse_details(self, response):
            item = IssfstItem()

            # scrape image url
            # (no trailing commas here: they would wrap each value in a one-element tuple)
            item['imgurl'] = response.css('img.fotorama__img::attr(src)').extract()

            # scrape download pdf links
            item['Produktdatenblatt'] = response.css('a.action[data-group="productdatasheet"]::attr(href)').extract_first()
            item['Materialdatenblatt'] = response.css('a.action[data-group="materialdatasheet"]::attr(href)').extract_first()

            # scrape description text
            item['Beschreibung'] = response.css('.description > p::text').extract_first()

            yield item