I've read through the link below, extended the timeout, disabled compression on the origin and on Azure Front Door, and added a Rule Set rule that removes Accept-Encoding from the request for byte-range requests. However, I am still getting random 503 errors or blank pages.

https://learn.microsoft.com/en-us/azure/frontdoor/front-door-troubleshoot-routing

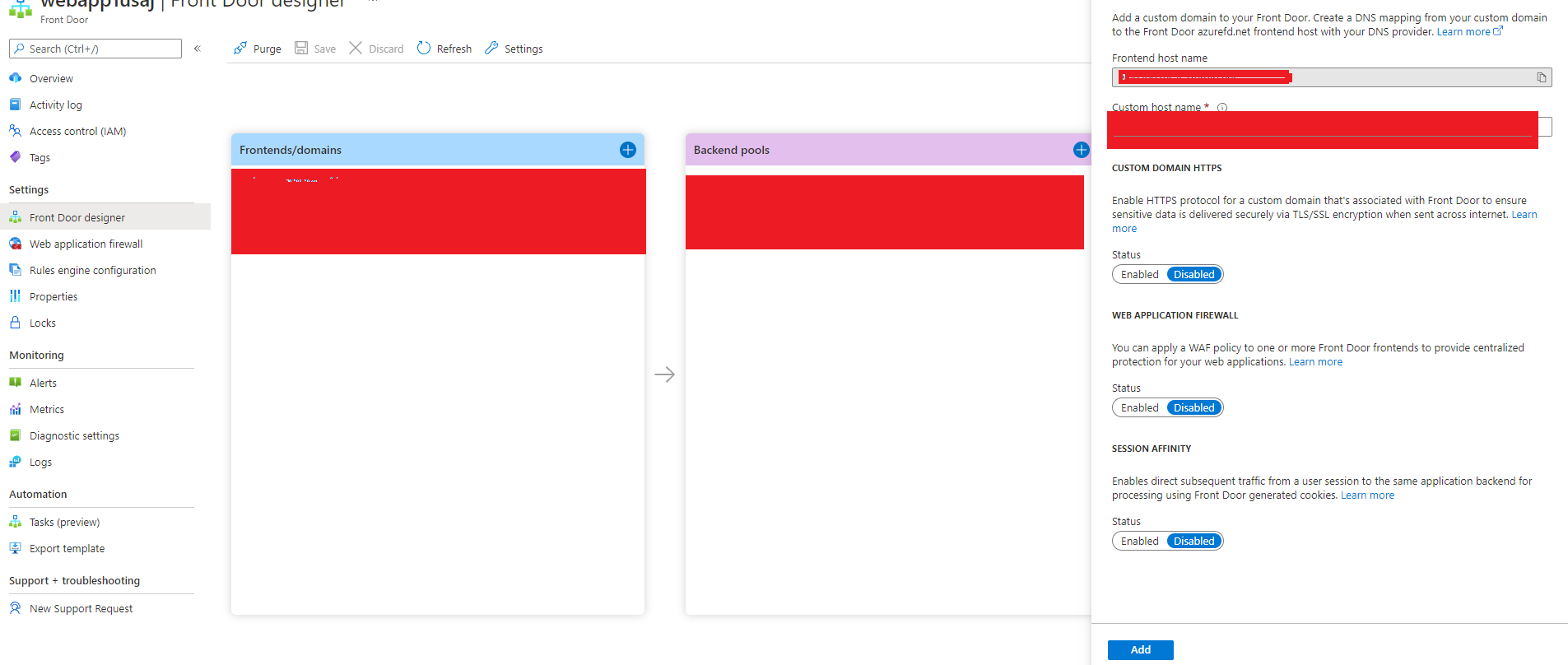

In my web app, I've added the custom domain (app.contoso.com) with my own certificate.

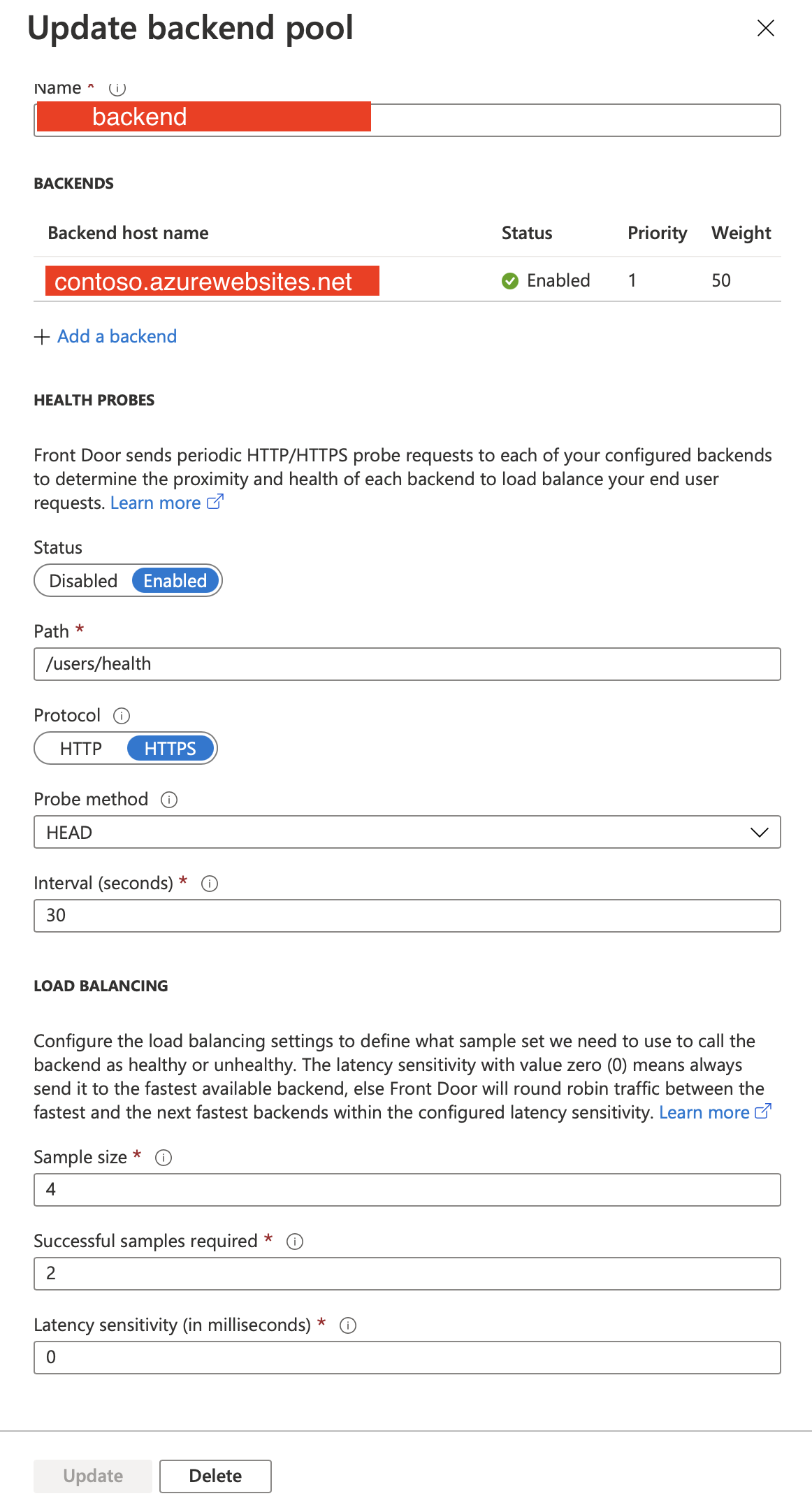

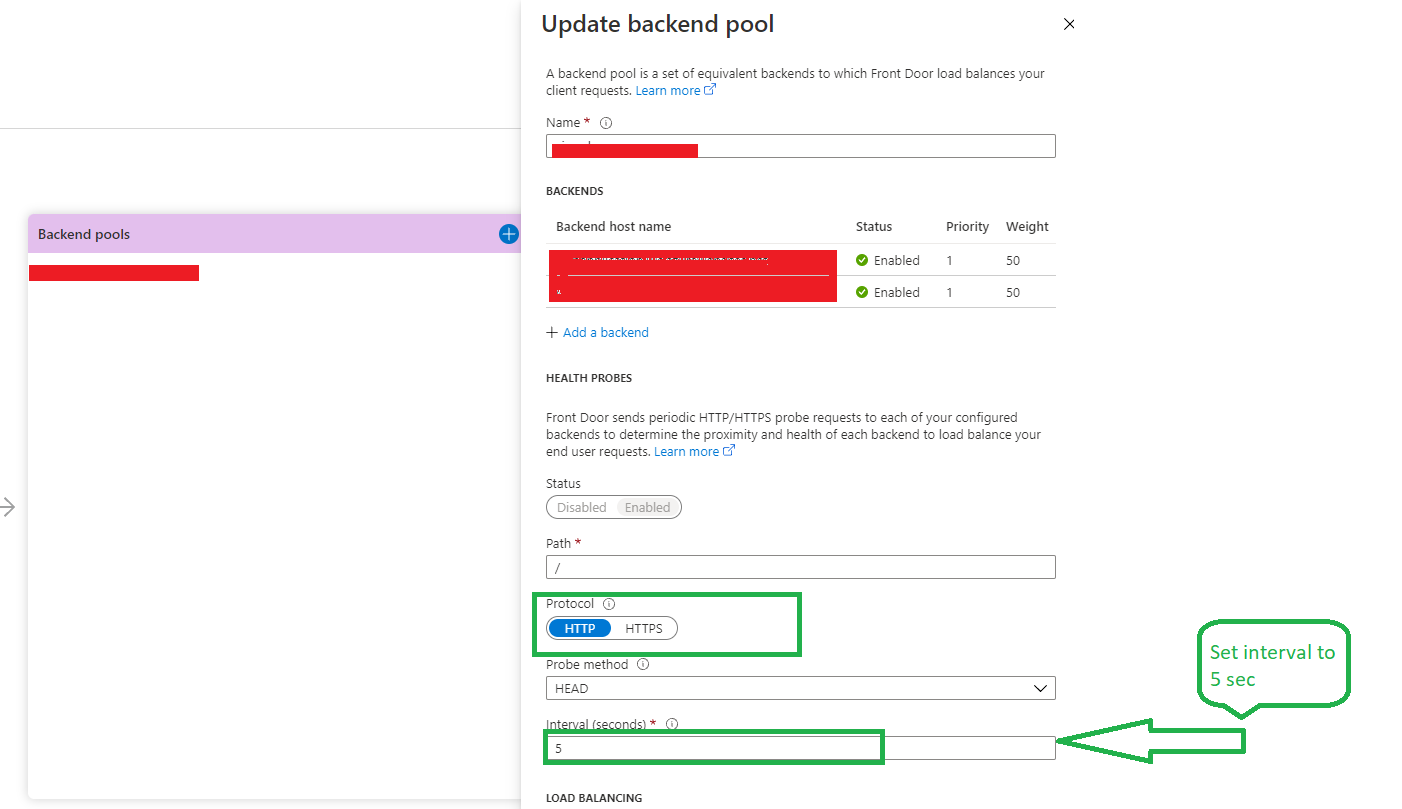

In my Front Door, I've added my custom domain (app.contoso.com) with my own certificate and set up a backend pool and a routing rule.

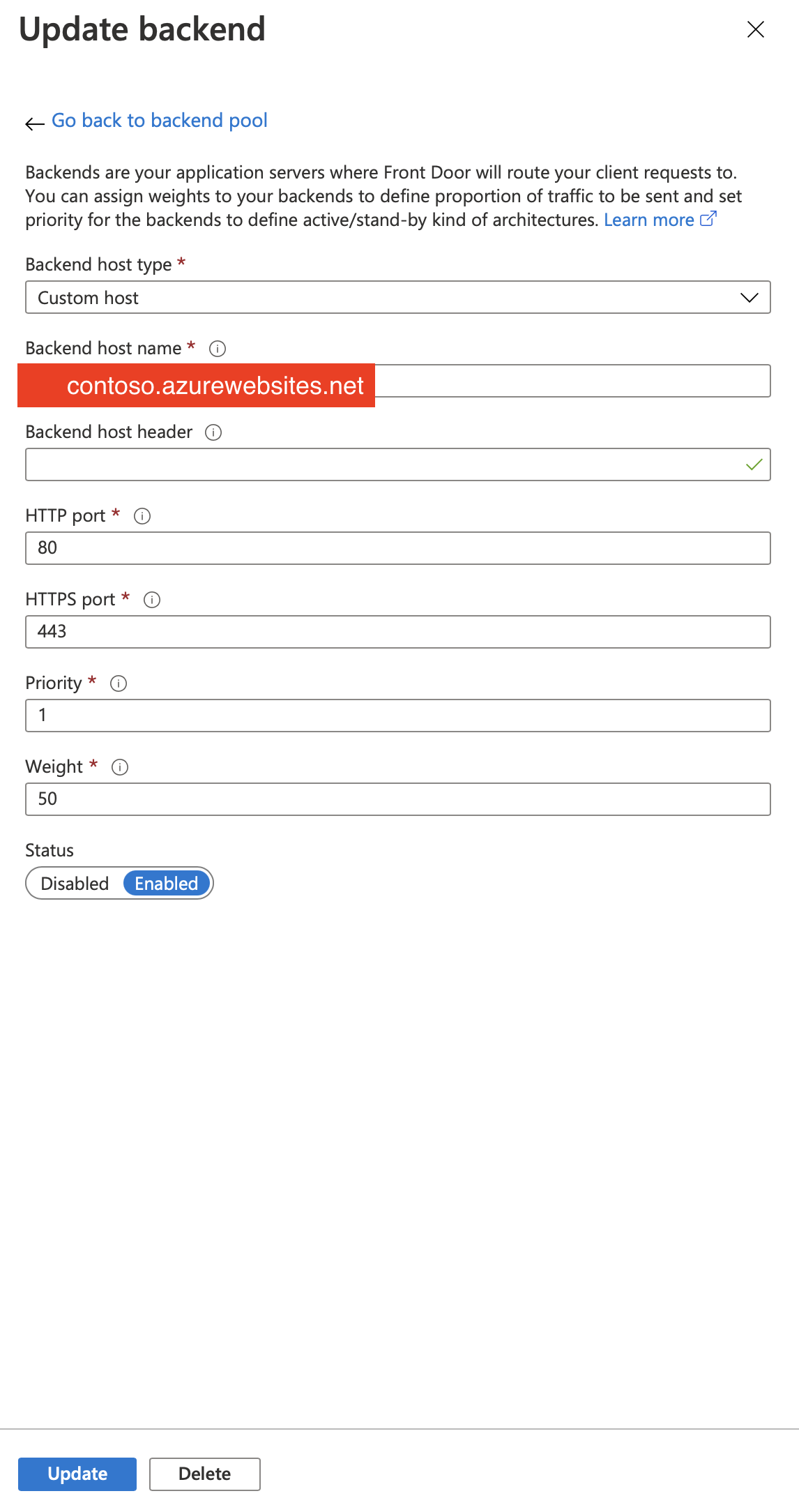

In the Update backend pane, I've left the backend host header empty, as I would like it to redirect to the custom domain instead of showing the web app URL.
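
As far as I understand, leaving the backend host header empty means Front Door forwards the incoming Host header (here app.contoso.com) to the backend, so the web app has to answer for that hostname. Below is a minimal sketch to check this directly against the origin, bypassing Front Door. The function name `build_origin_request` is made up for illustration, and the hostnames are the ones from this post. TLS is still negotiated against the azurewebsites.net name, so this only approximates what Front Door sends.

```python
import urllib.error
import urllib.request


def build_origin_request(origin_host: str, public_host: str, path: str = "/") -> urllib.request.Request:
    """Build a GET request to the origin that carries the public hostname as Host.

    Hypothetical helper: it mimics a forwarded request with an unchanged Host header.
    """
    url = f"https://{origin_host}{path}"
    return urllib.request.Request(url, headers={"Host": public_host}, method="GET")


if __name__ == "__main__":
    # Hostnames taken from the post; replace with your own.
    req = build_origin_request("contoso.azurewebsites.net", "app.contoso.com")
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            print("origin status:", resp.status, "body bytes:", len(resp.read()))
    except urllib.error.HTTPError as err:
        # The origin answered, but with an error status.
        print("origin returned HTTP", err.code)
    except urllib.error.URLError as err:
        # DNS, TLS, or connection failure before any HTTP response.
        print("could not reach origin:", err.reason)
```

If the origin returns an error or an empty body for the custom hostname but works for its own azurewebsites.net name, the host binding on the web app would be the first thing I'd look at.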

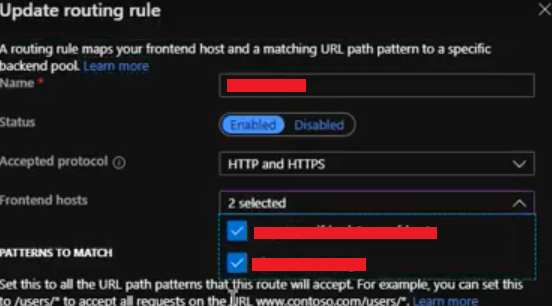

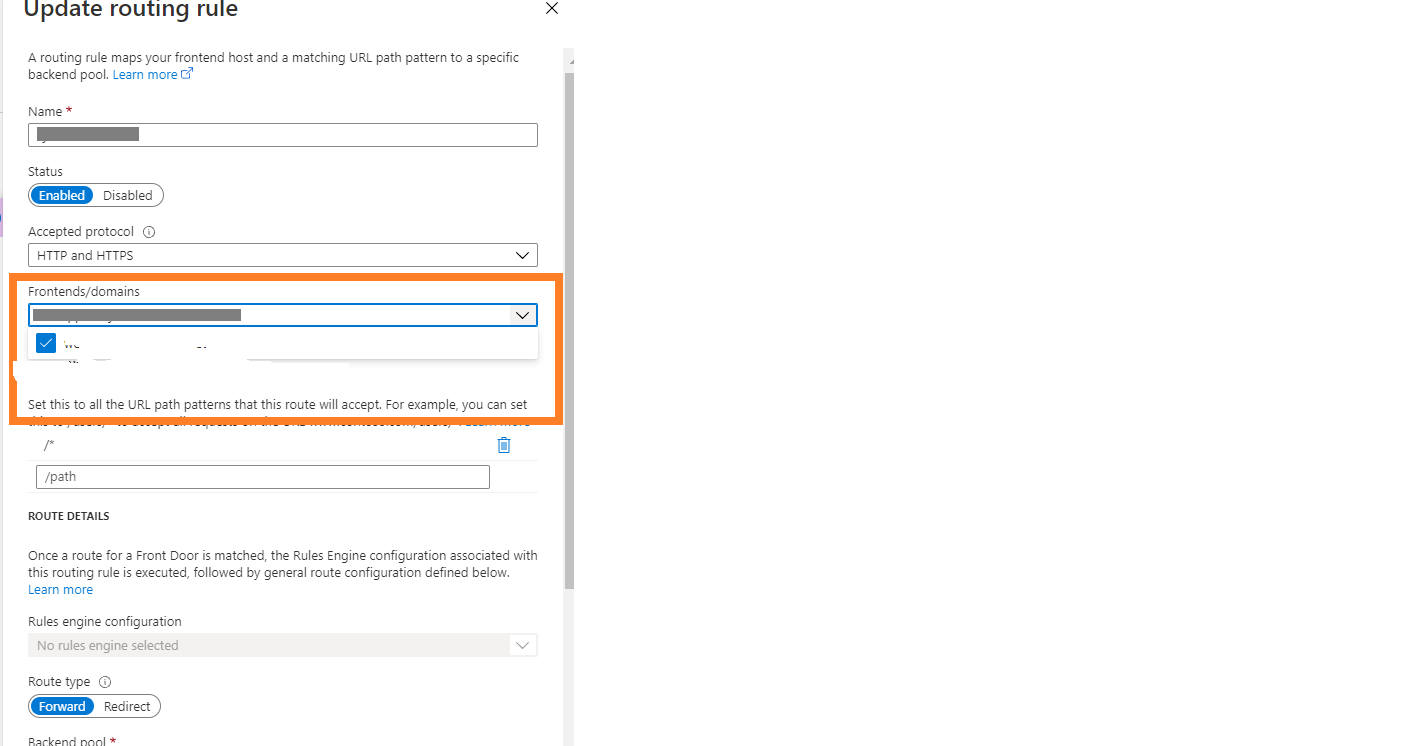

In my routing rule, I've set the following:

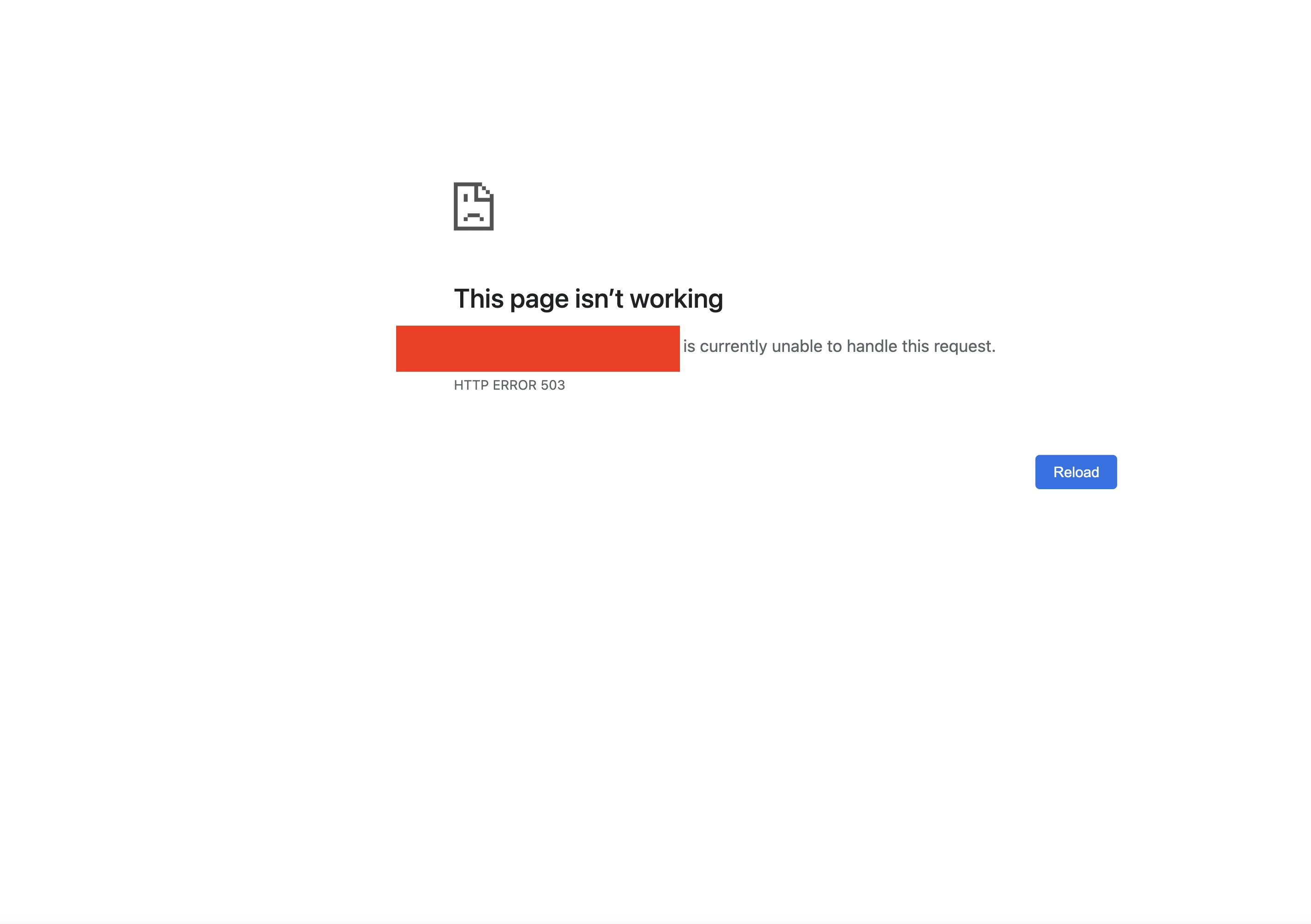

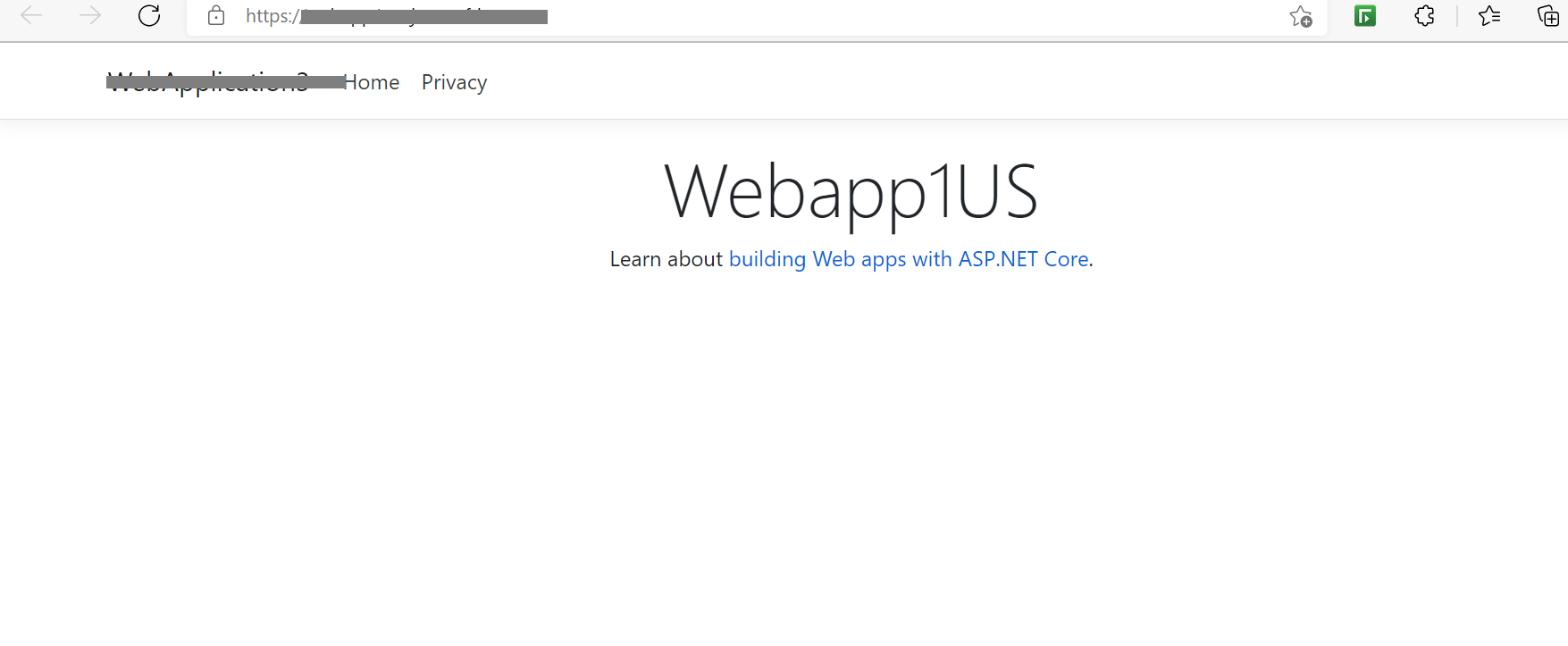

I've closely followed the instructions provided by Azure, but I'm still getting random 503 errors or blank pages from Azure Front Door. I can confirm that my web app (contoso.azurewebsites.net) is working while the custom domain behind Front Door returns 503 errors or blank pages. I've also ensured that the same SSL certificate is used on the web app and on the Front Door custom domain.

In my DNS, I've mapped the following:

app.contoso.com CNAME contoso.azurefd.net
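
To rule out DNS, the mapping above can be sanity-checked from a client machine. This is only a sketch: `cname_targets_front_door` is a hypothetical helper, and whether `socket.gethostbyname_ex` exposes the intermediate CNAME names depends on the platform resolver, so a negative result here is not conclusive.

```python
import socket


def cname_targets_front_door(canonical: str, aliases: list[str], expected: str) -> bool:
    """Return True if the expected Front Door hostname appears in the resolved name chain.

    Hypothetical helper: compares names case-insensitively, ignoring a trailing dot.
    """
    wanted = expected.rstrip(".").lower()
    return any(name.rstrip(".").lower() == wanted for name in [canonical, *aliases])


if __name__ == "__main__":
    # Hostnames taken from the post; replace with your own.
    canonical, aliases, addresses = socket.gethostbyname_ex("app.contoso.com")
    print("canonical:", canonical)
    print("aliases:", aliases)
    print("addresses:", addresses)
    print("points at Front Door:",
          cname_targets_front_door(canonical, aliases, "contoso.azurefd.net"))
```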

Is there anything I missed, or does anybody have a solution to this?

Follow up

I've tried enabling and disabling certificate subject name validation and changing the send/receive timeout from 30 to 240 seconds and back again, but I'm still getting the same issue.

Update: It is giving a blank page or a 503 error (see screenshot below)

I have Front Door set up in front of multiple backend Web Apps. I've noticed a number of random 503 errors each day. The requests themselves are valid, and retrying generally works; however, the initial request that fails never reaches the backend Web App. Backend health at the times of the errors is also 100%, and it can't be a timeout because the error is immediate (the timeout is also extended to 240 seconds). It seems like Front Door itself is having some kind of health issue, but no health issues are reported.
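
To back up the claim that the 503 comes from Front Door and not from the backend, one option is to probe both endpoints side by side and record the `X-Azure-Ref` response header, which Front Door attaches to the requests it serves and which support can use to trace a request. This is a rough sketch, not a monitoring tool. The function names, the probe interval, and the URLs (taken from the question above) are assumptions.

```python
import time
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 10.0):
    """Return (status, x_azure_ref, elapsed_seconds); status is None on a network failure."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status, ref = resp.status, resp.headers.get("X-Azure-Ref")
    except urllib.error.HTTPError as err:
        # An HTTP error status (for example 503) still carries response headers.
        status, ref = err.code, err.headers.get("X-Azure-Ref")
    except (urllib.error.URLError, OSError):
        status, ref = None, None
    return status, ref, time.monotonic() - start


def classify(front_door_status, origin_status) -> str:
    """Label one paired probe result (hypothetical labels for illustration)."""
    if front_door_status != 503:
        return "no-503"
    if origin_status is not None and 200 <= origin_status < 400:
        return "front-door-only"
    return "origin-also-failing"


if __name__ == "__main__":
    front_door_url = "https://app.contoso.com/"        # assumed from the post
    origin_url = "https://contoso.azurewebsites.net/"  # assumed from the post
    for _ in range(60):  # about ten minutes at one probe every 10 seconds
        fd_status, fd_ref, fd_elapsed = fetch_status(front_door_url)
        origin_status, _, _ = fetch_status(origin_url)
        label = classify(fd_status, origin_status)
        if label != "no-503":
            print(f"{time.strftime('%H:%M:%S')} {label} "
                  f"fd={fd_status} origin={origin_status} "
                  f"elapsed={fd_elapsed:.2f}s X-Azure-Ref={fd_ref}")
        time.sleep(10)
```

A run of "front-door-only" lines with sub-second elapsed times would match what is described above: an immediate 503 while the backend is healthy. The logged `X-Azure-Ref` values are what I would hand to Azure support.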