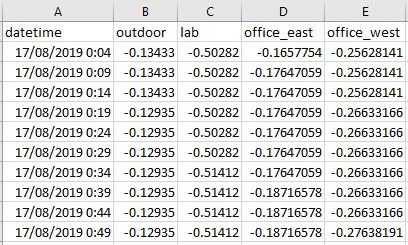

I have a dataset from 4 temperature sensors measuring different places in/around a building:

I'm training a model that takes inputs of shape (96, 4): 96 time steps for each of the 4 sensors. From this I want to predict 48 steps into the future for each of those sensors, i.e. an output of shape (48, 4).
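To make the shapes concrete, here is a rough sketch of how such input/target pairs could be cut from one long recording. It assumes the readings sit in a single `(T, 4)` array sampled at a fixed interval with no sub-sampling step; `make_windows` and the synthetic `readings` array are made up for illustration and are not part of my real pipeline.

```python
import numpy as np


def make_windows(readings, history=96, horizon=48):
    """Slice a (T, n_sensors) array into (history -> horizon) pairs."""
    readings = np.asarray(readings)
    xs, ys = [], []
    last_start = len(readings) - history - horizon
    for start in range(last_start + 1):
        split = start + history
        xs.append(readings[start:split])            # past 96 steps, all sensors
        ys.append(readings[split:split + horizon])  # next 48 steps, all sensors
    return np.stack(xs), np.stack(ys)


# Synthetic stand-in for the sensor data: 500 time steps, 4 sensors.
readings = np.random.default_rng(0).normal(size=(500, 4))
x, y = make_windows(readings)
print(x.shape, y.shape)
```

Each row of `x` is one (96, 4) input window and the matching row of `y` is the (48, 4) block that immediately follows it.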

So far I've got an implementation working to predict one sensor only. I mostly followed this section from the TensorFlow tutorials.

My train X has shape (6681, 96, 4) and my train Y has shape (6681, 48), since I have restricted it to one sensor only. If I simply change train Y to (6681, 48, 4), training fails, as expected, with `ValueError: Dimensions must be equal, but are 48 and 4 for 'loss/dense_loss/sub' (op: 'Sub') with input shapes: [?,48], [?,48,4].` because the model still outputs (batch, 48) and is not expecting this shape.
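The mismatch can be reproduced outside Keras. This is a minimal NumPy sketch, assuming a made-up batch of 2 and all-zero arrays standing in for the model output and the 4-sensor targets; it only illustrates why the element-wise subtraction inside an MAE loss cannot work with these two shapes.

```python
import numpy as np

batch = 2                           # arbitrary batch size for the illustration
y_pred = np.zeros((batch, 48))      # shape the current model outputs
y_true = np.zeros((batch, 48, 4))   # shape of the 4-sensor targets

try:
    # MAE needs an element-wise difference; trailing dims 48 and 4 clash.
    np.abs(y_true - y_pred).mean()
    mismatch = False
except ValueError as err:
    mismatch = True
    print("Shapes do not line up:", err)
```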

Where I'm getting stuck is with my LSTM layer's input/output shapes. I just can't figure out how to finish with a shape of (BATCH_SIZE, 48, 4).

Here's my layer setup at the moment:

```python
import tensorflow as tf

tf.keras.backend.clear_session()

print("Input shape", x_train_multi.shape[-2:])

multi_step_model = tf.keras.models.Sequential()
multi_step_model.add(tf.keras.layers.LSTM(32,
                                          return_sequences=True,
                                          input_shape=x_train_multi.shape[-2:]))
multi_step_model.add(tf.keras.layers.Dropout(rate=0.5))  # Dropout layer after each LSTM to reduce overfitting.
multi_step_model.add(tf.keras.layers.LSTM(16, activation='relu'))
multi_step_model.add(tf.keras.layers.Dropout(rate=0.5))
# The argument to Dense shapes the results to give the number of time steps we want.
# But how do I make it keep 4 features as well?!?
multi_step_model.add(tf.keras.layers.Dense(future_target // STEP))  # integer division: the unit count must be an int

multi_step_model.compile(optimizer=tf.keras.optimizers.RMSprop(clipvalue=1.0), loss='mae')

# Shape of predictions
for x, y in val_data_multi.take(1):
    print("Prediction shape", multi_step_model.predict(x).shape)
```

Some thoughts:

- Am I just missing something or forgetting to set an argument for the output features/dimensions to use?

- Do I need to train separate RNNs for predicting each sensor?
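For reference, a single network can in principle emit all four sensors at once. One possible way, sketched here and not tested on the real data, is to let the final `Dense` layer output 48 * 4 values and then fold them back with a `Reshape` layer. The constants `HISTORY`, `HORIZON` and `N_SENSORS` and the all-zero `dummy_x` batch are assumptions made up for this illustration.

```python
import numpy as np
import tensorflow as tf

# Hypothetical constants mirroring the shapes described in the question.
HISTORY, HORIZON, N_SENSORS = 96, 48, 4

model = tf.keras.Sequential([
    tf.keras.Input(shape=(HISTORY, N_SENSORS)),
    tf.keras.layers.LSTM(32, return_sequences=True),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.LSTM(16, activation="relu"),
    tf.keras.layers.Dropout(0.5),
    # One flat vector holding every (time step, sensor) value...
    tf.keras.layers.Dense(HORIZON * N_SENSORS),
    # ...folded back into (time steps, sensors).
    tf.keras.layers.Reshape((HORIZON, N_SENSORS)),
])
model.compile(optimizer=tf.keras.optimizers.RMSprop(clipvalue=1.0), loss="mae")

# Dummy batch of 8 windows, only to check the output shape.
dummy_x = np.zeros((8, HISTORY, N_SENSORS), dtype="float32")
pred_shape = model.predict(dummy_x, verbose=0).shape
print("Prediction shape", pred_shape)
```

With an output of this shape, train Y could stay at (6681, 48, 4). Whether one joint model does better than four separate ones is something only validation on the actual data can show.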

Thanks! :)