Note:

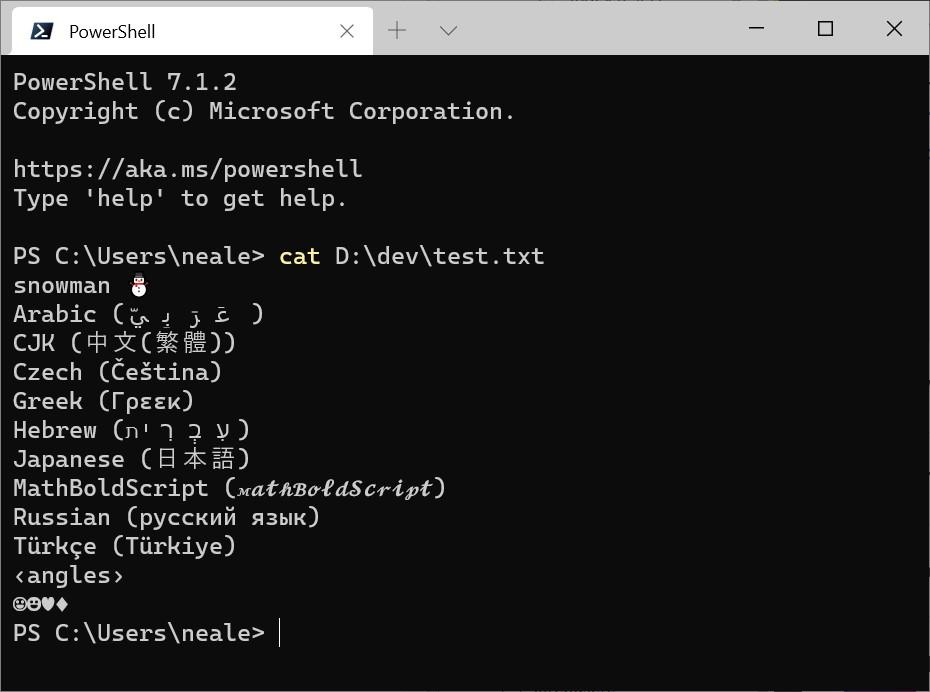

On Windows, with respect to rendering Unicode characters, it is primarily the choice of font / console (terminal) application that matters.

- Nowadays, Windows Terminal, which has been distributed and updated via the Microsoft Store since Windows 10, is a good replacement for the legacy console host (console windows provided by conhost.exe), providing superior Unicode character support. In Windows 11 22H2, Windows Terminal even became the default console (terminal).

With respect to programmatically processing Unicode characters when communicating with external programs, $OutputEncoding, [Console]::InputEncoding and [Console]::OutputEncoding matter too - see below.
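To see which encodings are in effect in a given session, you can print all three settings side by side. This is a small diagnostic sketch; the property names of the output object are merely illustrative labels. In PowerShell 7+, OutputEncoding should show utf-8, whereas Windows PowerShell typically shows us-ascii.

```powershell
# Collect the three settings that govern communication with external programs.
$info = [pscustomobject] @{
  OutputEncoding        = $OutputEncoding.WebName             # PowerShell -> external program (stdin)
  ConsoleInputEncoding  = [Console]::InputEncoding.WebName    # keyboard input / stdin of PowerShell's CLI
  ConsoleOutputEncoding = [Console]::OutputEncoding.WebName   # external program output -> PowerShell
  ConsoleOutputCodePage = [Console]::OutputEncoding.CodePage  # e.g. 437 (OEM) or 65001 (UTF-8)
}
$info
```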

The PowerShell (Core) 7+ perspective (see next section for Windows PowerShell), irrespective of character rendering issues (also covered in the next section), with respect to communicating with external programs:

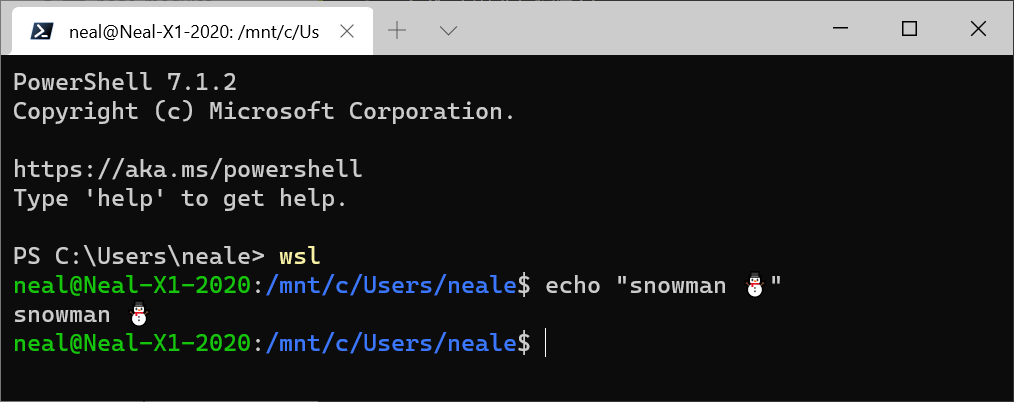

On Unix-like platforms, PowerShell Core uses UTF-8 by default.

On Windows, it is the legacy system locale, via its OEM code page, that determines the default encoding in all console windows, including both Windows PowerShell and PowerShell Core console windows. However, recent versions of Windows 10 allow setting the system locale to code page 65001 (UTF-8); note that the feature is still in beta as of this writing, and that using it has far-reaching consequences - see this answer.

If you do use that feature, PowerShell Core console windows will then automatically be UTF-8-aware, though in Windows PowerShell you'll still have to set $OutputEncoding to UTF-8 too (which in Core already defaults to UTF-8), as shown below.

Otherwise - notably on older Windows versions - you can use the same approach as detailed below for Windows PowerShell.
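To check which OEM code page the system locale currently implies, and hence whether the system-wide UTF-8 option is active, you can read the code-page values from the registry. This sketch assumes that the OEMCP and ACP values under HKLM:\SYSTEM\CurrentControlSet\Control\Nls\CodePage reflect the active system locale; it does nothing on non-Windows platforms.

```powershell
# Windows only: read the legacy code pages of the system locale from the registry.
$utf8SystemLocale = $null
if ($env:OS -eq 'Windows_NT') {
  $nls = Get-ItemProperty -LiteralPath 'HKLM:\SYSTEM\CurrentControlSet\Control\Nls\CodePage'
  "OEM code page (console default): $($nls.OEMCP)"
  "ANSI code page:                  $($nls.ACP)"
  # Assumption: OEMCP reads 65001 once the system locale has been switched to UTF-8.
  $utf8SystemLocale = $nls.OEMCP -eq '65001'
  "System locale uses UTF-8: $utf8SystemLocale"
}
```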

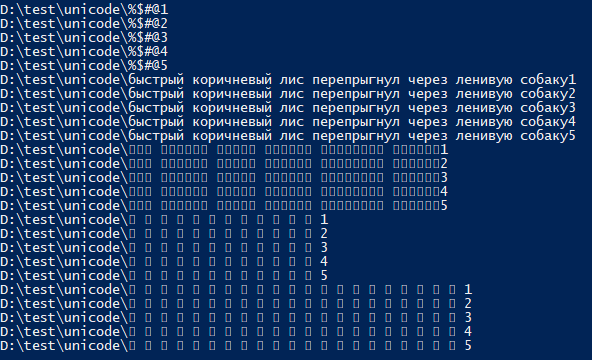

Making your Windows PowerShell console window Unicode (UTF-8) aware:

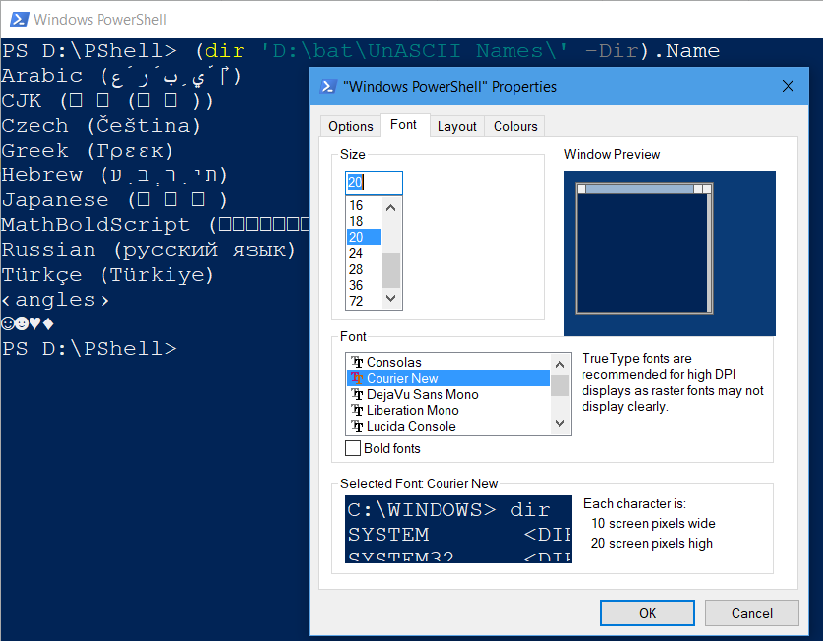

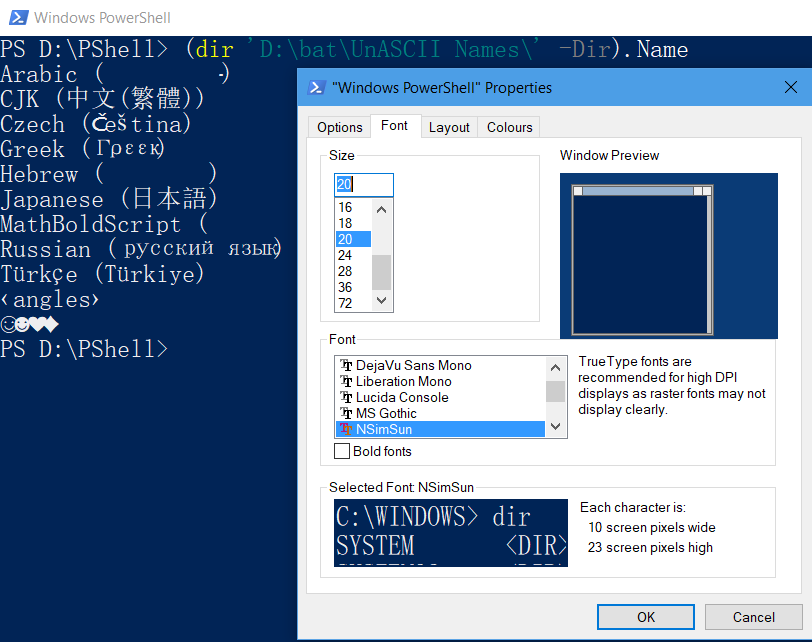

Pick a TrueType (TT) font that supports the specific scripts (writing systems, alphabets) whose characters you want to display properly in the console:

Important: While all TrueType fonts support Unicode in principle, they usually only support a subset of all Unicode characters, namely those corresponding to specific scripts (writing systems), such as the Latin script, the Cyrillic (Russian) script, ...

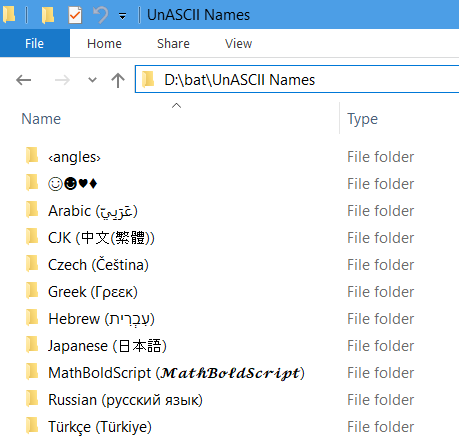

In your particular case - if you must support Arabic as well as Chinese, Japanese and Russian characters - your only choice is SimSun-ExtB, which is available on Windows 10 only.

See Wikipedia for a list of what Windows fonts target what scripts (alphabets).

To change the font, click the icon in the top-left corner of the window and select Properties, then switch to the Font tab and select the TrueType font of interest.

Additionally, for proper communication with external programs:

The console window's code page must be switched to 65001, the UTF-8 code page. This is usually done with chcp 65001, which, however, cannot be used directly from within a PowerShell session[1]; the PowerShell command below has the same effect.

Windows PowerShell must also be instructed to use UTF-8 when communicating with external utilities: when sending pipeline input to external programs, it uses the encoding stored in its $OutputEncoding preference variable; when decoding output from external programs, it applies the encoding stored in [Console]::OutputEncoding.

The following magic incantation in Windows PowerShell does this (as stated, this implicitly performs chcp 65001):

$OutputEncoding = [Console]::InputEncoding = [Console]::OutputEncoding = New-Object System.Text.UTF8Encoding

To persist these settings, i.e., to make your future interactive PowerShell sessions UTF-8-aware by default, add the command above to your $PROFILE file.
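If you'd rather not edit $PROFILE by hand, a sketch like the following appends the command once, creating the profile file (and its directory) first if necessary. Add-Utf8ProfileLine is a hypothetical helper name for illustration, not a built-in cmdlet.

```powershell
# Hypothetical helper: appends the UTF-8 incantation to a profile file, once.
function Add-Utf8ProfileLine {
  param([string] $Path = $PROFILE)
  # Single quotes keep the variables unexpanded, so the literal command is written.
  $line = '$OutputEncoding = [Console]::InputEncoding = [Console]::OutputEncoding = New-Object System.Text.UTF8Encoding'
  if (-not (Test-Path -LiteralPath $Path)) {
    # -Force also creates missing parent directories.
    $null = New-Item -ItemType File -Path $Path -Force
  }
  # Only append if the exact line isn't present yet.
  if (-not (Select-String -LiteralPath $Path -SimpleMatch -Pattern $line -Quiet)) {
    Add-Content -LiteralPath $Path -Value $line
  }
}
```

Calling Add-Utf8ProfileLine without arguments targets $PROFILE, i.e., the current-user, current-host profile.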

Note: Recent versions of Windows 10 now allow setting the system locale to code page 65001 (UTF-8) (the feature is still in beta as of Windows 10 version 1903), which makes all console windows default to UTF-8, including Windows PowerShell's.

If you do use that feature, setting [Console]::InputEncoding / [Console]::OutputEncoding is then no longer strictly necessary, but you'll still have to set $OutputEncoding (which is not necessary in PowerShell Core, where $OutputEncoding already defaults to UTF-8).

Important:

These settings assume that any external utilities you communicate with expect UTF-8-encoded input and produce UTF-8 output.

- CLIs written in Node.js fulfill that criterion, for instance.

- Python scripts - if written with UTF-8 support in mind - can handle UTF-8 too (see this answer).

By contrast, these settings can break (older) utilities that only expect a single-byte encoding as implied by the system's legacy OEM code page.

- Up to Windows 8.1, this even included standard Windows utilities such as find.exe and findstr.exe, which have been fixed in Windows 10.

- See the bottom of this post for how to bypass this problem by switching to UTF-8 temporarily, on demand for invoking a given utility.

These settings apply to external programs only and are unrelated to the encodings that PowerShell's cmdlets use on output:

- See this answer for the default character encodings used by PowerShell cmdlets. In short: if you want cmdlets in Windows PowerShell to default to UTF-8 (which PowerShell [Core] v6+ does anyway), add $PSDefaultParameterValues['*:Encoding'] = 'utf8' to your $PROFILE. Note, however, that this affects all calls to cmdlets with an -Encoding parameter in your sessions, unless that parameter is used explicitly. Also note that in Windows PowerShell you'll invariably get UTF-8 files with a BOM; conversely, in PowerShell [Core] v6+, which defaults to BOM-less UTF-8 (both in the absence of -Encoding and with -Encoding utf8), you'd have to use 'utf8BOM' to get a BOM.
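To see the BOM difference for yourself, you can write a file with -Encoding utf8 and inspect its first three bytes (EF BB BF is the UTF-8 BOM). The temporary file name is arbitrary. Windows PowerShell should report a BOM, PowerShell 7+ should not.

```powershell
# Write a non-ASCII string (EURO SIGN) with -Encoding utf8 to a temporary file.
$path = Join-Path ([IO.Path]::GetTempPath()) 'utf8-bom-demo.txt'
"$([char] 0x20AC)" | Set-Content -LiteralPath $path -Encoding utf8
# Inspect the raw bytes: a UTF-8 BOM is the byte sequence EF BB BF.
$bytes = [IO.File]::ReadAllBytes($path)
$hasBom = $bytes.Length -ge 3 -and $bytes[0] -eq 0xEF -and $bytes[1] -eq 0xBB -and $bytes[2] -eq 0xBF
"PowerShell $($PSVersionTable.PSVersion.Major): BOM present: $hasBom"
Remove-Item -LiteralPath $path
```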

Optional background information

Tip of the hat to eryksun for all his input.

While a TrueType font is active, the console-window buffer correctly preserves (non-ASCII) Unicode characters even if they don't render correctly; that is, even though they may appear generically as ?, indicating lack of support by the current font, you can copy & paste such characters elsewhere without loss of information, as eryksun notes.
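To find out which characters a string actually contains when the console only shows ?, you can list its code points, as in this minimal sketch. The sample characters are arbitrary; note that the loop enumerates UTF-16 code units, so a character outside the BMP shows up as two surrogate values.

```powershell
# Build a sample string: EURO SIGN (U+20AC) and a CJK ideograph (U+4E2D).
$s = -join [char[]] (0x20AC, 0x4E2D)
# Format each UTF-16 code unit as U+XXXX.
$codePoints = foreach ($c in $s.ToCharArray()) { 'U+{0:X4}' -f [int] $c }
$codePoints -join ' '   # -> U+20AC U+4E2D
```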

PowerShell is capable of outputting Unicode characters to the console even without having switched to code page 65001 first.

However, that by itself does not guarantee that other programs can handle such output correctly - see below.

When it comes to sending data to external programs via the pipeline (i.e., to their stdin), PowerShell uses the character encoding specified in the $OutputEncoding preference variable, which defaults to ASCII(!) in Windows PowerShell. This means that any non-ASCII characters are replaced with literal ? characters, resulting in information loss. (By contrast, commendably, PowerShell Core (v6+) now consistently uses (BOM-less) UTF-8 as the default encoding.)
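The information loss can be reproduced without any external program by round-tripping a string through an ASCII encoding, such as the one Windows PowerShell uses by default, and, for comparison, through UTF-8. This is a simulation sketch; nothing is actually piped to another process.

```powershell
# "Größe €": contains three non-ASCII characters.
$text = "Gr$([char] 0x00F6)$([char] 0x00DF)e $([char] 0x20AC)"
# ASCII encoding replaces every non-ASCII character with '?'.
$ascii = [Text.Encoding]::ASCII
$lossy = $ascii.GetString($ascii.GetBytes($text))
# UTF-8 round-trips the string without loss.
$utf8 = [Text.Encoding]::UTF8
$lossless = $utf8.GetString($utf8.GetBytes($text))
$lossy               # -> Gr??e ?
$lossless -ceq $text # -> True
```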

- By contrast, however, passing non-ASCII arguments (rather than piped output) to external programs seems to require no special configuration (it is unclear to me why that works); e.g., the following Node.js command correctly returns €: 1 even with the default configuration:

node -pe "process.argv[1] + ': ' + process.argv[1].length" €

[Console]::OutputEncoding:

- controls what character encoding is assumed when the console translates program output into console display characters.

- also tells PowerShell what encoding to assume when capturing output from an external program.

The upshot is that if you need to capture output from a UTF-8-producing program, you need to set [Console]::OutputEncoding to UTF-8 as well; setting $OutputEncoding only covers the input (to the external program) aspect.
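The effect of a mismatched [Console]::OutputEncoding can be simulated by decoding the bytes that a UTF-8 program would emit with a single-byte encoding instead. This sketch uses ISO-8859-1 (code page 28591) purely as a stand-in for a legacy OEM code page such as 437, because it is built into every .NET version without extra setup.

```powershell
# Bytes a UTF-8-producing program would emit for the EURO SIGN: E2 82 AC.
$programOutput = [Text.Encoding]::UTF8.GetBytes("$([char] 0x20AC)")
# Decoding with a single-byte encoding turns each byte into its own character ...
$wrong = [Text.Encoding]::GetEncoding(28591).GetString($programOutput)
# ... whereas decoding with UTF-8 recovers the single original character.
$right = [Text.Encoding]::UTF8.GetString($programOutput)
"wrong: $($wrong.Length) chars, right: $($right.Length) char"   # -> wrong: 3 chars, right: 1 char
```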

[Console]::InputEncoding sets the encoding for keyboard input into a console[2] and also determines how PowerShell's CLI interprets data it receives via stdin (standard input).

If switching the console to UTF-8 for the entire session is not an option, you can do so temporarily, for a given call:

# Save the current settings and temporarily switch to UTF-8.
$oldOutputEncoding = $OutputEncoding; $oldConsoleEncoding = [Console]::OutputEncoding
$OutputEncoding = [Console]::OutputEncoding = New-Object System.Text.Utf8Encoding

# Call the UTF-8 program, using Node.js as an example.
# This should echo '€' (`U+20AC`) as-is and report the length as *1*.
$captured = '€' | node -pe "require('fs').readFileSync(0).toString().trim()"
$captured; $captured.Length

# Restore the previous settings.
$OutputEncoding = $oldOutputEncoding; [Console]::OutputEncoding = $oldConsoleEncoding
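The save/switch/restore steps above can also be packaged as a reusable function that restores the previous settings in a finally block, so they are reinstated even if the call fails. Invoke-WithUtf8 is a hypothetical name for illustration; the sketch assumes that setting $OutputEncoding in the global scope suffices, i.e., that no script-level $OutputEncoding variable shadows it.

```powershell
# Hypothetical helper: runs a script block with UTF-8 in effect for external programs.
function Invoke-WithUtf8 {
  param([Parameter(Mandatory)] [scriptblock] $ScriptBlock)
  # Save the current settings.
  $prevOutputEncoding  = $global:OutputEncoding
  $prevConsoleEncoding = [Console]::OutputEncoding
  $utf8 = New-Object System.Text.UTF8Encoding   # BOM-less UTF-8
  try {
    $global:OutputEncoding = $utf8
    [Console]::OutputEncoding = $utf8
    & $ScriptBlock
  }
  finally {
    # Restore the previous settings, even if the script block threw.
    $global:OutputEncoding = $prevOutputEncoding
    [Console]::OutputEncoding = $prevConsoleEncoding
  }
}
```

Usage, mirroring the Node.js example: $captured = Invoke-WithUtf8 { '€' | node -pe "require('fs').readFileSync(0).toString().trim()" }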

Problems on older versions of Windows (pre-W10):

- An active chcp value of 65001 breaking the console output of some external programs, and even batch files in general, in older versions of Windows may ultimately have stemmed from a bug in the WriteFile() Windows API function (as also used by the standard C library), which mistakenly reported the number of characters rather than bytes with code page 65001 in effect, as discussed in this blog post.

The resulting symptoms, according to a comment by bobince on this answer from 2008, are: "My understanding is that calls that return a number-of-bytes (such as fread/fwrite/etc) actually return a number-of-characters. This causes a wide variety of symptoms, such as incomplete input-reading, hangs in fflush, the broken batch files and so on."
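To see why mixing up characters and bytes matters under code page 65001, compare the character and byte counts for a short non-ASCII string: a caller that took a character count to be a byte count would conclude that fewer bytes had been written than actually were. This only illustrates the arithmetic; it is not a reproduction of the WriteFile() bug.

```powershell
# "Grüße €": 7 characters, but several of them need more than one byte in UTF-8.
$text = "Gr$([char] 0x00FC)$([char] 0x00DF)e $([char] 0x20AC)"
$charCount = $text.Length                               # UTF-16 code units
$byteCount = [Text.Encoding]::UTF8.GetByteCount($text)  # bytes in UTF-8
"$charCount characters, $byteCount bytes"               # -> 7 characters, 11 bytes
```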

Superior alternatives to the native Windows console (terminal), conhost.exe

eryksun suggests two alternatives to the native Windows console windows (conhost.exe) that provide better and faster Unicode character rendering, because they use the modern, GPU-accelerated DirectWrite/DirectX API instead of the "old GDI implementation [that] cannot handle complex scripts, non-BMP characters, or automatic fallback fonts."

Microsoft's own, open-source Windows Terminal, which is distributed and updated via the Microsoft Store since Windows 10 - see here for an introduction.

Long-established third-party alternative ConEmu, which has the advantage of working on older Windows versions too.

[1] Note that running chcp 65001 from inside a PowerShell session is not effective, because .NET caches the console's output encoding on startup and is unaware of later changes made with chcp (only changes made directly via [Console]::OutputEncoding are picked up).

[2] I am unclear on how that manifests in practice; do tell us, if you know.