I am trying to write a function that converts subtitle files so that each subtitle contains exactly one sentence.

My idea is the following:

1. For each subtitle:
    1. Get the subtitle duration.
    2. Calculate the characters per second.
    3. Use this to store (in dict_times_word_subtitle) the time it takes to speak each word i.
2. Extract the sentences from the entire text.
3. For each sentence:
    1. Store (in dict_times_sentences_subtitle) the sentence together with the time it takes to speak its words (from which the duration of the sentence follows).
4. Create a new SRT (subtitle) file that starts at the same time as the original one, with each subtitle's timing taken from the time it takes to speak its sentence.

For now, I have written the following code:

```python
#---------------------------------------------------------
import pysrt
import re
from datetime import datetime, date
#---------------------------------------------------------

def convert_subtitle_one_sentence(file_name):

    sub = pysrt.open(file_name)

    ### ----------------------------------------------------------------------
    ### Store Each Word and the Average Time it Takes to Say it in a dictionary
    ### ----------------------------------------------------------------------

    dict_times_word_subtitle = {}
    running_variable = 0

    for i in range(len(sub)):
        subtitle_text = sub[i].text
        subtitle_duration = (datetime.combine(date.min, sub[i].duration.to_time()) - datetime.min).total_seconds()

        # Compute characters per second
        characters_per_second = len(subtitle_text)/subtitle_duration

        # Store each word and the average time (seconds) it takes to say it
        # (word_time instead of time, so the name does not shadow datetime.time)
        for j, word in enumerate(subtitle_text.split()):
            if j == len(subtitle_text.split())-1:
                word_time = len(word)/characters_per_second
            else:
                word_time = len(word+" ")/characters_per_second

            dict_times_word_subtitle[str(running_variable)] = [word, word_time]
            running_variable += 1

    ### ----------------------------------------------------------------------
    ### Store Each Sentence and the Average Time to Say it in a Dictionary
    ### ----------------------------------------------------------------------

    total_number_of_words = len(dict_times_word_subtitle.keys())

    # Get the entire text
    entire_text = ""
    for i in range(total_number_of_words):
        entire_text += dict_times_word_subtitle[str(i)][0] + " "

    # Initialize the dictionary
    dict_times_sentences_subtitle = {}

    # Loop through all found sentences
    last_number_of_words = 0
    for i, sentence in enumerate(re.findall(r'([A-Z][^\.!?]*[\.!?])', entire_text)):
        number_of_words = len(sentence.split())

        # Compute the time it takes to speak the sentence
        time_sentence = 0
        for j in range(last_number_of_words, last_number_of_words + number_of_words):
            time_sentence += dict_times_word_subtitle[str(j)][1]

        # Store the sentence together with the time it takes to say the sentence
        dict_times_sentences_subtitle[str(i)] = [sentence, round(time_sentence, 3)]

        ## Update last number_of_words
        last_number_of_words += number_of_words

    # Check if there are words left after the last sentence
    # (j is the index of the last word used, so words remain only if j + 1 < total)
    if j + 1 < total_number_of_words:
        remaining_string = ""
        remaining_string_time = 0
        for k in range(j+1, total_number_of_words):
            remaining_string += dict_times_word_subtitle[str(k)][0] + " "
            remaining_string_time += dict_times_word_subtitle[str(k)][1]

        dict_times_sentences_subtitle[str(i+1)] = [remaining_string, remaining_string_time]

    ### ----------------------------------------------------------------------
    ### Create a new Subtitle file with only 1 sentence at a time
    ### ----------------------------------------------------------------------

    # Initialize new srt file
    new_srt = pysrt.SubRipFile()

    # Get initial start time (seconds)
    # https://mcmap.net/q/467647/-convert-datetime-time-to-seconds
    start_time = (datetime.combine(date.min, sub[0].start.to_time()) - datetime.min).total_seconds()

    # Loop through all sentences
    for i in range(len(dict_times_sentences_subtitle.keys())):
        sentence = dict_times_sentences_subtitle[str(i)][0]
        print(sentence)
        time_sentence = dict_times_sentences_subtitle[str(i)][1]
        print(time_sentence)

        item = pysrt.SubRipItem(
            index=i,
            start=pysrt.SubRipTime(seconds=start_time),
            end=pysrt.SubRipTime(seconds=start_time+time_sentence),
            text=sentence)

        new_srt.append(item)

        ## Update Start Time
        start_time += time_sentence

    new_srt.save(file_name)
```

The issue:

There are no error messages, but when I apply this to real subtitle files and watch the video, the subtitles start out correctly aligned and then drift further and further from what is actually being said as the video progresses (the error accumulates).

For example, the speaker has already finished the talk, but subtitles keep appearing.
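One thing worth checking (an assumption, not verified against the full files): the new file's timeline is rebuilt as the first subtitle's start time plus a running sum of durations. Any gap between consecutive original subtitles is therefore dropped, and any overlap (like subtitles 4 and 5 in Edit 3) is counted twice, so the error adds up over the video. The helper name cumulative_drift is hypothetical, and cues are plain (start, end) tuples in seconds rather than pysrt objects:

```python
from typing import List, Tuple

def cumulative_drift(cues: List[Tuple[float, float]]) -> List[float]:
    """For each cue, return rebuilt_start - real_start in seconds, where
    rebuilt_start = first start + sum of all previous durations.
    Negative: the rebuilt timeline runs early; positive: it runs late."""
    if not cues:
        return []
    drift = []
    rebuilt_start = cues[0][0]
    for start, end in cues:
        drift.append(rebuilt_start - start)
        rebuilt_start += end - start  # only the duration is carried forward
    return drift

# 0.5 s gaps between cues: each gap pulls the rebuilt timeline 0.5 s earlier.
print(cumulative_drift([(0.0, 2.0), (2.5, 4.0), (4.5, 7.0)]))  # [0.0, -0.5, -1.0]

# Overlapping cues 4 and 5 from Edit 3: the rebuilt cue 5 starts about 1.049 s late.
print(cumulative_drift([(18.856, 25.904), (24.855, 30.431)]))
```

In the short test file below, every subtitle ends exactly where the next one starts, so this effect is zero there; it would only show up in files with gaps or overlaps.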

A simple example to test:

```python
srt = """
1
00:00:13,100 --> 00:00:14,750
Dr. Martin Luther King, Jr.,

2
00:00:14,750 --> 00:00:18,636
in a 1968 speech where he reflects
upon the Civil Rights Movement,

3
00:00:18,636 --> 00:00:21,330
states, "In the end,

4
00:00:21,330 --> 00:00:24,413
we will remember not the words of our enemies

5
00:00:24,413 --> 00:00:27,280
but the silence of our friends."

6
00:00:27,280 --> 00:00:29,800
As a teacher, I've internalized this message.
"""

with open('test.srt', "w") as file:
    file.write(srt)

convert_subtitle_one_sentence("test.srt")
```

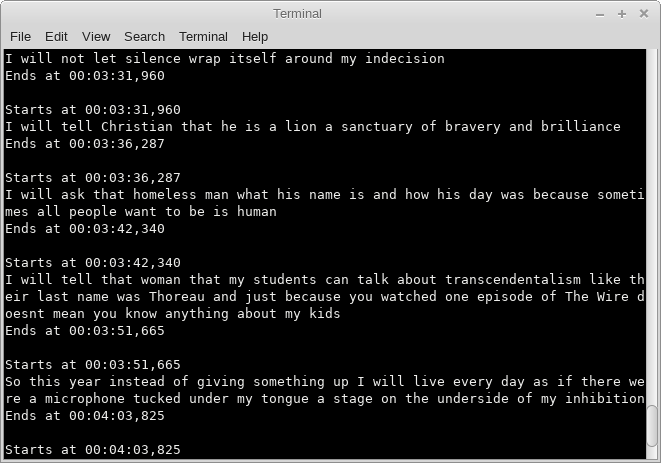

The output looks like this (yes, there is still some work to do on the sentence recognition part, e.g. "Dr."):

```
0
00:00:13,100 --> 00:00:13,336
Dr.

1
00:00:13,336 --> 00:00:14,750
Martin Luther King, Jr.

2
00:00:14,750 --> 00:00:23,514
Civil Rights Movement, states, "In the end, we will remember not the words of our enemies but the silence of our friends.

3
00:00:23,514 --> 00:00:26,175
As a teacher, I've internalized this message.

4
00:00:26,175 --> 00:00:29,859
our friends." As a teacher, I've internalized this message.
```

As you can see, the original last timestamp is 00:00:29,800, whereas in the output file it is 00:00:29,859. This may not seem like much at first, but the difference grows as the video gets longer.
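The repeated text in the last output block ("our friends." As a teacher, ...) points to a second source of misalignment: re.findall only returns text that starts with a capital letter, so words between one match and the next capital (here "in a 1968 speech where he reflects upon the") are silently skipped, while last_number_of_words advances only by the matched words. Every later sentence then sums the durations of the wrong words. This sketch just counts the skipped words; the function name skipped_word_count is hypothetical:

```python
import re

# Same sentence pattern as in convert_subtitle_one_sentence.
SENTENCE_PATTERN = r'([A-Z][^\.!?]*[\.!?])'

def skipped_word_count(entire_text: str) -> int:
    """Number of words in entire_text not covered by any sentence match."""
    matched = sum(len(s.split()) for s in re.findall(SENTENCE_PATTERN, entire_text))
    return len(entire_text.split()) - matched

entire_text = ('Dr. Martin Luther King, Jr., in a 1968 speech where he reflects '
               'upon the Civil Rights Movement, states, "In the end, we will '
               'remember not the words of our enemies but the silence of our '
               'friends." As a teacher, I\'ve internalized this message. ')
print(skipped_word_count(entire_text))  # 9
```

The 9 skipped words are also why the final "remaining" block repeats the last 9 words of the text: the word index lags 9 words behind when the sentence loop ends.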

The full sample video can be downloaded here: https://ufile.io/19nuvqb3

The full subtitle file: https://ufile.io/qracb7ai

Attention: the subtitle file will be overwritten, so you might want to store a copy under another name to be able to compare.

How it could be fixed:

The exact times of the words that start or end each original subtitle are known. These could be used as anchors to cross-check and adjust the timing accordingly.
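A minimal sketch of that idea, under these assumptions: cues are plain (start_seconds, end_seconds, text) tuples rather than pysrt objects; each word gets a start and end by interpolating by character position inside its own cue only; and a new subtitle runs from the start of its first word to the end of its last word. Because no durations are summed across cues, gaps and overlaps between the original subtitles cannot accumulate into drift. The sentence test (a word ending in ".", "!" or "?", optionally followed by a closing quote or bracket) and the ABBREVIATIONS set are hypothetical simplifications, not a full sentence splitter:

```python
import re
from typing import List, Tuple

Cue = Tuple[float, float, str]  # (start_seconds, end_seconds, text)

# Hypothetical, deliberately small set of words that end in "." without ending a sentence.
ABBREVIATIONS = {"Dr.", "Mr.", "Mrs.", "Ms.", "Jr.", "Sr.", "St."}
# A word ends a sentence if it ends in . ! or ? plus optional closing quotes/brackets.
SENTENCE_END = re.compile(r'[.!?]["\')\]]*$')

def word_timings(cues: List[Cue]) -> List[Tuple[str, float, float]]:
    """Split every cue into words and interpolate each word's start/end
    by character position inside that cue only."""
    words = []
    for start, end, text in cues:
        tokens = text.split()
        total_chars = len(" ".join(tokens))
        if total_chars == 0:
            continue
        seconds_per_char = (end - start) / total_chars
        offset = 0  # characters before the current word, spaces included
        for token in tokens:
            w_start = start + offset * seconds_per_char
            w_end = start + (offset + len(token)) * seconds_per_char
            words.append((token, w_start, w_end))
            offset += len(token) + 1  # + 1 for the separating space
    return words

def one_sentence_per_cue(cues: List[Cue]) -> List[Cue]:
    """Regroup words into sentences; each sentence is timed from the start
    of its first word to the end of its last word."""
    result = []
    current = []
    for word, w_start, w_end in word_timings(cues):
        current.append((word, w_start, w_end))
        if SENTENCE_END.search(word) and word not in ABBREVIATIONS:
            result.append((current[0][1], current[-1][2], " ".join(w for w, _, _ in current)))
            current = []
    if current:  # trailing text without sentence-ending punctuation
        result.append((current[0][1], current[-1][2], " ".join(w for w, _, _ in current)))
    return result

cues = [
    (13.100, 14.750, "Dr. Martin Luther King, Jr.,"),
    (14.750, 18.636, "in a 1968 speech where he reflects\nupon the Civil Rights Movement,"),
    (18.636, 21.330, 'states, "In the end,'),
    (21.330, 24.413, "we will remember not the words of our enemies"),
    (24.413, 27.280, 'but the silence of our friends."'),
    (27.280, 29.800, "As a teacher, I've internalized this message."),
]
for start, end, text in one_sentence_per_cue(cues):
    print(f"{start:.3f} --> {end:.3f}  {text}")
```

On the test subtitles this gives two subtitles, 13.100 --> 27.280 and 27.280 --> 29.800, so the last one ends exactly where the original file ends. With pysrt, the tuples could presumably be built from item.start.ordinal / 1000, item.end.ordinal / 1000 and item.text (ordinal being the time in milliseconds), and the results written back with pysrt.SubRipItem as in the original code.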

Edit

Here is code that creates a dictionary storing, for each character, the character itself, its duration (averaged over the subtitle), and the original start or end timestamp if that character begins or ends a subtitle.

```python
import pysrt

sub = pysrt.open('video.srt')

running_variable = 0
dict_subtitle = {}

for i in range(len(sub)):
    # Extract start timestamp
    timestamp_start = sub[i].start
    # Extract text
    text = sub[i].text
    # Extract end timestamp
    timestamp_end = sub[i].end
    # Extract characters per second
    characters_per_second = sub[i].characters_per_second

    # Fill dictionary: [character, duration (s), start timestamp or False, end timestamp or False]
    normalized_text = " ".join(text.split())
    for j, character in enumerate(normalized_text):
        # Seconds per character is 1 / characters per second (guard against zero-length cues)
        character_duration = 1 / characters_per_second if characters_per_second else 0.0
        dict_subtitle[str(running_variable)] = [
            character,
            character_duration,
            timestamp_start if j == 0 else False,
            timestamp_end if j == len(normalized_text) - 1 else False,
        ]
        running_variable += 1
```

More videos to try

Here you may download more videos and their respective subtitle files: https://filebin.net/kwygjffdlfi62pjs

Edit 3

4
00:00:18,856 --> 00:00:25,904
Je rappelle la définition de ce qu'est un produit scalaire, <i>dot product</i> dans <i>Ⅎ</i>.

5
00:00:24,855 --> 00:00:30,431
Donc je prends deux vecteurs dans <i>Ⅎ</i> et je définis cette opération-là , linéaire, <i>u