I am working on an application that combines multiple videos with a background audio track. It also needs to set a different audio level for each video.

Following is the code for the AssetItem and AssetManager classes:

// AssetItem Class

class AssetItem : NSObject {

var asset : Asset!

var assetEffect : AssetEffectType! // Enum

var assetSceneType : SceneType! // Enum

var videoLength : CMTime!

var animationLayer : AnyObject?

var volumeOfVideoVoice : Float = 0.0

var volumeOfBGMusic : Float = 0.0

override init() {

super.init()

}

}

// AssetManager Class implementation

class AssetManager : NSObject {

var assetsList = [AssetItem]()

var composition : AVMutableComposition! = AVMutableComposition()

var videoComposition : AVMutableVideoComposition? = AVMutableVideoComposition()

var audioMix : AVMutableAudioMix = AVMutableAudioMix()

var transitionDuration = CMTimeMakeWithSeconds(1, 600) // Default transitionDuration is 1 sec

var compositionTimeRanges : [NSValue] = [NSValue]()

var passThroughTimeRangeValue : [NSValue] = [NSValue]()

var transitionTimeRangeValue : [NSValue] = [NSValue]()

var videoTracks = [AVMutableCompositionTrack]()

var audioTracks = [AVMutableCompositionTrack]()

// MARK: - Constructor

override init() {

super.init()

let compositionTrackA = self.composition.addMutableTrackWithMediaType(AVMediaTypeVideo, preferredTrackID: CMPersistentTrackID(kCMPersistentTrackID_Invalid))

let compositionTrackB = self.composition.addMutableTrackWithMediaType(AVMediaTypeVideo, preferredTrackID: CMPersistentTrackID(kCMPersistentTrackID_Invalid))

let compositionTrackAudioA = self.composition.addMutableTrackWithMediaType(AVMediaTypeAudio, preferredTrackID: CMPersistentTrackID(kCMPersistentTrackID_Invalid))

let compositionTrackAudioB = self.composition.addMutableTrackWithMediaType(AVMediaTypeAudio, preferredTrackID: CMPersistentTrackID(kCMPersistentTrackID_Invalid))

self.videoTracks = [compositionTrackA, compositionTrackB]

self.audioTracks = [compositionTrackAudioA, compositionTrackAudioB]

}

func buildCompositionTrack(forExport : Bool ){

// This is the Method to Build Compositions

}

}

Following is the method for building the composition:

func buildCompositionTrack(forExport : Bool) {

var cursorTime = kCMTimeZero

var transitionDurationForEffect = kCMTimeZero

// Create a mutable composition instructions object

var videoCompositionInstructions = [AVMutableVideoCompositionInstruction]()

var audioMixInputParameters = [AVMutableAudioMixInputParameters]()

let timeRanges = calculateTimeRangeForAssetLayer()

self.passThroughTimeRangeValue = timeRanges.passThroughTimeRangeValue

self.transitionTimeRangeValue = timeRanges.transitionTimeRangeValue

let defaultMuteSoundTrackURL: NSURL = bundle.URLForResource("30sec", withExtension: "mp3")!

let muteSoundTrackAsset = AVURLAsset(URL: defaultMuteSoundTrackURL, options: nil)

let muteSoundTrack = muteSoundTrackAsset.tracksWithMediaType(AVMediaTypeAudio)[0]

for (index,assetItem) in self.assetsList.enumerate() {

let trackIndex = index % 2

let assetVideoTrack = assetItem.asset.movieAsset.tracksWithMediaType(AVMediaTypeVideo)[0]

let timeRange = CMTimeRangeMake(kCMTimeZero, assetItem.videoLength)

do {

try self.videoTracks[trackIndex].insertTimeRange(timeRange, ofTrack: assetVideoTrack, atTime: cursorTime)

} catch let error1 as NSError {

error = error1

}

if error != nil {

print("Error: buildCompositionTracks for video with index: \(index), video count: \(self.assetsList.count), error: \(error?.description)")

error = nil

}

if assetItem.asset.movieAsset.tracksWithMediaType(AVMediaTypeAudio).count > 0 {

let clipAudioTrack = assetItem.asset.movieAsset.tracksWithMediaType(AVMediaTypeAudio)[0]

do {

try audioTracks[trackIndex].insertTimeRange(timeRange, ofTrack: clipAudioTrack, atTime: cursorTime)

} catch let error1 as NSError {

error = error1

}

}else {

do {

try audioTracks[trackIndex].insertTimeRange(timeRange, ofTrack: muteSoundTrack, atTime: cursorTime)

}catch let error1 as NSError {

error = error1

}

}

// The end of this clip will overlap the start of the next by transitionDuration.

// (Note: this arithmetic falls apart if timeRangeInAsset.duration < 2 * transitionDuration.)

if assetItem.assetEffect == FLIXAssetEffectType.Default {

transitionDurationForEffect = kCMTimeZero

let timeRange = CMTimeRangeMake(cursorTime, assetItem.videoLength)

self.compositionTimeRanges.append(NSValue(CMTimeRange: timeRange))

cursorTime = CMTimeAdd(cursorTime, assetItem.videoLength)

} else {

transitionDurationForEffect = self.transitionDuration

let timeRange = CMTimeRangeMake(cursorTime, CMTimeSubtract(assetItem.videoLength, transitionDurationForEffect))

self.compositionTimeRanges.append(NSValue(CMTimeRange: timeRange))

cursorTime = CMTimeAdd(cursorTime, assetItem.videoLength)

cursorTime = CMTimeSubtract(cursorTime, transitionDurationForEffect)

}

videoCompositionInstructions.appendContentsOf(self.buildCompositionInstructions( index, assetItem : assetItem))

}

if self.project.hasProjectMusicTrack() && self.backgroundMusicTrack != nil {

let url: NSURL = bundle.URLForResource("Music9", withExtension: "mp3")!

bgMusicSound = AVURLAsset(URL: url, options: nil)

backgroundAudioTrack = bgMusicSound.tracksWithMediaType(AVMediaTypeAudio)[0]

let compositionBackgroundTrack = self.composition.addMutableTrackWithMediaType(AVMediaTypeAudio, preferredTrackID: CMPersistentTrackID(kCMPersistentTrackID_Invalid))

let soundDuration = CMTimeCompare(bgMusicSound.duration, self.composition.duration)

if soundDuration == -1 {

let bgMusicSoundTimeRange = CMTimeRangeMake(kCMTimeZero, bgMusicSound.duration)

let noOftimes = Int(CMTimeGetSeconds(self.composition.duration) / CMTimeGetSeconds(bgMusicSound.duration))

let remainingTime = CMTimeGetSeconds(self.composition.duration) % CMTimeGetSeconds(bgMusicSound.duration)

var musicCursorTime = kCMTimeZero

for _ in 0..<noOftimes {

do {

try compositionBackgroundTrack.insertTimeRange(bgMusicSoundTimeRange, ofTrack: backgroundAudioTrack, atTime: musicCursorTime)

} catch let error1 as NSError {

error = error1

}

musicCursorTime = CMTimeAdd(bgMusicSound.duration, musicCursorTime)

}

}

let backgroundMusciMixInputParameters = AVMutableAudioMixInputParameters(track: compositionBackgroundTrack)

backgroundMusciMixInputParameters.trackID = compositionBackgroundTrack.trackID

// setting up music levels for background music track.

for index in 0 ..< Int(self.compositionTimeRanges.count) {

let timeRange = self.compositionTimeRanges[index].CMTimeRangeValue

let scene = self.assetsList[index].assetSceneType

let volumeOfBGMusic = self.assetsList[index].volumeOfBGMusic

var nextvolumeOfBGMusic : Float = 0.0

if let nextAsset = self.assetsList[safe: index + 1] {

nextvolumeOfBGMusic = nextAsset.volumeOfBGMusic

}

backgroundMusciMixInputParameters.setVolume(volumeOfBGMusic, atTime: timeRange.start)

backgroundMusciMixInputParameters.setVolumeRampFromStartVolume(volumeOfBGMusic, toEndVolume: nextvolumeOfBGMusic, timeRange: CMTimeRangeMake(CMTimeSubtract(timeRange.end,CMTimeMake(2, 1)), CMTimeMake(2, 1)))

}

audioMixInputParameters.append(backgroundMusciMixInputParameters)

} // End of If for ProjectMusic Check

for (index, assetItem) in self.assetsList.enumerate(){

let trackIndex = index % 2

let timeRange = self.compositionTimeRanges[index].CMTimeRangeValue

let sceneType = assetItem.assetSceneType

let volumnOfVideoMusic = assetItem.volumeOfVideoVoice

let audioTrackParamater = AVMutableAudioMixInputParameters(track: self.audioTracks[trackIndex])

audioTrackParamater.trackID = self.audioTracks[trackIndex].trackID

audioTrackParamater.setVolume(0.0, atTime: kCMTimeZero ) // Statement 1

audioTrackParamater.setVolume(volumnOfVideoMusic, atTime: timeRange.start) // Statement 2

audioTrackParamater.setVolume(0.0, atTime: timeRange.end) // Statement 3

audioMixInputParameters.append(audioTrackParamater)

}

self.audioMix.inputParameters = audioMixInputParameters

self.composition.naturalSize = self.videoRenderSize

self.videoComposition!.instructions = videoCompositionInstructions

self.videoComposition!.renderSize = self.videoRenderSize

self.videoComposition!.frameDuration = CMTimeMake(1, 30)

self.videoComposition!.renderScale = 1.0 // This is an iPhone-only option.

}
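
To make the timeline arithmetic in the method above easier to follow, here is a minimal plain-Swift sketch of it. It is not part of the app: `Clip`, `Placement`, and `placements(for:)` are hypothetical names, plain `Double` seconds stand in for `CMTime`, the clip lengths are made up, and it uses current Swift syntax rather than the Swift 2 syntax of the code above. It only mirrors how `cursorTime`, `compositionTimeRanges`, and `trackIndex` are derived for each clip.

```swift
// Plain-Swift sketch: seconds as Double stand in for CMTime.
struct Clip {
    let length: Double        // clip duration in seconds (made-up values below)
    let hasTransition: Bool   // false corresponds to the Default effect
}

struct Placement {
    let trackIndex: Int  // which of the two alternating tracks receives the clip
    let start: Double    // insertion point of the clip (cursorTime)
    let end: Double      // end of the range stored in compositionTimeRanges
}

func placements(for clips: [Clip], transition: Double = 1.0) -> [Placement] {
    var cursor = 0.0
    var result = [Placement]()
    for (index, clip) in clips.enumerated() {
        // Clips with a transition effect overlap the next clip by `transition`.
        let overlap = clip.hasTransition ? transition : 0.0
        // The stored range is shortened by the overlap, as in the loop above.
        let visible = clip.length - overlap
        result.append(Placement(trackIndex: index % 2,
                                start: cursor,
                                end: cursor + visible))
        cursor += visible
    }
    return result
}

let demo = placements(for: [
    Clip(length: 5, hasTransition: true),
    Clip(length: 4, hasTransition: true),
    Clip(length: 6, hasTransition: false),
])
for p in demo {
    print("track \(p.trackIndex): \(p.start) ..< \(p.end)")
}
```

With three clips, the first and third both land on track 0, so the per-clip audio loop further down builds more than one set of input parameters for that same track.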

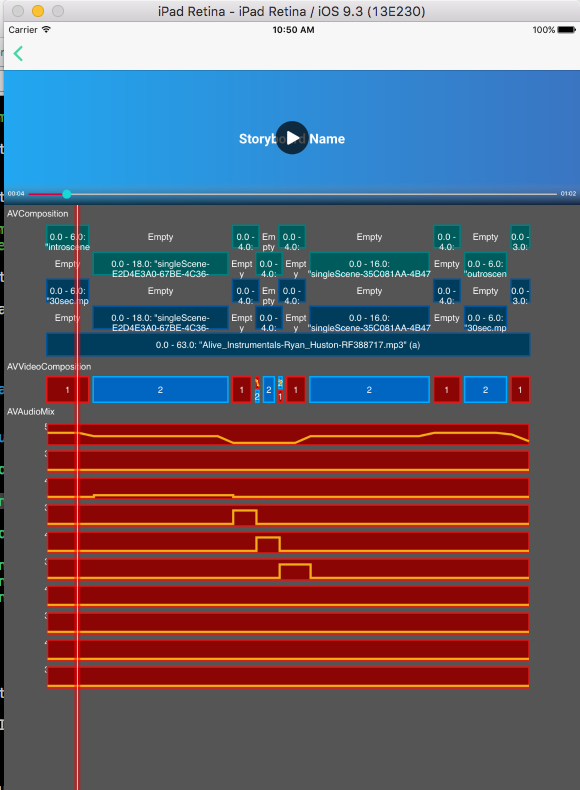

In the code above, the background music levels are set properly, but something goes wrong with the audio levels of the video tracks. I added a debug view to help debug the composition, and everything looks correct there, but apart from the background music track, the audio of the videos is no longer audible. Is there something I am doing wrong?

If I remove Statement 1 from the code above, the video audio becomes audible, but every clip plays at level 1.0 and does not respect the levels I set.