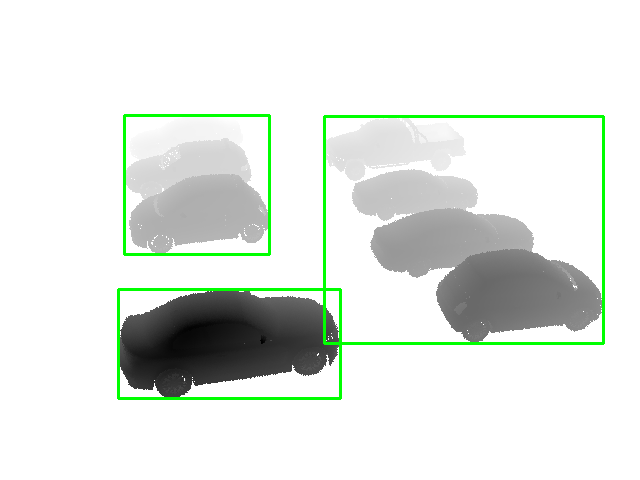

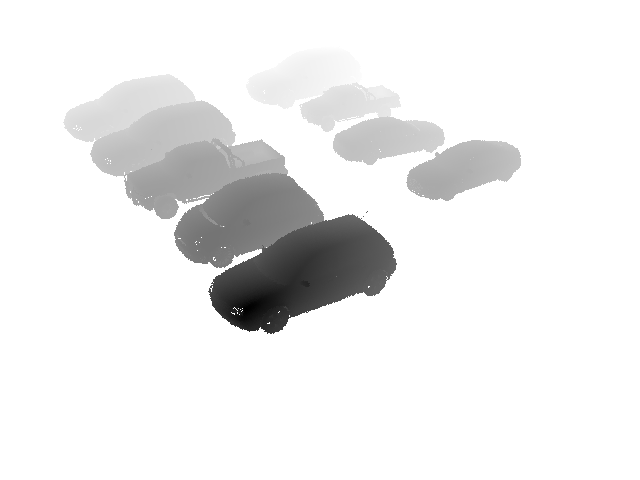

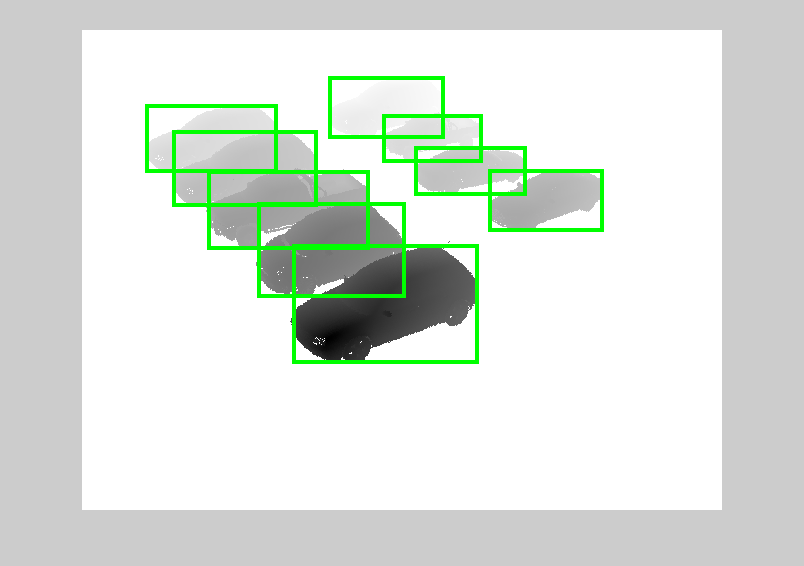

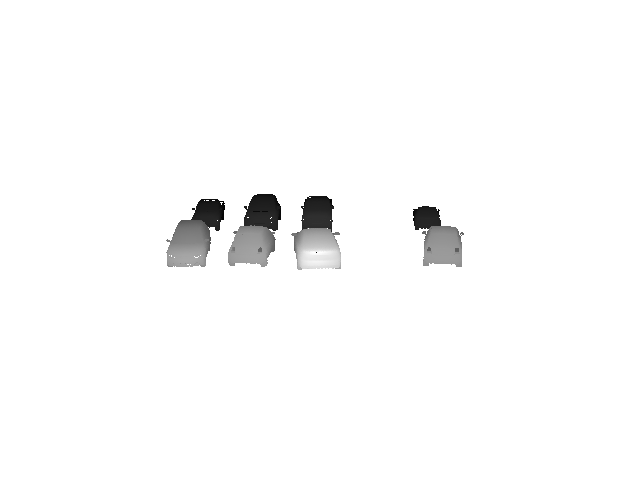

I have a picture like the one above, which I need to segment into 8 blocks.


I have tried this thresholding approach:

img_gray = cv2.imread(input_file,cv2.IMREAD_GRAYSCALE)

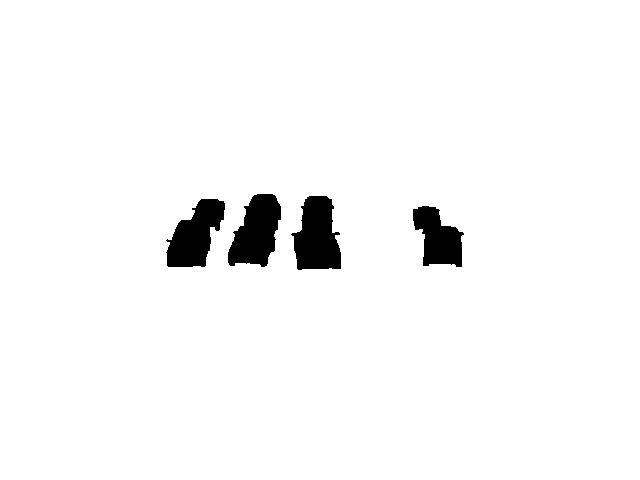

ret,thresh = cv2.threshold(img_gray,254,255,cv2.THRESH_BINARY) =

kernel = np.array(cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3), (-1, -1)))

img_open = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel)

cv2.imshow('abc',img_open)

ret1,thresh1 = cv2.threshold(img_open,254,255,cv2.THRESH_BINARY_INV) #

contours, hierarchy = cv2.findContours(thresh1, cv2.RETR_CCOMP ,cv2.CHAIN_APPROX_NONE)

for i in range(len(contours)):

if len(contours[i]) > 20:

x, y, w, h = cv2.boundingRect(contours[i])

cv2.rectangle(img, (x, y), (x+w, y+h), (0, 255, 0), 2)

print (x, y),(x+w, y+h)

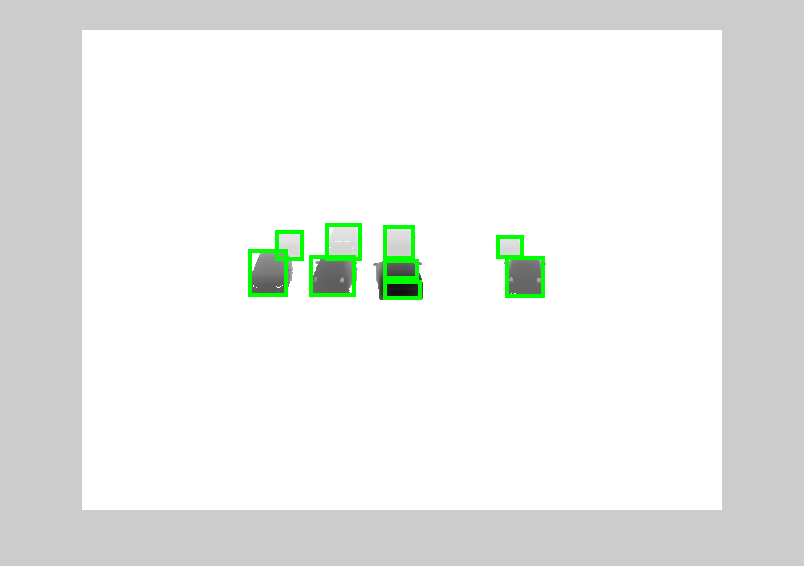

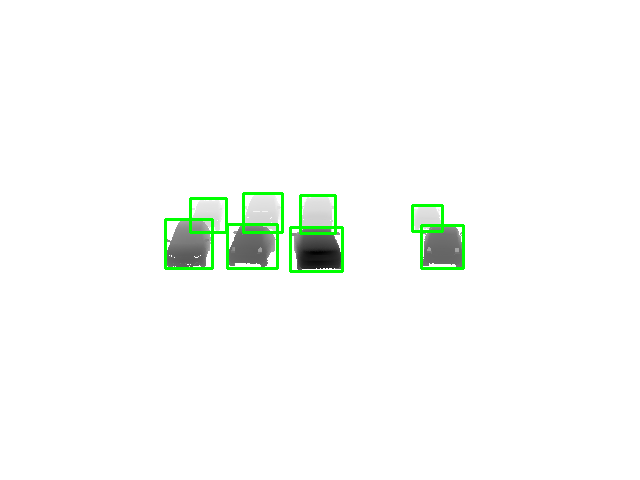

The result is that some adjacent blocks are merged into one large segment, which is not what I want.

Is there another way to get around this?


Instead of `cv2.THRESH_BINARY`, try `cv2.THRESH_TRUNC` to first omit the background, then use a binary comparison to separate the darker front row from the lighter rear row. Finally, retrieve the contours for both, combine them, and you should in theory end up with 8 regions. – Optometer