1. Short and to the point answer

XCM works fine for classification combined with Grad-CAM explanations, but consider using Grad-CAM with TSR (Temporal Saliency Rescaling) from the TSInterpret library for more reliable results.

2. Long and complete answer

I will broaden the answer to explainability for multivariate time series (MTS) classification in general. Grad-CAM is specific to CNNs and rather niche, so there may be better solutions for your needs. I can't help with regression at the moment, but I expect some of this information to carry over.

First, you should know that MTS classification is a hard problem; it often draws inspiration from image classification or object detection. Furthermore, explainable AI (XAI) is a relatively new research branch and is not well established yet. For instance, there is no exact definition of explainability, and there are no good evaluation metrics for explainability methods. The combination of the two, explainability for MTS classification, is not yet well investigated in the literature.

Before you do anything, try to reduce the number of features, or at least minimize the correlation between them: this makes the explanations more reliable.
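A minimal sketch of the "minimize correlation" step, assuming your data is a NumPy array of shape (samples, channels, time steps). The greedy keep-first rule, the pooled correlation over samples and time, and the 0.9 threshold are illustrative choices of mine, not a standard procedure; `drop_correlated_channels` is a hypothetical helper.

```python
import numpy as np


def drop_correlated_channels(X, threshold=0.9):
    """Greedily keep channels whose absolute Pearson correlation with every
    already-kept channel is at most `threshold` (hypothetical helper).

    X has shape (n_samples, n_channels, n_timesteps). Correlation is computed
    over all samples and time steps pooled together.
    """
    n_channels = X.shape[1]
    # Move channels to the first axis and flatten samples x time per channel.
    flat = X.transpose(1, 0, 2).reshape(n_channels, -1)
    corr = np.abs(np.corrcoef(flat))
    kept = []
    for c in range(n_channels):
        if all(corr[c, k] <= threshold for k in kept):
            kept.append(c)
    return X[:, kept, :], kept


# Synthetic example: channel 1 is an almost exact copy of channel 0.
rng = np.random.default_rng(0)
base = rng.normal(size=(100, 1, 50))
X = np.concatenate(
    [base, 2.0 * base + 0.01 * rng.normal(size=base.shape), rng.normal(size=(100, 1, 50))],
    axis=1,
)
X_reduced, kept = drop_correlated_channels(X)
print(kept)  # [0, 2]
```

Which channel of a correlated pair to keep is a domain decision; keeping the first one is just the simplest rule.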

Feature attribution: the easier path

If feature attribution is your main concern, I would suggest extracting tabular features from your MTS, for example with the tsfresh library in Python. This makes classification much easier, but you lose any time-related explainability. It is then good practice to begin with the simplest and most explainable algorithms (these two go hand in hand), such as the ridge classifier from the sklearn library. If that one doesn't do the trick, you can follow this chart from explainable to non-explainable. XGBoost has worked very well for me in the past. For complex algorithms, consider the rather complete OmniXAI Python library, which implements common explainability methods such as SHAP and LIME behind a common interface.
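The tabular route can be sketched as follows, assuming NumPy and scikit-learn. tsfresh would compute hundreds of features; to keep the sketch dependency-light, a hypothetical `simple_features` helper computes only four statistics per channel instead. With standardized features, the absolute ridge coefficients give a rough global feature attribution (correlated features such as mean, min and max of the same channel will share the weight between them).

```python
import numpy as np
from sklearn.linear_model import RidgeClassifier
from sklearn.preprocessing import StandardScaler


def simple_features(X):
    """Hypothetical stand-in for tsfresh: a few summary statistics per channel.

    X has shape (n_samples, n_channels, n_timesteps); returns a 2-D array
    and the matching feature names.
    """
    stats = {"mean": np.mean, "std": np.std, "min": np.min, "max": np.max}
    columns, names = [], []
    for c in range(X.shape[1]):
        for stat_name, fn in stats.items():
            columns.append(fn(X[:, c, :], axis=1))
            names.append(f"ch{c}_{stat_name}")
    return np.column_stack(columns), names


# Synthetic data: class 1 has channel 0 shifted upwards; channels 1-2 are noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3, 50))
y = np.repeat([0, 1], 100)
X[y == 1, 0, :] += 1.0

features, names = simple_features(X)
# Standardize so that coefficient magnitudes are comparable across features.
features = StandardScaler().fit_transform(features)
clf = RidgeClassifier(alpha=1.0).fit(features, y)

# For a binary problem coef_ has shape (1, n_features); rank by |weight|.
ranking = sorted(zip(names, clf.coef_[0]), key=lambda t: -abs(t[1]))
for name, weight in ranking[:3]:
    print(f"{name}: {weight:+.3f}")
```

The same feature matrix can be fed to XGBoost and a SHAP-style explainer once a linear model is no longer enough.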

Time attribution or both attributions: the harder path

If time attribution, or both attributions, is your main concern, converting to a tabular format won't work. There are very few white-box MTS classifiers, so your best bet is either a non-neural algorithm from the sktime library or a neural one from tsai. Note that in this case the explainability methods will almost always be post-hoc and model-agnostic, which makes them less accurate.

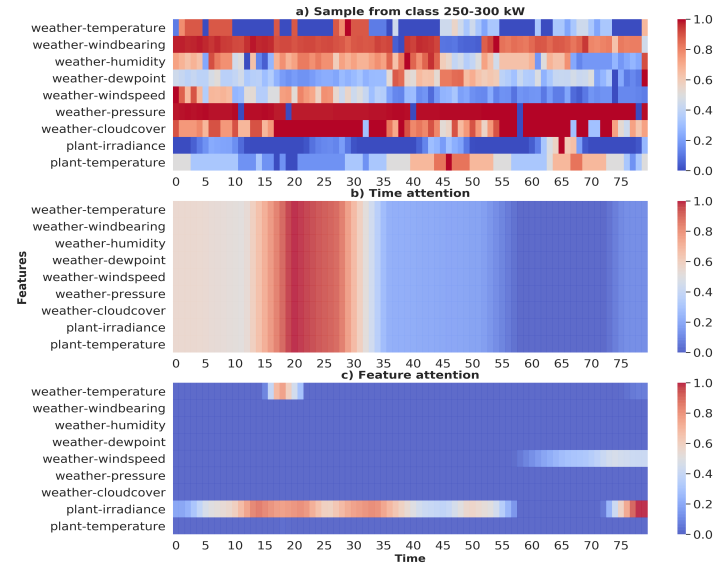

There have been some efforts to create algorithms that focus specifically on explainability. XCM is one of them (implemented in tsai) and gives you attributions in both dimensions using Grad-CAM. From the same authors, I had very good results with the XEM algorithm (but try XGBoost instead of their LCE classifier, since you can't use XAI methods on LCE). Another very recent library you can use is dCAM, which adapts state-of-the-art (SotA) MTS classification methods, such as InceptionTime and ResNet, to be explainable in 2D.
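To make the Grad-CAM part less of a black box: once a framework has given you a convolutional layer's feature maps and the gradients of the class score with respect to them (obtaining those, e.g. with hooks in PyTorch, is assumed and not shown), the heat map is a short computation. This NumPy sketch follows the original Grad-CAM formulation; it is not the tsai or XCM code, and any resizing of the map to the input length is omitted. The same function covers a 1-D layer (time only) and a 2-D layer over channels x time.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heat map from one convolutional layer.

    activations, gradients: arrays of shape (n_filters, *spatial), e.g.
    (n_filters, n_timesteps) for a 1-D conv layer or
    (n_filters, n_channels, n_timesteps) for a 2-D conv layer over
    channels x time. `gradients` holds d(class score) / d(activations).
    """
    spatial_axes = tuple(range(1, activations.ndim))
    # alpha_k: global-average-pooled gradient of each filter.
    weights = gradients.mean(axis=spatial_axes)
    # Weighted sum of the feature maps over the filter axis.
    cam = np.tensordot(weights, activations, axes=(0, 0))
    # Keep only positive evidence for the class (ReLU).
    return np.maximum(cam, 0.0)


A = np.array([[1.0, 2.0, 3.0],
              [3.0, 0.0, 1.0]])     # 2 filters, 3 time steps
G = np.array([[1.0, 1.0, 1.0],
              [-2.0, -2.0, -2.0]])  # gradients of the class score
print(grad_cam(A, G))  # [0. 2. 1.]
```

The global averaging of gradients is also why Grad-CAM only applies to CNN-like models: it needs spatial feature maps to pool over.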

Apart from the algorithms above, you can use any of the others that are not specifically designed for XAI: train and test them, then apply an XAI method of your choice. I have been using InceptionTime, ResNet and TST. Keep in mind, however, that regular XAI methods such as SHAP, LIME or Grad-CAM have been shown not to work well when the time dimension is combined with multiple channels. The TSInterpret library is an effort to solve this, so check it out. It works well with the CNN and Transformer algorithms from tsai; I think its COMTE counterfactual explainability algorithm also works with sktime models.
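As an illustration of a post-hoc, model-agnostic method that attributes over both time and channels, here is a minimal occlusion (perturbation) sketch. It treats the trained model as a black box, assumed to be wrapped in a `predict_proba`-style callable on (samples, channels, time) arrays; `occlusion_attribution`, the window size and the zero baseline are hypothetical choices of mine, and this is not TSInterpret's API. The baseline choice strongly affects the result, which is one reason such methods can be unreliable on MTS.

```python
import numpy as np


def occlusion_attribution(predict_proba, x, target, window=5, baseline=0.0):
    """Model-agnostic occlusion map over (channel, time) for one sample.

    predict_proba: callable mapping an array of shape
        (n_samples, n_channels, n_timesteps) to class probabilities of
        shape (n_samples, n_classes).
    x: a single sample of shape (n_channels, n_timesteps).
    Returns an array shaped like x: the drop in the target-class probability
    when each (channel, time window) patch is replaced by `baseline`.
    """
    n_channels, n_timesteps = x.shape
    reference = predict_proba(x[None])[0, target]
    attribution = np.zeros_like(x, dtype=float)
    for c in range(n_channels):
        for start in range(0, n_timesteps, window):
            occluded = x.copy()
            occluded[c, start:start + window] = baseline
            drop = reference - predict_proba(occluded[None])[0, target]
            attribution[c, start:start + window] = drop
    return attribution


# Toy "model" that only looks at channel 1, time steps 10-19.
def toy_model(X):
    score = X[:, 1, 10:20].mean(axis=1)
    p1 = 1.0 / (1.0 + np.exp(-score))
    return np.column_stack([1.0 - p1, p1])


x = np.ones((3, 30))
attr = occlusion_attribution(toy_model, x, target=1)
print(np.nonzero(attr.any(axis=1))[0])  # [1]
```

With a real model, `predict_proba` would wrap your trained tsai or sktime classifier; the sketch only needs it to return probabilities.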

For more insights, consider reading the paper "Explainable AI for Time Series Classification: A Review, Taxonomy and Research Directions".

Three more insights:

- Interpretability for time series with LSTMs doesn't seem to work very well, so consider other algorithms first.

- Don't use Rocket or MiniRocket: they work well, but they're not explainable. Edit: explainability methods might work if you pipeline the transformation with the classifier, but I haven't tested it.

- Try a bunch of different combinations of algorithms + XAI methods to see what satisfies your needs.
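Pipelining the transformation with the classifier, as mentioned for Rocket above, can be sketched as follows, assuming NumPy and scikit-learn. `RandomConvFeatures` is a hypothetical, heavily simplified Rocket-style transform (no dilation or padding), not sktime's Rocket or MiniRocket. Chaining it with a ridge classifier yields one estimator that takes raw series as input, so a model-agnostic method can perturb raw channels and time steps instead of thousands of random-kernel features. The channel-replacement check at the end is only a crude illustration, not a validated explainability method.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.linear_model import RidgeClassifierCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


class RandomConvFeatures(BaseEstimator, TransformerMixin):
    """Hypothetical, simplified Rocket-style transform: each random kernel is
    applied to one random channel and summarised by max and PPV."""

    def __init__(self, n_kernels=100, kernel_length=9, random_state=0):
        self.n_kernels = n_kernels
        self.kernel_length = kernel_length
        self.random_state = random_state

    def fit(self, X, y=None):
        rng = np.random.default_rng(self.random_state)
        self.channels_ = rng.integers(0, X.shape[1], size=self.n_kernels)
        weights = rng.normal(size=(self.n_kernels, self.kernel_length))
        self.weights_ = weights - weights.mean(axis=1, keepdims=True)  # zero-mean kernels
        self.biases_ = rng.uniform(-1.0, 1.0, size=self.n_kernels)
        return self

    def transform(self, X):
        feats = []
        for ch, w, b in zip(self.channels_, self.weights_, self.biases_):
            # Sliding windows of shape (n_samples, n_positions, kernel_length).
            windows = sliding_window_view(X[:, ch, :], self.kernel_length, axis=1)
            out = windows @ w + b
            feats.append(out.max(axis=1))         # max activation
            feats.append((out > 0).mean(axis=1))  # proportion of positive values
        return np.column_stack(feats)


def make_data(n, rng):
    """Two channels of noise; class 1 adds a sine wave to channel 0."""
    X = rng.normal(size=(n, 2, 60))
    y = rng.integers(0, 2, size=n)
    X[y == 1, 0, :] += 3.0 * np.sin(2 * np.pi * np.arange(60) / 8)
    return X, y


rng = np.random.default_rng(0)
X_train, y_train = make_data(200, rng)
X_test, y_test = make_data(100, rng)

# Transform and classifier in one estimator: its input is the raw 3-D array.
pipe = make_pipeline(
    RandomConvFeatures(),
    StandardScaler(),
    RidgeClassifierCV(alphas=np.logspace(-3, 3, 10)),
)
pipe.fit(X_train, y_train)
print("test accuracy:", pipe.score(X_test, y_test))

# Perturb raw channels directly: replace each channel with fresh noise and
# record the mean absolute change of the decision score.
base = pipe.decision_function(X_test)
changes = []
for ch in range(X_test.shape[1]):
    X_pert = X_test.copy()
    X_pert[:, ch, :] = rng.normal(size=X_pert[:, ch, :].shape)
    changes.append(np.abs(base - pipe.decision_function(X_pert)).mean())
    print(f"channel {ch}: mean |score change| = {changes[-1]:.3f}")
```

RidgeClassifierCV has no predict_proba, so explainers that need probabilities would have to work from decision_function or use a different final classifier.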