Audio Recording

Add the Audio Recorder Plugin NuGet Package to the Android Project (and to any PCL, netstandard, or iOS libraries if you are using them).

Android Project Configuration

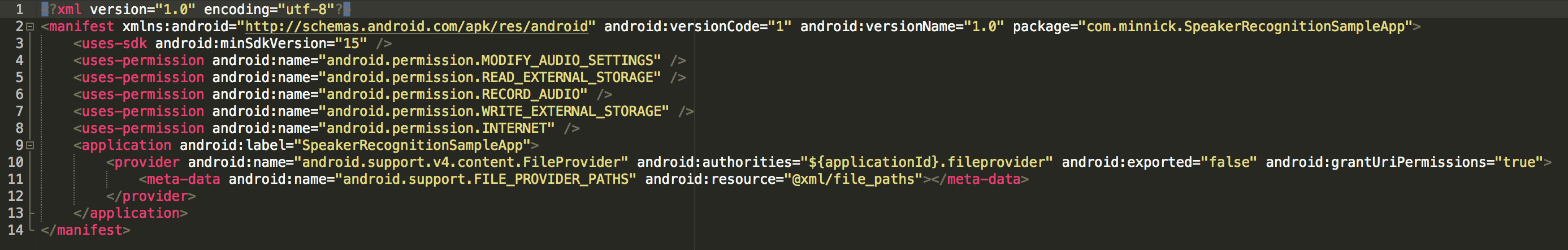

- In AndroidManifest.xml, add the following permissions:

<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS" />

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.INTERNET" />

- In AndroidManifest.xml, add the following provider inside the <application></application> tag:

<provider android:name="android.support.v4.content.FileProvider" android:authorities="${applicationId}.fileprovider" android:exported="false" android:grantUriPermissions="true">

<meta-data android:name="android.support.FILE_PROVIDER_PATHS" android:resource="@xml/file_paths"></meta-data>

</provider>

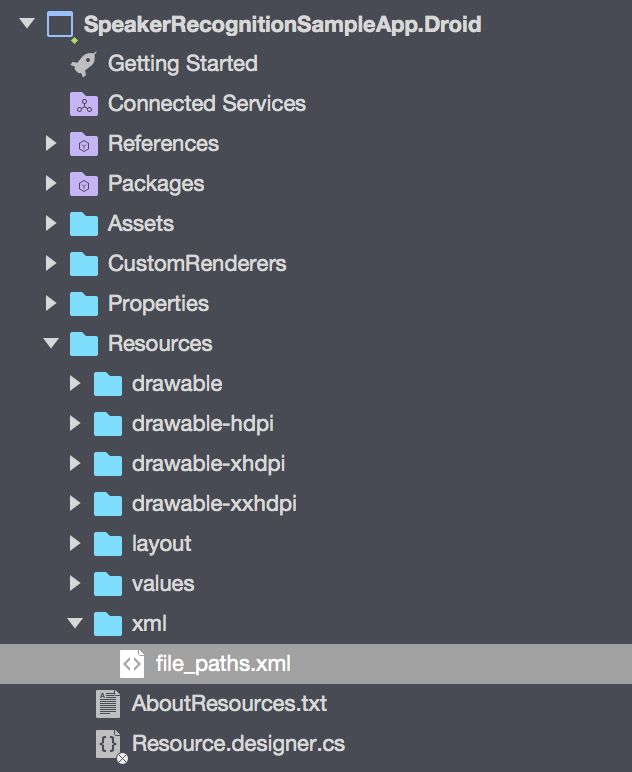

- In the Resources folder, create a new folder called xml.

- Inside Resources/xml, create a new file called file_paths.xml.

- In

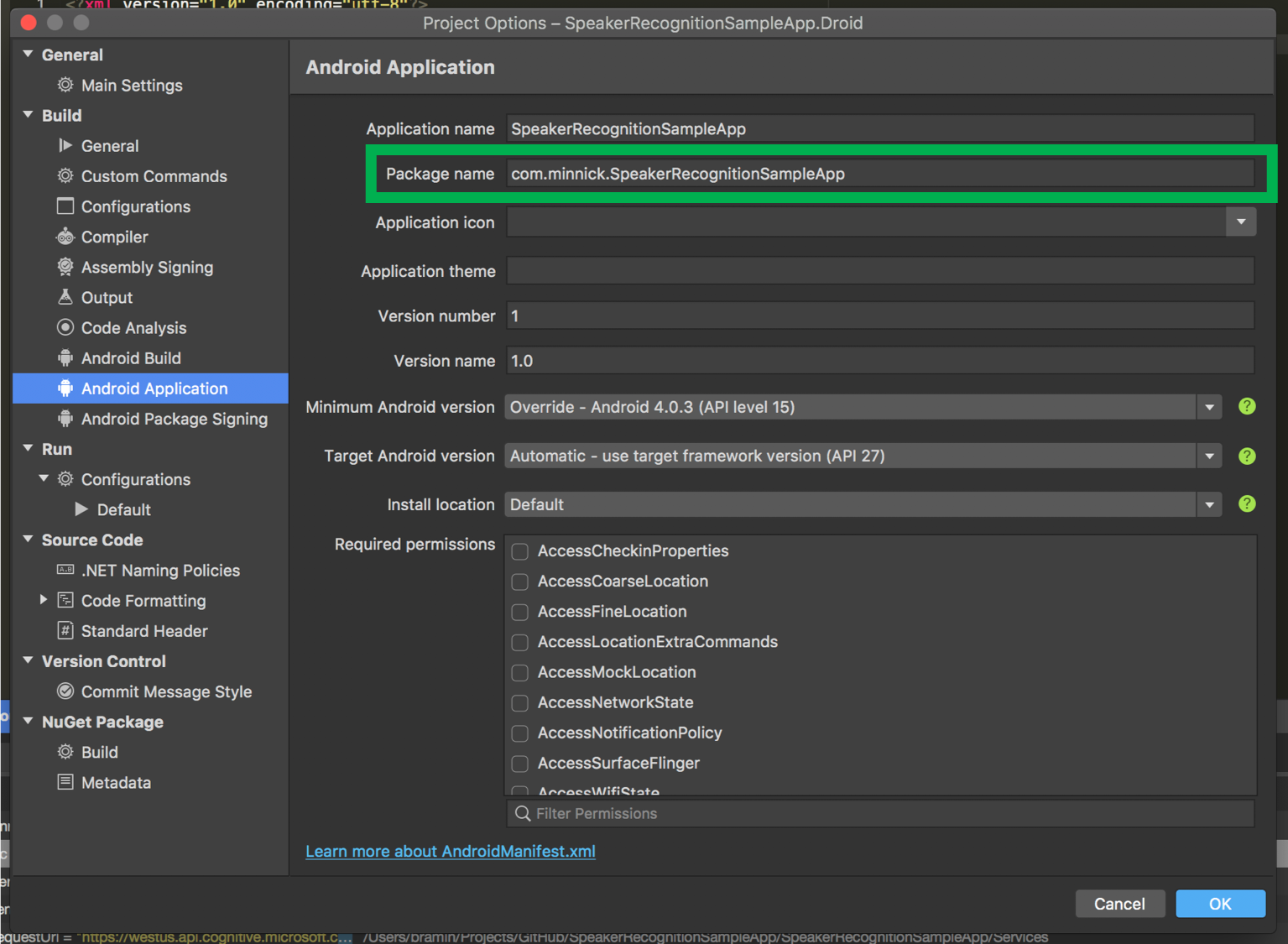

file_paths.xml, add the following code, replacing [your package name] with the package of your Android project

<?xml version="1.0" encoding="utf-8"?>

<paths xmlns:android="http://schemas.android.com/apk/res/android">

<external-path name="my_images" path="Android/data/[your package name]/files/Pictures"/>

<external-path name="my_movies" path="Android/data/[your package name]/files/Movies" />

</paths>

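Example Package Name

The package name is the value of the package attribute on the <manifest> element in AndroidManifest.xml. For a hypothetical package named com.example.myapp, the first path above would become Android/data/com.example.myapp/files/Pictures.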

Android Recorder Code

using System.Threading.Tasks;

using Plugin.AudioRecorder;

AudioRecorderService AudioRecorder { get; } = new AudioRecorderService

{

StopRecordingOnSilence = true,

PreferredSampleRate = 16000

};

public async Task StartRecording()

{

AudioRecorder.AudioInputReceived += HandleAudioInputReceived;

await AudioRecorder.StartRecording();

}

public async Task StopRecording()

{

// The handler subscribed in StartRecording fires once the recording has stopped

await AudioRecorder.StopRecording();

}

async void HandleAudioInputReceived(object sender, string e)

{

AudioRecorder.AudioInputReceived -= HandleAudioInputReceived;

PlaybackRecording();

//replace [UserGuid] with your unique Guid

await EnrollSpeaker(AudioRecorder.GetAudioFileStream(), [UserGuid]);

}
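
The handler above unsubscribes itself as soon as it runs, so each call to StartRecording results in at most one playback and one enrollment request. The sketch below isolates that subscribe-once pattern. FakeRecorder and Consumer are hypothetical stand-ins written for this illustration; they are not part of the Audio Recorder Plugin.

```csharp
using System;

// Hypothetical stand-in for the recorder: raises an event carrying a file path.
public class FakeRecorder
{
    public event EventHandler<string> AudioInputReceived;

    // Simulates the recorder finishing and reporting the recorded file.
    public void Finish(string path) => AudioInputReceived?.Invoke(this, path);
}

// Hypothetical consumer that handles only the first event after Start().
public class Consumer
{
    readonly FakeRecorder recorder = new FakeRecorder();

    public int HandledCount { get; private set; }

    // Subscribe right before starting, as StartRecording does above.
    public void Start() => recorder.AudioInputReceived += OnAudio;

    public void Finish(string path) => recorder.Finish(path);

    void OnAudio(object sender, string path)
    {
        // Unsubscribe first so a later event cannot trigger the handler again.
        recorder.AudioInputReceived -= OnAudio;
        HandledCount++;
    }
}
```

If Start() were called twice without an event in between, the handler would be attached twice and would run twice for the next event, which is why the subscription belongs only in the start path.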

Cognitive Services Speaker Recognition Code

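using System;

using System.IO;

using System.Net;

using System.Net.Http;

using System.Net.Http.Headers;

using Newtonsoft.Json;

using Newtonsoft.Json.Converters;

// Static so that the static EnrollSpeaker method can use it

static HttpClient Client { get; } = CreateHttpClient(TimeSpan.FromSeconds(10));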

public static async Task<EnrollmentStatus?> EnrollSpeaker(Stream audioStream, Guid userGuid)

{

Enrollment response = null;

try

{

var boundaryString = "Upload----" + DateTime.Now.ToString("u").Replace(" ", "");

var content = new MultipartFormDataContent(boundaryString)

{

{ new StreamContent(audioStream), "enrollmentData", userGuid.ToString("D") + "_" + DateTime.Now.ToString("u") }

};

var requestUrl = "https://westus.api.cognitive.microsoft.com/spid/v1.0/verificationProfiles" + "/" + userGuid.ToString("D") + "/enroll";

var result = await Client.PostAsync(requestUrl, content).ConfigureAwait(false);

string resultStr = await result.Content.ReadAsStringAsync().ConfigureAwait(false);

if (result.StatusCode == HttpStatusCode.OK)

response = JsonConvert.DeserializeObject<Enrollment>(resultStr);

return response?.EnrollmentStatus;

}

catch (Exception)

{

// Network and deserialization errors are swallowed; the method then returns null

}

return response?.EnrollmentStatus;

}

static HttpClient CreateHttpClient(TimeSpan timeout)

{

HttpClient client = new HttpClient();

client.Timeout = timeout;

client.DefaultRequestHeaders.AcceptEncoding.Add(new StringWithQualityHeaderValue("gzip"));

client.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));

//replace [Your Speaker Recognition API Key] with your Speaker Recognition API Key from the Azure Portal

client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", [Your Speaker Recognition API Key]);

return client;

}

public class Enrollment : EnrollmentBase

{

[JsonConverter(typeof(StringEnumConverter))]

public EnrollmentStatus EnrollmentStatus { get; set; }

public int RemainingEnrollments { get; set; }

public int EnrollmentsCount { get; set; }

public string Phrase { get; set; }

}

public enum EnrollmentStatus

{

Enrolling,

Training,

Enrolled

}
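
EnrollSpeaker returns a nullable EnrollmentStatus, so callers need to handle a null result as well as the three enum values. The sketch below shows one possible way to map each result to a next step. DescribeNextStep and its messages are hypothetical and are not part of the Speaker Recognition API; the reading of each status (more samples needed, training in progress, ready) is an assumption based on the enum names. The enum is repeated so the sample compiles on its own.

```csharp
using System;

public enum EnrollmentStatus
{
    Enrolling,
    Training,
    Enrolled
}

public static class EnrollmentFlow
{
    // Hypothetical helper: maps the nullable result of EnrollSpeaker to a next step.
    public static string DescribeNextStep(EnrollmentStatus? status)
    {
        switch (status)
        {
            case EnrollmentStatus.Enrolling:
                // Assumed meaning: the profile needs more audio samples.
                return "Record another sample";
            case EnrollmentStatus.Training:
                // Assumed meaning: the service is still processing the samples.
                return "Wait for training to finish";
            case EnrollmentStatus.Enrolled:
                return "Enrollment complete";
            default:
                // null: the request threw or returned a non-OK status code.
                return "Enrollment request failed; retry";
        }
    }
}
```

A caller could, for example, pass the awaited result of EnrollSpeaker to DescribeNextStep and show the returned message to the user.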

Audio Playback

Configuration

Add the SimpleAudioPlayer Plugin NuGet Package to the Android Project (and to any PCL, netstandard, or iOS libraries if you are using them).

Code

public void PlaybackRecording()

{

var isAudioLoaded = Plugin.SimpleAudioPlayer.CrossSimpleAudioPlayer.Current.Load(AudioRecorder.GetAudioFileStream());

if (isAudioLoaded)

Plugin.SimpleAudioPlayer.CrossSimpleAudioPlayer.Current.Play();

}