Some references:

This is a follow-up to "Why is processing a sorted array faster than processing an unsorted array?"

The only post in the r tag that I found somewhat related to branch prediction was "Why sampling matrix row is very slow?"

Explanation of the problem:

I was investigating whether processing a sorted array is faster than processing an unsorted one (the same problem tested in Java and C in the first link) to see whether branch prediction affects R in the same manner.

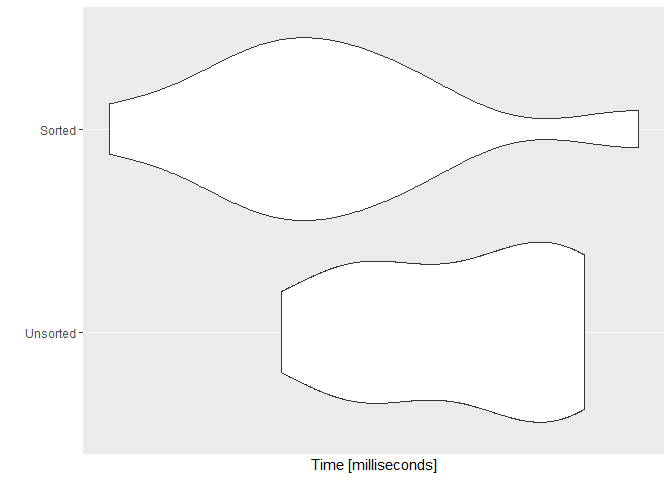

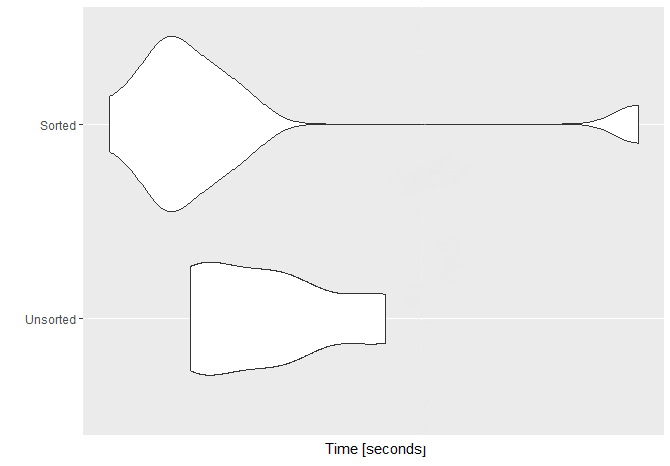

See the benchmark examples below:

    set.seed(128)
    # or make a vector with 1e7 elements
    myvec <- rnorm(1e8, 128, 128)
    myvecsorted <- sort(myvec)

    mysumU = 0
    mysumS = 0

    SvU <- microbenchmark::microbenchmark(
      Unsorted = for (i in 1:length(myvec)) {
        if (myvec[i] > 128) {
          mysumU = mysumU + myvec[i]
        }
      },
      Sorted = for (i in 1:length(myvecsorted)) {
        if (myvecsorted[i] > 128) {
          mysumS = mysumS + myvecsorted[i]
        }
      },
      times = 10)

    ggplot2::autoplot(SvU)
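For anyone who wants to try the comparison quickly, here is a minimal sketch of the same loop wrapped in a function, so that every run starts from a fresh accumulator. It is not the benchmark above: the helper name `sum_above()` is hypothetical, the vector is much smaller (1e5 instead of 1e8), and it uses base R's `system.time()` for a single timing rather than microbenchmark.

```r
# Hypothetical helper (not part of the original benchmark): sums the
# elements of x that exceed threshold, using the same loop and branch.
sum_above <- function(x, threshold = 128) {
  total <- 0
  for (i in seq_along(x)) {
    if (x[i] > threshold) {
      total <- total + x[i]
    }
  }
  total
}

set.seed(128)
small_vec <- rnorm(1e5, 128, 128)  # assumption: much smaller than 1e8
small_sorted <- sort(small_vec)

# Time each variant once with base R; res_* keep the computed sums
t_unsorted <- system.time(res_unsorted <- sum_above(small_vec))
t_sorted <- system.time(res_sorted <- sum_above(small_sorted))

# Elapsed wall-clock seconds for each variant
print(c(unsorted = t_unsorted[["elapsed"]], sorted = t_sorted[["elapsed"]]))
```

A single timing like this is noisy, so it is only useful as a sanity check that both variants compute the same sum before running a longer benchmark.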

Question:

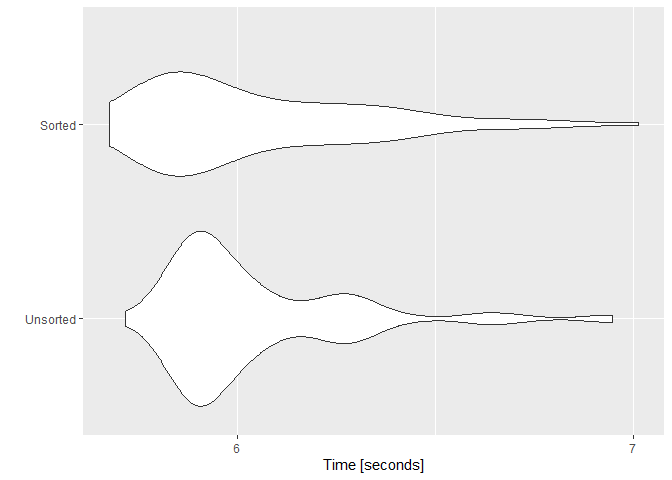

- First, why is the "Sorted" vector not the fastest every time, and why is the difference not of the same magnitude as reported for Java?
- Second, why does the sorted execution time have a higher variation than the unsorted one?

N.B. My CPU is an i7-6820HQ @ 2.70GHz Skylake, quad-core with hyperthreading.

Update:

To investigate the variation, I ran the microbenchmark on the vector of 100 million elements (n = 1e8) and repeated the benchmark 100 times (times = 100). Here's the plot for that benchmark.
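To put numbers on the spread instead of reading it off a plot, something like the following could be used. This is only a sketch under stated assumptions: it relies on base R's `system.time()` rather than microbenchmark, uses a far smaller vector than n = 1e8, and the helpers `sum_above()` and `time_runs()` are hypothetical names introduced here.

```r
# Hypothetical helper: same loop-and-branch sum as in the benchmark
sum_above <- function(x, threshold = 128) {
  total <- 0
  for (i in seq_along(x)) {
    if (x[i] > threshold) total <- total + x[i]
  }
  total
}

set.seed(128)
x_unsorted <- rnorm(1e5, 128, 128)  # assumption: far smaller than n = 1e8
x_sorted <- sort(x_unsorted)

# Hypothetical helper: repeat the timing `reps` times, return elapsed seconds
time_runs <- function(x, reps = 20) {
  vapply(seq_len(reps),
         function(k) system.time(sum_above(x))[["elapsed"]],
         numeric(1))
}

timings <- data.frame(
  unsorted = time_runs(x_unsorted),
  sorted = time_runs(x_sorted)
)

# Median, interquartile range, minimum and maximum per variant
spread <- sapply(timings, function(secs) {
  c(median = median(secs), iqr = IQR(secs), min = min(secs), max = max(secs))
})
print(spread)
```

Comparing the `iqr` row between the two columns gives a rough measure of which variant varies more, independent of a few extreme outliers.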

Here's my sessionInfo():

    R version 3.6.1 (2019-07-05)
    Platform: x86_64-w64-mingw32/x64 (64-bit)
    Running under: Windows 10 x64 (build 16299)

    Matrix products: default

    locale:
    [1] LC_COLLATE=English_United States.1252  LC_CTYPE=English_United States.1252    LC_MONETARY=English_United States.1252
    [4] LC_NUMERIC=C                           LC_TIME=English_United States.1252

    attached base packages:
    [1] compiler  stats     graphics  grDevices utils     datasets  methods   base

    other attached packages:
    [1] rstudioapi_0.10      reprex_0.3.0         cli_1.1.0            pkgconfig_2.0.3      evaluate_0.14        rlang_0.4.0
    [7] Rcpp_1.0.2           microbenchmark_1.4-7 ggplot2_3.2.1

1. Evaluating the Design of the R Language 2. Implementing Persistent O(1) Stacks and Queues in R 3. A Byte Code Compiler for R – Africanize

compiler::enableJIT(0). – Lepidosiren
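The `compiler::enableJIT(0)` suggestion in the comment above can be tried with a sketch along these lines, which times the loop once with the byte-code JIT switched off and once with it on. The helper names `make_sum_above()` and `time_both()` are hypothetical, the vector is deliberately small, and a single `system.time()` call per variant is only a rough indication, not a proper benchmark.

```r
# Hypothetical factory: returns a fresh copy of the loop-and-branch sum,
# so that a copy used under one JIT setting is not reused under the other.
make_sum_above <- function() {
  function(x, threshold = 128) {
    total <- 0
    for (i in seq_along(x)) {
      if (x[i] > threshold) total <- total + x[i]
    }
    total
  }
}

set.seed(128)
x <- rnorm(1e5, 128, 128)  # assumption: much smaller than the 1e8 vector
x_sorted <- sort(x)

# Hypothetical helper: time the unsorted and sorted input once each
time_both <- function() {
  f <- make_sum_above()
  c(unsorted = system.time(f(x))[["elapsed"]],
    sorted = system.time(f(x_sorted))[["elapsed"]])
}

old_level <- compiler::enableJIT(0)  # JIT off; returns the previous level
jit_off <- time_both()
compiler::enableJIT(3)               # level 3: compile closures and loops
jit_on <- time_both()
compiler::enableJIT(old_level)       # restore the original setting

print(rbind(jit_off, jit_on))
```

The previous JIT level is saved and restored so that the rest of the session is not affected by the experiment.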