I can't seem to find any Python libraries that do multiple regression. The ones I find only do simple regression. I need to regress my dependent variable (y) against several independent variables (x1, x2, x3, etc.).

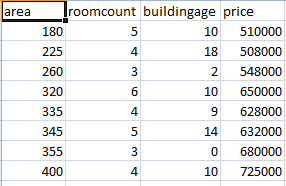

For example, with this data:

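    # texts holds my records; each one has attributes y, x1 ... x7
    print("{:>7}{:>10}{:>9}{:>9}{:>10}{:>7}{:>7}{:>9}"
          .format('y', 'x1', 'x2', 'x3', 'x4', 'x5', 'x6', 'x7'))
    for t in texts:
        print("{:>7.1f}{:>10.2f}{:>9.2f}{:>9.2f}{:>10.2f}{:>7.2f}{:>7.2f}{:>9.2f}"
              .format(t.y, t.x1, t.x2, t.x3, t.x4, t.x5, t.x6, t.x7))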

(output for above:)

          y        x1       x2       x3        x4     x5     x6       x7
       -6.0     -4.95    -5.87    -0.76     14.73   4.02   0.20     0.45
       -5.0     -4.55    -4.52    -0.71     13.74   4.47   0.16     0.50
      -10.0    -10.96   -11.64    -0.98     15.49   4.18   0.19     0.53
       -5.0     -1.08    -3.36     0.75     24.72   4.96   0.16     0.60
       -8.0     -6.52    -7.45    -0.86     16.59   4.29   0.10     0.48
       -3.0     -0.81    -2.36    -0.50     22.44   4.81   0.15     0.53
       -6.0     -7.01    -7.33    -0.33     13.93   4.32   0.21     0.50
       -8.0     -4.46    -7.65    -0.94     11.40   4.43   0.16     0.49
       -8.0    -11.54   -10.03    -1.03     18.18   4.28   0.21     0.55

How would I regress these in Python to get the linear regression formula:

Y = a1x1 + a2x2 + a3x3 + a4x4 + a5x5 + a6x6 + a7x7 + c
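
To make the target concrete, here is a minimal sketch of one way such a fit could be computed. It assumes NumPy is acceptable (the question does not name a library) and uses ordinary least squares via `numpy.linalg.lstsq`. The names `data`, `A`, `a`, and `c` are illustrative only, and the rows are copied from the table above.

```python
import numpy as np

# Rows copied from the table above; columns are y, x1, ..., x7
data = np.array([
    [ -6.0,  -4.95,  -5.87, -0.76, 14.73, 4.02, 0.20, 0.45],
    [ -5.0,  -4.55,  -4.52, -0.71, 13.74, 4.47, 0.16, 0.50],
    [-10.0, -10.96, -11.64, -0.98, 15.49, 4.18, 0.19, 0.53],
    [ -5.0,  -1.08,  -3.36,  0.75, 24.72, 4.96, 0.16, 0.60],
    [ -8.0,  -6.52,  -7.45, -0.86, 16.59, 4.29, 0.10, 0.48],
    [ -3.0,  -0.81,  -2.36, -0.50, 22.44, 4.81, 0.15, 0.53],
    [ -6.0,  -7.01,  -7.33, -0.33, 13.93, 4.32, 0.21, 0.50],
    [ -8.0,  -4.46,  -7.65, -0.94, 11.40, 4.43, 0.16, 0.49],
    [ -8.0, -11.54, -10.03, -1.03, 18.18, 4.28, 0.21, 0.55],
])

y = data[:, 0]   # dependent variable
X = data[:, 1:]  # independent variables x1 ... x7

# Append a column of ones so the last fitted coefficient is the intercept c
A = np.column_stack([X, np.ones(len(y))])

# Ordinary least squares: minimizes the sum of squared residuals ||A @ coeffs - y||^2
coeffs, residuals, rank, singular_values = np.linalg.lstsq(A, y, rcond=None)

a = coeffs[:-1]  # a1 ... a7
c = coeffs[-1]   # intercept

# Fitted values from Y = a1x1 + ... + a7x7 + c
y_hat = A @ coeffs

print("coefficients a1..a7:", a)
print("intercept c:", c)
```

Note that these nine rows leave only one more observation than the eight fitted parameters, so the fit on this sample will be close to exact and says little about how well the formula generalizes. More rows would be needed for meaningful coefficients.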

Y may be correlated with each other, but assuming independence does not accurately model the dataset. – Trimmer