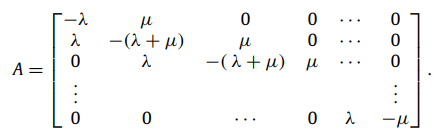

I'm trying to calculate the eigenvalues and eigenvectors of this matrix with NumPy:

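```python
import numpy as np

la = 0.02
mi = 0.08
n = 500  # matrix dimension

# Main diagonal: -(la + mi), except the first and last entries
d1 = np.full(n, -(la + mi), dtype=np.double)
d1[0] = -la
d1[-1] = -mi

# Sub-diagonal filled with la, super-diagonal filled with mi
d2 = np.full(n - 1, la, dtype=np.double)
d3 = np.full(n - 1, mi, dtype=np.double)

A = np.diag(d1) + np.diag(d2, -1) + np.diag(d3, 1)

e_values, e_vectors = np.linalg.eig(A)
```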
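Before suspecting `eig` itself, it may help to confirm the matrix is built as intended. The sketch below uses the same construction as in the question, wrapped in a hypothetical helper `build_matrix`. It checks that `A` is strictly tridiagonal and that every column sums to zero (each diagonal entry is exactly minus the off-diagonal entries in its column), which means `A` is singular and 0 should appear among the eigenvalues.

```python
import numpy as np


def build_matrix(n, la=0.02, mi=0.08):
    """Hypothetical helper: same tridiagonal matrix as in the question."""
    d1 = np.full(n, -(la + mi), dtype=np.double)
    d1[0] = -la
    d1[-1] = -mi
    d2 = np.full(n - 1, la, dtype=np.double)  # sub-diagonal
    d3 = np.full(n - 1, mi, dtype=np.double)  # super-diagonal
    return np.diag(d1) + np.diag(d2, -1) + np.diag(d3, 1)


A = build_matrix(500)

# Keep only the three central diagonals; anything left over is off-band
band = np.triu(np.tril(A, 1), -1)
off_band = A - band
print("max |entry| outside tridiagonal band:", np.abs(off_band).max())

# Column sums should vanish (up to rounding), i.e. ones @ A == 0
col_sums = A.sum(axis=0)
print("max |column sum|:", np.abs(col_sums).max())
```

If both printed values are zero or at rounding level, the construction matches the intended structure and the complex output comes from the eigenvalue computation rather than from the matrix.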

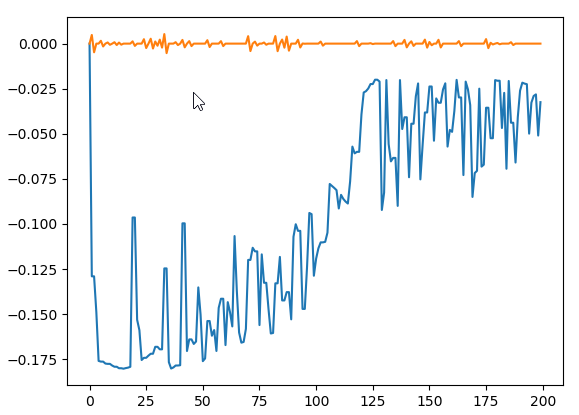

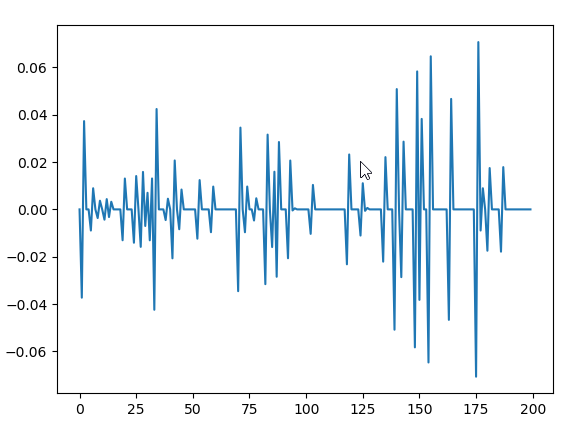

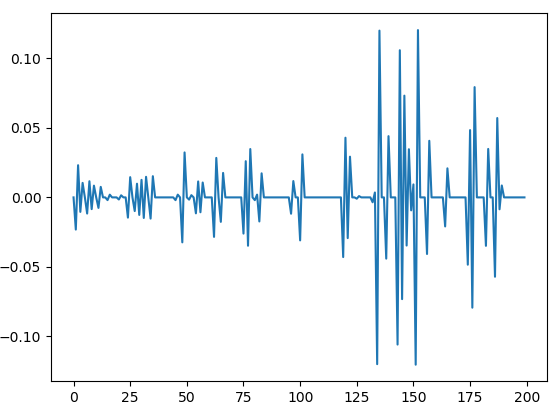

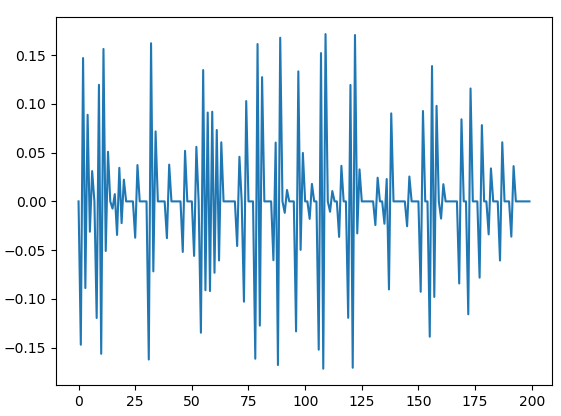

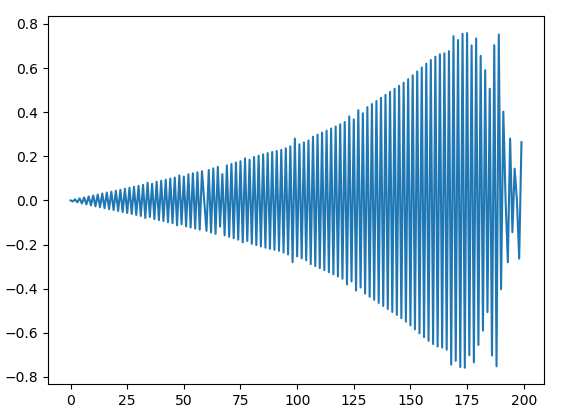

If I set the matrix dimension to n < 110, the output is fine. However, for n >= 110, both the eigenvalues and the eigenvector components become complex numbers with significant imaginary parts. Why does this happen? Is it supposed to happen? It's very strange behavior, and frankly I'm kind of stuck.
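To pin down where the imaginary parts start appearing on a particular machine, a small sketch like the following sweeps `n` and records the largest imaginary part among the eigenvalues returned by `np.linalg.eig`. The helpers `build_matrix` and `max_imag_part` are hypothetical names introduced only for this check. The exact threshold (110 in the question) may depend on the NumPy version and the underlying LAPACK build, so no particular output is assumed here.

```python
import numpy as np


def build_matrix(n, la=0.02, mi=0.08):
    """Hypothetical helper reproducing the matrix from the question."""
    d1 = np.full(n, -(la + mi), dtype=np.double)
    d1[0] = -la
    d1[-1] = -mi
    return (np.diag(d1)
            + np.diag(np.full(n - 1, la), -1)
            + np.diag(np.full(n - 1, mi), 1))


def max_imag_part(n):
    """Largest |imaginary part| among the eigenvalues returned by eig."""
    w = np.linalg.eig(build_matrix(n))[0]  # eigenvalues only
    # np.imag returns zeros if eig came back with a purely real array
    return float(np.abs(np.imag(w)).max())


# Sweep around the reported threshold and print the worst imaginary part
for n in range(100, 121, 2):
    print(n, max_imag_part(n))
```

A jump from exactly zero to a clearly nonzero value between two consecutive sizes would match the behavior described above.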