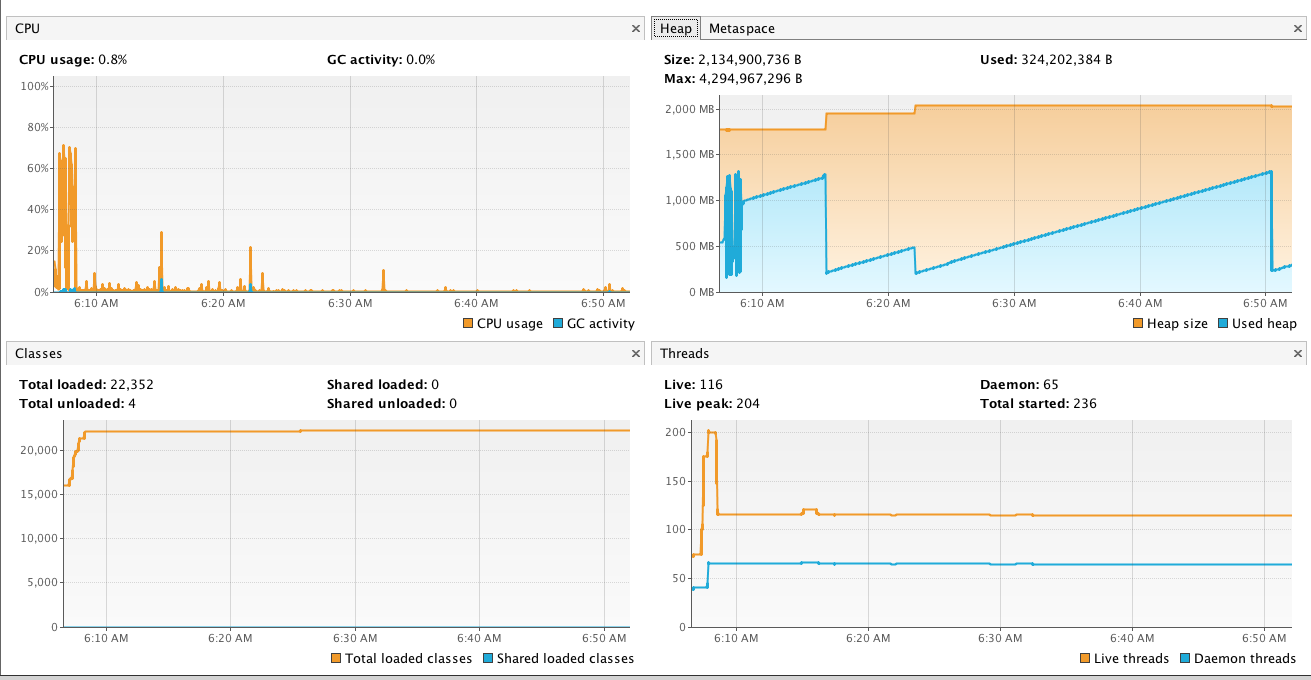

My Spring Data JPA/Hibernate Application consumes over 2GB of memory at start without a single user hitting it. I am using Hazelcast as the second level cache but I had the same issue when I used ehCache as well so that is probably not the cause of the issue.

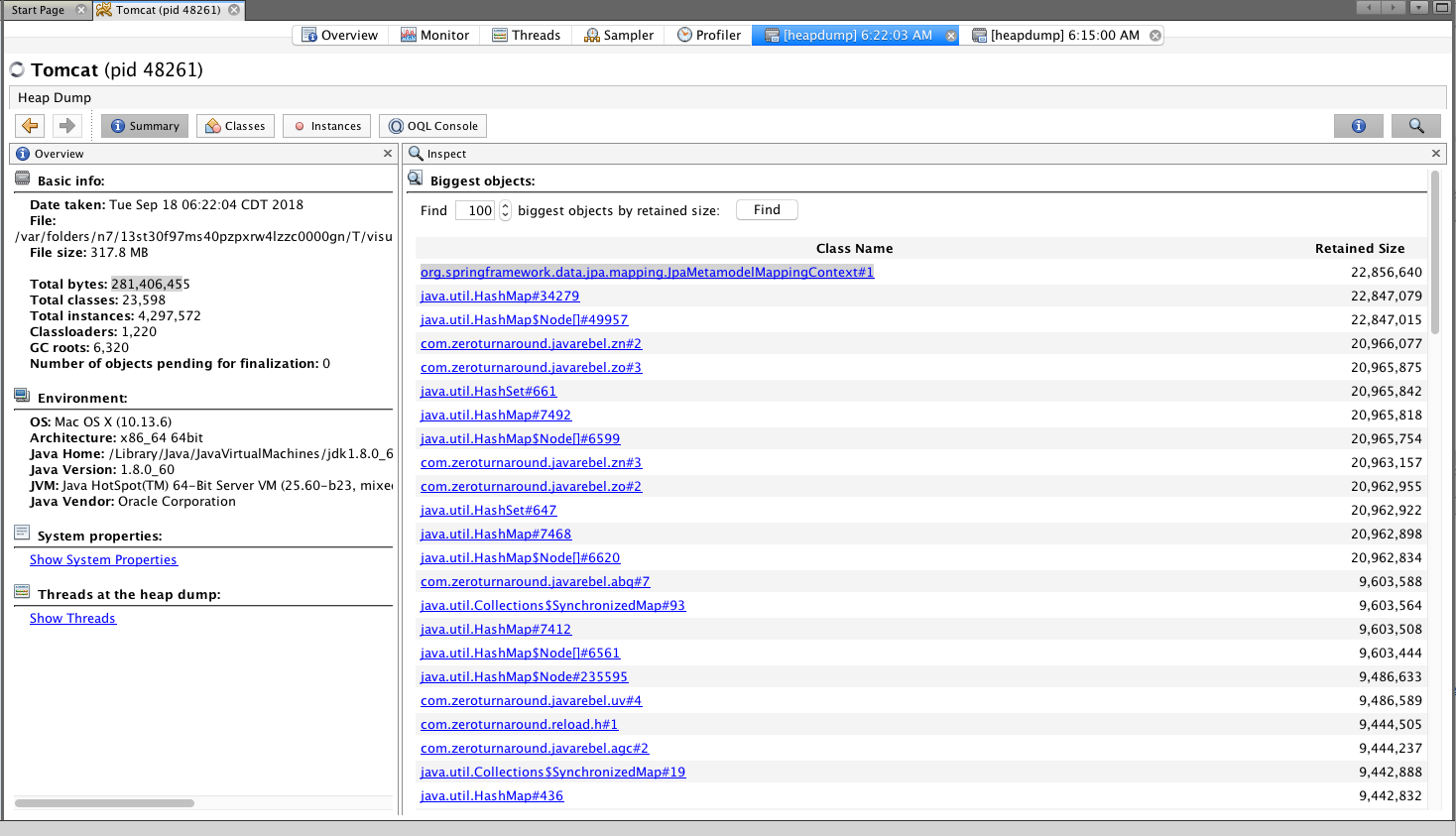

I ran a profile with a Heap Dump in Visual VM and I see where the bulk of the memory is being consumed by JpaMetamodelMappingContext and secondary a ton of Map objects. I just need help in deciphering what I am seeing and if this is actually a problem. I do have a hundred classes in the model so this may be normal but I have no point of reference. It just seems a bit excessive.

Once I get a load of 100 concurrent users, my memory consumption increases to 6-7 GB. That is quite normal for the amount of data I push around and cache, but I feel like if I could reduce the initial memory, I'd have a lot more room for growth.