When debugging a network protocol in a script, or even in a subsystem of an application, it's useful to see exactly what the request-response pairs are, including effective URLs, headers, payloads and the status. It's typically impractical to instrument individual requests all over the place. At the same time, there are performance considerations that suggest using a single (or a few specialised) requests.Session, so the following assumes that this suggestion is followed.

requests supports so-called event hooks (as of 2.23 there's actually only the response hook). A hook is basically an event listener, and the event is emitted before control returns from requests.request. At that moment both the request and the response are fully defined, hence they can be logged.

    import logging

    import requests

    logger = logging.getLogger('httplogger')

    def logRoundtrip(response, *args, **kwargs):
        extra = {'req': response.request, 'res': response}
        logger.debug('HTTP roundtrip', extra=extra)

    session = requests.Session()
    session.hooks['response'].append(logRoundtrip)

That's basically how to log all HTTP round-trips of a session.
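The hook relies on a standard logging feature: every key passed in extra becomes an attribute of the resulting log record. Here is a minimal offline sketch of that mechanism. The ListHandler class, the 'httplogger.demo' logger name and the SimpleNamespace stand-ins for the request and response are assumptions made for illustration, not part of requests.

```python
import logging
from types import SimpleNamespace


class ListHandler(logging.Handler):
    """Collects emitted records in a list so they can be inspected."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        self.records.append(record)


demo_logger = logging.getLogger('httplogger.demo')  # hypothetical logger name
demo_logger.setLevel(logging.DEBUG)
demo_logger.propagate = False  # keep the demo isolated from the root logger
capture = ListHandler()
demo_logger.addHandler(capture)

# Hypothetical stand-ins for a response and its prepared request.
fake_request = SimpleNamespace(method='GET', url='https://example.org/')
fake_response = SimpleNamespace(status_code=200, request=fake_request)


def log_roundtrip(response, *args, **kwargs):
    # Same shape as the hook above: the extras end up on the record.
    extra = {'req': response.request, 'res': response}
    demo_logger.debug('HTTP roundtrip', extra=extra)


log_roundtrip(fake_response)
record = capture.records[0]
print(record.req.method, record.req.url, record.res.status_code)
```

Because the objects travel on the record itself, any formatter or handler attached later can decide how much of the round-trip to render.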

Formatting HTTP round-trip log records

For the logging above to be useful, there can be a specialised logging formatter that understands the req and res extras on logging records. It can look like this:

    import textwrap

    class HttpFormatter(logging.Formatter):

        def _formatHeaders(self, d):
            return '\n'.join(f'{k}: {v}' for k, v in d.items())

        def formatMessage(self, record):
            result = super().formatMessage(record)
            if record.name == 'httplogger':
                result += textwrap.dedent('''
                    ---------------- request ----------------
                    {req.method} {req.url}
                    {reqhdrs}

                    {req.body}
                    ---------------- response ----------------
                    {res.status_code} {res.reason} {res.url}
                    {reshdrs}

                    {res.text}
                ''').format(
                    req=record.req,
                    res=record.res,
                    reqhdrs=self._formatHeaders(record.req.headers),
                    reshdrs=self._formatHeaders(record.res.headers),
                )

            return result

    formatter = HttpFormatter('{asctime} {levelname} {name} {message}', style='{')
    handler = logging.StreamHandler()
    handler.setFormatter(formatter)
    logging.basicConfig(level=logging.DEBUG, handlers=[handler])
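The template above interpolates req.body as is, so a request without a payload is rendered as None and a bytes payload as its Python repr. If that's not desired, a small helper can normalise the body before formatting. This is only a sketch: render_body and its limit parameter are hypothetical names, and any body type other than None and bytes is simply passed through str().

```python
def render_body(body, limit=1000):
    """Return a printable form of a request or response body.

    Hypothetical helper: None becomes an empty string, bytes are decoded
    leniently, and anything longer than `limit` characters is cut short.
    """
    if body is None:
        return ''
    if isinstance(body, bytes):
        body = body.decode('utf-8', errors='replace')
    else:
        body = str(body)
    if len(body) > limit:
        return body[:limit] + f'... [{len(body) - limit} more characters]'
    return body


print(repr(render_body(None)))
print(render_body(b'a=1&b=2'))
print(render_body('x' * 12, limit=10))
```

In the formatter it could be passed to format() as an additional keyword argument (say, reqbody=render_body(record.req.body)) with the template referring to {reqbody} instead of {req.body}.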

Now if you do some requests using the session, like:

    session.get('https://httpbin.org/user-agent')
    session.get('https://httpbin.org/status/200')

The output to stderr will look as follows.

    2020-05-14 22:10:13,224 DEBUG urllib3.connectionpool Starting new HTTPS connection (1): httpbin.org:443
    2020-05-14 22:10:13,695 DEBUG urllib3.connectionpool https://httpbin.org:443 "GET /user-agent HTTP/1.1" 200 45
    2020-05-14 22:10:13,698 DEBUG httplogger HTTP roundtrip
    ---------------- request ----------------
    GET https://httpbin.org/user-agent
    User-Agent: python-requests/2.23.0
    Accept-Encoding: gzip, deflate
    Accept: */*
    Connection: keep-alive

    None
    ---------------- response ----------------
    200 OK https://httpbin.org/user-agent
    Date: Thu, 14 May 2020 20:10:13 GMT
    Content-Type: application/json
    Content-Length: 45
    Connection: keep-alive
    Server: gunicorn/19.9.0
    Access-Control-Allow-Origin: *
    Access-Control-Allow-Credentials: true

    {
      "user-agent": "python-requests/2.23.0"
    }

    2020-05-14 22:10:13,814 DEBUG urllib3.connectionpool https://httpbin.org:443 "GET /status/200 HTTP/1.1" 200 0
    2020-05-14 22:10:13,818 DEBUG httplogger HTTP roundtrip
    ---------------- request ----------------
    GET https://httpbin.org/status/200
    User-Agent: python-requests/2.23.0
    Accept-Encoding: gzip, deflate
    Accept: */*
    Connection: keep-alive

    None
    ---------------- response ----------------
    200 OK https://httpbin.org/status/200
    Date: Thu, 14 May 2020 20:10:13 GMT
    Content-Type: text/html; charset=utf-8
    Content-Length: 0
    Connection: keep-alive
    Server: gunicorn/19.9.0
    Access-Control-Allow-Origin: *
    Access-Control-Allow-Credentials: true
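The urllib3.connectionpool lines in the output appear because the root logger is set to DEBUG and urllib3's loggers inherit that level. If only the round-trip records are of interest, one option is to raise the level of the urllib3 logger alone. A minimal sketch using just the standard logging module (the explicit setLevel on httplogger is there only to keep the demo independent of the root configuration):

```python
import logging

# Raise the threshold for the 'urllib3' logger; its children, such as
# 'urllib3.connectionpool', inherit the effective level from it.
logging.getLogger('urllib3').setLevel(logging.WARNING)

pool_logger = logging.getLogger('urllib3.connectionpool')
http_logger = logging.getLogger('httplogger')
http_logger.setLevel(logging.DEBUG)

# Check which loggers would still let DEBUG records through.
print(pool_logger.isEnabledFor(logging.DEBUG))
print(http_logger.isEnabledFor(logging.DEBUG))
```

The connection pool messages are still useful when diagnosing connection reuse, so whether to silence them depends on what is being debugged.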

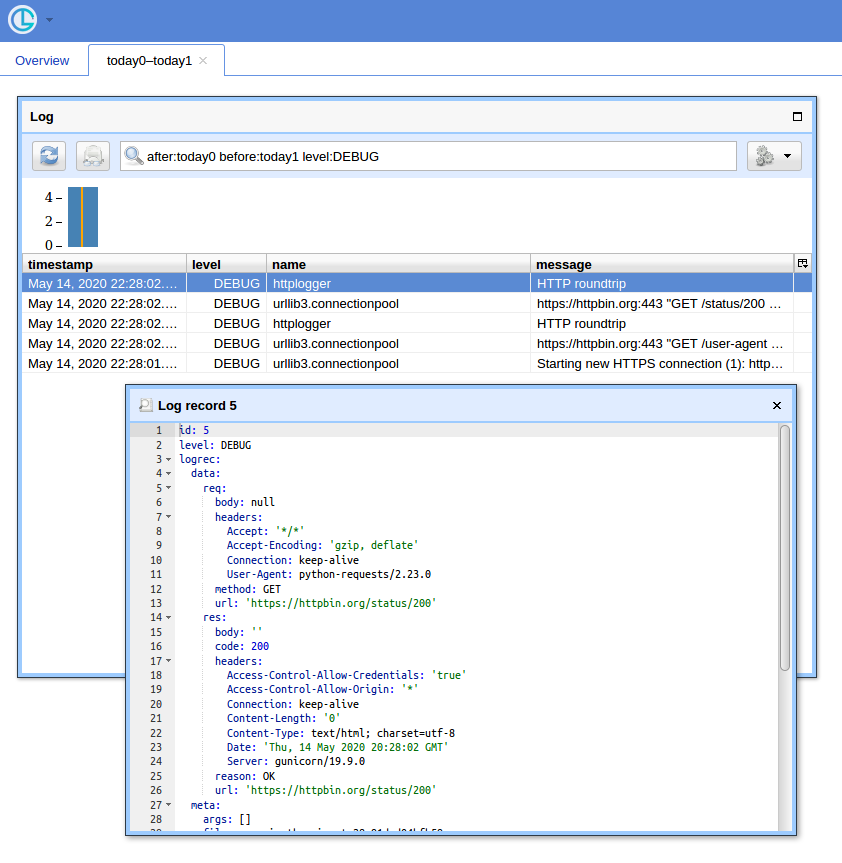

A GUI way

When you have a lot of queries, having a simple UI and a way to filter records comes in handy. I'll show how to use Chronologer for that (which I'm the author of).

First, the hook has to be rewritten to produce records that logging can serialise when sending them over the wire. It can look like this:

    def logRoundtrip(response, *args, **kwargs):
        extra = {
            'req': {
                'method': response.request.method,
                'url': response.request.url,
                'headers': response.request.headers,
                'body': response.request.body,
            },
            'res': {
                'code': response.status_code,
                'reason': response.reason,
                'url': response.url,
                'headers': response.headers,
                'body': response.text
            },
        }
        logger.debug('HTTP roundtrip', extra=extra)

    session = requests.Session()
    session.hooks['response'].append(logRoundtrip)
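If the records might also go through a handler that serialises to JSON, it can help to check that the extras consist of plain built-in types only. The sketch below is an assumption-laden variation of the hook's dictionary: roundtrip_extra is a hypothetical helper, the SimpleNamespace objects stand in for a real response, and the headers are copied with dict() because requests exposes them as its own mapping type rather than a plain dict. A bytes request body would still need decoding before json.dumps accepts it.

```python
import json
from types import SimpleNamespace


def roundtrip_extra(response):
    """Build the 'req'/'res' extras from plain built-in types only."""
    request = response.request
    return {
        'req': {
            'method': request.method,
            'url': request.url,
            'headers': dict(request.headers),  # copy into a plain dict
            'body': request.body,
        },
        'res': {
            'code': response.status_code,
            'reason': response.reason,
            'url': response.url,
            'headers': dict(response.headers),
            'body': response.text,
        },
    }


# Hypothetical stand-ins so the check runs without any network access.
fake_request = SimpleNamespace(
    method='GET', url='https://example.org/', headers={'Accept': '*/*'}, body=None)
fake_response = SimpleNamespace(
    request=fake_request, status_code=200, reason='OK',
    url='https://example.org/', headers={'Content-Length': '0'}, text='')

extra = roundtrip_extra(fake_response)
payload = json.dumps(extra)  # raises TypeError if a value is not serialisable
print(payload)
```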

Second, the logging configuration has to be adapted to use logging.handlers.HTTPHandler (which Chronologer understands).

    import logging.handlers

    chrono = logging.handlers.HTTPHandler(
        'localhost:8080', '/api/v1/record', 'POST', credentials=('logger', ''))
    handlers = [logging.StreamHandler(), chrono]
    logging.basicConfig(level=logging.DEBUG, handlers=handlers)
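To see what such a handler would put on the wire without running a server, the record mapping can be inspected offline. This is a rough sketch using only the standard library: HTTPHandler.mapLogRecord returns the record's attribute dictionary, and the stock emit url-encodes that mapping, so nested extras are sent in their string form. The record contents here are made up for illustration, and how a receiving server interprets the form fields is up to that server.

```python
import logging
import logging.handlers
import urllib.parse

# Constructing the handler does not open a connection.
http_handler = logging.handlers.HTTPHandler(
    'localhost:8080', '/api/v1/record', 'POST')

# Build a record by hand, with a made-up 'req' extra.
record = logging.getLogger('httplogger').makeRecord(
    'httplogger', logging.DEBUG, 'demo.py', 1, 'HTTP roundtrip', (), None,
    extra={'req': {'method': 'GET'}})

mapped = http_handler.mapLogRecord(record)  # the record's attribute dict
encoded = urllib.parse.urlencode(mapped)    # same encoding step as in emit
fields = urllib.parse.parse_qs(encoded)

print(fields['name'], fields['msg'], fields['req'])
```

Subclassing HTTPHandler and overriding mapLogRecord is the documented extension point if a different set of fields should be sent.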

Finally, run a Chronologer instance, e.g. using Docker:

    docker run --rm -it -p 8080:8080 -v /tmp/db \
        -e CHRONOLOGER_STORAGE_DSN=sqlite:////tmp/db/chrono.sqlite \
        -e CHRONOLOGER_SECRET=example \
        -e CHRONOLOGER_ROLES="basic-reader query-reader writer" \
        saaj/chronologer \
        python -m chronologer -e production serve -u www-data -g www-data -m

And run the requests again:

    session.get('https://httpbin.org/user-agent')
    session.get('https://httpbin.org/status/200')

The stream handler will produce:

    DEBUG:urllib3.connectionpool:Starting new HTTPS connection (1): httpbin.org:443
    DEBUG:urllib3.connectionpool:https://httpbin.org:443 "GET /user-agent HTTP/1.1" 200 45
    DEBUG:httplogger:HTTP roundtrip
    DEBUG:urllib3.connectionpool:https://httpbin.org:443 "GET /status/200 HTTP/1.1" 200 0
    DEBUG:httplogger:HTTP roundtrip

Now if you open http://localhost:8080/ (use "logger" as the username and an empty password in the basic auth popup) and click the "Open" button, you should see something like:

![Screenshot of Chronologer]()