I have exported a DNNClassifier model and am running it on a TensorFlow Serving server using Docker. I have then written a Python client that sends new prediction requests to that server.

I have written the following code to get the response from the TensorFlow Serving server:

host, port = FLAGS.server.split(':')

channel = implementations.insecure_channel(host, int(port))

stub = prediction_service_pb2.beta_create_PredictionService_stub(channel)

request = predict_pb2.PredictRequest()

request.model_spec.name = FLAGS.model

request.model_spec.signature_name = 'serving_default'

feature_dict = {'a': _float_feature(value=400),

'b': _float_feature(value=5),

'c': _float_feature(value=200),

'd': _float_feature(value=30),

'e': _float_feature(value=60),

'f': _float_feature(value=5),

'g': _float_feature(value=7500),

'h': _int_feature(value=1),

'i': _int_feature(value=1234),

'j': _int_feature(value=1),

'k': _int_feature(value=4),

'l': _int_feature(value=1),

'm': _int_feature(value=0)}

example= tf.train.Example(features=tf.train.Features(feature=feature_dict))

serialized = example.SerializeToString()

request.inputs['inputs'].CopyFrom(

tf.contrib.util.make_tensor_proto(serialized, shape=[1]))

result_future = stub.Predict.future(request, 5.0)

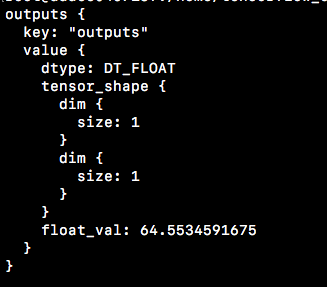

print(result_future.result()) I m not able to figure out how to parse that float_val number because that is my output. Pls help.

I'm not able to figure out how to parse the float_val numbers in that response, which are the output I need. Please help.
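To make the goal concrete, here is a minimal sketch of the kind of unpacking I am after. It assumes the response holds a `TensorProto` under an output key such as `'scores'` (the key name is an assumption, not confirmed from the output above), that `float_val` is a flat repeated field, and that the tensor shape is `[batch, n_classes]`. The helper names `rows_from_flat` and `best_class` are hypothetical, and the numbers are made-up stand-ins so the snippet runs without a server.

```python
def rows_from_flat(flat_values, shape):
    """Regroup a flat list of floats into rows, given a [batch, n_classes] shape."""
    batch, n_classes = shape
    if batch * n_classes != len(flat_values):
        raise ValueError("shape does not match the number of values")
    return [flat_values[i * n_classes:(i + 1) * n_classes] for i in range(batch)]


def best_class(row):
    """Return (index, score) of the highest-scoring class in one row."""
    index = max(range(len(row)), key=row.__getitem__)
    return index, row[index]


# With a real response, the inputs would come from the TensorProto, e.g.:
#   tensor = result_future.result().outputs['scores']  # 'scores' is an assumed key
#   flat = list(tensor.float_val)
#   shape = [d.size for d in tensor.tensor_shape.dim]
# Made-up stand-in values so the sketch runs without a server:
flat = [0.25, 0.75]
shape = [1, 2]

rows = rows_from_flat(flat, shape)
print(best_class(rows[0]))
```

If TensorFlow is available on the client, `tf.make_ndarray(tensor)` should give the same values as a NumPy array with the shape already applied, which may be simpler than regrouping by hand.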