I'm trying to implement a custom loss function for my CNN model. I found an IPython notebook that implements a custom loss function named Dice, as follows:

```python
from keras import backend as K
from keras.losses import binary_crossentropy

smooth = 1.

def dice_coef(y_true, y_pred, smooth=1):
    intersection = K.sum(y_true * y_pred, axis=[1,2,3])
    union = K.sum(y_true, axis=[1,2,3]) + K.sum(y_pred, axis=[1,2,3])
    return K.mean( (2. * intersection + smooth) / (union + smooth), axis=0)

def bce_dice(y_true, y_pred):
    return binary_crossentropy(y_true, y_pred) - K.log(dice_coef(y_true, y_pred))

def true_positive_rate(y_true, y_pred):
    return K.sum(K.flatten(y_true)*K.flatten(K.round(y_pred)))/K.sum(y_true)

# seg_model is the segmentation model built earlier in the notebook
seg_model.compile(optimizer = 'adam',
                  loss = bce_dice,
                  metrics = ['binary_accuracy', dice_coef, true_positive_rate])
```
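Written out, `dice_coef` appears to compute a smoothed Dice coefficient for each sample $i$ in a batch of $N$, summing over every pixel and channel $p$ (axes 1, 2 and 3, assuming the notebook's masks have the usual `(batch, height, width, channels)` layout), and then averages over the batch with smoothing $s$ (`smooth = 1`). `bce_dice` subtracts the logarithm of that average from the binary cross-entropy:

$$
\mathrm{Dice}_i = \frac{2\sum_p y_{i,p}\,\hat{y}_{i,p} + s}{\sum_p y_{i,p} + \sum_p \hat{y}_{i,p} + s},
\qquad
\overline{\mathrm{Dice}} = \frac{1}{N}\sum_{i=1}^{N}\mathrm{Dice}_i
$$

$$
\mathcal{L}_{\mathrm{bce\_dice}} = \mathrm{BCE}(y, \hat{y}) - \log \overline{\mathrm{Dice}}
$$

For a binary mask $y$ and predictions $\hat{y} \in [0, 1]$, each product satisfies $2\,y\hat{y} \le y + \hat{y}$, so $\mathrm{Dice}_i \in [0, 1]$ and equals 1 for perfect overlap. The $-\log$ term is therefore 0 at best and grows as the overlap shrinks, while $s$ keeps the ratio defined when both masks are empty.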

I have never used the Keras backend before and I get really confused by its tensor calculations. So I created some tensors to see what's happening in the code:

```python
import numpy as np
from keras import backend as K

val1 = np.arange(24).reshape((4, 6))
y_true = K.variable(value=val1)

val2 = np.arange(10,34).reshape((4, 6))
y_pred = K.variable(value=val2)
```

Now I run the dice_coef function:

```python
result = K.eval(dice_coef(y_true=y_true, y_pred=y_pred))
print('result is:', result)
```

But it gives me this error:

```
ValueError: Invalid reduction dimension 2 for input with 2 dimensions. for 'Sum_32' (op: 'Sum') with input shapes: [4,6], [3] and with computed input tensors: input[1] = <1 2 3>.
```
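The message points at the cause: `K.sum` was asked to reduce over axes 1, 2 and 3, but the test tensor is 2-D (shape `(4, 6)`), so only axes 0 and 1 exist. A minimal sketch reproducing the same failure with plain NumPy, used here in place of the Keras variable because its `axis` argument follows the same convention:

```python
import numpy as np

# Same 4x6 test data as above: a 2-D array, so the valid axes are 0 and 1 (or -2 and -1).
y_true = np.arange(24, dtype=np.float32).reshape((4, 6))

error = None
try:
    # Axes 2 and 3 do not exist on a 2-D array, so this fails just like K.sum did.
    np.sum(y_true, axis=(1, 2, 3))
except ValueError as err:  # NumPy raises AxisError, a subclass of ValueError
    error = err
    print("error:", err)

# Reducing over the last axis is valid on 2-D input: one sum per row.
row_sums = np.sum(y_true, axis=-1)
print(row_sums.shape)  # (4,)
```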

Then I changed every `[1,2,3]` to `-1`, like this:

```python
def dice_coef(y_true, y_pred, smooth=1):
    intersection = K.sum(y_true * y_pred, axis=-1)
    # intersection = K.sum(y_true * y_pred, axis=[1,2,3])
    # union = K.sum(y_true, axis=[1,2,3]) + K.sum(y_pred, axis=[1,2,3])
    union = K.sum(y_true, axis=-1) + K.sum(y_pred, axis=-1)
    return K.mean( (2. * intersection + smooth) / (union + smooth), axis=0)
```

Now it gives me a value:

```
result is: 14.7911625
```
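A value of about 14.79 looks wrong for a Dice score, but the arithmetic is consistent: Dice assumes `y_true` and `y_pred` hold values in [0, 1] (a binary mask and predicted probabilities), while these test tensors hold integers up to 33. The sketch below mirrors the modified function in NumPy; `dice_coef_np` is a hypothetical helper written for illustration, not a Keras function. It reproduces the number and contrasts it with binary masks, where the score stays in [0, 1]:

```python
import numpy as np

def dice_coef_np(y_true, y_pred, axis, smooth=1.0):
    """Hypothetical NumPy mirror of dice_coef, with the reduction axes as a parameter."""
    intersection = np.sum(y_true * y_pred, axis=axis)
    union = np.sum(y_true, axis=axis) + np.sum(y_pred, axis=axis)
    per_sample = (2.0 * intersection + smooth) / (union + smooth)
    return per_sample, per_sample.mean(axis=0)  # per-sample scores, batch average

# The question's test data: each row plays the role of one "sample".
y_true = np.arange(24, dtype=np.float64).reshape((4, 6))
y_pred = np.arange(10, 34, dtype=np.float64).reshape((4, 6))

per_sample, result = dice_coef_np(y_true, y_pred, axis=-1)
print(per_sample)  # one score per row, all well above 1
print(result)      # about 14.79, matching K.eval above

# With binary masks (the intended input), identical masks give exactly 1.
mask = np.array([[1, 1, 0, 0, 0, 0],
                 [0, 0, 1, 1, 1, 0]], dtype=np.float64)
_, perfect = dice_coef_np(mask, mask, axis=-1)
print(perfect)  # 1.0
```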

Questions:

- What is

[1,2,3]? - Why the code works when I change

[1,2,3]to-1? - What does this

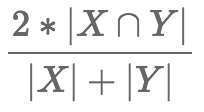

dice_coeffunction do?

`[1,2,3]` means that the sum (seen as an aggregation operation) runs over the second to fourth axes of the tensor. – Babita

`axis=-1` in `sum` means that the sum runs over the last axis. – Babita
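Both comments can be seen concretely in a NumPy sketch on a hypothetical `(batch, height, width, channels)` batch of shape `(4, 2, 3, 1)`; NumPy's `axis` argument follows the same convention as `K.sum`. It also suggests why the `-1` edit happened to work: on the 2-D `(4, 6)` test tensor the last axis already holds all of a sample's values, whereas on a real 4-D mask `axis=-1` would sum only the channel axis and would no longer produce one value per image.

```python
import numpy as np

# Hypothetical segmentation batch: 4 samples of 2x3 pixels with 1 channel,
# i.e. shape (batch, height, width, channels).
batch = np.arange(24, dtype=np.float64).reshape((4, 2, 3, 1))

per_sample = np.sum(batch, axis=(1, 2, 3))  # axes 1-3: every pixel of each sample
last_axis = np.sum(batch, axis=-1)          # axis -1: channels only

print(per_sample.shape)  # (4,)
print(last_axis.shape)   # (4, 2, 3)

# Summing axes (1, 2, 3) of the 4-D batch gives the same per-sample totals
# as summing the last axis of the flattened 2-D (4, 6) version.
flat = batch.reshape((4, 6))
print(np.array_equal(per_sample, np.sum(flat, axis=-1)))  # True
```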