I use Metal and CADisplayLink to live-filter a CIImage and render it into an MTKView.

    // Starting display link
    displayLink = CADisplayLink(target: self, selector: #selector(applyAnimatedFilter))
    displayLink.preferredFramesPerSecond = 30
    displayLink.add(to: .current, forMode: .default)

    @objc func applyAnimatedFilter() {
        ...
        metalView.image = filter.applyFilter(image: ciImage)
    }

According to the memory monitor in Xcode, memory usage is stable on iPhone X and never goes above 100 MB. On devices like iPhone 6 or iPhone 6s, however, memory usage keeps growing until the system eventually kills the app.

I've checked for memory leaks using Instruments, but no leaks were reported. Running the app through Allocations doesn't show any problems either, and in that case the app doesn't get shut down by the system. I also find it interesting that memory usage is stable on newer devices but keeps growing on older ones.

The filter's complexity doesn't matter: I tried even the simplest filters and the issue persists. Here is an example from my Metal file:

    #include <CoreImage/CoreImage.h>

    extern "C" { namespace coreimage {

        // Adds a constant offset to each of the red, green, and blue channels.
        float4 applyColorFilter(sample_t s, float red, float green, float blue) {
            float4 newPixel = s.rgba;
            newPixel[0] = newPixel[0] + red;
            newPixel[1] = newPixel[1] + green;
            newPixel[2] = newPixel[2] + blue;
            return newPixel;
        }

    }}

I wonder what could cause this issue on older devices and where I should look next.

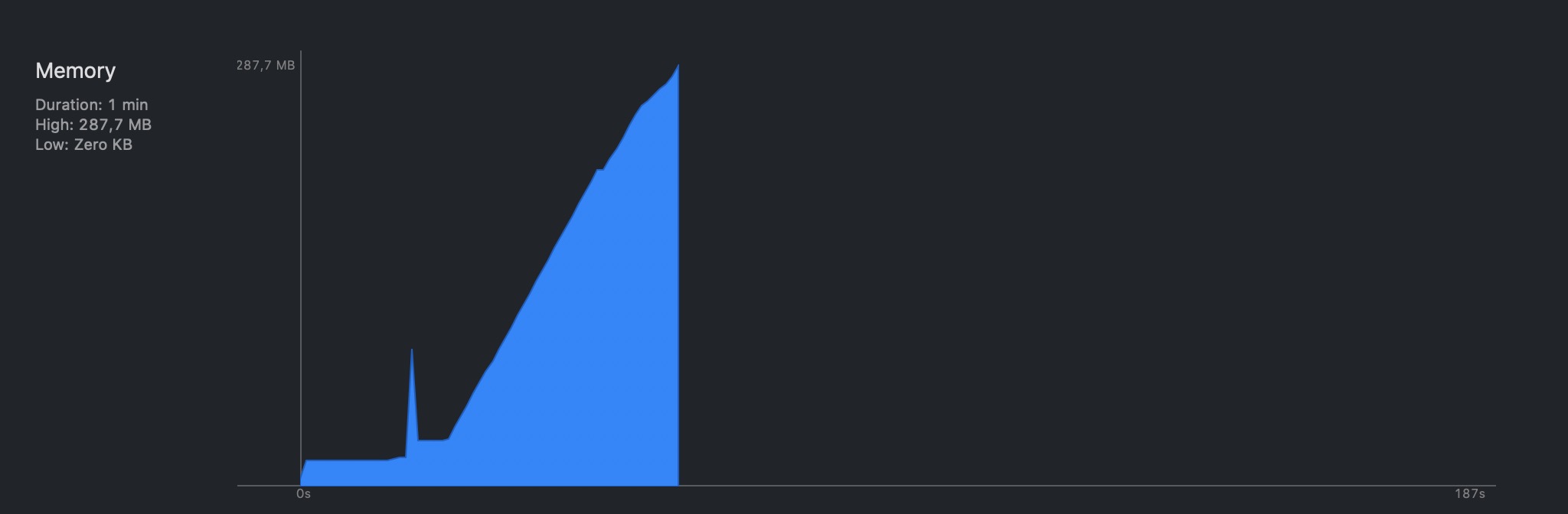

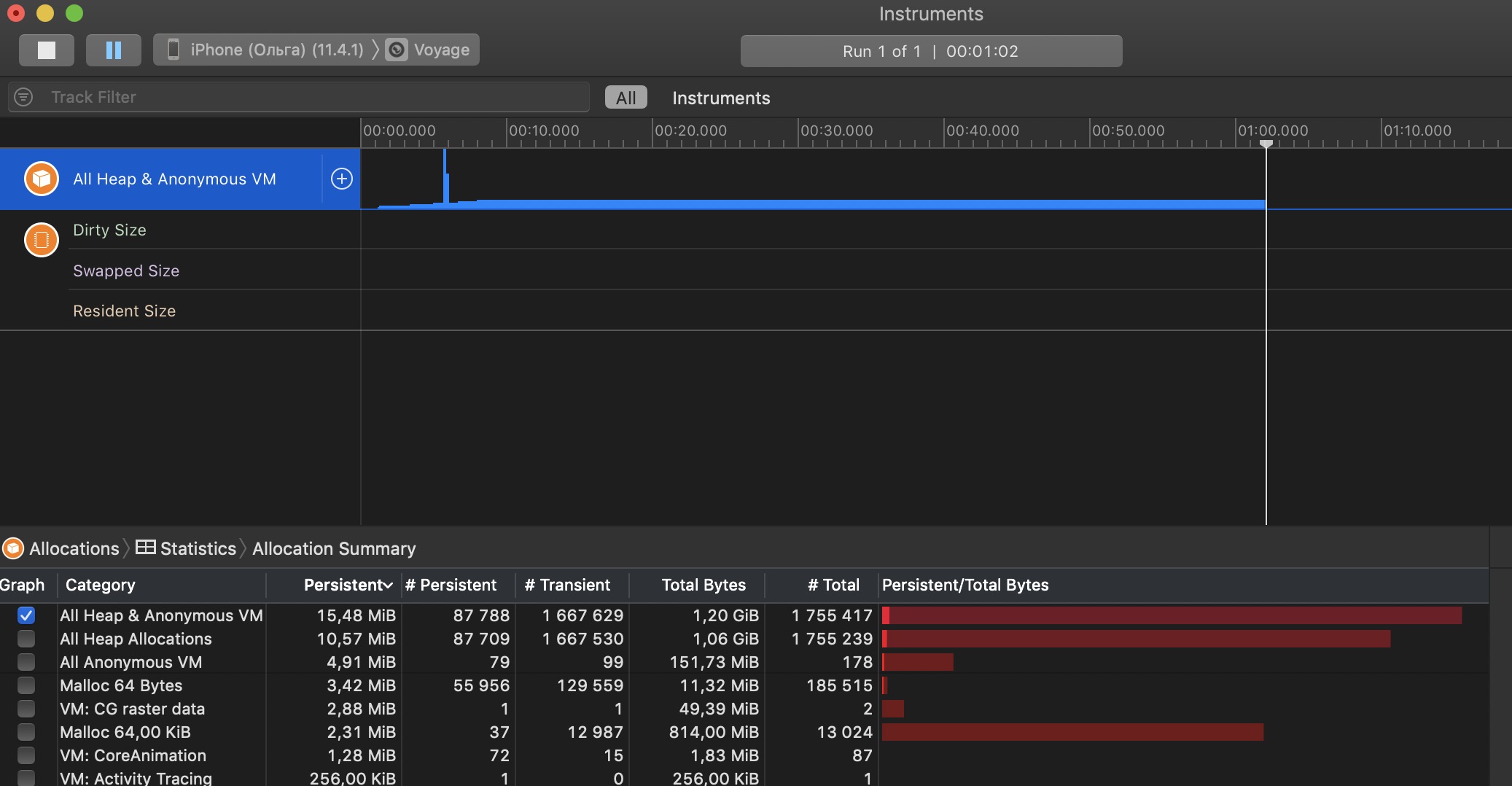

Update 1: Here are two 1-minute graphs, one from Xcode and one from Allocations, both using the same filter. The Allocations graph is stable, while the Xcode graph keeps growing:

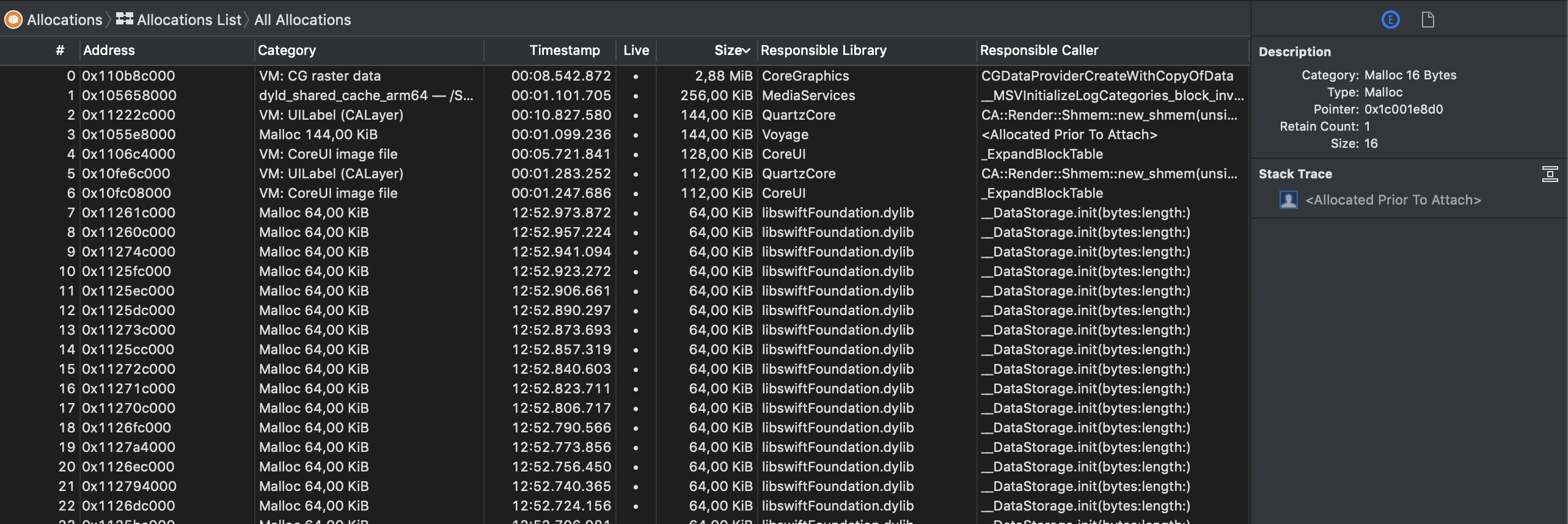

Update 2: Attaching a screenshot of the Allocations list sorted by size. The app had been running for 16 minutes, applying the filter nonstop:

Update 3: A bit more info on what is happening in applyAnimatedFilter():

I render the filtered image into metalView, which is an MTKView. I receive the filtered image from filter.applyFilter(image: ciImage), where the Filter class does the following:

    func applyFilter(image: CIImage) -> CIImage {
        ...
        let colorMix = ColorMix()
        return colorMix.use(image: ciImage, time: filterTime)
    }

where filterTime is just a Double variable. And finally, here is the whole ColorMix class:

    import UIKit

    class ColorMix: CIFilter {

        private let kernel: CIKernel

        @objc dynamic var inputImage: CIImage?
        @objc dynamic var inputTime: CGFloat = 0

        override init() {
            let url = Bundle.main.url(forResource: "default", withExtension: "metallib")!
            let data = try! Data(contentsOf: url)
            kernel = try! CIKernel(functionName: "colorMix", fromMetalLibraryData: data)
            super.init()
        }

        required init?(coder aDecoder: NSCoder) {
            fatalError("init(coder:) has not been implemented")
        }

        func outputImage() -> CIImage? {
            guard let inputImage = inputImage else {return nil}
            return kernel.apply(extent: inputImage.extent, roiCallback: {
                (index, rect) in
                return rect.insetBy(dx: -1, dy: -1)
            }, arguments: [inputImage, CIVector(x: inputImage.extent.width, y: inputImage.extent.height), inputTime])
        }

        func use(image: CIImage, time: Double) -> CIImage {
            var resultImage = image

            // 1. Apply filter
            let filter = ColorMix()
            filter.setValue(resultImage, forKey: "inputImage")
            filter.setValue(NSNumber(floatLiteral: time), forKey: "inputTime")
            resultImage = filter.outputImage()!

            return resultImage
        }

    }
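Since use(image:time:) above creates a new ColorMix, and therefore reloads default.metallib and rebuilds the kernel, on every frame, one variant that might be worth comparing is loading the kernel only once. The following is a minimal sketch under that assumption. ColorMixKernel and colorMixed(_:time:) are hypothetical names that do not appear in the code above, and nothing measured so far establishes that the per-frame load is what drives the memory growth on older devices.

```swift
import CoreImage

// Hypothetical holder (not part of the original code): loads default.metallib
// and builds the "colorMix" kernel once, on first access, instead of once per
// ColorMix instance.
enum ColorMixKernel {
    static let shared: CIKernel? = {
        guard let url = Bundle.main.url(forResource: "default", withExtension: "metallib"),
              let data = try? Data(contentsOf: url) else {
            return nil
        }
        return try? CIKernel(functionName: "colorMix", fromMetalLibraryData: data)
    }()
}

// Hypothetical helper: makes the same kernel call as ColorMix.outputImage(),
// but without creating a filter object or reloading the library per frame.
func colorMixed(_ image: CIImage, time: Double) -> CIImage? {
    guard let kernel = ColorMixKernel.shared else { return nil }
    let size = CIVector(x: image.extent.width, y: image.extent.height)
    return kernel.apply(extent: image.extent,
                        roiCallback: { _, rect in rect.insetBy(dx: -1, dy: -1) },
                        arguments: [image, size, time])
}
```

With this variant, applyFilter(image:) could return colorMixed(ciImage, time: filterTime) ?? ciImage, which makes it possible to compare the Xcode memory graph with and without the per-frame kernel load.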

[...] applyAnimatedFilter in an autoreleasepool block and let's see if it makes any difference. – Dee

[...] autoreleasepool didn't make a difference according to the Xcode memory graph. – Donegan

[...] Release build configuration and did a "build and run" on device - the Xcode memory graph still showed constantly growing memory usage. But when I run it in Instruments, the memory usage seems to be OK. Whom do I trust? :D – Donegan

[...] CIImage with Metal filter (which also doesn't involve any image loading, just modifies the input image) and rendering it back to MTKView. – Donegan

[...] applyAnimatedFilter. – Gyrate

[...] CIImage into the MTKView (what happens when you assign metalView.image)? – Gyrate