Occasionally with Kafka Connect, I see my JdbcSourceConnector's task go up and down: the REST interface sometimes reports one task that is RUNNING and sometimes reports no tasks at all (the connector itself remains RUNNING the whole time). During these periods, the task seems to work whenever it is running. If I delete and re-create the connector, the problem seems to go away. I suspect something is wrong, since tasks shouldn't churn like this, right? But the INFO/WARN logs on the server don't give me many clues, and there are lots of INFO lines to sort through.

- Is it normal for JdbcSourceConnector tasks to oscillate between nonexistent and RUNNING?

- Assuming not, what should I look for in the logs to help figure it out? (I see lots of INFO lines.)

- Any idea what could be causing this?

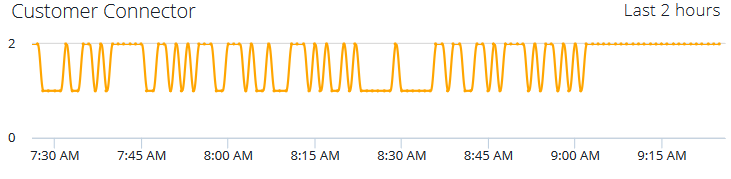

I have monitoring on my connectors' REST statuses, and for this connector it shows the graph in the image, where the value is the number of RUNNING statuses: 2 means the connector and its task are both RUNNING, and 1 means the connector is RUNNING without a task. Today at 9:01 AM I deleted and re-created the connector, thus "solving" the problem. Any thoughts?
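For reference, the monitored value can be sketched roughly as follows. This is a minimal illustration, not my exact monitoring code: it assumes the JSON shape returned by `GET /connectors/{name}/status` (a `connector` object and a `tasks` list, each with a `state` field). The names `count_running` and `fetch_status`, the base URL `http://localhost:8083`, and the connector name `my-jdbc-source` are hypothetical placeholders.

```python
import json
import urllib.request


def count_running(status: dict) -> int:
    """Count RUNNING states in one status document (connector plus its tasks)."""
    states = [status.get("connector", {}).get("state")]
    states += [task.get("state") for task in status.get("tasks", [])]
    return sum(1 for state in states if state == "RUNNING")


def fetch_status(name: str, base_url: str = "http://localhost:8083") -> dict:
    """Fetch a connector's status from the Connect REST API (base_url is an assumption)."""
    with urllib.request.urlopen(f"{base_url}/connectors/{name}/status") as resp:
        return json.load(resp)


# Hand-written sample payloads mirroring the two situations described above.
healthy = {
    "name": "my-jdbc-source",  # hypothetical connector name
    "connector": {"state": "RUNNING"},
    "tasks": [{"id": 0, "state": "RUNNING"}],
}
no_task = {
    "name": "my-jdbc-source",
    "connector": {"state": "RUNNING"},
    "tasks": [],
}

print(count_running(healthy))  # connector and task both RUNNING
print(count_running(no_task))  # connector RUNNING, no task reported
```

With the sample payloads, the first case corresponds to the value 2 on the graph and the second to the value 1.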

I have Kafka Connect version "5.5.0-ccs" for use with Confluent Platform 5.4, running on OpenShift with 2 pods. I have 6 separate connectors, each with a maximum of 1 task, and I typically see 3 connectors with their tasks on one pod and 3 on the other. In the example above, only 1 of the 6 tasks showed this behavior, but at other times I have seen 2 or 3 of them doing it.