Personally I can see two tricks you can pursue. And one "blast from the past" more.

Route #1. Use GTT: GLOBAL TEMPORARY TABLE

GTTs were introduced in FB 2.1 (and u use it) and can be per-connection or per-transaction. You would want the per-transaction one. This difference is about the data(rows), the schema(structure and indexes, the meta-data) is persistent. See ON COMMIT DELETE ROWS option in the GTT documentation.

and so on.

In that way, you open the transaction, you fill the GTT with the data from your list (copying those 1500 value-pairs of data from your workstation to the server), you run your query JOINing over that GTT, and then you COMMIT your transaction and the table content is auto-dropped.

If you can run many almost-similar queries in the session, then it might make sense to make that GTT per-connection instead and to modify the data as you need, rather than re-fill it for every next query in every next transaction, but it is a more complex approach. Cleanse-early on every COMMIT is what i'd prefer as default approach until argued why per-connection would be better in this specific case. Just not to keep that garbage on the server between queries.

Route #2. Use string search - reversed LIKE matching.

In its basic form this method works for searching for some huge and arbitrary list of integer numbers. Your case is a bit more complex, you match against PAIRS of numbers, not single ones.

The simple idea is like that, let's assume we want to fetch rows where ID column can be 1, 4, 12, 24.

Straightforward approach would be either making 4 queries for every value, or making WHERE ID = 1 or ID = 4 or ... or using WHERE id IN (1,4,12,24). Internally, IN would be unrolled into that very = or = or = and then most probably executed as four queries. Not very efficient for long lists.

So instead - for really long lists to match - we may form a special string. And match it as a text. This makes matching itself much less efficient, and prohibits using any indexing, the server runs a NATURAL SCAN over a whole table - but it makes a one-pass scan. When the matching-list is really large, the one-pass all-table scan gets more efficient than thousands of by-index fetches.

BUT - only when the list-to-table ratio is really large, depends on your specific data.

We make the text enlisting all our target values, interspersed by AND wrapped into a delimiter: "~1~4~12~24~". Now we make the same delimiter-number-delimiter string of our ID column and see whether such a substring can be found.

The usual use of LIKE/CONTAINING is to match a column against data like below: SELECT * from the_table WHERE column_name CONTAINING value_param

We reverse it, SELECT * from the_table WHERE value_param CONTAINING column_name-based-expression

SELECT * from the_table WHERE '~1~4~12~24~' CONTAINING '~' || ID || '~'

This assumes ID would get auto-casted from integer to string. IF not you would have to do it manually: .... CONTAINING '~' || CAST( ID as VARCHAR(100) ) || '~'

Your case is a bit more complex, you need to match two numbers, Department and Number, so you would have to use TWO DIFFERENT delimiters, if you follow this way. Something like

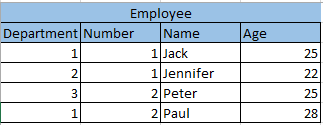

SELECT * FROM employee e WHERE

'~1@10~1@11~2@20~3@7~3@66~' CONTAINING

'~' || e.Department || '@' || e.Number || '~'

Gotcha: you say your target list is 1500 elements. The target line would be... long.

How exactly long???

VARCHAR in Firebird is limited with 32KB AFAIR, and longer texts should be made as text BLOBs, with reduced functionality. Does LIKE work against BLOBs in FB2.1? I don't remember, check release-notes. Also check if your library would even allow you to specify the parameter type as a BLOB not string.

Now, what is your CONNECTION CHARSET? If it would be something like Windows-1250 or Windows-1251 - then one character is one byte, and you can fit 32K characters into 32KBytes. But if the CONNECTION CHARSET your application sets is UTF-8 - then each letter takes 4 bytes and your maximum VARCHARable string gets reduced to 8K letters.

You may try to avoid using parameter for this long string and to inline the target string constant into the SQL statement. But then you may hit the limit of maximum SQL statement length instead.

See Also: MON$CHARACTER_SET_ID in c:\Program Files\Firebird\Firebird_2_1\doc\README.monitoring_tables.txt and then SYSTEM TABLES section in the FB docs how to map IDs to charset textual names.

Route #3 Poor man's GTT. Enter pseudo-tables.

This trick could be used sometimes in older IB/FB versions before GTTs were introduced.

Pro: you do not need to change your persistent SCHEMA.

Con: without changing SCHEME - you can not create indices and can not use indexed joining. And yet again, you can hit the length limit of single SQL statement.

Really, don't think this would be applicable to your case, just to make the answer complete I think this trick should be mentioned too.

select * from employee e, (

SELECT 1 as Department, 10 as Number FROM RDB$DATABASE

UNION ALL SELECT 1, 11 FROM RDB$DATABASE

UNION ALL SELECT 2, 20 FROM RDB$DATABASE

UNION ALL SELECT 3, 7 FROM RDB$DATABASE

UNION ALL SELECT 3, 66 FROM RDB$DATABASE

) t,

where e.Department = t.Department

and e.Number = t.Number

Crude and ugly, but sometimes this pseudo-table might help. When? mostly it helps to make batch INSERT-from-SELECT, where indexing is not needed :-D It is rarely applicable to SELECTs - but just know the trick.

select count(*) from RDB$RELATIONS where rdb$relation_type=0 and ( rdb$System_flag is null or rdb$System_flag=0 )Maybe you'd really think about upgrading FB... – Buffoon