I have a working TensorFlow seq2seq model I've been using for image captioning that I'd like to convert over to CNTK, but I'm having trouble getting the input to my LSTM in the right format.

Here's what I do in my TensorFlow network:

    max_seq_length = 40
    embedding_size = 512

    self.x_img = tf.placeholder(tf.float32, [None, 2048])
    self.x_txt = tf.placeholder(tf.int32, [None, max_seq_length])
    self.y = tf.placeholder(tf.int32, [None, max_seq_length])

    with tf.device("/cpu:0"):
        image_embed_inputs = tf.layers.dense(inputs=self.x_img, units=embedding_size)
        image_embed_inputs = tf.reshape(image_embed_inputs, [-1, 1, embedding_size])
        image_embed_inputs = tf.contrib.layers.batch_norm(image_embed_inputs, center=True, scale=True, is_training=is_training, scope='bn')

        text_embedding = tf.Variable(tf.random_uniform([vocab_size, embedding_size], -init_scale, init_scale))
        text_embed_inputs = tf.nn.embedding_lookup(text_embedding, self.x_txt)

        inputs = tf.concat([image_embed_inputs, text_embed_inputs], 1)

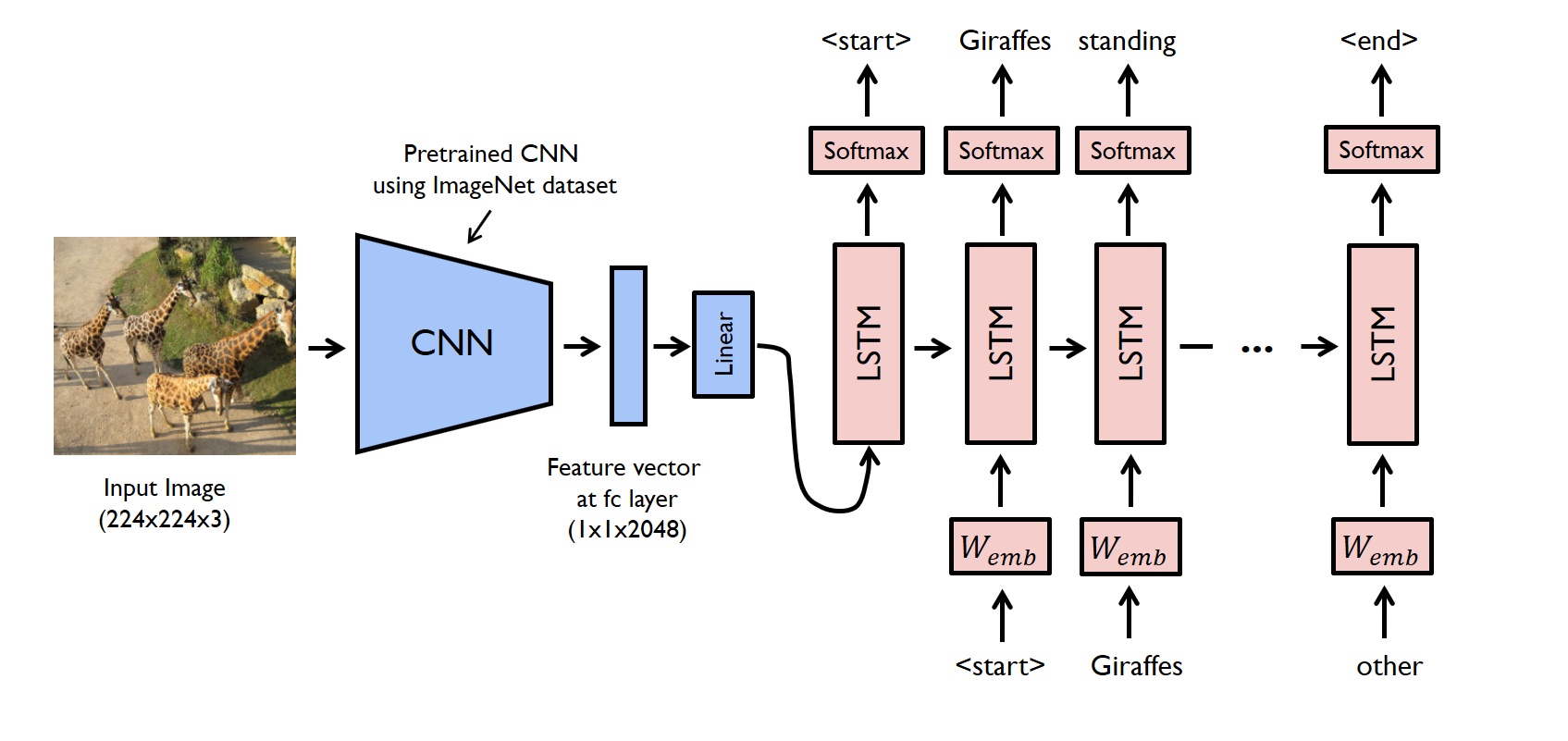

I'm taking the last 2048-dim layer of a pretrained 50-layer ResNet as part of my input. I'm then embedding that in 512-dim space via a basic dense layer (image_embed_inputs).

At the same time, I have a sequence of 40 text tokens (x_txt) that I'm embedding into 512-dim space (text_embedding / text_embed_inputs).

I'm then concatenating them together into a [-1, 41, 512] tensor, which is the actual input to my LSTM. The first element ([-1, 0, 512]) is the image embedding, and the remaining 40 elements ([-1, 1:41, 512]) are the embeddings for each text token in my input sequence.
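To make the shapes concrete, here is a minimal plain-Python sketch (no TensorFlow) of the same layout: one image embedding prepended to 40 token embeddings per example. The helper names `concat_image_and_text` and `shape` are hypothetical and exist only for this illustration, and the values are placeholders rather than real embeddings.

```python
def concat_image_and_text(image_embed, text_embeds):
    """Prepend one image embedding to a sequence of text-token embeddings.

    image_embed: list of D floats (one vector per example).
    text_embeds: list of T vectors, each a list of D floats.
    Returns a list of T + 1 vectors; index 0 is the image embedding.
    """
    dim = len(image_embed)
    if any(len(vec) != dim for vec in text_embeds):
        raise ValueError("all embeddings must share the same dimension")
    return [list(image_embed)] + [list(vec) for vec in text_embeds]


def shape(batch):
    """Return (batch, steps, dim) for a rectangular nested list."""
    return (len(batch), len(batch[0]), len(batch[0][0]))


# Toy batch with the sizes from the post: 2 examples, 40 tokens, 512 dims.
batch_size, max_seq_length, embedding_size = 2, 40, 512
image_batch = [[1.0] * embedding_size for _ in range(batch_size)]
text_batch = [[[0.0] * embedding_size for _ in range(max_seq_length)]
              for _ in range(batch_size)]

lstm_inputs = [concat_image_and_text(img, txt)
               for img, txt in zip(image_batch, text_batch)]
print(shape(lstm_inputs))  # (2, 41, 512)
```

Step 0 of every example holds the image vector (all ones here) and steps 1 through 40 hold the token vectors (all zeros here), which mirrors what `tf.concat(..., 1)` produces along the time axis.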

That works and does what I need it to do in TensorFlow. Now I'd like to do something similar in CNTK. I'm looking at the seq2seq tutorial, but I haven't figured out how to set up the input for my CNTK LSTM yet.

I've taken the 2048-dim ResNet embedding, the 40-token input text sequence, and the 40-token label sequence, and stored them in CTF text format. The ResNet embedding and the input token sequence are concatenated into a single 2088-value features field, so they can be read like so:

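    from cntk.io import MinibatchSource, CTFDeserializer, StreamDefs, StreamDef, INFINITELY_REPEAT

    def create_reader(path, is_training, input_dim, label_dim):
        # 'x' holds the 2048 ResNet values followed by the 40 input token ids;
        # 'y' holds the 40 label token ids.
        return MinibatchSource(CTFDeserializer(path, StreamDefs(
            features=StreamDef(field='x', shape=2088, is_sparse=True),
            labels=StreamDef(field='y', shape=40, is_sparse=False)
        )), randomize=is_training,
            max_sweeps=INFINITELY_REPEAT if is_training else 1)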
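For context, here is a rough sketch of how one line of such a CTF file could be written, assuming the standard CTF conventions: sparse fields are stored as `index:value` pairs and dense fields as plain space-separated values. The helper name `to_ctf_line` is hypothetical, and the tiny vectors stand in for the real 2088-value and 40-value fields.

```python
def to_ctf_line(features, labels):
    """Format one example as a CTF line with a sparse `x` and a dense `y`.

    Sparse values are written as index:value pairs and zeros are skipped;
    dense values are written as plain space-separated numbers.
    """
    sparse_x = " ".join(
        "{}:{}".format(i, v) for i, v in enumerate(features) if v != 0
    )
    dense_y = " ".join(str(v) for v in labels)
    return "|x {} |y {}".format(sparse_x, dense_y)


# Tiny stand-in: 4 "image" values followed by 3 token ids, and 3 label ids.
line = to_ctf_line([0.5, 0.0, 1.25, 0.0, 7, 3, 9], [3, 9, 1])
print(line)  # |x 0:0.5 2:1.25 4:7 5:3 6:9 |y 3 9 1
```

The field names `x` and `y` match the `field=` arguments in the reader above.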

What I'd like to do at train/test time is take that features input tensor, break it back into the 2048-dim ResNet embedding and the 40-token input text sequence, and then set up CNTK sequence entities to feed into my network. So far, though, I haven't been able to figure out how to do that. This is where I am:

    def lstm(input, embedding_dim, LSTM_dim, cell_dim, vocab_dim):
        x_image = C.slice(input, 0, 0, 2048)
        x_text = C.slice(input, 0, 2048, 2088)

        x_text_seq = sequence.input_variable(shape=[vocab_dim], is_sparse=False)

        # How do I get the data from x_text into x_text_seq?

        image_embedding = Embedding(embedding_dim)
        text_embedding = Embedding(x_text_seq)

        lstm_input = C.splice(image_embedding, text_embedding)

I'm not sure how to set up a sequence properly, though - any ideas?
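To pin down the data layout I'm after, here is a minimal sketch in plain Python (not CNTK) of the split and of the per-step vectors a `[vocab_dim]`-shaped sequence input would presumably hold. It assumes each step is a one-hot vector over the vocabulary; the helper names and the vocabulary size of 100 are hypothetical and only serve the illustration.

```python
IMAGE_DIM = 2048   # ResNet embedding size from the post
TEXT_LEN = 40      # number of input text tokens from the post


def split_features(features):
    """Split a 2088-value feature vector into (image_vector, token_ids)."""
    if len(features) != IMAGE_DIM + TEXT_LEN:
        raise ValueError("expected %d values" % (IMAGE_DIM + TEXT_LEN))
    image_vector = features[:IMAGE_DIM]
    token_ids = [int(t) for t in features[IMAGE_DIM:]]
    return image_vector, token_ids


def one_hot_sequence(token_ids, vocab_dim):
    """Turn token ids into one vocab_dim-wide one-hot vector per step."""
    sequence = []
    for token in token_ids:
        step = [0.0] * vocab_dim
        step[token] = 1.0
        sequence.append(step)
    return sequence


vocab_dim = 100  # hypothetical vocabulary size, just for the demo
features = [0.1] * IMAGE_DIM + [float(i % vocab_dim) for i in range(TEXT_LEN)]
image_vector, token_ids = split_features(features)
text_seq = one_hot_sequence(token_ids, vocab_dim)
print(len(image_vector), len(text_seq), len(text_seq[0]))  # 2048 40 100
```

The slice boundaries match the half-open ranges passed to `C.slice` above: indices 0 to 2047 for the image and 2048 to 2087 for the tokens.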