I am fairly new to cloud development and to SBT/IntelliJ, so I am trying out an IntelliJ and SBT build environment to deploy my jar on a Dataproc cluster.

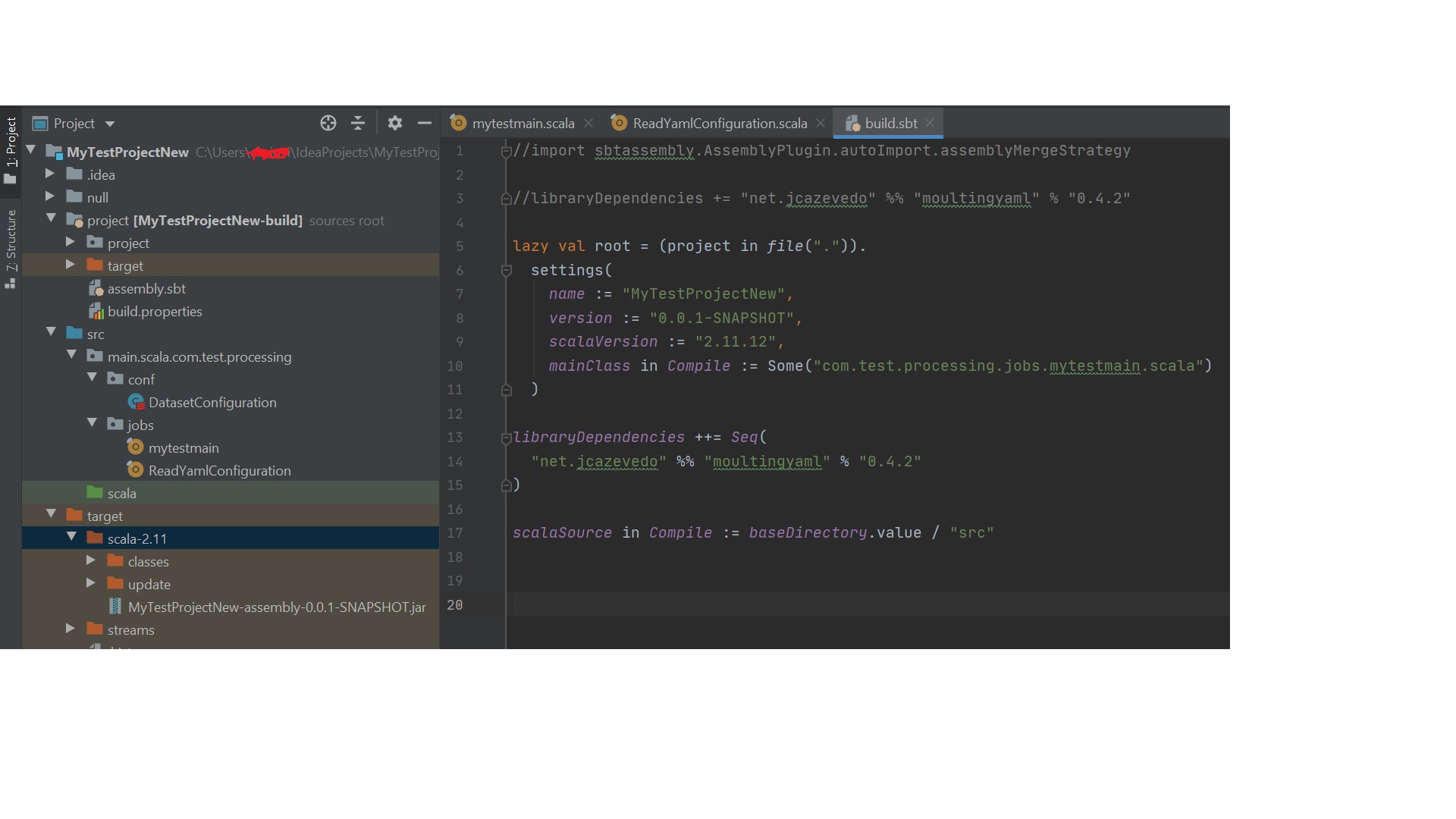

Here's a screen shot of my project structure:

The code is quite simple: the main method is defined in 'mytestmain', which calls another method defined in 'ReadYamlConfiguration'. That method needs the moultingyaml dependency, which I have included in my build.sbt as shown.

Here are my build.sbt and assembly.sbt files.

build.sbt:

    lazy val root = (project in file(".")).
      settings(
        name := "MyTestProjectNew",
        version := "0.0.1-SNAPSHOT",
        scalaVersion := "2.11.12",
        // fully qualified object name, without the .scala file extension
        mainClass in Compile := Some("com.test.processing.jobs.mytestmain")
      )

    libraryDependencies ++= Seq(
      "net.jcazevedo" %% "moultingyaml" % "0.4.2"
    )

    scalaSource in Compile := baseDirectory.value / "src"

assembly.sbt:

    addSbtPlugin("com.eed3si9n" % "sbt-assembly" % "0.14.10")

I created assembly.sbt to build an uber jar that includes the required dependencies, and ran 'sbt assembly' from the terminal. It created the assembly jar successfully, and I was able to deploy and run it on the Dataproc cluster:

    gcloud dataproc jobs submit spark \
      --cluster my-dataproc-cluster \
      --region europe-north1 --class com.test.processing.jobs.mytestmain \
      --jars gs://my-test-bucket/spark-jobs/MyTestProjectNew-assembly-0.0.1-SNAPSHOT.jar

Code is working fine as expected with no issues.

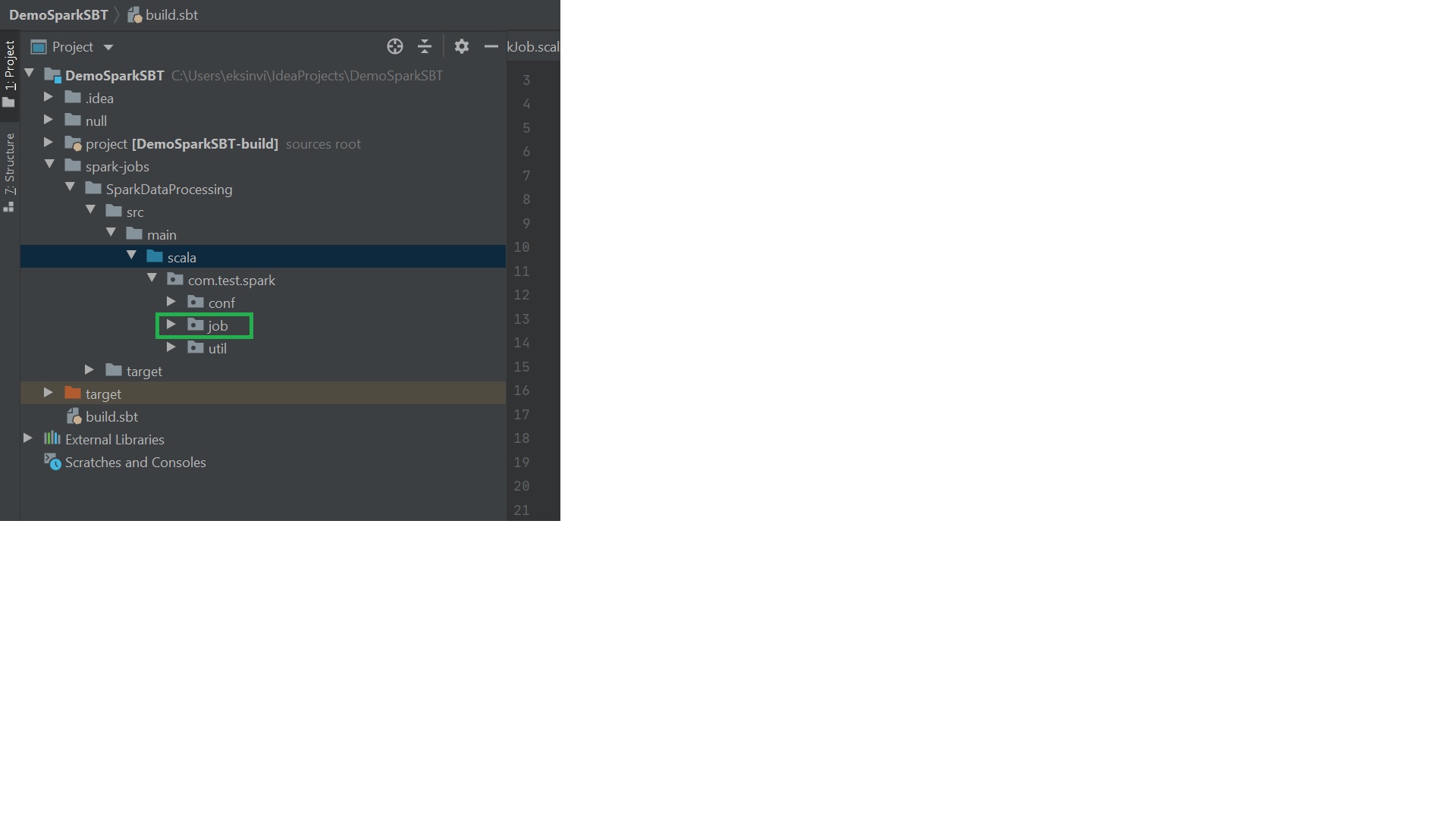

Now I would like to have my own custom directory structure as shown below:

For example, I would like to have a folder named 'spark-job' with a subdirectory named 'SparkDataProcessing', and under that a src/main/scala folder containing the packages and their Scala classes, objects, etc.

My main method is defined in the package 'job' within the 'com.test.processing' package.

What changes do I need to make in build.sbt? I am looking for a detailed explanation with a sample build.sbt that matches my project structure. Also, please suggest what should be included in the .gitignore file.
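One shape such a build.sbt could take is a sub-project whose base directory is the nested folder, so that sbt's default src/main/scala convention applies inside it. This is only a sketch under assumptions: build.sbt stays at the repository root, the existing assembly.sbt plugin definition is left unchanged, the folder is named spark-jobs/SparkDataProcessing, the main class name is taken from the `--class` argument of the submit command, and the project id `sparkDataProcessing` is a made-up name.

```scala
// build.sbt at the repository root (assumed location)
// `sparkDataProcessing` is a hypothetical project id chosen for illustration.
lazy val sparkDataProcessing = (project in file("spark-jobs/SparkDataProcessing"))
  .settings(
    name := "MyTestProjectNew",
    version := "0.0.1-SNAPSHOT",
    scalaVersion := "2.11.12",
    libraryDependencies += "net.jcazevedo" %% "moultingyaml" % "0.4.2",
    // Fully qualified object name (assumed from the gcloud --class argument).
    mainClass in assembly := Some("com.test.processing.jobs.mytestmain")
    // No scalaSource override: sources are picked up from
    // spark-jobs/SparkDataProcessing/src/main/scala by default.
  )
```

With a layout like this, the jar would be built with `sbt sparkDataProcessing/assembly`, and the sub-project's `target` folder ends up under spark-jobs/SparkDataProcessing rather than at the root.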

I am using IntelliJ IDEA 2020 Community Edition and SBT 1.3.3. I tried a few things, but always ended up with some issue with the structure, the jar, or build.sbt.

I was expecting an answer similar to the one in the post below:

Why does my sourceDirectories setting have no effect in sbt?

As you can see in the picture below, the source directory has been changed to:

    spark-jobs/SparkDataProcessing/src/main/Scala

When I build with the setting below, it does not work:

    scalaSource in Compile := baseDirectory.value / "src"

It works when I keep the default structure, i.e. src/main/scala.
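A likely reason the setting stops working is that `baseDirectory.value / "src"` resolves to a `src` folder directly under the build root, while the sources now sit several levels deeper. A minimal sketch of a single-project alternative, assuming build.sbt stays at the repository root and the folder names match the path quoted above (the case of the last segment must match the directory on disk, written here as `Scala`):

```scala
// build.sbt (sketch; assumes this file sits at the repository root)
// Point the compiler at the relocated sources instead of <root>/src.
scalaSource in Compile :=
  baseDirectory.value / "spark-jobs" / "SparkDataProcessing" / "src" / "main" / "Scala"
```

Running `show compile:scalaSource` in the sbt shell prints the resolved directory, which makes it easy to check whether the path matches the actual folder.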