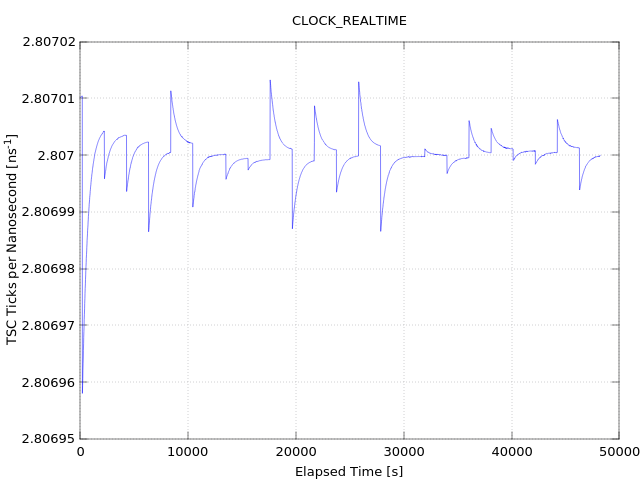

The reason for the drift seen in the OP, at least on my machine, is that the number of TSC ticks per ns drifts away from its original value, `_ticks_per_ns`. The following results were obtained on this machine:

```
don@HAL:~/UNIX/OS/3EZPcs/Ch06$ uname -a
Linux HAL 4.4.0-81-generic #104-Ubuntu SMP Wed Jun 14 08:17:06 UTC 2017 x86_64 x86_64 x86_64 GNU/Linux
don@HAL:~/UNIX/OS/3EZPcs/Ch06$ cat /sys/devices/system/clocksource/clocksource0/current_clocksource
tsc
```

`cat /proc/cpuinfo` shows the `constant_tsc` and `nonstop_tsc` flags.
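To check for these flags programmatically rather than by eye, here is a minimal sketch. It assumes the x86 Linux `/proc/cpuinfo` layout, where each CPU's features are listed on a line beginning with `flags`. `has_cpu_flag()` and `first_flags_line()` are hypothetical helpers and are not part of the programs in this answer.

```cpp
#include <fstream>
#include <sstream>
#include <string>

// Hypothetical helper: true if `flag` is a whole whitespace-separated token
// of `flags_line` (e.g. a "flags : fpu ... constant_tsc nonstop_tsc ..." line).
bool has_cpu_flag(const std::string &flags_line, const std::string &flag) {
    std::istringstream tokens(flags_line);
    std::string tok;
    while (tokens >> tok)
        if (tok == flag) return true;   // exact token match, not a substring
    return false;
}

// Hypothetical helper: first line of `path` that starts with "flags"
// (the per-CPU feature list on x86 Linux), or "" if none is found.
std::string first_flags_line(const std::string &path = "/proc/cpuinfo") {
    std::ifstream in(path);
    std::string line;
    while (std::getline(in, line))
        if (line.rfind("flags", 0) == 0) return line;   // line starts with "flags"
    return "";
}
```

For example, `has_cpu_flag(first_flags_line(), "constant_tsc")` tests for the first flag. Matching whole tokens avoids false positives from substring matches, such as `tsc` inside `constant_tsc`.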

*Figure: Sample TSC ticks per ns of `clock_gettime()` `CLOCK_REALTIME` vs. time.*

`viewRates.cc` can be run to see the current TSC ticks per ns on a machine. Like the other programs below, it uses the serialized TSC reads in `rdtscp.h`:

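rdtscp.h:

```cpp
// rdtscp.h: serialized TSC reads (x86-64, GCC/Clang inline asm).
#ifndef RDTSCP_H
#define RDTSCP_H

// Read the TSC at the start of a timed region: CPUID serializes the
// instruction stream so earlier instructions cannot drift past RDTSC.
static inline unsigned long rdtscp_start(void) {
    unsigned long var;
    unsigned int hi, lo;
    __asm volatile ("cpuid\n\t"
                    "rdtsc\n\t" : "=a" (lo), "=d" (hi)
                    :: "%rbx", "%rcx");
    var = ((unsigned long)hi << 32) | lo;
    return (var);
}

// Read the TSC at the end of a timed region: RDTSCP waits for earlier
// instructions to finish, and the trailing CPUID keeps later instructions
// from starting before the read.
static inline unsigned long rdtscp_end(void) {
    unsigned long var;
    unsigned int hi, lo;
    __asm volatile ("rdtscp\n\t"
                    "mov %%edx, %1\n\t"
                    "mov %%eax, %0\n\t"
                    "cpuid\n\t" : "=r" (lo), "=r" (hi)
                    :: "%rax", "%rbx", "%rcx", "%rdx");
    var = ((unsigned long)hi << 32) | lo;
    return (var);
}

#endif
```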

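See Intel's white paper *How to Benchmark Code Execution Times on Intel IA-32 and IA-64 Instruction Set Architectures* (ia-32-ia-64-benchmark-code-execution-paper).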

viewRates.cc:

```cpp
#include <time.h>
#include <unistd.h>
#include <cstdint>
#include <cstdlib>
#include <iomanip>
#include <iostream>
#include "rdtscp.h"

using std::cout; using std::cerr; using std::endl;

#define CLOCK CLOCK_REALTIME

uint64_t to_ns(const timespec &ts); // Converts a struct timespec to ns (since epoch).
void view_ticks_per_ns(int runs = 10, int sleep_secs = 10);

int main(int argc, char **argv) {
    int runs = 10, sleep_secs = 10;
    if (argc != 1 && argc != 3) {
        cerr << "Usage: " << argv[0] << " [ RUNS SLEEP ] \n";
        exit(1);
    } else if (argc == 3) {
        runs = std::atoi(argv[1]);
        sleep_secs = std::atoi(argv[2]);
    }
    view_ticks_per_ns(runs, sleep_secs);
}

void view_ticks_per_ns(int RUNS, int SLEEP) {
    // Prints out stream of RUNS tsc ticks per ns, each calculated over a SLEEP secs interval.
    timespec clock_start, clock_end;
    unsigned long tsc1, tsc2, tsc_start, tsc_end;
    unsigned long elapsed_ns, elapsed_ticks;
    double rate; // ticks per ns from each run.

    clock_getres(CLOCK, &clock_start);
    cout << "Clock resolution: " << to_ns(clock_start) << "ns\n\n";
    cout << " tsc ticks " << "ns " << " tsc ticks per ns\n";
    for (int i = 0; i < RUNS; ++i) {
        // Bracket clock_gettime() between two TSC reads and use the midpoint.
        tsc1 = rdtscp_start();
        clock_gettime(CLOCK, &clock_start);
        tsc2 = rdtscp_end();
        tsc_start = (tsc1 + tsc2) / 2;

        sleep(SLEEP);

        tsc1 = rdtscp_start();
        clock_gettime(CLOCK, &clock_end);
        tsc2 = rdtscp_end();
        tsc_end = (tsc1 + tsc2) / 2;

        elapsed_ticks = tsc_end - tsc_start;
        elapsed_ns = to_ns(clock_end) - to_ns(clock_start);
        rate = static_cast<double>(elapsed_ticks) / elapsed_ns;
        cout << elapsed_ticks << " " << elapsed_ns << " " << std::setprecision(12) << rate << endl;
    }
}

constexpr uint64_t BILLION {1000000000};

uint64_t to_ns(const timespec &ts) { // struct timespec -> ns since epoch
    return ts.tv_sec * BILLION + ts.tv_nsec;
}
```
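The bracketing used in `viewRates.cc` (read the TSC, call `clock_gettime()`, read the TSC again, take the midpoint) can be pulled out into pure functions, which makes it testable without real hardware. This is a sketch with hypothetical names (`BracketedSample`, `midpoint_tsc()`, `midpoint_uncertainty()`, `ticks_per_ns()`). It assumes the two TSC reads are properly serialized, as `rdtscp.h` intends, so that the true TSC value at the clock sample lies between them.

```cpp
#include <cstdint>

// One clock_gettime() sample bracketed by two TSC reads (hypothetical type).
struct BracketedSample {
    uint64_t tsc_before;  // TSC read just before clock_gettime()
    uint64_t tsc_after;   // TSC read just after clock_gettime()
    uint64_t clock_ns;    // clock_gettime() result converted to ns
};

// Midpoint of the two reads: the best single estimate of the TSC value at
// the moment the clock was sampled. a + (b - a) / 2 cannot overflow.
uint64_t midpoint_tsc(const BracketedSample &s) {
    return s.tsc_before + (s.tsc_after - s.tsc_before) / 2;
}

// Half the bracket width bounds how far the midpoint can be from the true
// TSC value at the clock sample (given serialized reads).
uint64_t midpoint_uncertainty(const BracketedSample &s) {
    return (s.tsc_after - s.tsc_before) / 2;
}

// TSC ticks per ns between two bracketed samples.
double ticks_per_ns(const BracketedSample &start, const BracketedSample &end) {
    double ticks = static_cast<double>(midpoint_tsc(end) - midpoint_tsc(start));
    double ns = static_cast<double>(end.clock_ns - start.clock_ns);
    return ticks / ns;
}
```

For non-negative integers, `a + (b - a) / 2` gives the same result as the original `(tsc1 + tsc2) / 2`. Overflow is not a concern either way at real TSC magnitudes. The uncertainty bound is the reason `get_start()` in linearExtrapolator.cc retries until `end - beg` is small.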

`linearExtrapolator.cc` can be run to re-create the OP's experiment:

linearExtrapolator.cc:

```cpp
#include <time.h>
#include <unistd.h>
#include <cstdint>
#include <iostream>
#include <iomanip>
#include <algorithm>
#include <array>
#include "rdtscp.h"

using std::cout; using std::endl; using std::array;

#define CLOCK CLOCK_REALTIME

uint64_t to_ns(const timespec &ts); // Converts a struct timespec to ns (since epoch).
void set_ticks_per_ns(bool set_rate); // Display or set tsc ticks per ns, _ticks_per_ns.
void get_start(); // Sets the 'start' time point: _start_tsc[in ticks] and _start_clock_time[in ns].
uint64_t tsc_to_ns(uint64_t tsc); // Convert tsc ticks since _start_tsc to ns (since epoch) linearly using
                                  // _ticks_per_ns with origin(0) at the 'start' point set by get_start().

uint64_t _start_tsc, _start_clock_time; // The 'start' time point as both tsc tick number, _start_tsc, and as
                                        // clock_gettime ns since epoch, _start_clock_time.
double _ticks_per_ns; // Calibrated in set_ticks_per_ns()

int main() {
    set_ticks_per_ns(true); // Set _ticks_per_ns as the initial TSC ticks per ns.
    uint64_t tsc1, tsc2, tsc_now, tsc_ns, utc_ns;
    int64_t ns_diff;
    bool first_pass{true};
    for (int i = 0; i < 10; ++i) {
        timespec utc_now;
        if (first_pass) {
            get_start(); // Start time in both ns since epoch (_start_clock_time) and tsc tick number (_start_tsc)
            cout << "_start_clock_time: " << _start_clock_time << ", _start_tsc: " << _start_tsc << endl;
            utc_ns = _start_clock_time;
            tsc_ns = tsc_to_ns(_start_tsc); // == _start_clock_time by definition.
            tsc_now = _start_tsc;
            first_pass = false;
        } else {
            tsc1 = rdtscp_start();
            clock_gettime(CLOCK, &utc_now);
            tsc2 = rdtscp_end();
            tsc_now = (tsc1 + tsc2) / 2;
            tsc_ns = tsc_to_ns(tsc_now);
            utc_ns = to_ns(utc_now);
        }
        // Signed difference: extrapolated ns minus clock_gettime() ns.
        ns_diff = static_cast<int64_t>(tsc_ns) - static_cast<int64_t>(utc_ns);
        cout << "elapsed ns: " << utc_ns - _start_clock_time << ", elapsed ticks: " << tsc_now - _start_tsc
             << ", ns_diff: " << ns_diff << '\n' << endl;
        set_ticks_per_ns(false); // Display current TSC ticks per ns (does not alter original _ticks_per_ns).
    }
}

void set_ticks_per_ns(bool set_rate) {
    constexpr int RUNS {1}, SLEEP{10};
    timespec clock_start, clock_end;
    uint64_t tsc1, tsc2, tsc_start, tsc_end;
    uint64_t elapsed_ns[RUNS], elapsed_ticks[RUNS];
    array<double, RUNS> rates; // ticks per ns from each run.

    if (set_rate) {
        clock_getres(CLOCK, &clock_start);
        cout << "Clock resolution: " << to_ns(clock_start) << "ns\n";
    }
    for (int i = 0; i < RUNS; ++i) {
        tsc1 = rdtscp_start();
        clock_gettime(CLOCK, &clock_start);
        tsc2 = rdtscp_end();
        tsc_start = (tsc1 + tsc2) / 2;
        sleep(SLEEP);
        tsc1 = rdtscp_start();
        clock_gettime(CLOCK, &clock_end);
        tsc2 = rdtscp_end();
        tsc_end = (tsc1 + tsc2) / 2;
        elapsed_ticks[i] = tsc_end - tsc_start;
        elapsed_ns[i] = to_ns(clock_end) - to_ns(clock_start);
        rates[i] = static_cast<double>(elapsed_ticks[i]) / elapsed_ns[i];
    }
    cout << " tsc ticks " << "ns " << "tsc ticks per ns" << endl;
    for (int i = 0; i < RUNS; ++i)
        cout << elapsed_ticks[i] << " " << elapsed_ns[i] << " " << std::setprecision(12) << rates[i] << endl;
    if (set_rate)
        _ticks_per_ns = rates[RUNS-1];
}

constexpr uint64_t BILLION {1000000000};

uint64_t to_ns(const timespec &ts) {
    return ts.tv_sec * BILLION + ts.tv_nsec;
}

void get_start() { // Get start time both in tsc ticks as _start_tsc, and in ns since epoch as _start_clock_time
    timespec ts;
    uint64_t beg, end;
    // loop to ensure we aren't interrupted between the two tsc reads
    while (1) {
        beg = rdtscp_start();
        clock_gettime(CLOCK, &ts);
        end = rdtscp_end();
        if ((end - beg) <= 2000) // max ticks per clock call
            break;
    }
    _start_tsc = (end + beg) / 2;
    _start_clock_time = to_ns(ts); // converts timespec to ns since epoch
}

uint64_t tsc_to_ns(uint64_t tsc) { // Convert tsc ticks into absolute ns:
    // Absolute ns is defined by this linear extrapolation from the start point where
    // _start_tsc[in ticks] corresponds to _start_clock_time[in ns].
    uint64_t diff = tsc - _start_tsc;
    return _start_clock_time + static_cast<uint64_t>(diff / _ticks_per_ns);
}
```
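Together, the globals `_start_tsc`, `_start_clock_time` and `_ticks_per_ns` define a single straight line from TSC ticks to ns since the epoch. The sketch below bundles them into a struct so that the extrapolation and `ns_diff` can be checked in isolation. `TscCalibration` and the free functions are hypothetical names. The arithmetic, including truncation toward zero, mirrors `tsc_to_ns()` above.

```cpp
#include <cstdint>

// Hypothetical bundle of the state linearExtrapolator.cc keeps in globals.
struct TscCalibration {
    uint64_t start_tsc;   // TSC value at the 'start' point
    uint64_t start_ns;    // clock_gettime() ns since epoch at the same point
    double ticks_per_ns;  // rate measured once, before the start point
};

// ns = start_ns + (tsc - start_tsc) / ticks_per_ns, truncated toward zero,
// as in tsc_to_ns() in linearExtrapolator.cc.
uint64_t tsc_to_ns(const TscCalibration &cal, uint64_t tsc) {
    uint64_t diff = tsc - cal.start_tsc;
    return cal.start_ns + static_cast<uint64_t>(diff / cal.ticks_per_ns);
}

// Extrapolated time minus the time reported by the clock (ns_diff).
// Both values are ns since the epoch, well below 2^63, so the casts are safe.
int64_t ns_diff(const TscCalibration &cal, uint64_t tsc, uint64_t clock_ns) {
    return static_cast<int64_t>(tsc_to_ns(cal, tsc)) - static_cast<int64_t>(clock_ns);
}
```

Because the line is fixed once at start-up, any later change in the true ticks-per-ns ratio shows up directly as a steadily growing `ns_diff`.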

Here is output from a run of viewRates immediately followed by linearExtrapolator:

```
# ./viewRates
Clock resolution: 1ns

tsc ticks ns tsc ticks per ns
28070466526 10000176697 2.8069970538
28070500272 10000194599 2.80699540335
28070489661 10000196097 2.80699392179
28070404159 10000170879 2.80699245029
28070464811 10000197285 2.80699110338
28070445753 10000195177 2.80698978932
28070430538 10000194298 2.80698851457
28070427907 10000197673 2.80698730414
28070409903 10000195492 2.80698611597
28070398177 10000195328 2.80698498942
# ./linearExtrapolator
Clock resolution: 1ns
tsc ticks ns tsc ticks per ns
28070385587 10000197480 2.8069831264
_start_clock_time: 1497966724156422794, _start_tsc: 4758879747559
elapsed ns: 0, elapsed ticks: 0, ns_diff: 0

tsc ticks ns tsc ticks per ns
28070364084 10000193633 2.80698205596
elapsed ns: 10000247486, elapsed ticks: 28070516229, ns_diff: -3465

tsc ticks ns tsc ticks per ns
28070358445 10000195130 2.80698107188
elapsed ns: 20000496849, elapsed ticks: 56141027929, ns_diff: -10419

tsc ticks ns tsc ticks per ns
28070350693 10000195646 2.80698015186
elapsed ns: 30000747550, elapsed ticks: 84211534141, ns_diff: -20667

tsc ticks ns tsc ticks per ns
28070324772 10000189692 2.80697923105
elapsed ns: 40000982325, elapsed ticks: 112281986547, ns_diff: -34158

tsc ticks ns tsc ticks per ns
28070340494 10000198352 2.80697837242
elapsed ns: 50001225563, elapsed ticks: 140352454025, ns_diff: -50742

tsc ticks ns tsc ticks per ns
28070325598 10000196057 2.80697752704
elapsed ns: 60001465937, elapsed ticks: 168422905017, ns_diff: -70335

# ^C
```

The viewRates output shows that the TSC ticks per ns is decreasing fairly rapidly with time, corresponding to one of the steep drops in the plot above. As in the OP, the linearExtrapolator output shows `ns_diff`, the difference between the elapsed ns obtained by converting the elapsed TSC ticks to ns with the `_ticks_per_ns` == 2.8069831264 measured at start time and the elapsed ns reported by `clock_gettime()`. Rather than calling `sleep(10)` between printouts of elapsed ns, elapsed ticks and `ns_diff`, I re-run the TSC ticks-per-ns calculation over a 10 s window, which also prints the current ratio. The trend of decreasing TSC ticks per ns seen in the viewRates output continues throughout the linearExtrapolator run.

Dividing the elapsed ticks by `_ticks_per_ns` and subtracting the corresponding elapsed ns gives `ns_diff`, e.g. (84211534141 / 2.8069831264) - 30000747550 = -20667. This is not 0, mainly because of the drift in TSC ticks per ns. Had we used 2.80698015186 ticks per ns, the value measured over the last 10 s interval, the result would be (84211534141 / 2.80698015186) - 30000747550 = 11125. The additional error accumulated during that last 10 s interval, -20667 - (-10419) = -10248, nearly disappears when the correct TSC ticks per ns for that interval is used: (84211534141 - 56141027929) / 2.80698015186 - (30000747550 - 20000496849) = 349.
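The arithmetic in the paragraph above can be reproduced with a small helper. This is a sketch. `conversion_error_ns()` and `rounded_error_ns()` are hypothetical names, and rounding to the nearest ns with `std::llround` is a choice made here. `tsc_to_ns()` truncates instead, so individual results can differ by 1 ns.

```cpp
#include <cmath>
#include <cstdint>

// Error (ns) made when `ticks` elapsed TSC ticks are converted to ns with
// `rate` ticks per ns and compared with the `ns` reported by clock_gettime().
double conversion_error_ns(uint64_t ticks, uint64_t ns, double rate) {
    return static_cast<double>(ticks) / rate - static_cast<double>(ns);
}

// Same error, rounded to the nearest whole ns.
long long rounded_error_ns(uint64_t ticks, uint64_t ns, double rate) {
    return std::llround(conversion_error_ns(ticks, ns, rate));
}
```

With the numbers above, `rounded_error_ns(84211534141, 30000747550, 2.8069831264)` gives -20667, and the same call with 2.80698015186 gives 11125. Calling `rounded_error_ns(84211534141 - 56141027929, 30000747550 - 20000496849, 2.80698015186)` gives 349.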

If linearExtrapolator had been run at a time when the TSC ticks per ns was constant, the accuracy would be limited by how well the (constant) `_ticks_per_ns` had been determined, and it would then pay to take, e.g., the median of several estimates. If `_ticks_per_ns` were off by a fixed 40 parts per billion, a constant drift of about 400 ns every 10 s would be expected (10^10 ns × 40 × 10^-9 = 400 ns), so `ns_diff` would grow or shrink by about 400 every 10 s.
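To first order, the 400 ns figure is the elapsed time multiplied by the relative rate error. The sketch below computes that error, and also takes the median of several rate estimates using the same `std::nth_element` approach as `genTimeSeriesofRates.cc`. Both function names are hypothetical, and the median helper assumes a non-empty input.

```cpp
#include <algorithm>
#include <vector>

// First-order error magnitude (ns) after `elapsed_ns` when the assumed rate is
// off by `error_ppb` parts per billion and the true rate is constant.
// An assumed rate that is too high makes ns_diff fall; too low makes it rise.
double constant_rate_drift_ns(double elapsed_ns, double error_ppb) {
    return elapsed_ns * error_ppb * 1e-9;
}

// Median of several ticks-per-ns estimates (upper median for even sizes).
// Precondition: rates is non-empty. Takes a copy because nth_element reorders.
double median_rate(std::vector<double> rates) {
    auto mid = rates.begin() + rates.size() / 2;
    std::nth_element(rates.begin(), mid, rates.end());
    return *mid;
}
```

`constant_rate_drift_ns(10e9, 40)` returns about 400. A median is less sensitive than a mean to an occasional estimate spoiled by an interrupt between the bracketing TSC reads.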

`genTimeSeriesofRates.cc` can be used to generate data for a plot like the one above:

genTimeSeriesofRates.cc:

```cpp
#include <time.h>
#include <unistd.h>
#include <iostream>
#include <iomanip>
#include <algorithm>
#include <array>
#include "rdtscp.h"

using std::cout; using std::cerr; using std::endl; using std::array;

double get_ticks_per_ns(long &ticks, long &ns); // Get median tsc ticks per ns, ticks and ns.
long ts_to_ns(const timespec &ts);

#define CLOCK CLOCK_REALTIME // clock_gettime() clock to use.
#define TIMESTEP 10          // secs to sleep between samples.
#define NSTEPS 10000
#define RUNS 5 // Number of RUNS and SLEEP interval used for each sample in get_ticks_per_ns().
#define SLEEP 1

int main() {
    timespec ts;
    clock_getres(CLOCK, &ts);
    cerr << "CLOCK resolution: " << ts_to_ns(ts) << "ns\n";
    clock_gettime(CLOCK, &ts);
    int start_time = ts.tv_sec;
    double ticks_per_ns;
    int running_elapsed_time = 0; // approx secs since start_time to center of the sampling done by get_ticks_per_ns()
    long ticks, ns;
    for (int timestep = 0; timestep < NSTEPS; ++timestep) {
        clock_gettime(CLOCK, &ts);
        ticks_per_ns = get_ticks_per_ns(ticks, ns);
        running_elapsed_time = ts.tv_sec - start_time + RUNS * SLEEP / 2;
        cout << running_elapsed_time << ' ' << ticks << ' ' << ns << ' '
             << std::setprecision(12) << ticks_per_ns << endl;
        sleep(TIMESTEP);
    }
}

double get_ticks_per_ns(long &ticks, long &ns) {
    // Get the median over RUNS runs of elapsed tsc ticks, CLOCK ns, and their ratio over a SLEEP secs time interval
    timespec clock_start, clock_end;
    long tsc_start, tsc_end;
    array<long, RUNS> elapsed_ns, elapsed_ticks;
    array<double, RUNS> rates; // arrays from each run from which to get medians.
    for (int i = 0; i < RUNS; ++i) {
        clock_gettime(CLOCK, &clock_start);
        tsc_start = rdtscp_end(); // minimizes time between clock_start and tsc_start.
        sleep(SLEEP);
        clock_gettime(CLOCK, &clock_end);
        tsc_end = rdtscp_end();
        elapsed_ticks[i] = tsc_end - tsc_start;
        elapsed_ns[i] = ts_to_ns(clock_end) - ts_to_ns(clock_start);
        rates[i] = static_cast<double>(elapsed_ticks[i]) / elapsed_ns[i];
    }
    // get medians:
    std::nth_element(elapsed_ns.begin(), elapsed_ns.begin() + RUNS/2, elapsed_ns.end());
    std::nth_element(elapsed_ticks.begin(), elapsed_ticks.begin() + RUNS/2, elapsed_ticks.end());
    std::nth_element(rates.begin(), rates.begin() + RUNS/2, rates.end());
    ticks = elapsed_ticks[RUNS/2];
    ns = elapsed_ns[RUNS/2];
    return rates[RUNS/2];
}

constexpr long BILLION {1000000000};

long ts_to_ns(const timespec &ts) {
    return ts.tv_sec * BILLION + ts.tv_nsec;
}
```
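A time series written by `genTimeSeriesofRates.cc` has four columns: approximate elapsed seconds, ticks, ns, and ticks per ns. One way to reduce it to a single drift figure is an ordinary least-squares slope of ticks per ns against elapsed time. This is an illustrative addition, not something the programs above do, and the function names are hypothetical. A straight-line fit only makes sense over a stretch where the rate changes roughly linearly, e.g. within one of the steep drops in the plot.

```cpp
#include <cstddef>
#include <vector>

// Ordinary least-squares slope of rate (ticks per ns) against time (s).
// Precondition: t.size() == rate.size() >= 2 and not all times are equal.
double rate_slope_per_s(const std::vector<double> &t, const std::vector<double> &rate) {
    const std::size_t n = t.size();
    double mean_t = 0, mean_r = 0;
    for (std::size_t i = 0; i < n; ++i) { mean_t += t[i]; mean_r += rate[i]; }
    mean_t /= n;
    mean_r /= n;
    double sxy = 0, sxx = 0;
    for (std::size_t i = 0; i < n; ++i) {
        sxy += (t[i] - mean_t) * (rate[i] - mean_r);
        sxx += (t[i] - mean_t) * (t[i] - mean_t);
    }
    return sxy / sxx;
}

// The same slope expressed in parts per billion of the mean rate, per second.
double drift_ppb_per_s(const std::vector<double> &t, const std::vector<double> &rate) {
    double mean_r = 0;
    for (double r : rate) mean_r += r;
    mean_r /= rate.size();
    return rate_slope_per_s(t, rate) / mean_r * 1e9;
}
```

Passing the first and fourth columns of that output to `drift_ppb_per_s()` gives the rate change in parts per billion per second. A negative value means the TSC ticks per ns is falling, as in the runs above.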

- … `dlsym` it). – Suk
- … `constant_tsc` was to keep the TSCs synchronized across all cores in a system? – Pneumograph
- `constant_tsc` means that the TSC ticks at a constant frequency independent of frequency scaling/TurboBoost/etc. For cores of the same make I'd imagine they'd tick at the same speed. But when each core actually starts its TSC ticking isn't synchronized, so between each core there will be an offset. Software can attempt to synchronize the two, but you usually can't make them match to nanosecond precision. – Kiakiah
- … `nonstop_tsc`, but then I'm reading in the Intel manual: "Constant TSC behavior ensures that the duration of each clock tick is uniform and supports the use of the TSC as a wall clock timer even if the processor core changes frequency. This is the architectural behavior moving forward." I will try my test with `taskset`. – Pneumograph
- … `sched_setaffinity` to lock it to a core: no difference. – Pneumograph
- … `sched_setaffinity` would solve it, you'd probably [before that] have seen jitter up/down rather than steady drift. I have independent code for this with some 20 years mileage on it. I'm experimenting now. If I find something, I'll post [At present, my program confirms the drift]. BTW, the best value for CPU kHz is derived from `bogomips / 2` rather than `/sys/...` – Haley
- … `constant` and `nonstop` exactly inverted, and you should use Intel's terminology. As you found, constant TSC means constant period/frequency, and Intel's manual, immediately below in 17.14.1 Invariant TSC, describes the TSC as ticking regardless of sleep states, which is what that link should have called `nonstop`. But the asynchrony between the cores is apparently not the problem here. – Kiakiah
- … `tsc` ticks and computing the gap with `clock_gettime`; the reason being that `clock_gettime` is much more expensive than `rdtscp`. 3) You should subtract the overhead of `rdtscp`. – Kiakiah