You can try using np.multiply.outer(A,B).

Assume you have the following data:

a = np.array([[4, 2],

[2, 2]])

b = np.array([[4, 3],

[4, 0]])

You want to do the following two multiplications:

np.outer(a[:,0],b[0,:]) = array([[16, 12],

[ 8, 6]])

np.outer(a[:,1],b[1,:]) = array([[8, 0],

[8, 0]])

This can be done using the mp.multiply.outer method. The np.multiply.outer "Apply the ufunc op to all pairs (a, b) with a in A and b in B." (See description here). This function perform all possible outer products between A and B, which in this simple example results in a (2,2,2,2) shape matrix. Obviously, you do not need all possible outer product, you just need to extract the one you want from this matrix.

You can see that:

np.multiply.outer(a,b)[0,:,0,:] = array([[16, 12],

[ 8, 6]])

np.multiply.outer(a,b)[1,:,1,:] = array([[8, 0],

[8, 0]])

Using this method you do not need to do the for loop, but you execute redundant computations. However, the numpy package is optimized, and perhaps this will be faster (for very large A and B you can try speeding things up using the jit decorator

Another method in which you do not compute redundant computations is via using np.newaxis to expand the matrices before multiplication.

Using the same a, b from above, perform the following:

a[:,:,np.newaxis] = array([[[4],

[2]],

[[2],

[2]]])

b[:,np.newaxis,:] = array([[[4, 3]],

[[4, 0]]])

Now you can simply do multiplication to receive:

a[:,:,np.newaxis]*b[:,np.newaxis,:] = array([[[16, 12],

[ 8, 6]],

[[ 8, 0],

[ 8, 0]]])

This is the exact result of the outer products without the redundant computation of np.multiply.outer. All that is left is to sum over the 1st dimension as follows:

results = np.sum(a[:,:,np.newaxis]*b[:,np.newaxis,:], axis=0)

Continuing with the second example, exapnding the example to divide each outer product by a different number can be done as follows:

Assuming the vector pd consists of to numbers (since there fore two outer products), the change needed can now simply be done using::

pd = np.array([[[6]],[[8]]]) # shape is (2,1,1) because there are two outer products

solution = np.sum((a[:,:,np.newaxis] * b[:,np.newaxis,:]) / pd, axis=0)

Another method will be to set pd as a (1,2) shaped array, and divide a prior to multiplication:

pd = np.array([[6,8]]) # shape (1,2) beacuse there are two outer products

solution = np.sum((a / pd)[:,:,np.newaxis]* b[:,np.newaxis,:], axis=0)

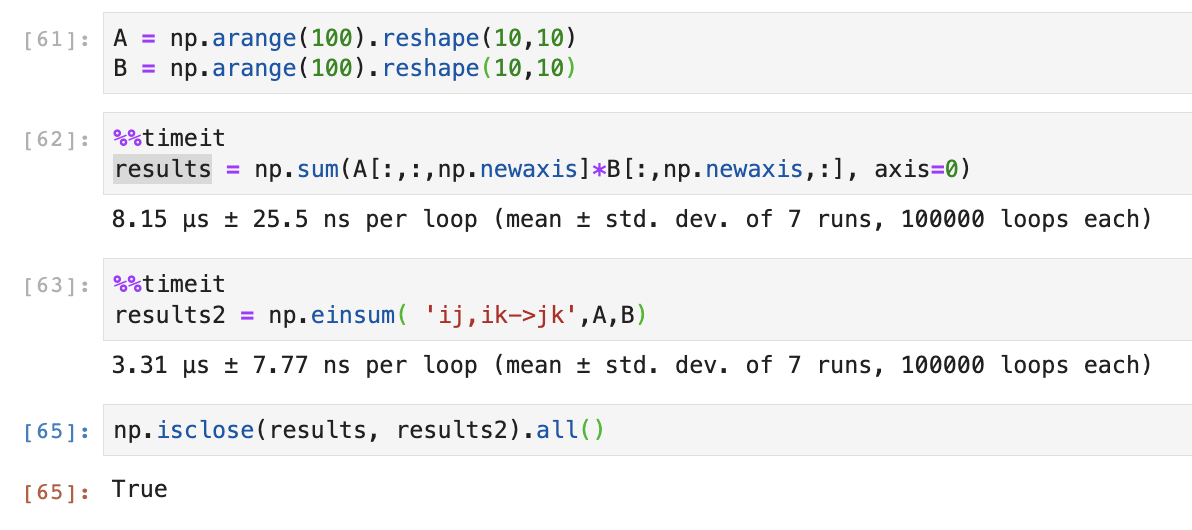

Regaurding the Einstein summation proposed in the other solution, you can go with:

pd = np.array([[6,8]]) # shape (1,2) beacuse there are two outer products

solution = np.einsum( 'ij,ik->jk',a/pd,b)

A @ Bworks fine. – EdlinA @ Bas suggested already, and if it fails you, a next thing to check some Swiss-army knife, likeeinsum– Dyernumpybut sounds like a really bad approach. – Edlin@– Frenchmanmatmul/@in the loop. Just from reading code it isn't obvious how this is any different from using it on the whole arrays,A@B.dot/matmul/einsumare the 'vectorized' sum-of-products functions. – Cygnus@is the operator fornp.matmul. That's an extension ofnp.dot. – CygnusA@B. SoA@Bis the "vectorization". For your question to be meaningful, the function needs to return something different, something that is worth doing in a slower manner. – CygnusAp @ Bp. – EdlinAp @ Bpseems to be the best way here – MexicaliAp/(len(k)*pd_uniform[k]) @ Bp. Still not an approximation. – Edlin