I wanted to fit a linear regression with sklearn, which I use as a benchmark for non-linear approaches such as MLPRegressor, but also for variations of linear regression such as Ridge, Lasso and ElasticNet (see here for an introduction to this group: http://scikit-learn.org/stable/modules/linear_model.html).

Doing it the same way as described by @silviomoreto (which worked for all other models) gave me an erroneous model (very high errors). This is most likely due to the so-called dummy variable trap: when you include one dummy variable per category of a categorical variable, the dummy columns sum to one in every row and are therefore perfectly collinear with the intercept. That is exactly the encoding OneHotEncoder produces! See also the following discussion on stats.stackexchange: https://stats.stackexchange.com/questions/224051/one-hot-vs-dummy-encoding-in-scikit-learn.
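To make the collinearity concrete, here is a minimal NumPy sketch. The three-level feature and the variable names are made up for illustration; it only compares the rank of the design matrix with and without the last dummy column.

```python
import numpy as np

# Hypothetical categorical feature with three levels, encoded as integers.
codes = np.array([0, 1, 2, 0, 1, 2])

# Full one-hot encoding: one column per level.
one_hot = np.eye(3)[codes]

# Design matrices of a least-squares fit with an intercept column.
intercept = np.ones((len(codes), 1))
X_full = np.hstack([intercept, one_hot])             # all dummies kept
X_dropped = np.hstack([intercept, one_hot[:, :-1]])  # last dummy removed

# The one-hot columns add up to the intercept column, so X_full
# has fewer independent columns than it has columns.
rank_full = np.linalg.matrix_rank(X_full)
rank_dropped = np.linalg.matrix_rank(X_dropped)
print(X_full.shape[1], rank_full)        # 4 columns, rank 3
print(X_dropped.shape[1], rank_dropped)  # 3 columns, rank 3
```

With the full encoding the coefficients are not uniquely determined, which fits the unstable plain linear regression described above.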

To avoid this, I wrote a simple wrapper that drops one dummy column; the dropped category then acts as the default (reference level).

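    from sklearn.base import BaseEstimator, TransformerMixin
    from sklearn.preprocessing import OneHotEncoder

    class DummyEncoder(BaseEstimator, TransformerMixin):
        """One-hot encode X, then drop the last column so it acts as the default."""

        def __init__(self, n_values='auto'):
            self.n_values = n_values

        def transform(self, X):
            # Reuse the encoder learned in fit() so train and test get the same columns.
            return self.ohe_.transform(X)[:, :-1]

        def fit(self, X, y=None, **fit_params):
            # n_values and sparse are arguments of older scikit-learn releases.
            self.ohe_ = OneHotEncoder(sparse=False, n_values=self.n_values).fit(X)
            return self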

So building on the code of @silviomoreto, you would change line 6:

    enc = DummyEncoder()

This solved the problem for me. Note that OneHotEncoder worked fine (and better) for all other models, such as Ridge, Lasso and ANN.
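As a side note, scikit-learn 0.21 and later provide a `drop` parameter on OneHotEncoder itself. The following is only a sketch with made-up data, not a drop-in replacement for the wrapper above: it drops the first level of every feature, whereas the wrapper slices off the last column of the whole encoded matrix.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Hypothetical data: two categorical columns with 3 and 2 levels.
X = np.array([
    ["red", "small"],
    ["green", "large"],
    ["blue", "small"],
    ["green", "small"],
])

# drop="first" removes the first (sorted) level of every feature.
enc = OneHotEncoder(drop="first")
X_enc = enc.fit_transform(X).toarray()  # the result is sparse by default

print(enc.categories_)  # levels found per feature, in sorted order
print(X_enc.shape)      # (4, 3): two columns for colour, one for size
```

Here "blue" and "large" become the reference levels, so each feature contributes one column fewer than it has categories.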

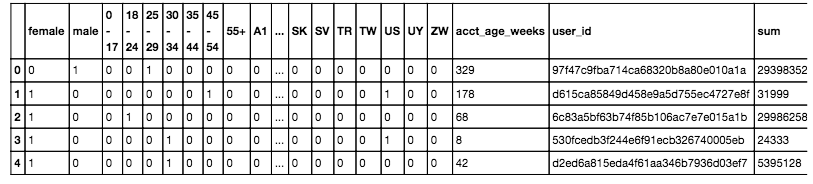

I chose this way because I wanted to include it in my feature pipeline. But you seem to have the data already encoded. In that case, you would have to drop one column per categorical variable (e.g. for male/female, include only one of the two). If you used pandas.get_dummies(...), for example, this can be done with the parameter drop_first=True.
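A small sketch of the pandas route, assuming a made-up data frame with a `sex` and an `age` column:

```python
import pandas as pd

# Hypothetical data frame with one categorical column.
df = pd.DataFrame({
    "sex": ["male", "female", "female", "male"],
    "age": [23, 35, 41, 29],
})

# drop_first=True keeps k-1 dummy columns for a variable with k levels.
encoded = pd.get_dummies(df, columns=["sex"], drop_first=True)
print(encoded.columns.tolist())  # ['age', 'sex_male']
```

The level "female" is dropped and becomes the default, so `sex_male` alone carries the information.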

Last but not least, if you really need to go deeper into linear regression in Python, and not use it just as a benchmark, I would recommend statsmodels over scikit-learn (https://pypi.python.org/pypi/statsmodels), as it provides better model statistics, e.g. p-values per variable, etc.