I am working with the R programming language and trying to learn about how to use Selenium to interact with webpages.

For example, using Google Maps - I am trying to find the name, address and longitude/latitude of all Pizza shops around a certain area. As I understand, this would involve entering the location you are interested in, clicking the "nearby" button, entering what you are looking for (e.g. "pizza"), scrolling all the way to the bottom to make sure all pizza shops are loaded - and then copying the names, address and longitude/latitudes of all pizza locations.

I have been self-teaching myself how to use Selenium in R and have been able to solve parts of this problem myself. Here is what I have done so far:

Part 1: Searching for an address (e.g. Statue of Liberty, New York, USA) and returning a longitude/latitude :

library(RSelenium)

library(wdman)

library(netstat)

selenium()

seleium_object <- selenium(retcommand = T, check = F)

remote_driver <- rsDriver(browser = "chrome", chromever = "114.0.5735.90", verbose = F, port = free_port())

remDr<- remote_driver$client

remDr$navigate("https://www.google.com/maps")

search_box <- remDr$findElement(using = 'css selector', "#searchboxinput")

search_box$sendKeysToElement(list("Statue of Liberty", key = "enter"))

Sys.sleep(5)

url <- remDr$getCurrentUrl()[[1]]

long_lat <- gsub(".*@(-?[0-9.]+),(-?[0-9.]+),.*", "\\1,\\2", url)

long_lat <- unlist(strsplit(long_lat, ","))

> long_lat

[1] "40.7269409" "-74.0906116"

Part 2: Searching for all Pizza shops around a certain location:

library(RSelenium)

library(wdman)

library(netstat)

selenium()

seleium_object <- selenium(retcommand = T, check = F)

remote_driver <- rsDriver(browser = "chrome", chromever = "114.0.5735.90", verbose = F, port = free_port())

remDr<- remote_driver$client

remDr$navigate("https://www.google.com/maps")

Sys.sleep(5)

search_box <- remDr$findElement(using = 'css selector', "#searchboxinput")

search_box$sendKeysToElement(list("40.7256456,-74.0909442", key = "enter"))

Sys.sleep(5)

search_box <- remDr$findElement(using = 'css selector', "#searchboxinput")

search_box$clearElement()

search_box$sendKeysToElement(list("pizza", key = "enter"))

Sys.sleep(5)

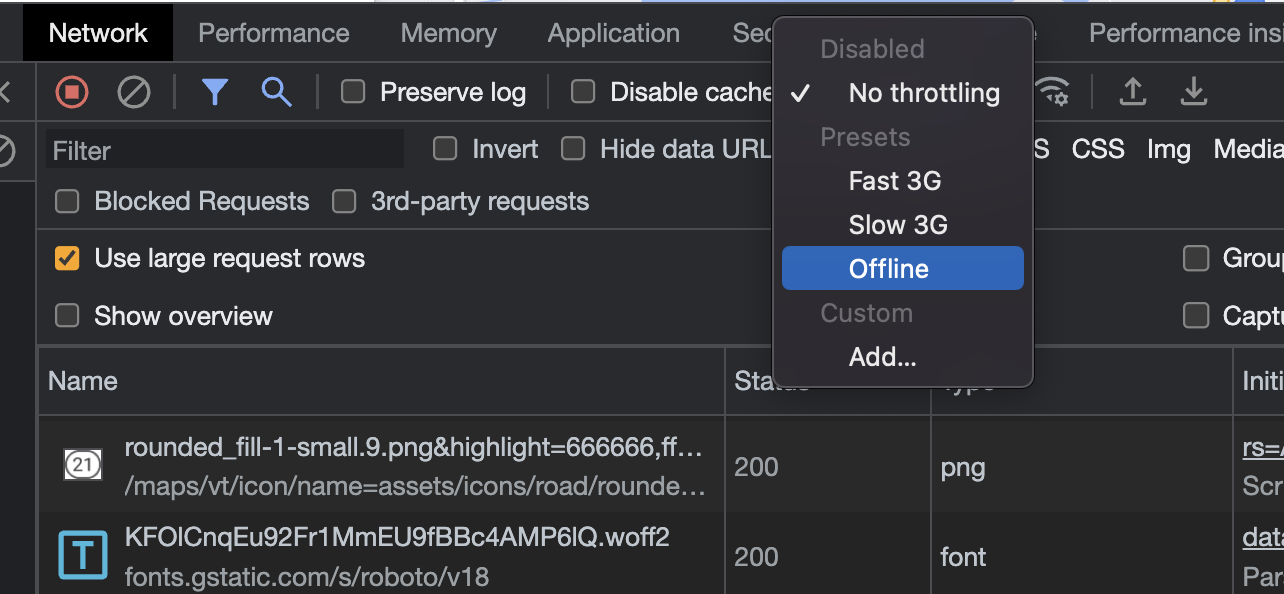

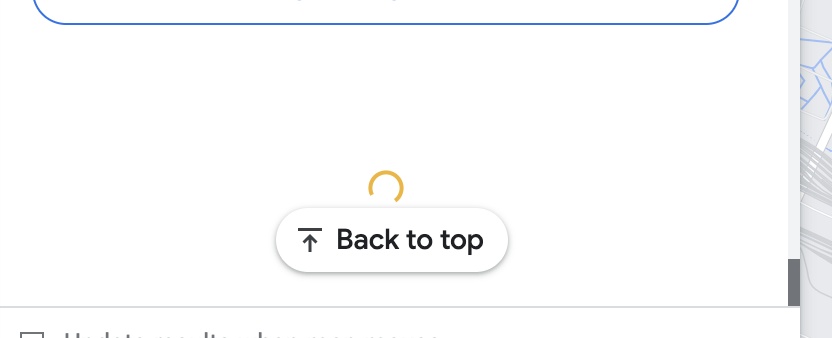

But from here, I do not know how to proceed. I do not know how to scroll the page all the way to the bottom to view all such results that are available - and I do not know how to start extracting the names.

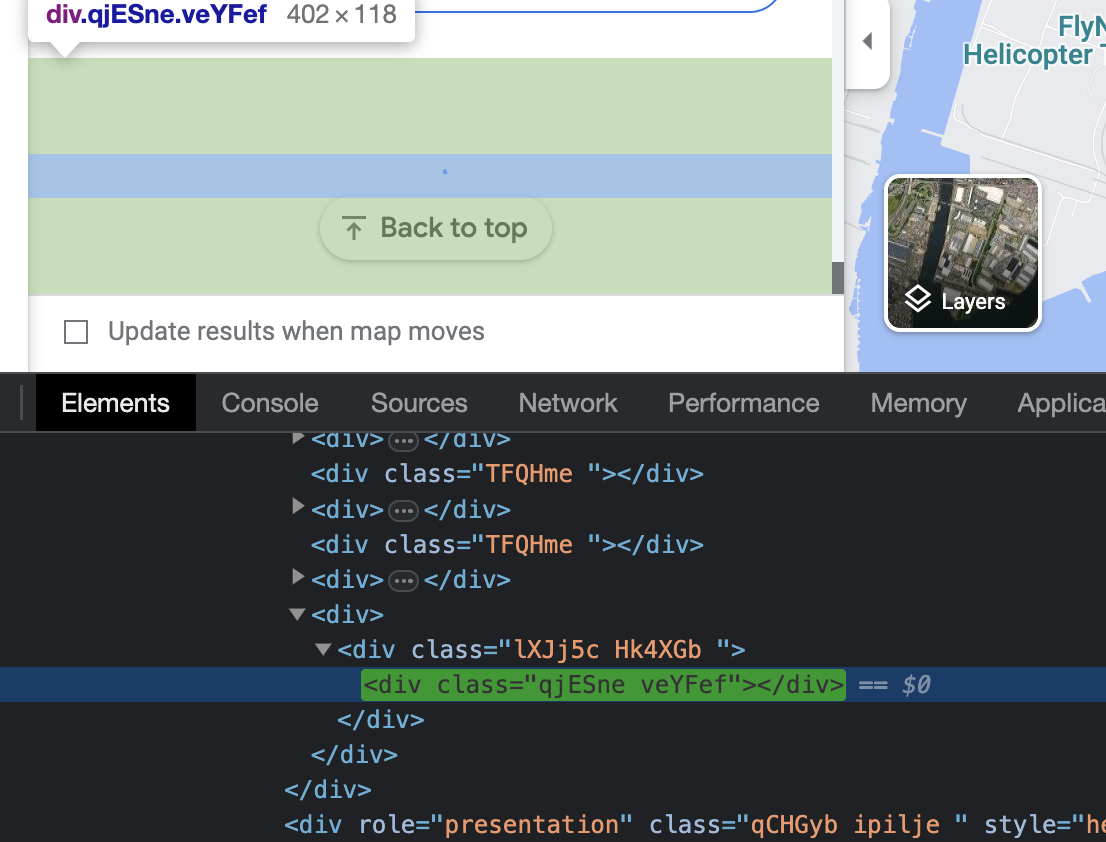

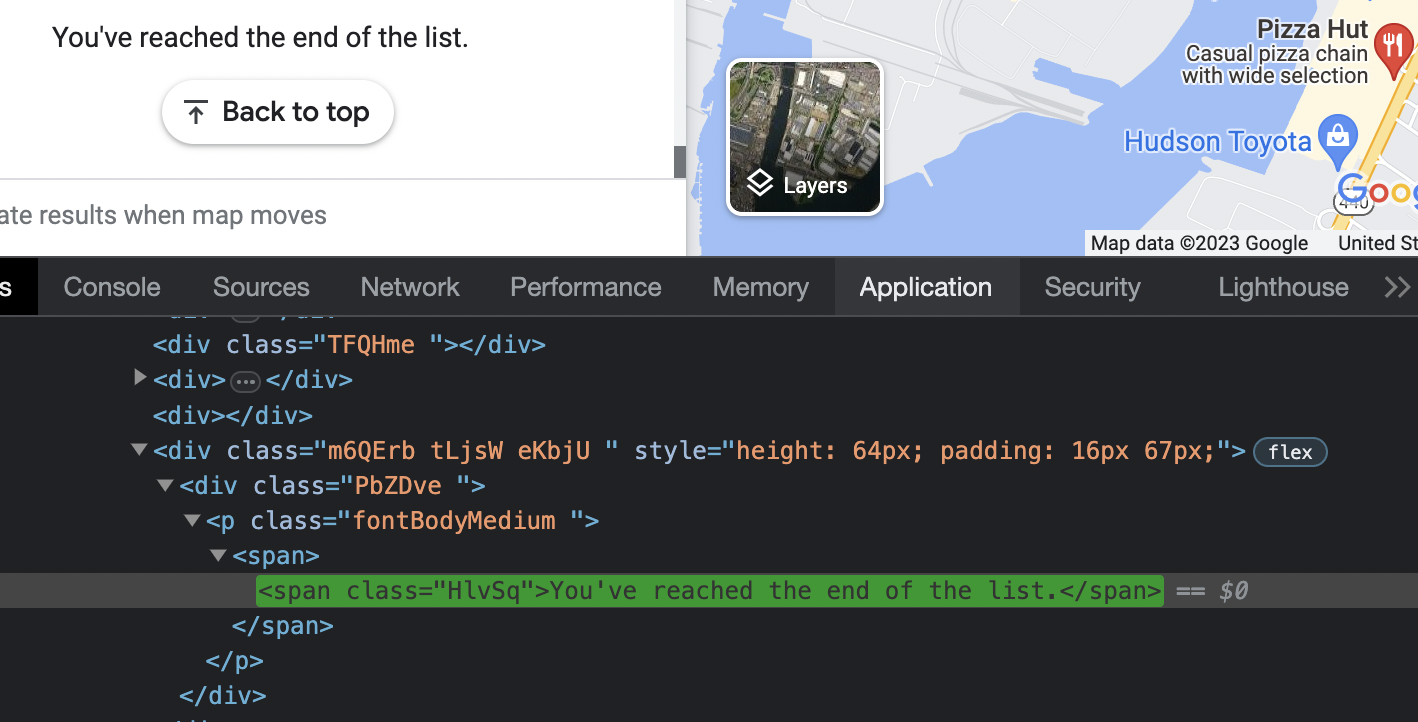

Doing some research (i.e. inspecting the HTML code), I made the following observations:

The name of a restaurant location can be found in the following tags:

<a class="hfpxzc" aria-label=The address of a restaurant location be found in the following tags:

<div class="W4Efsd">

In the end, I would be looking for a result like this:

name address longitude latitude

1 pizza land 123 fake st, city, state, zip code 45.212 -75.123

Can someone please show me how to proceed?

Note: Seeing as more people likely use Selenium through Python - I am more than happy to learn how to solve this problem in Python and then try to convert the answer into R code.r

Thanks!

References:

- https://medium.com/python-point/python-crawling-restaurant-data-ab395d121247

- https://www.youtube.com/watch?v=GnpJujF9dBw

- https://www.youtube.com/watch?v=U1BrIPmhx10

UPDATE: Some further progress with addresses

remDr$navigate("https://www.google.com/maps")

Sys.sleep(5)

search_box <- remDr$findElement(using = 'css selector', "#searchboxinput")

search_box$sendKeysToElement(list("40.7256456,-74.0909442", key = "enter"))

Sys.sleep(5)

search_box <- remDr$findElement(using = 'css selector', "#searchboxinput")

search_box$clearElement()

search_box$sendKeysToElement(list("pizza", key = "enter"))

Sys.sleep(5)

address_elements <- remDr$findElements(using = 'css selector', '.W4Efsd')

addresses <- lapply(address_elements, function(x) x$getElementText()[[1]])

result <- data.frame(name = unlist(names), address = unlist(addresses))