I'm running a simple test of OpenCV camera pose estimation. Using a photo and a scaled-up (zoomed-in) copy of the same photo, I detect features, calculate the essential matrix, and recover the camera poses:

```cpp
Mat inliers;
Mat E = findEssentialMat(queryPoints, trainPoints, cameraMatrix1, cameraMatrix2,
                         FM_RANSAC, 0.9, MAX_PIXEL_OFFSET, inliers);
size_t inliersCount =
    recoverPose(E, queryGoodPoints, trainGoodPoints, cameraMatrix1, cameraMatrix2, R, T, inliers);
```

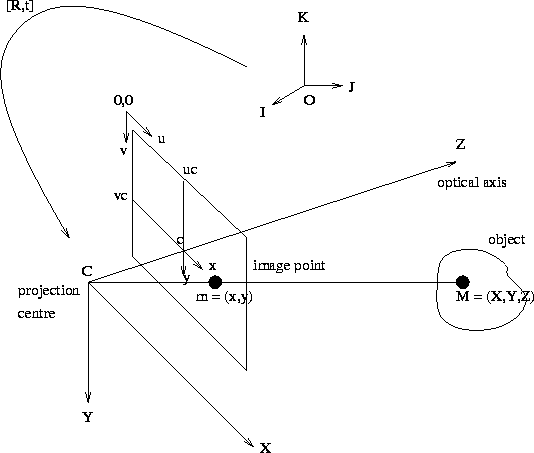

So when I pass the original image as the first one and the zoomed image as the second one, I get a translation T close to [0; 0; -1]. However, the second (zoomed) camera is virtually closer to the object than the first one, so if the Z-axis points from the image plane into the scene, the second camera should have a positive offset along Z. The result I get implies that the Z-axis points from the image plane towards the camera, which, together with the other axes (X pointing right, Y pointing down), forms a left-handed coordinate system. Is that true? Why does this result differ from the coordinate system illustrated here?

…t and R, I should: 1) invert the homogeneous E, then 2) recoverPose()? – Heathenish