Not enough space in comments to provide feedback. Posting an incomplete answer as I need to leave for the day.

You are going to have trouble accomplishing what you are asking for. Based on your comments on Gowdhaman008's answer: the value of a variable is not visible outside of a Data Flow until after the finalizer event fires (OnPostExecute, I think). You can cheat and get that data out by using a script task to count rows as they pass through and fire off events, custom or predefined, to report package progress. In fact, just capture the OnPipelineRowsSent event. That will record how many rows are passing through a particular juncture and the time surrounding it (see the SSIS Performance Framework). Plus, you don't have to do any custom work or maintenance on your stuff. Out of the box functionality is a definite win.

That said, you aren't really going to know how many rows are coming out of a source until it's finished. That sounds stupid and I completely agree, but it's the truth. Imagine a simple case: an OLE DB Source that is going to send 1,000,000 rows straight into an OLE DB Destination. Most likely, not all 1M rows are going to start in the pipeline; maybe only 10k will be in the first buffer. That buffer is pushed to the destination and now you know 10k rows out of 10k rows have been processed. Lather, rinse, repeat a few times and then, in the current buffer, a row has a NULL where it shouldn't. Boom goes the dynamite and the process fails. We have had 60k rows flow into the pipeline and that's all we know about because of the failure.
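To make that scenario concrete, here is a toy simulation in Python. It is not SSIS code, just a sketch of buffer-at-a-time processing; `source`, `read_in_buffers` and `run_pipeline` are made-up names, and the 10k buffer size is the illustrative figure from above, not a real SSIS default.

```python
from typing import Iterable, Iterator, List, Optional, Tuple


def source(total: int, bad_at: Optional[int] = None) -> Iterator[Optional[int]]:
    """Hypothetical source: yields `total` rows lazily, with a NULL (None) at `bad_at`."""
    for i in range(total):
        yield None if i == bad_at else i


def read_in_buffers(rows: Iterable, buffer_size: int) -> Iterator[List]:
    """Group rows into fixed-size buffers, the way a pipeline hands them downstream."""
    buffer: List = []
    for row in rows:
        buffer.append(row)
        if len(buffer) == buffer_size:
            yield buffer
            buffer = []
    if buffer:
        yield buffer


def run_pipeline(rows: Iterable, buffer_size: int) -> Tuple[int, int, bool]:
    """Return (rows seen, rows written, succeeded).

    The pipeline only learns about rows one buffer at a time, so on failure
    the "seen" count says nothing about how many rows the source held.
    """
    seen = written = 0
    for buffer in read_in_buffers(rows, buffer_size):
        seen += len(buffer)
        if any(row is None for row in buffer):
            return seen, written, False  # destination rejects the whole buffer
        written += len(buffer)
    return seen, written, True


if __name__ == "__main__":
    # 1M-row source with a bad row in the sixth buffer.
    print(run_pipeline(source(1_000_000, bad_at=55_000), 10_000))
```

The source is a lazy generator, so when the sixth buffer fails the pipeline has seen 60k rows and written 50k, and nothing in it knows another 940k rows were still waiting.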

The only way to ensure we have accounted for all the source rows is to put an asynchronous transformation into the pipeline to block all downstream components until all the data has arrived. This will obliterate any chance you have of getting good performance out of your packages. You'd still be subject to the aforementioned restrictions on updating variables, but your FireXEvent message would accurately describe how many rows could have been processed in the queue.

If you started an explicit transaction, you could do something ugly like an Execute SQL Task just to get the expected count, write that to a variable and then log rows processed, but then you're double querying your data and you increase the likelihood of blocking on the source system because of the double pump. And that's only going to work for something database-like. The same concept would apply for a flat file, except now you'd need a script task to read all the rows first.

Where this gets uglier is for a slow-starting data source, like a web service. The default buffer size might cause the entire package to run much longer than it needs to, simply because we are waiting on the data to arrive (see "Slow starts").

What I'd do

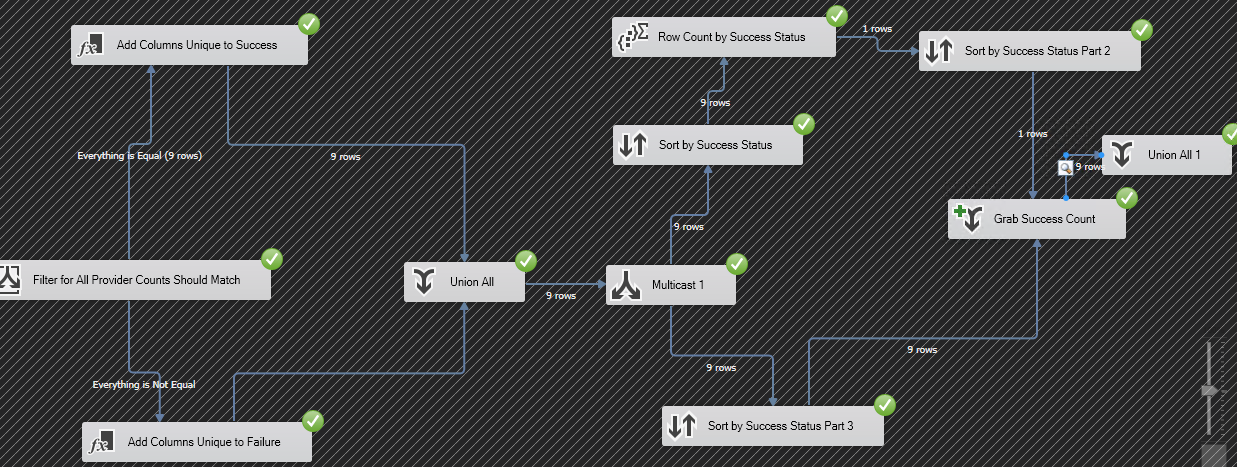

I'd record my starting and error counts (and more) using the Row Count transformation. This will help you account for all the data that came in and where it went. I'd then turn on the OnPipelineRowsSent event to allow me to query the log and see how many rows are flowing through it RIGHT NOW.
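Once those events are in the log, getting a running total is mostly string parsing. A rough sketch in Python, assuming the message text is colon-delimited in the order text, blank, PathID, PathName, ComponentID, ComponentName, InputID, InputName, RowsSent; check that against your own log before trusting it. The function name and the sample messages below are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def rows_sent_by_path(messages: Iterable[str]) -> Dict[Tuple[str, str], int]:
    """Sum rows sent per (path name, receiving component).

    Assumes the colon-delimited layout described above; path or component
    names that themselves contain a colon would break this naive split.
    """
    totals: Dict[Tuple[str, str], int] = defaultdict(int)
    for message in messages:
        fields = [field.strip() for field in message.split(":")]
        if len(fields) != 9 or not fields[-1].isdigit():
            continue  # not in the assumed layout, skip it
        path_name, component_name = fields[3], fields[5]
        totals[(path_name, component_name)] += int(fields[-1])
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical log messages, one per buffer handed to the destination.
    sample = [
        "Rows were provided to a data flow component as input. :  : 1185 : "
        "OLE DB Source Output : 1180 : OLE DB Destination : 1181 : "
        "OLE DB Destination Input : 10000",
        "Rows were provided to a data flow component as input. :  : 1185 : "
        "OLE DB Source Output : 1180 : OLE DB Destination : 1181 : "
        "OLE DB Destination Input : 10000",
    ]
    for key, total in rows_sent_by_path(sample).items():
        print(key, total)
```

Run it against whatever rows you pull from the log for the current execution and you get rows so far per path, which is about as close to "RIGHT NOW" as the pipeline will let you get.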
