ARKit's ARFaceTrackingConfiguration places an ARFaceAnchor, which carries information about the position and orientation of the face, into the scene. Among other things, this anchor has the lookAtPoint property that I'm interested in. I know that this vector is relative to the face. How can I draw a point on the screen at this position, that is, how can I convert this point's coordinates?

The .lookAtPoint property only estimates gaze direction

Apple's documentation describes .lookAtPoint as a position in face coordinate space that estimates only the direction of the face's gaze. It's a vector of three scalar values, and it's get-only, not settable:

var lookAtPoint: SIMD3<Float> { get }
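Because only the direction of .lookAtPoint is meaningful, a reasonable first step is to normalize it and, if useful, turn it into horizontal and vertical angles. This is a minimal sketch: the `GazeAngles` type and `gazeAngles(from:)` function are hypothetical helpers, and the axis comments assume ARKit's face coordinate space (+X toward the viewer's right, +Y up, +Z out of the face toward the viewer).

```swift
import Foundation
import simd

/// Hypothetical result type: unit gaze direction plus angles in radians.
struct GazeAngles {
    let direction: SIMD3<Float>
    let yaw: Float    // positive when the gaze leans toward +X
    let pitch: Float  // positive when the gaze leans toward +Y
}

/// Hypothetical helper: normalizes a face-space lookAtPoint and derives yaw/pitch.
/// Returns nil for a (near) zero vector, which carries no direction.
func gazeAngles(from lookAtPoint: SIMD3<Float>) -> GazeAngles? {
    let length = simd_length(lookAtPoint)
    guard length > Float.ulpOfOne else { return nil }
    let dir = lookAtPoint / length                       // keep direction only
    let yaw = atan2(dir.x, dir.z)                        // rotation around +Y
    let pitch = atan2(dir.y, (dir.x * dir.x + dir.z * dir.z).squareRoot())
    return GazeAngles(direction: dir, yaw: yaw, pitch: pitch)
}
```

In a delegate callback you would pass `faceAnchor.lookAtPoint` to it.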

This value is closely related to two other get-only instance properties, .rightEyeTransform and .leftEyeTransform, which give the position and orientation of each eye:

var rightEyeTransform: simd_float4x4 { get }

var leftEyeTransform: simd_float4x4 { get }
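Both eye transforms are ordinary 4x4 matrices, so an eye's position and orientation axes can be read straight from their columns. A minimal sketch with a hypothetical `eyePose(from:)` helper; it assumes simd's column-major layout (translation in `columns.3`) and that the eye's local +Z axis points along its gaze, which is worth checking against your own debug visualization.

```swift
import simd

/// Hypothetical helper: splits a rigid transform (e.g. ARFaceAnchor.leftEyeTransform)
/// into its translation and its normalized local +Z axis.
func eyePose(from transform: simd_float4x4) -> (position: SIMD3<Float>, forward: SIMD3<Float>) {
    let c3 = transform.columns.3  // translation column
    let c2 = transform.columns.2  // local +Z axis
    let position = SIMD3<Float>(c3.x, c3.y, c3.z)
    // Assumption: the eye's +Z axis is its gaze direction.
    let forward = simd_normalize(SIMD3<Float>(c2.x, c2.y, c2.z))
    return (position, forward)
}
```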

Here's a hypothetical example of how you could use this instance property:

func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
    // Assumes the node's geometry was set to an ARSCNFaceGeometry in renderer(_:nodeFor:)
    if let faceAnchor = anchor as? ARFaceAnchor,
       let faceGeometry = node.geometry as? ARSCNFaceGeometry {
        if faceAnchor.lookAtPoint.x >= 0 {   // Looking toward +X
            faceGeometry.firstMaterial?.diffuse.contents = UIImage(named: "redTexture.png")
        } else {                             // Looking toward -X
            faceGeometry.firstMaterial?.diffuse.contents = UIImage(named: "cyanTexture.png")
        }
        faceGeometry.update(from: faceAnchor.geometry)
        facialExpression(anchor: faceAnchor) // helper defined elsewhere in the view controller
        // UIKit must be updated on the main thread
        DispatchQueue.main.async {
            self.label.text = self.textBoard
        }
    }
}
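The `x >= 0` test above switches textures on every small fluctuation around looking straight ahead. One possible refinement, sketched here with a hypothetical `horizontalGaze(_:deadZone:)` function and an arbitrary 0.1 threshold, normalizes the vector first and treats a narrow band around the center as neutral.

```swift
import simd

/// Hypothetical classification of the horizontal gaze component.
enum HorizontalGaze { case towardPositiveX, center, towardNegativeX }

/// `deadZone` is an illustrative threshold on the normalized X component.
func horizontalGaze(_ lookAtPoint: SIMD3<Float>, deadZone: Float = 0.1) -> HorizontalGaze {
    let length = simd_length(lookAtPoint)
    guard length > 0 else { return .center }  // no direction available
    let x = lookAtPoint.x / length            // scale-independent X component
    if x > deadZone { return .towardPositiveX }
    if x < -deadZone { return .towardNegativeX }
    return .center
}
```

Inside the renderer callback you could then switch on `horizontalGaze(faceAnchor.lookAtPoint)` and pick a texture per case.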

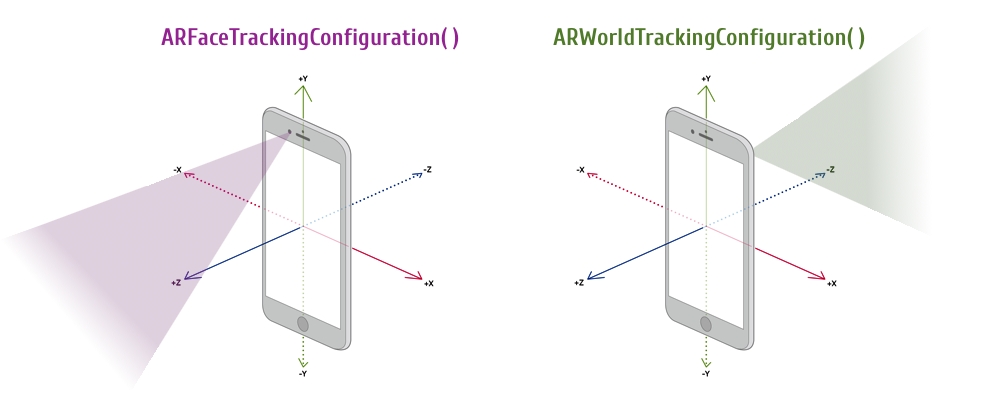

And here's an image showing axis directions for ARFaceTrackingConfiguration():

Answering your question

You can't set this point's coordinates directly, because .lookAtPoint is a get-only property. Also, it only encodes an XYZ direction (orientation), not an XYZ translation.

So if you need both translation and rotation, use the .rightEyeTransform and .leftEyeTransform instance properties instead.
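Assuming, as in the usual pattern of assigning them to child nodes of the face node, that the eye transforms are relative to the face anchor, an eye's pose in world space is the anchor's transform composed with the eye transform. A minimal sketch; `eyeWorldTransform(faceTransform:eyeTransform:)` is a hypothetical helper taking `faceAnchor.transform` and `faceAnchor.leftEyeTransform` (or `rightEyeTransform`).

```swift
import simd

/// Hypothetical helper: converts an eye transform from face-anchor space to world space.
/// simd uses column vectors, so the parent (face) transform goes on the left.
func eyeWorldTransform(faceTransform: simd_float4x4,
                       eyeTransform: simd_float4x4) -> simd_float4x4 {
    return faceTransform * eyeTransform
}
```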

Projecting a point

There are two methods for point projection in SceneKit/ARKit. Use the following instance method to project a point onto the 2D view (on a sceneView instance):

func projectPoint(_ point: SCNVector3) -> SCNVector3

For example:

let sceneView = ARSCNView()

sceneView.projectPoint(myPoint)
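Since .lookAtPoint lives in face coordinate space while `projectPoint(_:)` expects world coordinates, the point has to be transformed by the face anchor's world transform first. A minimal sketch with a hypothetical `worldPoint(fromFacePoint:faceTransform:)` helper, where `faceTransform` stands for `faceAnchor.transform`:

```swift
import simd

/// Hypothetical helper: maps a face-space point into world space.
func worldPoint(fromFacePoint p: SIMD3<Float>, faceTransform: simd_float4x4) -> SIMD3<Float> {
    // w = 1 so the anchor's translation is applied, not just its rotation
    let h = faceTransform * SIMD4<Float>(p.x, p.y, p.z, 1)
    return SIMD3<Float>(h.x, h.y, h.z)
}
```

In an ARSCNView you could then call `sceneView.projectPoint(SCNVector3(world.x, world.y, world.z))` and use the `x` and `y` of the result as view coordinates for your dot; `z` is a normalized depth between the near and far clipping planes. The result marks a point along the gaze, not where the eyes actually focus, because the vector's length isn't a reliable distance.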

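ARKit also provides the following ARCamera instance method for projecting a world-space point onto the 2D view:

func projectPoint(_ point: simd_float3,
                  orientation: UIInterfaceOrientation,
                  viewportSize: CGSize) -> CGPoint

For example (you don't create an ARCamera yourself; take it from the session's current frame):

if let camera = sceneView.session.currentFrame?.camera {
    // Position of myPoint in the view's coordinate space
    let screenPoint = camera.projectPoint(myPoint, orientation: myOrientation, viewportSize: vpSize)
}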

This method projects a point from the 3D world coordinate system detected by ARKit into the 2D coordinate system of the view that renders the scene.
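Because .lookAtPoint gives a direction rather than a reliable distance, one option before calling `ARCamera.projectPoint` is to build a world-space target a chosen distance along the gaze, starting at the face anchor's origin. This is a sketch: `gazeTarget(lookAtPoint:faceTransform:distance:)` is hypothetical, using the anchor origin as the gaze origin is an approximation, and `distance` (in meters) is a free parameter you pick.

```swift
import simd

/// Hypothetical helper: world-space point `distance` meters along the gaze.
func gazeTarget(lookAtPoint: SIMD3<Float>, faceTransform: simd_float4x4,
                distance: Float) -> SIMD3<Float>? {
    guard simd_length(lookAtPoint) > 0 else { return nil }  // no direction
    let localDir = simd_normalize(lookAtPoint)
    // w = 0 rotates the direction without translating it
    let d4 = faceTransform * SIMD4<Float>(localDir.x, localDir.y, localDir.z, 0)
    let worldDir = simd_normalize(SIMD3<Float>(d4.x, d4.y, d4.z))
    let o4 = faceTransform.columns.3  // face anchor origin in world space
    let origin = SIMD3<Float>(o4.x, o4.y, o4.z)
    return origin + worldDir * distance
}
```

The result can then be passed to `frame.camera.projectPoint(target, orientation: .portrait, viewportSize: sceneView.bounds.size)`.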

Unprojecting a point

There's also SceneKit's method for unprojecting a point:

func unprojectPoint(_ point: SCNVector3) -> SCNVector3

For more details read this post.
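A common way to use SceneKit's `unprojectPoint(_:)` is to unproject the same screen point twice: a z of 0 maps to the near clipping plane and a z of 1 to the far plane, so the two results define a ray through that screen point. A minimal sketch of building that ray, with a hypothetical `ScreenRay` type and `screenRay(near:far:)` function:

```swift
import simd

/// Hypothetical ray type: origin plus unit direction.
struct ScreenRay {
    let origin: SIMD3<Float>
    let direction: SIMD3<Float>
}

/// Builds a ray from the unprojected near-plane and far-plane points.
func screenRay(near: SIMD3<Float>, far: SIMD3<Float>) -> ScreenRay? {
    let delta = far - near
    guard simd_length(delta) > 0 else { return nil }  // degenerate input
    return ScreenRay(origin: near, direction: simd_normalize(delta))
}
```

On iOS you could feed it `sceneView.unprojectPoint(SCNVector3(x, y, 0))` and `sceneView.unprojectPoint(SCNVector3(x, y, 1))`, converted component-wise to `SIMD3<Float>`.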

...and ARKit's method for unprojecting a point:

@nonobjc func unprojectPoint(_ point: CGPoint,
                             ontoPlane planeTransform: simd_float4x4,
                             orientation: UIInterfaceOrientation,
                             viewportSize: CGSize) -> simd_float3?
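Geometrically, unprojecting onto a plane comes down to a ray-plane intersection. The sketch below shows that geometry with a hypothetical `intersect(rayOrigin:rayDirection:planePoint:planeNormal:)` function; it is not ARKit's implementation, but the same idea can intersect a gaze ray (for example one built from an eye's world transform) with a plane of your choosing.

```swift
import simd

/// Hypothetical helper: intersects a ray with the plane through `planePoint`
/// whose normal is `planeNormal`. Returns nil if the ray is parallel to the
/// plane or the hit lies behind the ray origin.
func intersect(rayOrigin: SIMD3<Float>, rayDirection: SIMD3<Float>,
               planePoint: SIMD3<Float>, planeNormal: SIMD3<Float>) -> SIMD3<Float>? {
    let denom = simd_dot(planeNormal, rayDirection)
    guard abs(denom) > 1e-6 else { return nil }             // parallel
    let t = simd_dot(planeNormal, planePoint - rayOrigin) / denom
    guard t >= 0 else { return nil }                        // behind origin
    return rayOrigin + t * rayDirection
}
```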

